Large language models (LLMs) have transformed natural language processing, making major strides in text generation, summarization, and translation. Although they excel at language tasks, they struggle with complex, multi-step reasoning tasks that require careful progression through each step. Researchers have been exploring structured frameworks that enhance these models’ reasoning abilities, moving beyond conventional prompt-based methods.

A significant challenge in advancing LLMs’ capabilities lies in enabling these models to break down and work through intricate tasks that involve multiple interconnected steps. Traditional language models often overlook critical subtasks within a complex problem, leading to inaccurate or incomplete outcomes. This problem is particularly evident in tasks that demand sequential decision-making or the synthesis of several pieces of information. Researchers aim to address this by building systems that decompose complex tasks into simpler, more manageable parts, so that models can handle sophisticated tasks more reliably.

Several methods have been proposed to address these challenges, each with its own approach. Chain-of-thought (CoT) prompting has models reason sequentially, using prompts that guide step-by-step logic. However, CoT often depends on manual prompt engineering and struggles with tasks outside its training domain. Building on this, approaches such as Tree of Thoughts (ToT) and Graph of Thoughts (GoT) organize problem-solving paths into structured hierarchies, with each path representing a potential solution route. Despite these advances, these approaches can become overly intricate for certain problem types, adding unnecessary complexity where models already perform best with direct prompts.
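To make the contrast concrete, here is a minimal Python sketch of how a few-shot CoT prompt is typically assembled. The function `build_cot_prompt` and the exemplar are hypothetical illustrations, not taken from the paper; the point is that the worked reasoning in each exemplar has to be written by hand, which is the manual prompt engineering that CoT relies on.

```python
from typing import List, Tuple


def build_cot_prompt(question: str, exemplars: List[Tuple[str, str, str]]) -> str:
    """Assemble a few-shot chain-of-thought prompt (hypothetical helper).

    Each exemplar is (question, hand-written reasoning, final answer).
    """
    blocks = [
        f"Q: {q}\nA: {reasoning} The answer is {answer}."
        for q, reasoning, answer in exemplars
    ]
    # Leave the target question open so the model continues with its own step-by-step reasoning.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Illustrative exemplar written by hand for this sketch.
EXEMPLARS = [
    (
        'Take the last letters of the words in "Elon Musk" and concatenate them.',
        'The last letter of "Elon" is "n". The last letter of "Musk" is "k". '
        'Concatenating them gives "nk".',
        "nk",
    )
]

prompt = build_cot_prompt(
    'Take the last letters of the words in "Larry Page" and concatenate them.', EXEMPLARS
)
print(prompt)
```

Every new task family needs its own carefully written exemplars, which is one reason CoT prompts transfer poorly outside the domain they were written for.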

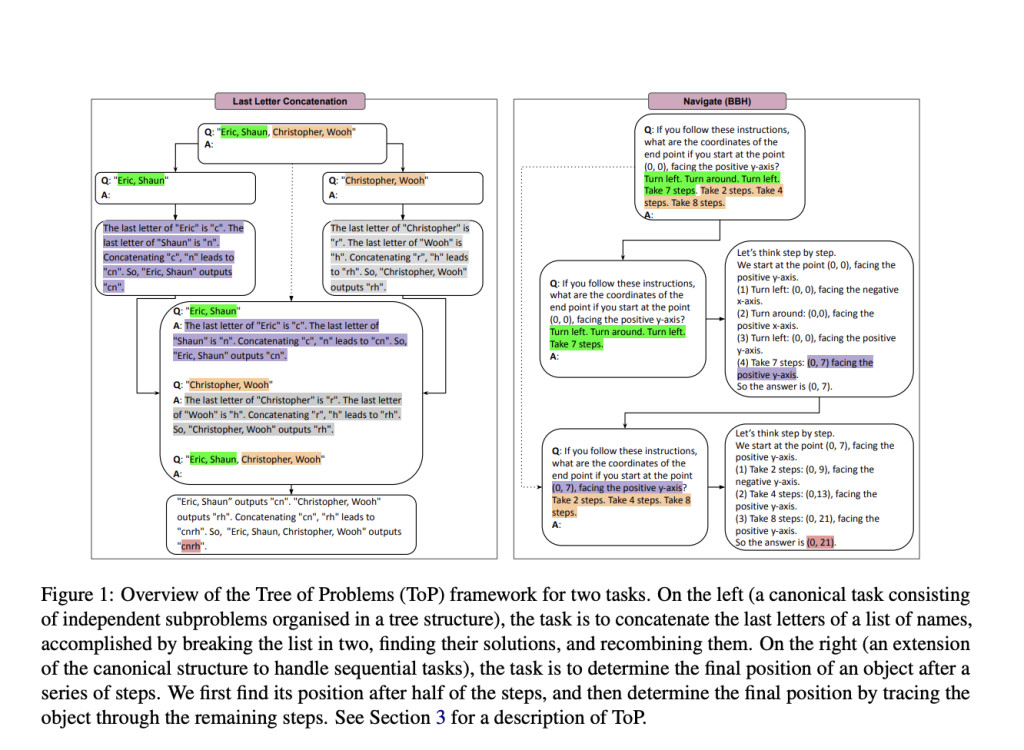

Researchers from Inria in Paris, France, introduced an innovative framework known as the Tree of Problems (ToP) to overcome these limitations. This method provides a simpler yet effective structure for problem decomposition, focusing on problems that can be divided into analogous subtasks. Unlike the more complex ToT or GoT frameworks, ToP organizes tasks into a hierarchical structure where each node represents a subproblem directly related to the original task. This allows LLMs to solve smaller, similar instances of a larger problem before combining these solutions into a cohesive answer, ultimately reducing computational load and enhancing accuracy.

The ToP framework systematically breaks down a problem into a tree of simpler subtasks. The process starts with a decomposer that divides the primary task into related subtasks and organizes them within a tree where each node corresponds to a subproblem. A solver, often an LLM configured for task-specific objectives, addresses the atomic problems at the tree’s leaves. Sibling subproblems are solved independently of one another, and their solutions are merged bottom-up, with the final solution forming at the tree’s root. Because the LLM focuses on one problem component at a time, the reasoning process stays simple and errors become less likely.
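The decompose, solve, and merge steps can be sketched in Python as a short recursion. This is an illustrative sketch under stated assumptions, not the authors’ implementation: `decompose`, `tree_of_problems`, `stub_solver`, and `stub_merger` are hypothetical names, the task is list sorting, and Python’s `sorted` and `heapq.merge` stand in for the LLM calls a real solver and merger would make. The `depth` and `breadth` parameters are assumed knobs that set how many levels the tree has and how many children each node gets.

```python
from heapq import merge
from typing import Callable, List, TypeVar

S = TypeVar("S")


def decompose(items: List[int], breadth: int) -> List[List[int]]:
    """Decomposer: split one instance into up to `breadth` smaller instances of the same task."""
    size = -(-len(items) // breadth)  # ceiling division so no element is dropped
    return [items[i:i + size] for i in range(0, len(items), size)]


def tree_of_problems(
    items: List[int],
    depth: int,
    breadth: int,
    solve: Callable[[List[int]], S],
    merge_solutions: Callable[[List[S]], S],
) -> S:
    """Recursively build the tree, solve the leaves, and merge solutions bottom-up."""
    if depth == 0 or len(items) <= 1:
        return solve(items)  # leaf: an atomic problem handed to the solver
    children = decompose(items, breadth)
    # Sibling subproblems do not depend on each other, so they could be solved in parallel.
    child_solutions = [
        tree_of_problems(child, depth - 1, breadth, solve, merge_solutions)
        for child in children
    ]
    return merge_solutions(child_solutions)  # internal node: combine the children's answers


calls = {"solve": 0, "merge": 0}


def stub_solver(items: List[int]) -> List[int]:
    calls["solve"] += 1
    return sorted(items)  # stand-in for prompting an LLM to sort a short list


def stub_merger(parts: List[List[int]]) -> List[int]:
    calls["merge"] += 1
    return list(merge(*parts))  # stand-in for prompting an LLM to merge sorted lists


result = tree_of_problems([5, 3, 8, 1, 9, 2, 7, 4], depth=2, breadth=2,
                          solve=stub_solver, merge_solutions=stub_merger)
print(result, calls)  # [1, 2, 3, 4, 5, 7, 8, 9] {'solve': 4, 'merge': 3}
```

With `depth=2` and `breadth=2`, the tree has four leaves and three internal nodes, so the stubs run seven times in total; replacing them with functions that prompt a model would turn each of those into one LLM call.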

Empirical evaluations demonstrated the efficiency and performance of the ToP approach, particularly on structured tasks. For example, ToP achieved a 40% accuracy improvement over GoT on sorting, outperforming CoT and ToT by considerable margins. On set intersection, ToP improved accuracy by 19% over CoT, and on keyword counting it achieved a 5% improvement, demonstrating its effectiveness across problem domains. The framework also excelled at Last Letter Concatenation, where it recorded higher accuracy than CoT on lists of 4, 8, and 16 names. These results point to ToP’s scalability and adaptability across problem types, making it a promising way to improve LLM reasoning in complex settings.
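What these four benchmarks share is that each can be split into smaller instances of the same task whose answers combine in a simple way. The sketch below, assuming deterministic Python functions in place of the LLM solver and an even two-way split, checks that property for each task: solving the two halves and merging gives the same answer as solving the whole instance. The keyword set, the fixed second set for set intersection, and every function name are hypothetical choices made for this illustration.

```python
from collections import Counter
from heapq import merge
from typing import Callable, Dict, Tuple

KEYWORDS = {"france", "italy", "spain"}  # hypothetical keyword set for the counting task
OTHER_SET = {2, 3, 5, 7, 11}             # hypothetical fixed second set for set intersection

# Each entry: (direct solver standing in for an LLM call, rule that merges sub-solutions).
TASKS: Dict[str, Tuple[Callable, Callable]] = {
    "sorting": (sorted, lambda parts: list(merge(*parts))),
    "set_intersection": (
        lambda xs: sorted(set(xs) & OTHER_SET),
        lambda parts: sorted(set().union(*parts)),  # union of partial intersections
    ),
    "keyword_counting": (
        lambda words: Counter(w for w in words if w in KEYWORDS),
        lambda parts: sum(parts, Counter()),  # add up per-chunk counts
    ),
    "last_letter_concatenation": (
        lambda names: "".join(name[-1] for name in names),
        lambda parts: "".join(parts),  # concatenate in the original order
    ),
}


def merge_matches_direct(task: str, instance: list) -> bool:
    """Split the instance in two, solve each half, merge, and compare with solving it whole."""
    solve, merge_parts = TASKS[task]
    mid = len(instance) // 2
    halves = [instance[:mid], instance[mid:]]
    return merge_parts([solve(half) for half in halves]) == solve(instance)


examples = {
    "sorting": [9, 4, 7, 1, 8, 2],
    "set_intersection": [1, 2, 3, 4, 5, 6, 7, 8],
    "keyword_counting": "france spain germany france italy".split(),
    "last_letter_concatenation": ["Alice", "Bob", "Carol", "Dave"],
}
for task, instance in examples.items():
    print(task, merge_matches_direct(task, instance))  # each line ends with True
```

Only the merge rule differs from task to task, which is why a single tree-shaped procedure can cover all of them.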

Further analysis revealed ToP’s advantages over Least-to-Most (L2M) prompting, another structured approach that processes a task step by step. In tests with various list lengths, ToP consistently outperformed L2M while requiring fewer LLM calls. For lists of 4 and 8 names, ToP achieved comparable or superior accuracy with half as many calls, highlighting its efficiency. On tasks requiring sequential processing, such as coin flipping and object tracking, ToP also proved robust, handling increased complexity with only a minimal drop in performance, which shows its adaptability to both canonical and sequential tasks.
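For sequential tasks, subproblems cannot be solved side by side, because each step depends on the state left by the previous one. A minimal sketch for coin flipping, assuming that ToP handles this by chaining consecutive chunks of the input so that each chunk’s output state becomes the next chunk’s input (the paper’s exact procedure may differ), is shown here. `solve_chunk` is a deterministic stand-in for an LLM call and `chunk_size` is a hypothetical parameter; the call counter only illustrates how the number of model calls depends on how the problem is split, not the figures reported in the paper.

```python
from typing import Callable, List, Sequence, Tuple


def solve_chunk(heads_up: bool, flips: Sequence[bool]) -> bool:
    """Stand-in for one LLM call: apply a short run of actions (True = that person flips)."""
    for flipped in flips:
        if flipped:
            heads_up = not heads_up
    return heads_up


def sequential_chain(
    heads_up: bool,
    flips: List[bool],
    chunk_size: int,
    solver: Callable[[bool, Sequence[bool]], bool] = solve_chunk,
) -> Tuple[bool, int]:
    """Solve consecutive chunks in order, feeding each chunk's final state into the next."""
    calls = 0
    for start in range(0, len(flips), chunk_size):
        heads_up = solver(heads_up, flips[start:start + chunk_size])
        calls += 1
    return heads_up, calls


# The coin starts heads up; 8 people act, 5 of whom flip it.
actions = [True, False, True, True, False, True, False, True]
final_state, n_calls = sequential_chain(True, actions, chunk_size=4)
print(final_state, n_calls)  # False 2
```

Larger chunks mean fewer calls but longer subproblems per call; smaller chunks keep each step easy at the cost of more calls.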

The Tree of Problems framework represents a promising direction for large language model development by addressing key limitations in multi-step reasoning. By breaking complicated tasks into manageable subproblems and organizing them in a simple, effective tree structure, ToP improves both accuracy and computational efficiency. In the reported experiments, the approach outperforms established prompting methods, and it offers a scalable framework for applying LLMs to more complex reasoning tasks in natural language processing. Through innovations like ToP, LLMs are poised to become more reliable tools for diverse, complex tasks, marking a significant step forward in the field.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post This AI Paper by Inria Introduces the Tree of Problems: A Simple Yet Effective Framework for Complex Reasoning in Language Models appeared first on MarkTechPost.
