Customer Relationship Management (CRM) has become integral to business operations as the center for managing customer interactions, data, and processes. Integrating advanced AI into CRM can transform these systems by automating routine processes, delivering personalized experiences, and streamlining customer service efforts. As organizations increasingly adopt AI-driven approaches, the need for intelligent agents capable of performing complex CRM tasks has grown. Large language models (LLMs) are at the forefront of this movement, potentially enhancing CRM systems by automating complex decision-making and data management tasks. However, deploying these agents requires robust, realistic benchmarks to ensure they can handle the complexities typical of CRM environments, which include managing multifaceted data objects and following specific interaction protocols.

Existing benchmarks such as WorkArena, WorkBench, and Tau-Bench provide only elementary assessments of CRM agent performance. They primarily evaluate simple operations, such as data navigation and filtering, and do not capture the complex dependencies and dynamic interrelations typical of CRM data. For instance, they fall short in modeling relationships between objects, such as orders linked to customer accounts or cases spanning multiple touchpoints. This lack of complexity prevents organizations from understanding the full capabilities of LLM agents and creates a need for a more comprehensive evaluation framework. In short, the field lacks benchmarks that accurately reflect the intricate, interconnected tasks required in real CRM systems.

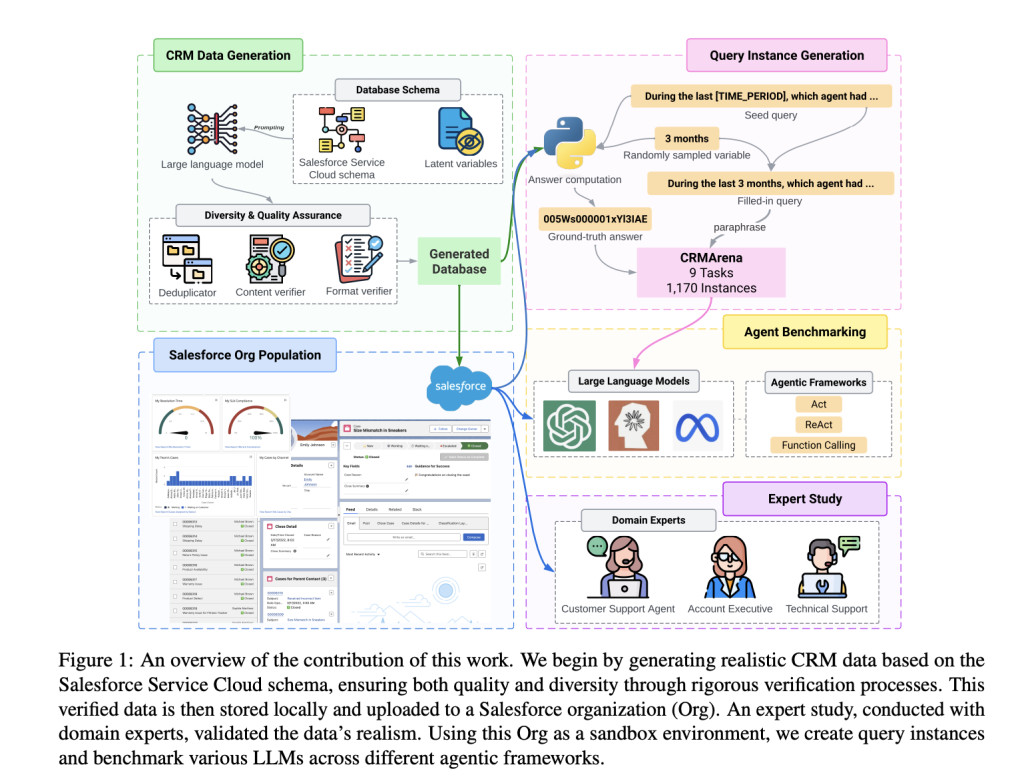

Salesforce’s AI Research team addressed this gap by introducing CRMArena, a sophisticated benchmark developed specifically to evaluate the capabilities of AI agents in CRM environments. Unlike previous tools, CRMArena simulates a real-world CRM system complete with complex data interconnections, enabling a robust evaluation of AI agents on professional CRM tasks. The development process involved collaboration with CRM domain experts who contributed to the design of nine realistic tasks based on three distinct personas: service agents, analysts, and managers. These tasks include essential CRM functions, such as monitoring agent performance, handling complex customer inquiries, and analyzing data trends to improve service. CRMArena includes 1,170 unique queries across these nine tasks, providing a comprehensive platform for testing CRM-specific scenarios.

The architecture of CRMArena is grounded in a CRM schema modeled after Salesforce’s Service Cloud. The data generation pipeline produces an interconnected dataset of 16 objects, such as accounts, orders, and cases, with complex dependencies that mirror real-world CRM environments. To enhance realism, CRMArena integrates latent variables replicating dynamic business conditions, such as seasonal buying trends and agent skill variations. This high level of interconnectivity, which involves an average of 1.31 dependencies per object, ensures that CRMArena represents CRM environments accurately, presenting agents with challenges similar to those they would face in professional settings. Additionally, CRMArena’s setup supports both UI and API access to CRM systems, allowing for direct interactions through API calls and realistic response handling.
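To make the "dependencies per object" figure concrete, here is a minimal sketch of how such a statistic could be computed from a schema graph, and how a generator might order objects so that parents exist before their dependents. The schema below is a hypothetical four-object toy: the object names echo the article's examples, but the edges are illustrative assumptions and not CRMArena's actual schema.

```python
from graphlib import TopologicalSorter
from typing import Dict, List

# Hypothetical toy schema: each object maps to the objects it depends on.
# These edges are illustrative only, not taken from CRMArena.
SCHEMA: Dict[str, List[str]] = {
    "Account": [],
    "Contact": ["Account"],
    "Order": ["Account"],
    "Case": ["Account", "Contact"],
}


def average_dependencies(schema: Dict[str, List[str]]) -> float:
    """Total number of dependency links divided by the number of objects."""
    if not schema:
        return 0.0
    total_links = sum(len(deps) for deps in schema.values())
    return total_links / len(schema)


def generation_order(schema: Dict[str, List[str]]) -> List[str]:
    """Order objects so every dependency is generated before its dependents."""
    return list(TopologicalSorter(schema).static_order())


avg = average_dependencies(SCHEMA)
order = generation_order(SCHEMA)
print(f"average dependencies per object: {avg:.2f}")
print("one valid generation order:", order)
```

If the reported average is taken over all 16 objects, 1.31 dependencies per object would correspond to roughly 21 dependency links in total (16 × 1.31 ≈ 21).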

Performance testing with CRMArena has revealed that current state-of-the-art LLM agents struggle with CRM tasks. Using the ReAct prompting framework, the highest-performing agent achieved only 38.2% task completion. When supplemented with specialized function-calling tools, performance improved to a completion rate of 54.4%, which still leaves a significant performance gap. The tasks evaluated included challenging functions such as Named Entity Disambiguation (NED), Policy Violation Identification (PVI), and Monthly Trend Analysis (MTA), all requiring agents to analyze and interpret complex data. The benchmark's realism was also checked with domain experts: 90% confirmed that the synthetic data environment felt authentic, and over 77% rated individual objects within the CRM system as “realistic” or “very realistic.” Taken together, these results reveal critical gaps in LLM agents’ ability to understand nuanced dependencies in CRM data, gaps that must be addressed before AI-driven CRM can be fully deployed.
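For readers unfamiliar with ReAct, the pattern interleaves a reasoning step ("thought"), a tool call ("action"), and the tool's result ("observation") until the agent submits an answer. The sketch below shows only the shape of that loop. Everything in it is a hypothetical stand-in: `scripted_policy` replaces a real LLM, `lookup_case` replaces a real CRM query, and the record in `CASES` is made up for illustration. It is not CRMArena's harness.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical toy store; a real agent would query a live CRM instance.
CASES: Dict[str, Dict[str, str]] = {
    "case-001": {"owner": "agent-7", "status": "Closed"},
}


def lookup_case(case_id: str) -> str:
    """Hypothetical tool: return a case record as text, or an error message."""
    record = CASES.get(case_id)
    return str(record) if record else f"error: no case {case_id}"


TOOLS: Dict[str, Callable[[str], str]] = {"lookup_case": lookup_case}

Step = Tuple[str, str, str]  # (thought, action name, action argument)


def scripted_policy(history: List[str]) -> Step:
    """Stand-in for an LLM: look up the case once, then submit an answer."""
    if not any(line.startswith("Observation:") for line in history):
        return ("I need the case record first.", "lookup_case", "case-001")
    return ("The record names the owner.", "submit", "agent-7")


def react_loop(
    question: str,
    policy: Callable[[List[str]], Step],
    max_steps: int = 5,
) -> Tuple[Optional[str], List[str]]:
    """Alternate thought/action/observation until 'submit' or the step limit."""
    history = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, arg = policy(history)
        history.append(f"Thought: {thought}")
        history.append(f"Action: {action}[{arg}]")
        if action == "submit":
            return arg, history
        tool = TOOLS.get(action)
        observation = tool(arg) if tool else f"error: unknown action {action}"
        history.append(f"Observation: {observation}")
    return None, history  # step budget exhausted without an answer


answer, trace = react_loop("Who owns case-001?", scripted_policy)
print("\n".join(trace))
print("Answer:", answer)
```

In a function-calling setup, the free-text `Action:` line would typically be replaced by a structured call against a declared tool signature, which is one plausible reason the article reports higher completion rates in that setting.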

CRMArena’s ability to deliver high-fidelity testing comes from its data generation pipeline and its two-tiered quality assurance process. The pipeline is designed to maintain diversity across data objects, using a mini-batch prompting approach that limits content duplication. The quality assurance process then applies format verification and content verification to ensure the consistency and accuracy of the generated data. On the query side, CRMArena includes a mix of answerable and non-answerable queries, with non-answerable queries making up 30% of the total. These test whether agents can recognize and handle questions that have no solution, mirroring real CRM environments where the requested information may not be available.
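The role of non-answerable queries is easiest to see in how an answer might be scored. The sketch below assumes a simple exact-match scheme in which a gold label of `None` marks a non-answerable query and the agent is correct only if it also returns `None`. The scoring rule, the function name `score`, and the sample data are all illustrative assumptions and not CRMArena's published metric.

```python
from typing import Dict, List, Optional


def normalize(answer: Optional[str]) -> Optional[str]:
    """Lowercase and trim an answer; None (no answer) passes through."""
    return None if answer is None else answer.strip().lower()


def score(
    predictions: List[Optional[str]],
    gold: List[Optional[str]],
) -> Dict[str, float]:
    """Exact-match accuracy overall and split by answerability.

    A gold value of None marks a non-answerable query: the prediction
    counts as correct only if the agent also declines (returns None).
    """
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")

    hits = [normalize(p) == normalize(g) for p, g in zip(predictions, gold)]
    answerable = [h for h, g in zip(hits, gold) if g is not None]
    unanswerable = [h for h, g in zip(hits, gold) if g is None]

    def rate(flags: List[bool]) -> float:
        return sum(flags) / len(flags) if flags else 0.0

    return {
        "overall": rate(hits),
        "answerable": rate(answerable),
        "non_answerable": rate(unanswerable),
    }


# Made-up sample: two answerable and two non-answerable queries.
gold_labels = ["agent-7", None, "May", None]
agent_output = ["Agent-7", "agent-2", "may", None]
result = score(agent_output, gold_labels)
print(result)
```

Reporting the two subsets separately makes it visible when an agent guesses an answer for a question it should have declined, which an overall accuracy figure alone would blur.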

Key Takeaways from the research on CRMArena include:

- CRM Task Coverage: CRMArena includes nine diverse CRM tasks built around three personas (service agents, analysts, and managers), covering 1,170 unique queries.

- Data Complexity: CRMArena involves 16 interconnected objects, averaging 1.31 dependencies per object, achieving realism in CRM modeling.

- Realism Validation: Over 90% of domain experts rated CRMArena’s test environment as realistic or very realistic, indicating the high validity of its synthetic data.

- Agent Performance: The best-performing LLM agent completed only 38.2% of tasks using ReAct prompting and 54.4% with function-calling tools, underscoring the limits of current AI capabilities.

- Non-Answerable Queries: About 30% of CRMArena’s queries are non-answerable, pushing agents to identify and appropriately handle incomplete information.

In conclusion, CRMArena offers a scalable and rigorous benchmark for evaluating AI agents in CRM environments. As the research demonstrates, there is a substantial gap between the current capabilities of AI agents and the performance standards that CRM systems require. CRMArena’s testing framework gives developers a practical tool for measuring that gap and for refining agents to close it.


The post Is Your LLM Agent Enterprise-Ready? Salesforce AI Research Introduces CRMArena: A Novel AI Benchmark Designed to Evaluate AI Agents on Realistic Tasks Grounded on Professional Work Environments appeared first on MarkTechPost.
