Current text-to-speech (TTS) systems, such as VALL-E and FastSpeech, still struggle with complex linguistic features, polyphonic expressions, and natural-sounding multilingual speech. These limitations are most evident with context-dependent polyphonic words, whose pronunciation changes with meaning, and with cross-lingual synthesis. Traditional TTS pipelines rely on grapheme-to-phoneme (G2P) conversion, which often handles phonetic complexity poorly across multiple languages and leads to inconsistent quality. As demand grows for more sophisticated voice cloning and multilingual AI, these problems hold back real-world applications such as conversational AI and accessibility tools.

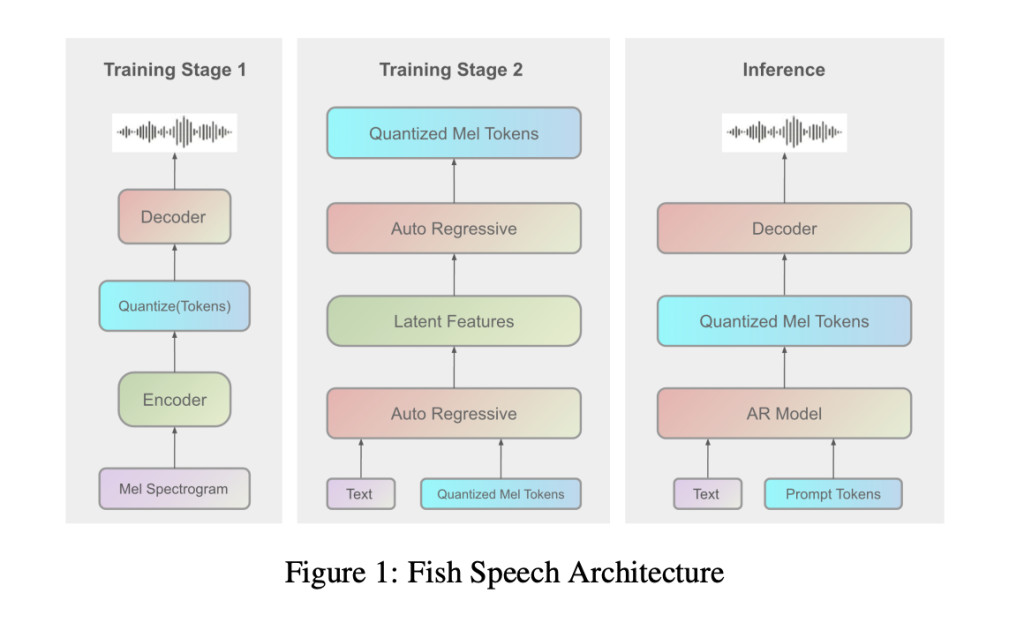

The Fish Audio Team has recently unveiled Fish Agent v0.1 3B, an innovative solution designed to address these challenges in TTS. Fish Agent is built on the Fish-Speech framework, leveraging a novel Dual Autoregressive (Dual-AR) architecture and an advanced vocoder called Firefly-GAN (FF-GAN). Unlike traditional TTS systems, Fish Agent v0.1 3B relies on Large Language Models (LLMs) to extract linguistic features directly from the text, bypassing the need for G2P conversion. This approach enhances the synthesis pipeline’s efficiency and multilingual capabilities, addressing the shortcomings of current TTS models and simplifying multilingual text processing.

Fish Agent v0.1 3B features a serial fast-slow Dual Autoregressive (Dual-AR) architecture consisting of Slow and Fast Transformers. The Slow Transformer handles global linguistic structures, while the Fast Transformer captures detailed acoustic features, ensuring high-quality and natural-sounding speech synthesis. By integrating Grouped Finite Scalar Vector Quantization (GFSQ), the model achieves superior codebook utilization and compression, leading to efficient synthesis with minimal latency. Moreover, Firefly-GAN (FF-GAN), the model’s vocoder, employs enhanced vector quantization techniques to deliver high-fidelity output and stability during sequence generation. These architectural choices enable Fish Agent to excel in multilingual processing, voice cloning, and real-time applications, making it a significant step forward in the TTS field.
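To make the quantization step more concrete, here is a minimal sketch of grouped finite scalar quantization. It follows the generic finite scalar quantization idea (bound each value with `tanh`, then round it to one of a few evenly spaced levels) applied independently to each group of channels. The function names, the level counts, and the group layout are illustrative assumptions, not the configuration used by Fish Agent, and the learned projections, downsampling, and gradient handling of a real quantizer are omitted.

```python
import math


def fsq_quantize(vec, levels):
    """Quantize one group of scalars.

    Returns (quantized values in [-1, 1], integer code index).
    Assumption for this sketch: every entry of `levels` is odd and >= 3.
    """
    assert len(vec) == len(levels)
    quantized, index, base = [], 0, 1
    for z, n_levels in zip(vec, levels):
        assert n_levels >= 3 and n_levels % 2 == 1
        half = (n_levels - 1) // 2
        # Bound the value to (-1, 1), then snap it to an integer step in [-half, half].
        step = round(math.tanh(z) * half)
        quantized.append(step / half)
        # Combine per-dimension steps into one code index (mixed-radix encoding).
        index += (step + half) * base
        base *= n_levels
    return quantized, index


def gfsq_quantize(vec, n_groups, levels):
    """Split `vec` into `n_groups` equal groups and quantize each one independently.

    Returns (all quantized values, one code index per group).
    """
    group_size = len(levels)
    assert len(vec) == n_groups * group_size
    outputs, codes = [], []
    for g in range(n_groups):
        chunk = vec[g * group_size:(g + 1) * group_size]
        q, idx = fsq_quantize(chunk, levels)
        outputs.extend(q)
        codes.append(idx)
    return outputs, codes


if __name__ == "__main__":
    values, codes = gfsq_quantize([0.0, 2.0, -2.0, 0.3], n_groups=2, levels=[5, 3])
    print(values)  # [0.0, 1.0, -1.0, 0.0]
    print(codes)   # [12, 5]
```

In this toy setup each group has an implicit codebook of 5 × 3 = 15 entries. No codebook vectors are learned or stored: every index corresponds to a fixed grid point, which is one reason scalar schemes of this kind are associated with high codebook utilization.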

The importance of Fish Agent v0.1 3B lies in its ability to tackle bottlenecks that have long troubled TTS systems. Its non-G2P approach simplifies the synthesis process and allows better handling of complex linguistic phenomena and mixed-language content. Fish-Speech was trained on 720,000 hours of multilingual audio data, which has enabled the model to generalize across languages and maintain quality in multilingual contexts. Experimental evaluations indicate that Fish-Speech achieves a Word Error Rate (WER) of 6.89%, well below baseline models such as CosyVoice (22.20%) and F5-TTS (13.98%). Additionally, Fish Agent delivers a latency of just 150 ms, which makes it well suited to real-time applications. These metrics demonstrate the potential of Fish Agent v0.1 3B to advance AI-driven speech technologies.
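For readers unfamiliar with the metric, WER is the word-level edit distance between a reference transcript and a hypothesis, divided by the number of reference words. For TTS it is typically measured by transcribing the synthesized audio with a speech recognizer and comparing the result to the input text. The sketch below is a generic implementation, not the evaluation script behind the reported numbers. The function names and the example sentences are made up for illustration.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # Row-by-row Levenshtein distance over words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, 1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, 1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


def relative_reduction(baseline, value):
    """Fraction by which `value` improves on `baseline` (lower is better)."""
    return (baseline - value) / baseline


if __name__ == "__main__":
    # One substitution ("swims" -> "swim") and one deletion ("the"): 2 errors / 6 words.
    print(word_error_rate("the fish swims in the sea", "the fish swim in sea"))
    # Relative WER reduction implied by the figures quoted above.
    print(round(relative_reduction(22.20, 6.89), 3))  # vs. CosyVoice
    print(round(relative_reduction(13.98, 6.89), 3))  # vs. F5-TTS
```

Applied to the figures quoted above, a WER of 6.89% corresponds to a relative error reduction of roughly 69% against CosyVoice and roughly 51% against F5-TTS.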

Fish Agent v0.1 3B, developed by the Fish Audio Team, represents a significant breakthrough in TTS technology. By leveraging a novel Dual-AR architecture and advanced vocoder capabilities, Fish Agent addresses the inherent limitations of traditional TTS systems, particularly in multilingual and polyphonic scenarios. Its impressive performance in both linguistic feature extraction and voice cloning sets a new benchmark for AI-driven speech synthesis.

Check out the Paper, GitHub, and Model on Hugging Face. All credit for this research goes to the researchers of this project.

The post Fish Agent v0.1 3B Released: A Groundbreaking Voice-to-Voice Model Capable of Capturing and Generating Environmental Audio Information with Unprecedented Accuracy appeared first on MarkTechPost.
