Users expect chatbots to understand not only their immediate queries but also the context of the ongoing conversation. For developers, the challenge lies in creating a chatbot that scales seamlessly while maintaining conversation history across multiple sessions. Amazon DynamoDB, Amazon Bedrock, and LangChain provide a powerful combination for building robust, context-aware chatbots.

In this post, we explore how to use LangChain with DynamoDB to manage conversation history and integrate it with Amazon Bedrock to deliver intelligent, contextually aware responses. We break down the concepts behind the DynamoDB chat connector in LangChain, discuss the advantages of this approach, and guide you through the essential steps to implement it in your own chatbot.

The importance of context awareness in chatbots

Context awareness is important for creating conversational artificial intelligence (AI) that feels natural, engaging, and intelligent. In a context-aware chatbot, the bot remembers previous interactions and uses that information to inform its responses, much like a human would. This capability is essential for maintaining a coherent conversation, personalizing interactions, and delivering more accurate and relevant information to users.

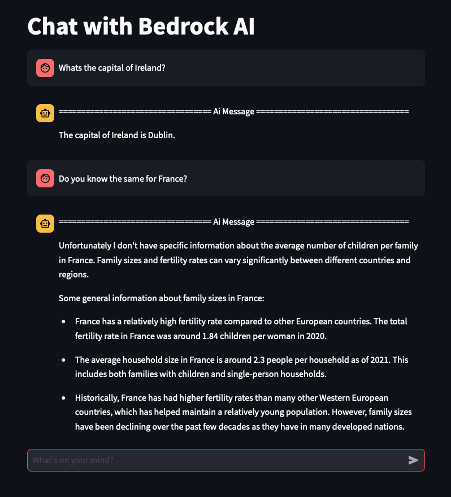

Without context awareness, a chatbot treats each user query as an isolated interaction, leading to disjointed and often frustrating user experiences. For example, if a user asks, “What’s the capital of Ireland?” followed by “How about France?”, a context-unaware bot would fail to recognize that the second question refers to the capital of France. In contrast, a context-aware bot would understand the follow-up and provide the relevant capital city, keeping the conversation flowing naturally.
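To make the difference concrete, the following minimal sketch builds the message list a model would receive for the follow-up question, with and without the earlier turn. It is plain Python with no LangChain or Amazon Bedrock calls; the role/content dictionary format and the build_request helper are illustrative assumptions, not part of any library.

```python
from typing import Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}


def build_request(history: List[Message], question: str, use_history: bool) -> List[Message]:
    """Return the messages that would be sent to the model for one turn."""
    # A context-unaware bot sends only the new question; a context-aware bot
    # prepends every earlier turn so the model can resolve the follow-up.
    prior = list(history) if use_history else []
    return prior + [{"role": "user", "content": question}]


history = [
    {"role": "user", "content": "What's the capital of Ireland?"},
    {"role": "assistant", "content": "The capital of Ireland is Dublin."},
]

stateless = build_request(history, "How about France?", use_history=False)
aware = build_request(history, "How about France?", use_history=True)

for msg in stateless:
    print("stateless:", msg["role"], "->", msg["content"])
for msg in aware:
    print("aware:    ", msg["role"], "->", msg["content"])
```

Only the second request gives the model enough information to read “How about France?” as a question about a capital city.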

The following screenshot shows a chatbot without any context awareness.

DynamoDB with LangChain for chat history

You can benefit from the following key advantages when using DynamoDB with LangChain to manage chat history:

- Enhanced user experience – By using DynamoDB for chat history, your chatbot can deliver a consistent and personalized experience. Users can pick up conversations where they left off, and the chatbot can use past interactions to inform its responses.

- Seamless integration – LangChain’s DynamoDBChatMessageHistory class stores and retrieves chat messages in DynamoDB with minimal code. Because it plugs directly into the conversation flow, chat history is managed automatically, allowing the chatbot to maintain context over time.

- Enhanced scalability – When building chatbots that interact with thousands or even millions of users, managing context becomes a non-trivial problem. DynamoDB is a serverless database designed to deliver consistent performance as traffic grows, so your chatbot can store and retrieve conversation history in real time even when many users are interacting with it simultaneously.

The following screenshot illustrates a context-aware chatbot, showcasing its ability to understand and reference previous messages in a conversation.

Solution overview

LangChain is a framework designed to simplify the creation and management of advanced language model applications, particularly those that chain multiple components together, such as data storage, prompt templates, and language models. It enables you to build context-aware chatbots by providing integrations with back-end systems like DynamoDB for storing chat history and Amazon Bedrock for generating responses.

The DynamoDB integration is centered on the DynamoDBChatMessageHistory class, which abstracts away the details of storing and retrieving chat history in DynamoDB. In the following sections, we break down the key components needed to set up the chatbot and the steps to create a UI.

Set up DynamoDB chat history

LangChain’s DynamoDBChatMessageHistory class is constructed with the name of a DynamoDB table (where the data is stored) and a session ID (which identifies this chat history session). The class provides an interface to store and retrieve messages in DynamoDB, using the session ID as the key that identifies each user’s conversation history.

Conversations are now stored in the DynamoDB table, organized by the partition key session_id (such as user123). Each session’s chat history, along with relevant metadata, is kept in the History attribute of that session’s item, so all past interactions for a session can be retrieved with a single read.
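The storage model can be illustrated without an AWS account. The following sketch is a hypothetical in-memory stand-in (the SessionHistory class and its key_name parameter are ours) that imitates the layout described above: one item per session, keyed by the partition key value, with the messages kept in a list under a History attribute. Its method names mirror those of LangChain’s chat history classes (add_user_message, add_ai_message, messages, clear), and the entry shape is loosely modeled on how LangChain serializes messages, but it is simplified and never calls DynamoDB. The real DynamoDBChatMessageHistory class persists each change to the table you configure.

```python
from typing import Any, Dict, List

# A plain dict stands in for the DynamoDB table: partition key value -> item.
Table = Dict[str, Dict[str, Any]]


class SessionHistory:
    """Hypothetical in-memory stand-in for a DynamoDB-backed chat history.

    Each session is one item: {"session_id": ..., "History": [...]}.
    """

    def __init__(self, table: Table, session_id: str, key_name: str = "session_id") -> None:
        self.table = table
        self.session_id = session_id
        self.key_name = key_name

    @property
    def messages(self) -> List[Dict[str, Any]]:
        # One lookup by key returns the whole conversation for the session.
        item = self.table.get(self.session_id)
        return list(item["History"]) if item else []

    def _append(self, msg_type: str, content: str) -> None:
        history = self.messages + [{"type": msg_type, "data": {"content": content}}]
        # Rewrite the full item, keeping exactly one item per session.
        self.table[self.session_id] = {self.key_name: self.session_id, "History": history}

    def add_user_message(self, content: str) -> None:
        self._append("human", content)

    def add_ai_message(self, content: str) -> None:
        self._append("ai", content)

    def clear(self) -> None:
        self.table.pop(self.session_id, None)


table: Table = {}
bob = SessionHistory(table, "user123")
bob.add_user_message("Hi, I'm Bob")
bob.add_ai_message("It's nice to meet you, Bob!")
SessionHistory(table, "user456").add_user_message("Hello")

print(sorted(table))                                   # ['user123', 'user456']
print(len(SessionHistory(table, "user123").messages))  # 2
```

Keeping a whole conversation in one item means a single read by key returns the full history. Because a DynamoDB item is limited to 400 KB, very long conversations may eventually need trimming or a different layout.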

Create the chat prompt template

To use the history, we need to adjust the prompt given to the AI model, and LangChain provides the ChatPromptTemplate class for this purpose. The chat prompt template defines how the chatbot combines the stored conversation history with the new question to generate responses.

The MessagesPlaceholder allows LangChain to inject the entire conversation history, including both questions and answers, into the prompt dynamically. This lets the model consider the full context of the interaction rather than just the most recent message. The flow is cumulative: each new prompt includes the complete history of previous exchanges, so the chatbot’s responses are informed by all prior interactions in the session.
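Conceptually, formatting such a template splices the stored messages in at the placeholder’s position. The following dependency-free sketch imitates that behavior; the PLACEHOLDER marker, the format_prompt helper, and the system text are illustrative assumptions, not LangChain’s implementation. In LangChain itself, the same structure would be expressed with ChatPromptTemplate.from_messages and MessagesPlaceholder(variable_name="history").

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (role, content)

# Marker standing in for MessagesPlaceholder(variable_name="history").
PLACEHOLDER = object()

TEMPLATE: List[object] = [
    ("system", "You are a helpful assistant."),
    PLACEHOLDER,
    ("human", "{question}"),
]


def format_prompt(template: List[object], history: List[Message], question: str) -> List[Message]:
    """Expand the template into concrete messages for one model call."""
    messages: List[Message] = []
    for entry in template:
        if entry is PLACEHOLDER:
            # Inject every prior question and answer, in order.
            messages.extend(history)
        else:
            role, text = entry
            messages.append((role, text.format(question=question)))
    return messages


history = [("human", "Hi, I'm Bob"), ("ai", "It's nice to meet you, Bob!")]
for role, text in format_prompt(TEMPLATE, history, "What's my name?"):
    print(f"{role}: {text}")
```

Because the whole history is injected on every call, the prompt grows with the length of the conversation.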

Integrate Amazon Bedrock with LangChain

With the chat history and prompt template set up, the next step is to integrate an Amazon Bedrock language model. This model generates responses based on the prompt template and the chat history stored in DynamoDB.

LangChain allows you to easily chain different components together. In this pipeline, the prompt template, the Amazon Bedrock model, and an output parser are chained so that user input is formatted into a prompt, sent to the model, and the model’s reply is converted into the final, context-aware response.
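LangChain composes these components with the | operator (for example, prompt | model | StrOutputParser()), so the output of each step becomes the input of the next. The following sketch mimics that idea with plain function composition so that it can run offline. The pipe helper and the fake_model function are hypothetical stand-ins; fake_model returns a dictionary shaped loosely like a chat reply and does not call Amazon Bedrock.

```python
from functools import reduce
from typing import Any, Callable, Dict, List, Tuple

Message = Tuple[str, str]  # (role, content)


def pipe(*steps: Callable[[Any], Any]) -> Callable[[Any], Any]:
    """Compose steps left to right, similar in spirit to prompt | model | parser."""
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)


def prompt(inputs: Dict[str, Any]) -> List[Message]:
    # Template step: system message, prior history, then the new question.
    return (
        [("system", "You are a helpful assistant.")]
        + list(inputs["history"])
        + [("human", inputs["question"])]
    )


def fake_model(messages: List[Message]) -> Dict[str, str]:
    # Hypothetical stand-in for a Bedrock chat model; it only reports its input size.
    return {"role": "ai", "content": f"I received {len(messages)} messages."}


def parse_text(response: Dict[str, str]) -> str:
    # Output-parser step: keep only the text of the reply.
    return response["content"]


chain = pipe(prompt, fake_model, parse_text)
print(chain({"history": [("human", "Hi"), ("ai", "Hello!")], "question": "What's new?"}))
# I received 4 messages.
```

In the real pipeline, the model step could be a LangChain Amazon Bedrock chat model such as ChatBedrock from the langchain-aws package; the composition pattern stays the same.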

Manage context across interactions

To maintain context across interactions, we use LangChain’s RunnableWithMessageHistory class. This class wraps the chain so that each interaction with the chatbot is informed by the full conversation history stored in DynamoDB.

Every time a user interacts with the chatbot, RunnableWithMessageHistory retrieves the conversation history for the session, passes it to the model along with the new message, and then appends both the new message and the model’s reply to the history in DynamoDB. This is what lets the chatbot remember past interactions and provide contextually relevant responses.
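The following sketch outlines that read, invoke, write cycle. It is a simplified, dependency-free imitation, not RunnableWithMessageHistory itself: a plain dictionary stands in for the DynamoDB table, and the names with_message_history and echo_chain are hypothetical. In LangChain, the session ID is supplied at call time through the config, for example config={"configurable": {"session_id": "user123"}}.

```python
from typing import Callable, Dict, List, Tuple

Message = Tuple[str, str]  # (role, content)
Chain = Callable[[List[Message], str], str]


def with_message_history(chain: Chain, store: Dict[str, List[Message]]) -> Callable[[str, str], str]:
    """Wrap a chain so that each call loads, uses, and updates a session's history."""

    def invoke(question: str, session_id: str) -> str:
        history = store.setdefault(session_id, [])               # 1. load prior turns
        answer = chain(list(history), question)                  # 2. call with full context
        history.extend([("human", question), ("ai", answer)])    # 3. persist both turns
        return answer

    return invoke


def echo_chain(history: List[Message], question: str) -> str:
    # Hypothetical chain: reports how much context it was given.
    return f"{question} (with {len(history)} earlier messages)"


store: Dict[str, List[Message]] = {}
chat = with_message_history(echo_chain, store)
print(chat("Hello", "user123"))         # Hello (with 0 earlier messages)
print(chat("Still there?", "user123"))  # Still there? (with 2 earlier messages)
print(chat("Hi", "user456"))            # Hi (with 0 earlier messages)
```

Because history is looked up by session ID, the third call starts with an empty context: separate sessions stay isolated from each other.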

Test the integration

Finally, we can test the chatbot by simulating a conversation and observing how it uses the stored history to generate responses:

“It’s nice to meet you, Bob! I’m an AI assistant created by Anthropic. I’m here to help with any questions or tasks you might have. Please let me know if there’s anything I can assist you with.”

“Your name is Bob, as you introduced yourself to me earlier.â€

By simulating a conversation, you can see how the chatbot remembers past interactions and uses them to generate context-aware responses. This demonstrates the effectiveness of using DynamoDB to manage chat history.
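The same kind of check can run offline by swapping the model for a deterministic fake. In the following hypothetical sketch, fake_model is a rule-based stand-in (not Amazon Bedrock) that can answer a name question only by searching the history it is given, and a plain dictionary replaces the DynamoDB table. The replies are illustrative; they only mimic the shape of the exchange shown above.

```python
import re
from typing import Dict, List, Tuple

Message = Tuple[str, str]  # (role, content)

# Matches simple self-introductions such as "I'm Bob" or "my name is Bob".
INTRO = re.compile(r"\b(?:I'm|I am|my name is)\s+(\w+)", re.IGNORECASE)


def fake_model(history: List[Message], question: str) -> str:
    """Hypothetical rule-based model used only to exercise the history plumbing."""
    intro = INTRO.search(question)
    if intro:
        return f"It's nice to meet you, {intro.group(1)}!"
    if "my name" in question.lower():
        # The answer can only come from earlier user turns in the history.
        for role, text in history:
            match = INTRO.search(text)
            if role == "human" and match:
                return f"Your name is {match.group(1)}."
        return "I don't know your name yet."
    return "How can I help?"


store: Dict[str, List[Message]] = {}


def ask(session_id: str, question: str) -> str:
    history = store.setdefault(session_id, [])
    answer = fake_model(list(history), question)
    history.extend([("human", question), ("ai", answer)])
    return answer


print(ask("user123", "Hi, I'm Bob"))      # It's nice to meet you, Bob!
print(ask("user123", "What's my name?"))  # Your name is Bob.
print(ask("user456", "What's my name?"))  # I don't know your name yet.
```

The third call uses a different session ID, so the fake model has no history to draw on, which is the behavior you would expect from a separate user’s conversation.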

Create a UI with Streamlit

Now that we understand how LangChain interacts with DynamoDB and Amazon Bedrock, let’s put it all together and wrap it in a GUI for testing. We’ll use Streamlit to build a web GUI.

Streamlit is an open-source application framework that enables you to build and deploy web applications with just a few lines of Python code. It’s particularly useful for prototyping and testing AI applications, because it allows you to quickly create interactive interfaces without requiring extensive frontend development skills.

Let’s explore an example of how you can set up a Streamlit application to interact with your Amazon Bedrock-powered chatbot. The application code should be stored in a file named app.py.

To run the application, open your terminal (Linux/macOS) or Command Prompt/PowerShell (Windows) and run the following command:

streamlit run app.py

The output provides you with the URL to visit your Streamlit application:

You can now view your Streamlit app in your browser.

The Streamlit application allows users to interact with the chatbot in real time, with the entire chat history displayed on the page. This setup makes it straightforward to test the chatbot’s ability to maintain context across multiple user interactions.

The following flow diagram illustrates the interaction between various components to deliver a fully context-aware chatbot experience.

The following screenshot shows an example interaction with the chatbot.

Summary

DynamoDB, combined with Amazon Bedrock, offers a robust, scalable solution for building chatbots that can remember and use conversation history. By storing chat history in DynamoDB, your chatbot can maintain context across sessions, enabling more natural and engaging interactions with users. And with Streamlit, you can quickly prototype and test your chatbot, bringing your conversational AI vision to life in no time.

Ready to get started? Explore our full set of DynamoDB and Amazon Bedrock chatbot examples in the following GitHub repo and start building smarter, more responsive chatbots today!

About the Author

Lee Hannigan is a Sr. DynamoDB Specialist Solutions Architect based in Donegal, Ireland. He brings a wealth of expertise in distributed systems, backed by a strong foundation in big data and analytics technologies. In his role as a DynamoDB Specialist Solutions Architect, Lee excels in assisting customers with the design, evaluation, and optimization of their workloads using the capabilities of DynamoDB.
