In Part 1 of this series, we introduced the concept of using a decentralized autonomous organization (DAO) to govern the lifecycle of an AI model, focusing on the ingestion of training data. We outlined the overall architecture, set up a large language model (LLM) knowledge base with Amazon Bedrock, and synchronized it with Ethereum Improvement Proposals (EIPs). In Part 2, we created and deployed a minimalistic smart contract on the Ethereum Sepolia testnet using Remix and MetaMask, establishing a mechanism to govern which training data can be uploaded to the knowledge base and by whom. In Part 3, we set up Amazon API Gateway and deployed AWS Lambda functions to copy data from InterPlanetary File System (IPFS) to Amazon Simple Storage Service (Amazon S3) and start a knowledge base ingestion job, creating a seamless data flow from IPFS to the knowledge base.

In this post, we demonstrate how to configure MetaMask authentication, create a frontend interface, and test the solution.

Solution overview

We add a Lambda authorizer so that the ipfs2s3 function we created in Part 3 can only be called by allowed users. To authenticate a user, we use MetaMask authentication and validate that the signer corresponds to an allowlisted Ethereum address in the smart contract.

Prerequisites

Review the prerequisites outlined in Part 1 of this series, and complete the steps in Part 1, Part 2, and Part 3 to set up the necessary components of the solution.

Set up the web3-authorizer Lambda function

In this section, we walk through the steps to set up the web3-authorizer function.

Create the web3-authorizer IAM role

Complete the following steps to create a web3-authorizer AWS Identity and Access Management (IAM) role:

- Open an AWS CloudShell terminal and upload the following files:

- web3-authorizer_trust_policy.json – The trust policy you use to create the role.

- web3-authorizer_inline_policy_template.json – The inline policy you use to create the role.

- web3-authorizer.py – The Python code of the function.

- web3-authorizer.py.zip – A .zip version of the previous file that you use to create the web3-authorizer function.

- Create a JSON document for the inline policy (this policy is required to grant the Lambda function the rights to write logs to Amazon CloudWatch):

- Create a Lambda execution role:

- Attach the inline policy previously generated:
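As a rough illustration of what the two policy documents typically contain, the following Python sketch builds a Lambda trust policy and a CloudWatch Logs inline policy. The contents of the actual web3-authorizer_trust_policy.json and web3-authorizer_inline_policy_template.json files are not shown in this post, so the statements below are an assumption modeled on a standard Lambda execution role. The Region and account ID are placeholders.

```python
import json


def lambda_trust_policy() -> dict:
    """Trust policy that lets the Lambda service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "lambda.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def logs_inline_policy(region: str, account_id: str, function_name: str) -> dict:
    """Inline policy that allows the function to write its logs to CloudWatch."""
    log_group = (
        f"arn:aws:logs:{region}:{account_id}:log-group:/aws/lambda/{function_name}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "logs:CreateLogGroup",
                "Resource": f"arn:aws:logs:{region}:{account_id}:*",
            },
            {
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": f"{log_group}:*",
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder Region and account ID; replace them with your own values.
    print(json.dumps(lambda_trust_policy(), indent=2))
    print(json.dumps(
        logs_inline_policy("us-east-1", "123456789012", "web3-authorizer"), indent=2
    ))
```

Scoping the log permissions to the function's own log group keeps the role limited to what the authorizer needs.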

Prepare a web3 Lambda layer

The Lambda authorizer needs to validate the signature generated by MetaMask and connect to the Sepolia testnet to check the content of the smart contract. To do that, it uses the web3.py library. To make this library available to the Lambda execution environment, you first create a web3 Lambda layer. Complete the following steps:

- Validate that the installed Python version is 3.9 (you may need to modify the following instructions if the Python version is higher):

- Create a virtual environment and install the web3 Python package inside it:

- Validate that the web3 Python package and all its dependencies have been installed inside the virtual environment:

- Copy all those packages inside a python folder and compress it into a .zip file:

When used inside a Lambda layer, this .zip file is automatically decompressed and mounted inside the Lambda execution environment, so the Lambda function can use the web3 package.

- Finally, create the layer:

- Record the layer version ARN:
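To illustrate the layer layout described in these steps (all packages placed under a top-level python folder), here is a minimal Python sketch that packages a virtual environment's site-packages directory into a .zip file. The function name and the paths in the usage section are hypothetical; the post itself performs this step with shell commands in CloudShell.

```python
import sys
import zipfile
from pathlib import Path


def build_layer_zip(site_packages: Path, zip_path: Path) -> int:
    """Zip every file under site_packages below a top-level python/ folder.

    Returns the number of files written to the archive.
    """
    count = 0
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for file in sorted(site_packages.rglob("*")):
            if file.is_file():
                # Lambda looks for Python packages under python/ inside the layer.
                arcname = Path("python") / file.relative_to(site_packages)
                archive.write(file, arcname.as_posix())
                count += 1
    return count


if __name__ == "__main__":
    # The post assumes Python 3.9; the layer should be built with the same
    # minor version as the Lambda runtime.
    print("Python version:", ".".join(map(str, sys.version_info[:2])))

    # Hypothetical virtual environment location; adjust it to your setup.
    site_packages = Path("web3-venv/lib/python3.9/site-packages")
    if site_packages.is_dir():
        written = build_layer_zip(site_packages, Path("web3-layer.zip"))
        print(f"Wrote {written} files to web3-layer.zip")
```

Keep the output .zip file outside the directory being archived so that it is not added to itself.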

Create the web3-authorizer Lambda function

Complete the following steps to create the web3-authorizer Lambda function:

- Open the web3-authorizer.py file and review its content.

The main function is a Lambda handler that extracts a CID and a signed message from the request, then connects to the smart contract and validates that it contains a mapping between the signer of the message and the CID. The function uses three environment variables:

- CHAIN_ID – The ID of the chain (11155111 for Sepolia).

- RPC_ENDPOINT – The web3 Sepolia endpoint the authorizer needs to connect to. You can request an API key from Infura to generate such an endpoint (you may want to use your own RPC endpoint here, or an endpoint from another provider).

- CONTRACT_ADDRESS – The address of the contract you deployed in Part 2, in hexadecimal format. You should have recorded this value in Part 2. If not, you can look it up on Etherscan.

- Configure these variables:

- Create the Lambda function:
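The control flow of such an authorizer can be sketched as follows. This is not the content of web3-authorizer.py, which is not reproduced in this post. It is a simplified outline in which signature recovery and the smart contract lookup are passed in as two hypothetical callables (recover_signer and is_allowed), so the example runs without web3.py. In a real function, the signer would typically be recovered with web3.py or eth_account (for example, Account.recover_message with encode_defunct), and the lookup would be a read-only call to the contract. The check that the message contains the CID is also an assumption.

```python
import os
from typing import Callable, Mapping


def load_config(env: Mapping[str, str] = os.environ) -> dict:
    """Read the three environment variables used by the authorizer."""
    return {
        "chain_id": int(env["CHAIN_ID"]),
        "rpc_endpoint": env["RPC_ENDPOINT"],
        "contract_address": env["CONTRACT_ADDRESS"],
    }


def make_handler(
    recover_signer: Callable[[str, str], str],
    is_allowed: Callable[[str, str], bool],
):
    """Build a Lambda handler from two injected helpers (both hypothetical).

    recover_signer(message, signature) returns the Ethereum address that signed.
    is_allowed(signer, cid) returns True if the contract maps the signer to the CID.
    """

    def handler(event: dict, context: object) -> dict:
        params = event.get("queryStringParameters") or {}
        cid = params.get("cid")
        message = params.get("message")
        signature = params.get("signature")

        # Deny the request if any of the three values is missing.
        if not (cid and message and signature):
            return {"isAuthorized": False}

        # Assumption: the signed message must mention the requested CID.
        if cid not in message:
            return {"isAuthorized": False}

        try:
            signer = recover_signer(message, signature)
            return {"isAuthorized": bool(is_allowed(signer, cid))}
        except Exception as error:
            # A malformed signature or an RPC failure results in a denial.
            print(f"Authorization failed: {error}")
            return {"isAuthorized": False}

    return handler
```

The handler returns the simple response format ({"isAuthorized": true or false}) of HTTP API Lambda authorizers, and it denies by default whenever validation cannot be completed.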

Test the web3-authorizer Lambda function

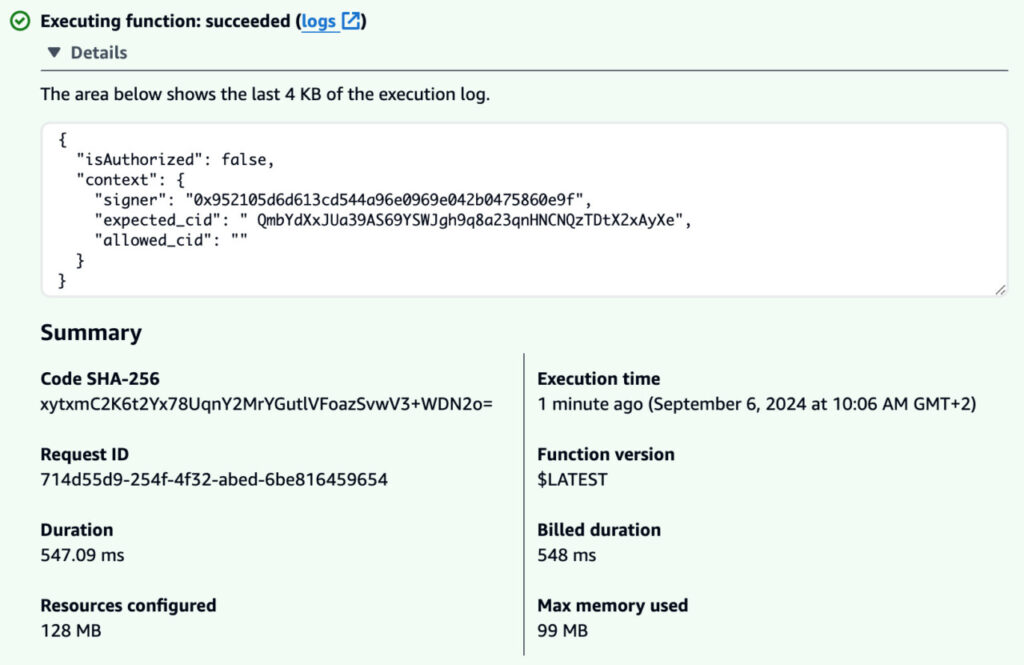

Let’s test the web3-authorizer function using the CID you uploaded to the smart contract in Part 2, and a bad signature. We expect the web3-authorizer function to return a negative result. We simulate a request to the web3-authorizer function using the HTTP authorizer payload format v2.0.
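A minimal sketch of such a test event, built in Python, might look like the following. Only the fields relevant to this test are included. The rawPath value, the message text, and the deliberately invalid signature are illustrative assumptions, and a real event generated by API Gateway carries more fields.

```python
import json


def build_test_event(cid: str, message: str, signature: str) -> dict:
    """Build a reduced HTTP authorizer event (payload format version 2.0)."""
    return {
        "version": "2.0",
        "type": "REQUEST",
        "rawPath": "/ipfs2s3",  # assumed route path
        "queryStringParameters": {
            "cid": cid,
            "message": message,
            "signature": signature,
        },
    }


if __name__ == "__main__":
    event = build_test_event(
        cid="QmbYdXxJUa39AS69YSWJgh9q8a23qnHNCNQzTDtX2xAyXe",  # yours might differ
        message="hypothetical message",
        signature="0x" + "00" * 65,  # deliberately invalid signature
    )
    # Paste the printed JSON into the Lambda console test event editor.
    print(json.dumps(event, indent=2))
```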

- On the Lambda console, open the web3-authorizer function.

- Create the following test event:

This test event contains a JSON document with the three key-value pairs that are expected by the authorizer (cid, message, and signature). For this test, they’re embedded in an object given as the value of the queryStringParameters key. API Gateway generates this structure automatically.

- Choose Test to test the function.

The execution logs should be similar to the following screenshot.

Set up the crypto-ai-frontend Lambda function

We use MetaMask to generate the signature using the same account as the one that we recorded in the smart contract. To do that, you create a new index Lambda function that will return a frontend page to your browser.

Create the crypto-ai-frontend IAM role

Complete the following steps to create a crypto-ai-frontend IAM role:

- Open a CloudShell terminal and upload the following files:

- crypto-ai-frontend_trust_policy.json – The trust policy you use to create the role.

- crypto-ai-frontend_inline_policy_template.json – The inline policy you use to create the role.

- crypto-ai-frontend.py – The file containing the code of the crypto-ai-frontend function.

- crypto-ai-frontend.py.zip – A .zip version of the previous file.

- Create a JSON document for the inline policy (this policy is required to grant the Lambda function the rights to write logs to CloudWatch):

- Create a Lambda execution role:

- Attach the inline policy previously generated:

Create the crypto-ai-frontend Lambda function

Review the function contained in the crypto-ai-frontend.py file. It returns HTML code that will be rendered in the browser. This code contains JavaScript functions that interact with MetaMask through the window.ethereum object and ask MetaMask to sign a message containing the CID that is provided in a form. The signed message is then used as a query parameter (along with the CID and the message) in a query to the ipfs2s3 route of the API gateway.
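The general shape of such a function can be sketched as follows. This is not the content of crypto-ai-frontend.py. The page markup, the message format ('Upload ' followed by the CID), the filename query parameter name, the use of a GET request, and the /ipfs2s3 path relative to the default stage are all assumptions made for illustration.

```python
# Minimal HTML page with a form and a script that signs a message with MetaMask.
PAGE = """<!DOCTYPE html>
<html>
<body>
  <form id="upload">
    <input id="cid" placeholder="IPFS CID">
    <input id="filename" placeholder="Filename">
    <button type="submit">Upload Training Data</button>
  </form>
  <pre id="result"></pre>
  <script>
    document.getElementById('upload').addEventListener('submit', async (e) => {
      e.preventDefault();
      const cid = document.getElementById('cid').value;
      const filename = document.getElementById('filename').value;

      // Ask MetaMask for the active account.
      const [account] = await window.ethereum.request(
        { method: 'eth_requestAccounts' });

      // Hypothetical message format: any text that contains the CID.
      const message = 'Upload ' + cid;
      const hex = '0x' + Array.from(new TextEncoder().encode(message),
        (b) => b.toString(16).padStart(2, '0')).join('');

      // Ask MetaMask to sign the hex-encoded message with that account.
      const signature = await window.ethereum.request(
        { method: 'personal_sign', params: [hex, account] });

      // Call the ipfs2s3 route with the values the authorizer expects.
      const query = new URLSearchParams({ cid, filename, message, signature });
      const response = await fetch('/ipfs2s3?' + query.toString());
      document.getElementById('result').textContent = await response.text();
    });
  </script>
</body>
</html>"""


def handler(event, context):
    """Return the frontend page as an HTML response."""
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "text/html; charset=utf-8"},
        "body": PAGE,
    }
```

Serving the page from the same API as the ipfs2s3 route keeps the request same-origin, so no CORS configuration is needed for this sketch.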

Create the crypto-ai-frontend Lambda function using the following command:

Create a route to the crypto-ai-frontend Lambda function

Complete the following steps to create a route to the crypto-ai-frontend function:

- On the API Gateway console, choose APIs in the navigation pane.

- Choose the crypto-ai API.

- Under Routes, choose Create.

- Keep the default settings and choose Create.

- Open the route you created.

- Under Route details and Integration, choose Attach integration.

- Choose Create and attach an integration.

- For Integration type, choose Lambda function.

- For Lambda function, choose the crypto-ai-frontend function.

- Choose Create.

Now you can connect to the API’s default endpoint with your browser. You should see the following page.

Create an authorizer for the ipfs2s3 route

Complete the following steps to use the web3-authorizer Lambda function to create an authorizer for the ipfs2s3 route:

- On the API Gateway console, choose APIs in the navigation pane.

- Choose the crypto-ai API.

- Choose the ipfs2s3 route.

- Under Route details and Authorization, choose Attach authorization.

- Choose Create and attach an authorizer.

- For Authorizer type, choose Lambda.

- For Name, enter a name (for example, web3-authorizer).

- For Lambda function, choose the web3-authorizer function.

- For Payload format version, choose 2.0.

- For Response mode, choose Simple.

- For Identity sources, configure the following expressions:

- $request.querystring.cid

- $request.querystring.message

- $request.querystring.signature

- Choose Create and attach.
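For readers who prefer to script this step, the console settings above correspond roughly to the following parameters. The sketch only builds the parameter dictionary. Passing it to the create_authorizer operation of the API Gateway V2 API (for example, through boto3) is left as an assumption, as are the function name authorizer_settings and the placeholder identifiers in the usage section.

```python
def authorizer_settings(
    api_id: str, region: str, lambda_arn: str, name: str = "web3-authorizer"
) -> dict:
    """Map the console choices to API Gateway V2 authorizer parameters."""
    return {
        "ApiId": api_id,
        "Name": name,
        "AuthorizerType": "REQUEST",              # Lambda authorizer
        "AuthorizerPayloadFormatVersion": "2.0",  # Payload format version
        "EnableSimpleResponses": True,            # Response mode: Simple
        "IdentitySource": [
            "$request.querystring.cid",
            "$request.querystring.message",
            "$request.querystring.signature",
        ],
        "AuthorizerUri": (
            f"arn:aws:apigateway:{region}:lambda:path/2015-03-31/functions/"
            f"{lambda_arn}/invocations"
        ),
    }


if __name__ == "__main__":
    # Placeholder identifiers; replace them with your own values.
    settings = authorizer_settings(
        api_id="abc123",
        region="us-east-1",
        lambda_arn="arn:aws:lambda:us-east-1:123456789012:function:web3-authorizer",
    )
    for key, value in settings.items():
        print(f"{key}: {value}")
```

Because all three query string parameters are declared as identity sources, a request that omits one of them should be rejected by API Gateway without the authorizer being invoked.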

Make an authenticated request to the ipfs2s3 Lambda function

Complete the following steps to make an authenticated request to the ipfs2s3 function:

- In your browser, enter the following values in the form:

- For the IPFS CID, enter the value of the CID that you recorded in Part 2 after uploading the Ethereum yellowpaper to the IPFS pinning service (yours might be different): QmbYdXxJUa39AS69YSWJgh9q8a23qnHNCNQzTDtX2xAyXe.

- For the filename, enter ethereum-yellowpaper.pdf.

- Choose Upload Training Data and confirm the message signature in MetaMask.

- Check the logs of the web3-authorizer and ipfs2s3 Lambda functions to confirm that the request was successfully authorized and that the IPFS file was successfully uploaded to the S3 bucket.

- Validate that the knowledge base data source has been re-synced.

You could also query the knowledge base about information that is specifically mentioned in the Ethereum yellowpaper.
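When checking the logs, it can help to know what the request sent by the frontend looks like. The following sketch assembles such a URL in Python. The endpoint, the message text, and the filename parameter name are hypothetical, and the signature is a placeholder rather than a real MetaMask signature.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_ipfs2s3_url(
    endpoint: str, cid: str, filename: str, message: str, signature: str
) -> str:
    """Assemble the query sent to the ipfs2s3 route (parameter names assumed)."""
    query = urlencode(
        {"cid": cid, "filename": filename, "message": message, "signature": signature}
    )
    return f"{endpoint.rstrip('/')}/ipfs2s3?{query}"


if __name__ == "__main__":
    url = build_ipfs2s3_url(
        endpoint="https://example.execute-api.us-east-1.amazonaws.com",  # placeholder
        cid="QmbYdXxJUa39AS69YSWJgh9q8a23qnHNCNQzTDtX2xAyXe",
        filename="ethereum-yellowpaper.pdf",
        message="hypothetical message",
        signature="0x...",  # placeholder for the signature returned by MetaMask
    )
    print(url)
    # The authorizer reads cid, message, and signature from the query string.
    print(parse_qs(urlsplit(url).query))
```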

Clean up

Complete the following steps to clean up the different elements that you built over the last four posts:

- On the Amazon Bedrock console, delete the crypto-ai-kb knowledge base.

- On the Amazon OpenSearch Service console, delete the collection that corresponds to the knowledge base (its name should start with bedrock-knowledge-base).

- On the Amazon S3 console, empty and delete the crypto-ai-<your account number> S3 bucket.

- On the API Gateway console, delete the crypto-ai API.

- On the Lambda console, delete the web3-authorizer, ipfs2s3, crypto-ai-frontend, and s32kb functions. Delete all the versions of the web3-layer layer.

- On the IAM console, delete the web3-authorizer, ipfs2s3, crypto-ai-frontend, and s32kb roles. Delete the role that was created for the knowledge base (its name should start with AmazonBedrockExecutionRoleForKnowledgeBase).

Conclusion

Congratulations! Going through the four posts of this series, you successfully implemented an architecture that allowed you to authenticate yourself using your web3 identity and to upload AI training data after being allowed to do so using a smart contract.

In Part 1, you used Amazon Bedrock Knowledge Bases to build a repository of information to be used with an LLM. In Part 2, you created a smart contract. In Part 3, you created an API gateway and Lambda functions to interact with IPFS, Amazon S3, and an Amazon Bedrock knowledge base. Finally, in Part 4, you built a user experience frontend using MetaMask for authentication.

There is much to be explored at the intersection of blockchain and generative AI using AWS, where you can get the broadest and deepest generative AI services to build your customer experiences. Share your ideas and experiences as you continue to experiment on your own with web3 and AI solutions on AWS.

About the Authors

Guillaume Goutaudier is a Sr Enterprise Architect at AWS. He helps companies build strategic technical partnerships with AWS. He is also passionate about blockchain technologies, and is a member of the Technical Field Community for blockchain.

Shankar Subramaniam is a Sr Enterprise Architect in the AWS Partner Organization aligned with Strategic Partnership Collaboration and Governance (SPCG) engagements. He is a member of the Technical Field Community for Artificial Intelligence and Machine Learning.
