In Part 1 of this series, we introduced the concept of using a decentralized autonomous organization (DAO) to govern the lifecycle of an AI model, focusing on the ingestion of training data. We outlined the overall architecture, set up a large language model (LLM) knowledge base with Amazon Bedrock, and synchronized it with Ethereum Improvement Proposals (EIPs). In Part 2, we created and deployed a minimalistic smart contract on the Ethereum Sepolia testnet using Remix and MetaMask, establishing a mechanism to govern which training data can be uploaded to the knowledge base and by whom.

In this post, we set up Amazon API Gateway and deploy AWS Lambda functions to copy data from InterPlanetary File System (IPFS) to Amazon Simple Storage Service (Amazon S3) and start a knowledge base ingestion job.

Solution overview

In Part 2, we created a smart contract that contains IPFS file identifiers and Ethereum addresses that are allowed to upload the content of those IPFS files to train the model.

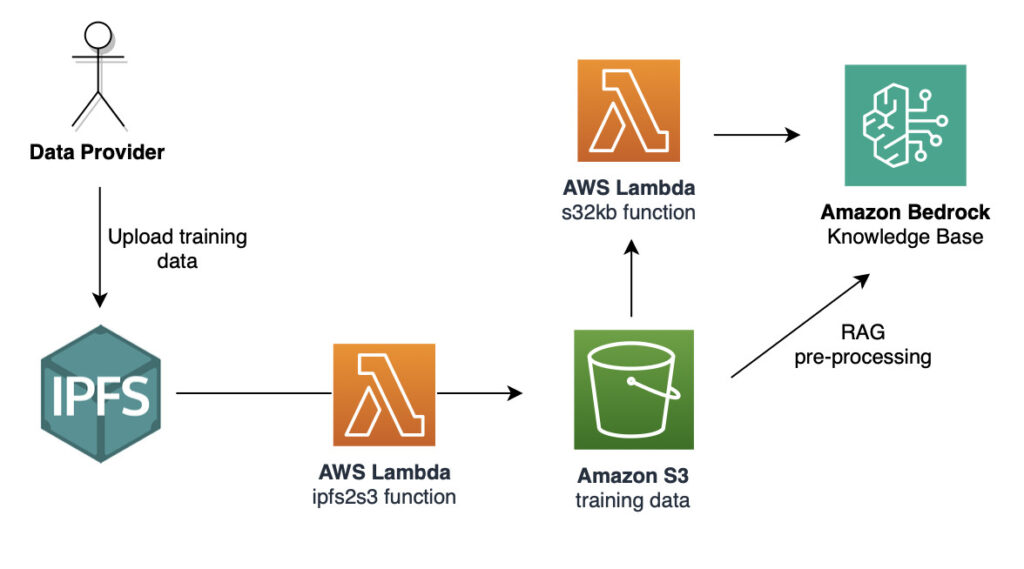

In this post, we focus on the path that this data can take from a trusted data provider (the owner of one of those Ethereum addresses) to the LLM knowledge base. The following diagram illustrates the data flow.

We create the following elements to implement this data flow:

- A Lambda function called s32kb that refreshes the content of the knowledge base when new content is added to the S3 bucket

- An S3 trigger to invoke the s32kb Lambda function

- A Lambda function called ipfs2s3 that uploads content from IPFS to the S3 bucket

- An API gateway to call the ipfs2s3 function

Prerequisites

Review the prerequisites outlined in Part 1 of this series, and complete the steps in Part 1 and Part 2 to set up the necessary components of the solution.

Set up the s32kb Lambda function

In this section, we walk through the steps to set up the s32kb function.

Create the s32kb IAM role

Before you create the Lambda function, you need to create an AWS Identity and Access Management (IAM) role that the Lambda function will use during its execution. Complete the following steps:

- Open an AWS CloudShell terminal and upload the following files:

- s32kb_trust_policy.json – The trust policy you use to create the role used by the s32kb function.

- s32kb_inline_policy_template.json – The inline policy you use to create the role used by the s32kb function.

- s32kb.py – The Python script you use to create a Lambda function that automatically updates the knowledge base when new files are uploaded to the S3 bucket.

- s32kb.py.zip – The .zip file containing the Lambda code (s32kb.py).

- Create a JSON document for the inline policy (this policy grants the Lambda function permission to write logs to Amazon CloudWatch):

- Create the IAM role:

- Attach the AmazonBedrockFullAccess managed policy:

- Attach the inline policy previously generated:
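As a minimal Python (boto3) sketch of these steps, the following code assumes that the trust policy lets the Lambda service assume the role and that the inline policy follows the usual CloudWatch Logs pattern for a Lambda function. The actual contents of s32kb_trust_policy.json and s32kb_inline_policy_template.json may differ. The role name, inline policy name, Region, and account ID are values you choose, and they are not taken from the original files.

```python
import json


def trust_policy() -> dict:
    """Trust policy that lets the AWS Lambda service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "lambda.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def logs_inline_policy(region: str, account_id: str, function_name: str) -> dict:
    """Assumed inline policy: the function may write to its own CloudWatch log group."""
    account_logs = f"arn:aws:logs:{region}:{account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "logs:CreateLogGroup",
                "Resource": f"{account_logs}:*",
            },
            {
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": f"{account_logs}:log-group:/aws/lambda/{function_name}:*",
            },
        ],
    }


def create_lambda_role(iam, role_name: str, managed_policy_arn: str,
                       inline_policy: dict, inline_policy_name: str) -> str:
    """Create the role, attach a managed policy, add the inline policy; return the role ARN.

    `iam` is a boto3 IAM client (boto3.client("iam")). It is passed in so the
    function can be exercised without AWS credentials.
    """
    role = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(trust_policy()),
    )
    iam.attach_role_policy(RoleName=role_name, PolicyArn=managed_policy_arn)
    iam.put_role_policy(
        RoleName=role_name,
        PolicyName=inline_policy_name,
        PolicyDocument=json.dumps(inline_policy),
    )
    return role["Role"]["Arn"]
```

For the s32kb role, you might pass boto3.client("iam"), a role name such as s32kb-role (hypothetical), the arn:aws:iam::aws:policy/AmazonBedrockFullAccess managed policy ARN, and logs_inline_policy(region, account_id, "s32kb"). The same helper also fits the ipfs2s3 role later in this post if you pass the AmazonS3FullAccess policy ARN instead.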

Create the s32kb Lambda function

Complete the following steps to create the s32kb function:

- Open the s32kb.py file with your preferred editor (for this post, we use vi), and explore its content.

The file initializes an Amazon Bedrock agent client and uses it to start a knowledge base ingestion job. Two environment variables also need to be set:

- The KB_ID variable, which contains the ID of the knowledge base

- The KB_DATA_SOURCE_ID variable, which contains the ID of the data source (the S3 bucket)

- Complete the following steps to look up those values:

- On the Amazon Bedrock console, choose Knowledge bases in the navigation pane.

- Choose the crypto-ai-kb knowledge base.

- Note the knowledge base ID under Knowledge base overview.

- Under Data source, choose the EIPs data source.

- Note the data source ID under Data source overview.

- Record those values in CloudShell:

- Create the Lambda function:
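The following is a minimal handler that does what the description of s32kb.py says: it reads KB_ID and KB_DATA_SOURCE_ID and starts an ingestion job through the boto3 bedrock-agent client. It is a sketch, not the contents of s32kb.py. The optional client argument and the returned dictionary are our own choices. They let you test the handler without AWS access.

```python
import os

_client = None


def _bedrock_agent():
    """Create the bedrock-agent client once per execution environment."""
    global _client
    if _client is None:
        import boto3  # bundled with the Lambda Python runtime
        _client = boto3.client("bedrock-agent")
    return _client


def lambda_handler(event, context, client=None):
    """Start a knowledge base ingestion job; invoked by the S3 trigger.

    The S3 event itself is not inspected: any create or delete event
    triggers a sync of the whole data source. `client` is optional so
    the handler can be tested with a stand-in object.
    """
    client = client or _bedrock_agent()
    response = client.start_ingestion_job(
        knowledgeBaseId=os.environ["KB_ID"],
        dataSourceId=os.environ["KB_DATA_SOURCE_ID"],
    )
    status_code = response["ResponseMetadata"]["HTTPStatusCode"]
    # Printed output goes to CloudWatch Logs; 202 means the job was accepted.
    print(f"HTTPStatusCode: {status_code}")
    return {
        "statusCode": status_code,
        "ingestionJobId": response["ingestionJob"]["ingestionJobId"],
    }
```

If several files arrive at once, Amazon Bedrock may reject a start request while another ingestion job for the same data source is still running. This sketch does not retry in that case.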

Create an S3 trigger to invoke the s32kb Lambda function

Complete the following steps to configure a trigger to run the s32kb function automatically whenever a new file is uploaded to the S3 bucket:

- On the Lambda console, choose Functions in the navigation pane.

- Choose the s32kb function.

- Choose Add trigger.

- Under Trigger configuration, for Select a source, choose S3.

- For Bucket, choose the crypto-ai-kb-<your_account_id> bucket.

- For Event types, select All object create events and All object delete events.

- Select the acknowledgement check box.

- Choose Add.
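You can also script the trigger instead of using the console. The following boto3 sketch roughly reproduces the console configuration. It grants Amazon S3 permission to invoke the function, then registers the function for object-created and object-removed events on the bucket. The statement ID format is a hypothetical choice. The acknowledgement check box exists because a function that writes to the bucket that triggers it can invoke itself recursively. s32kb only starts an ingestion job and never writes to the bucket, so this risk does not apply here.

```python
def configure_s3_trigger(lambda_client, s3_client, bucket: str,
                         function_arn: str, account_id: str) -> dict:
    """Sketch of the console's "Add trigger" step; returns the notification config used."""
    # 1. Resource-based policy: only this bucket, in this account, may invoke the function.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"s3-invoke-{bucket}",  # hypothetical naming scheme
        Action="lambda:InvokeFunction",
        Principal="s3.amazonaws.com",
        SourceArn=f"arn:aws:s3:::{bucket}",
        SourceAccount=account_id,
    )
    # 2. Route all object-created and object-removed events to the function.
    config = {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                "Events": ["s3:ObjectCreated:*", "s3:ObjectRemoved:*"],
            }
        ]
    }
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket, NotificationConfiguration=config
    )
    return config
```

The put_bucket_notification_configuration call replaces the bucket's entire notification configuration. If the bucket already has other notifications, merge them into the configuration before you call it.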

Test the s32kb Lambda function

Let’s add a new file to the bucket and validate that the trigger invokes the Lambda function. To follow up on our investigation of danksharding in Part 1, we enrich the knowledge base with the network upgrade specification of the “Cancun” upgrade:

- Open a CloudShell terminal and enter the following commands:

- Check that the Lambda function was successfully run:

- On the CloudWatch console, navigate to the /aws/lambda/s32kb log group.

- Check that a log stream with a Last event time value corresponding to the current time exists, and choose it.

- Review the logs and confirm that the Lambda function returned an HTTPStatusCode of 202.

- Also check the status of the Amazon Bedrock job:

- On the Amazon Bedrock console, navigate to the crypto-ai-kb knowledge base.

- Under Data source, validate that the Last sync time value corresponds to the current time.
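You can also check the most recent ingestion job from a script instead of the console. The following minimal sketch assumes a boto3 bedrock-agent client and the knowledge base and data source IDs you recorded earlier. It asks for the newest job only.

```python
def latest_ingestion_status(client, kb_id: str, data_source_id: str):
    """Return (status, startedAt) of the most recent ingestion job, or None if none exist."""
    response = client.list_ingestion_jobs(
        knowledgeBaseId=kb_id,
        dataSourceId=data_source_id,
        sortBy={"attribute": "STARTED_AT", "order": "DESCENDING"},  # newest first
        maxResults=1,
    )
    summaries = response.get("ingestionJobSummaries", [])
    if not summaries:
        return None
    job = summaries[0]
    return job["status"], job["startedAt"]
```

A status of COMPLETE with a startedAt timestamp close to your upload time shows that both the trigger and the ingestion job worked. STARTING or IN_PROGRESS means the sync is still running.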

If you want to go further, you can query the knowledge base about information that is specifically mentioned in the network upgrade specification.
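For example, the following minimal retrieval sketch uses a boto3 bedrock-agent-runtime client to return the chunks that best match a question, along with their relevance scores. The top_k default is illustrative. The retrieve call returns only matching source chunks. It does not generate an answer.

```python
def query_kb(client, kb_id: str, question: str, top_k: int = 3) -> list:
    """Return (score, text) pairs for the chunks that best match `question`."""
    response = client.retrieve(
        knowledgeBaseId=kb_id,
        retrievalQuery={"text": question},
        retrievalConfiguration={
            "vectorSearchConfiguration": {"numberOfResults": top_k}
        },
    )
    return [
        (result.get("score"), result["content"]["text"])
        for result in response["retrievalResults"]
    ]
```

You would create the client with boto3.client("bedrock-agent-runtime") and ask a question that only the newly added specification can answer. If its text appears among the results, the new content has been ingested.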

Set up the ipfs2s3 Lambda function

In this section, we walk through the steps to set up the ipfs2s3 function.

Create the ipfs2s3 IAM role

Complete the following steps to create the ipfs2s3 IAM role:

- Open a CloudShell terminal and upload the following files:

- ipfs2s3_trust_policy.json – The trust policy you use to create the role.

- ipfs2s3_inline_policy_template.json – The inline policy you use to create the role.

- ipfs2s3.py – The Python script you use to create a Lambda function that uploads files from IPFS to an S3 bucket.

- ipfs2s3.py.zip – The .zip file containing the Lambda code (ipfs2s3.py).

- Create a JSON document for the inline policy (this policy grants the Lambda function permission to write logs to CloudWatch):

- Create a Lambda execution role:

- Attach the AmazonS3FullAccess managed policy:

- Attach the inline policy previously generated:

Create the ipfs2s3 Lambda function

To download a file from the IPFS network and upload it to Amazon S3, we create an ipfs2s3 Lambda function. The function connects to a public IPFS gateway and downloads the file identified by the CID passed as an event parameter. It then uploads the downloaded file to the S3 bucket configured in an environment variable, using the file name that you also provide as an event parameter.

For increased security and resiliency, you could create an IPFS node (or cluster) in your environment, and update the IPFS_GW_ENDPOINT environment variable to point to your own IPFS gateway. For detailed instructions on how to create your own IPFS infrastructure, refer to the IPFS on AWS series.
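The following is a minimal sketch of such a handler, not the contents of ipfs2s3.py. It assumes that IPFS_GW_ENDPOINT holds the gateway base URL and that the gateway serves files at the path-style /ipfs/<CID> route. The S3_BUCKET variable name and the cid and filename parameter names are hypothetical. API Gateway delivers an HTTP API event (payload format 2.0), so the handler reads the parameters from the query string, from a JSON body, or from both.

```python
import base64
import json
import os
import urllib.request

_s3 = None


def _s3_client():
    """Create the S3 client once per execution environment."""
    global _s3
    if _s3 is None:
        import boto3  # bundled with the Lambda Python runtime
        _s3 = boto3.client("s3")
    return _s3


def _download(url: str) -> bytes:
    """Fetch the whole object from the IPFS gateway into memory."""
    with urllib.request.urlopen(url, timeout=60) as resp:
        return resp.read()


def get_params(event: dict) -> tuple:
    """Read the CID and target file name from a payload 2.0 event (hypothetical names)."""
    params = dict(event.get("queryStringParameters") or {})
    body = event.get("body")
    if body:
        if event.get("isBase64Encoded"):
            body = base64.b64decode(body).decode("utf-8")
        params.update(json.loads(body))
    return params["cid"], params["filename"]


def lambda_handler(event, context, s3_client=None, fetch=None):
    try:
        cid, filename = get_params(event)
    except (KeyError, TypeError, ValueError):
        return {"statusCode": 400, "body": json.dumps("Expected 'cid' and 'filename'")}
    gateway = os.environ["IPFS_GW_ENDPOINT"].rstrip("/")
    data = (fetch or _download)(f"{gateway}/ipfs/{cid}")
    (s3_client or _s3_client()).put_object(
        Bucket=os.environ["S3_BUCKET"],  # hypothetical variable name
        Key=filename,
        Body=data,
    )
    message = f"Successfully uploaded {filename} from CID {cid}"
    return {"statusCode": 200, "body": json.dumps(message)}
```

Because the function holds the whole file in memory, its memory setting limits the size of file it can handle. Its timeout also needs to leave room for the gateway download.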

Open the file ipfs2s3.py and review its content. Then create the Lambda function using the following command:
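A boto3 equivalent of this step could look like the following sketch, which creates a Python function from the .zip archive you uploaded. The runtime version, the handler name (for example, ipfs2s3.lambda_handler), and the 60-second timeout are assumptions. Lambda's default timeout is 3 seconds, which is short for downloading files from IPFS. The same helper could also create s32kb with the KB_ID and KB_DATA_SOURCE_ID variables.

```python
def create_function(lambda_client, name: str, role_arn: str, zip_bytes: bytes,
                    handler: str, env: dict, timeout: int = 60) -> str:
    """Create a Python Lambda function from an in-memory .zip archive; return its ARN."""
    response = lambda_client.create_function(
        FunctionName=name,
        Runtime="python3.12",  # assumption: any supported Python runtime works
        Role=role_arn,
        Handler=handler,  # "<module>.<function>"
        Code={"ZipFile": zip_bytes},
        Environment={"Variables": env},
        Timeout=timeout,  # seconds
    )
    return response["FunctionArn"]
```

To use the helper, read the archive with open("ipfs2s3.py.zip", "rb").read(). Pass it together with the role ARN from the previous step and an environment that contains IPFS_GW_ENDPOINT and the bucket variable that ipfs2s3.py expects.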

Create an API gateway

Complete the following steps to create an API gateway:

- On the API Gateway console, choose APIs in the navigation pane.

- Choose Create API.

- Under HTTP API, choose Build.

- For API name, enter a name (for example, crypto-ai).

- Under Create and configure integrations, choose Add integration.

- Choose Lambda.

- For Lambda function, choose the ipfs2s3 function.

- For Version, choose 2.0.

- Choose Next.

- Review the default route and choose Next.

- Review the default stage and choose Next.

- Choose Create.

- Open the newly created API and record the default endpoint:
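You can approximate these console steps with the API Gateway quick create call. Quick create builds an HTTP API with a default route and stage that point at a single Lambda integration. The console also grants API Gateway permission to invoke the function. In a script, you add that resource-based policy yourself. The following minimal boto3 sketch assumes you know the function ARN, Region, and account ID. The statement ID format is hypothetical.

```python
def create_http_api(apigw_client, lambda_client, name: str, function_arn: str,
                    region: str, account_id: str) -> str:
    """Quick-create an HTTP API in front of a Lambda function; return its endpoint URL."""
    # `apigw_client` is boto3.client("apigatewayv2"); Target triggers quick create.
    api = apigw_client.create_api(Name=name, ProtocolType="HTTP", Target=function_arn)
    # Allow only this API (any stage, any route) to invoke the function.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"apigw-invoke-{api['ApiId']}",  # hypothetical naming scheme
        Action="lambda:InvokeFunction",
        Principal="apigateway.amazonaws.com",
        SourceArn=f"arn:aws:execute-api:{region}:{account_id}:{api['ApiId']}/*",
    )
    return api["ApiEndpoint"]
```

The returned value corresponds to the default endpoint shown on the console.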

Test the ipfs2s3 Lambda function

You can now validate that you can call the Lambda function through the API gateway endpoint.

Let’s assume that we want to complement our knowledge base with the Amazon Managed Blockchain (AMB) – Ethereum Developer Guide.

- Upload the guide to an IPFS pinning service such as Filebase and record its CID (your CID could be different):

- Call the API endpoint:

You should get a message similar to the following:

"Successfully uploaded amazon-managed-blockchain-ethereum-dev.pdf from CID QmWGTo7gVXvdX2YWJg3hKH5JksXsL5tRBfiKLY9MxRbuLS"

- Check that the file amazon-managed-blockchain-ethereum-dev.pdf has been uploaded to the S3 bucket and that the knowledge base data source has been re-synced.
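To call the endpoint from Python instead of a command line tool, you could use a minimal standard-library sketch like the following. It assumes the function accepts a JSON body with hypothetical cid and filename fields. Adjust these names to whatever ipfs2s3.py actually expects.

```python
import json
import urllib.request


def build_request(endpoint: str, cid: str, filename: str) -> urllib.request.Request:
    """Build a POST request that carries the CID and target file name as JSON."""
    body = json.dumps({"cid": cid, "filename": filename}).encode("utf-8")
    return urllib.request.Request(
        endpoint.rstrip("/") + "/",  # the default route matches any path
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_api(request: urllib.request.Request) -> str:
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        return resp.read().decode("utf-8")
```

HTTP APIs limit integration timeouts to 30 seconds. A large file that takes longer to copy can therefore produce a gateway timeout even if the function itself finishes.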

Additionally, you could query the knowledge base about information that is specifically mentioned in this guide.

Clean up

You can keep the components that you built in this post, because you’ll reuse them in the next post in this series. Alternatively, you can follow the cleanup instructions in Part 4 to delete them.

Conclusion

In Part 3 of this four-part series, we showed how to create two Lambda functions and an API gateway that allowed you to automatically update an Amazon Bedrock knowledge base with data from the IPFS network. In Part 4 of the series, we demonstrate how to create a frontend and use MetaMask to authenticate users with their web3 identity.

About the Authors

Guillaume Goutaudier is a Sr Enterprise Architect at AWS. He helps companies build strategic technical partnerships with AWS. He is also passionate about blockchain technologies, and is a member of the Technical Field Community for blockchain.

Shankar Subramaniam is a Sr Enterprise Architect in the AWS Partner Organization aligned with Strategic Partnership Collaboration and Governance (SPCG) engagements. He is a member of the Technical Field Community for Artificial Intelligence and Machine Learning.
