This post is co-written with David Gildea and Tom Nijs from Druva.

Druva enables cyber, data, and operational resilience for thousands of enterprises, and is trusted by 60 of the Fortune 500. Customers use Druva Data Resiliency Cloud to simplify data protection, streamline data governance, and gain data visibility and insights. Independent software vendors (ISVs) like Druva are integrating AI assistants into their user applications to make software more accessible.

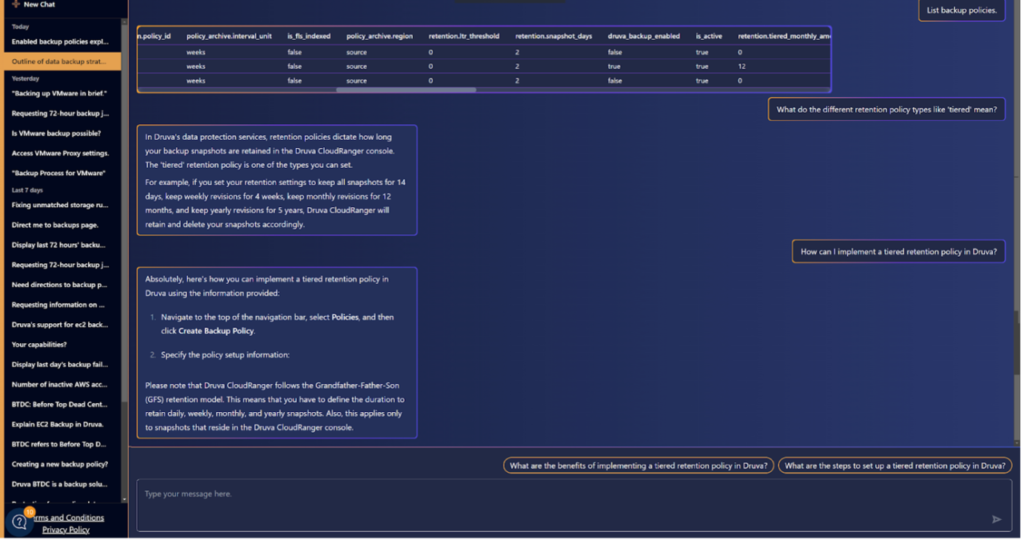

Dru, the Druva backup AI copilot, enables real-time interaction and personalized responses, with users engaging in a natural conversation with the software. From finding inconsistencies and errors across the environment to scheduling backup jobs and setting retention policies, users need only ask and Dru responds. Dru can also recommend actions to improve the environment, remedy backup failures, and identify opportunities to enhance security.

In this post, we show how Druva approached natural language querying (NLQ), that is, asking questions in English and getting tabular data as answers, using Amazon Bedrock. We also cover the challenges they faced, sample prompts, and key learnings.

Use case overview

The following screenshot illustrates the Dru conversation interface.

In a single conversation interface, Dru provides the following:

- Interactive reporting with real-time insights – Users can request data or customized reports without extensive searching or navigating through multiple screens. Dru also suggests follow-up questions to enhance user experience.

- Intelligent responses and a direct conduit to Druva’s documentation – Users can gain in-depth knowledge about product features and functionalities without manual searches or watching training videos. Dru also suggests resources for further learning.

- Assisted troubleshooting – Users can request summaries of top failure reasons and receive suggested corrective measures. Dru on the backend decodes log data, deciphers error codes, and invokes API calls to troubleshoot.

- Simplified admin operations, with increased seamlessness and accessibility – Users can perform tasks like creating a new backup policy or triggering a backup, managed by Druva’s existing role-based access control (RBAC) mechanism.

- Customized website navigation through conversational commands – Users can instruct Dru to navigate to specific website locations, eliminating the need for manual menu exploration. Dru also suggests follow-up actions to speed up task completion.

Challenges and key learnings

In this section, we discuss the challenges and key learnings of Druva’s journey.

Overall orchestration

Originally, we adopted an AI agent approach and relied on the foundation model (FM) to make plans and invoke tools using the reasoning and acting (ReAct) method to answer user questions. However, we found the objective too broad and complicated for the AI agent. The AI agent would take more than 60 seconds to plan and respond to a user question. Sometimes it would even get stuck in a thought-loop, and the overall success rate wasn’t satisfactory.

We decided to move to the prompt chaining approach using a directed acyclic graph (DAG). This approach allowed us to break the problem down into multiple steps:

- Identify the API route.

- Generate and invoke private API calls.

- Generate and run data transformation Python code.

Each step became an independent stream, so our engineers could iteratively develop and evaluate the performance and speed until they worked well in isolation. The workflow also became more controllable by defining proper error paths.
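As a rough illustration of this idea, the following minimal sketch chains three independent steps and stops at a defined error path when one of them fails. The names `run_chain`, `StepError`, and the three stand-in step functions are hypothetical and are not taken from Druva's implementation; real steps would call an FM or an API.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional, Tuple


class StepError(Exception):
    """A failure that the workflow knows how to report (hypothetical)."""


@dataclass
class Result:
    ok: bool
    value: Any = None
    failed_step: Optional[str] = None
    error: Optional[str] = None


Step = Tuple[str, Callable[[Dict[str, Any]], Any]]


def run_chain(question: str, steps: List[Step]) -> Result:
    """Run steps in order; each step reads earlier outputs from a shared context."""
    context: Dict[str, Any] = {"question": question}
    for name, step in steps:
        try:
            context[name] = step(context)
        except StepError as exc:
            # Error path: stop early and report which stream failed.
            return Result(ok=False, failed_step=name, error=str(exc))
    return Result(ok=True, value=context[steps[-1][0]])


# Hypothetical stand-ins for the three streams.
def identify_route(ctx: Dict[str, Any]) -> str:
    return "/v1/backup-jobs"


def call_api(ctx: Dict[str, Any]) -> List[Dict[str, str]]:
    return [{"server": "a"}, {"server": "b"}, {"server": "a"}]


def transform(ctx: Dict[str, Any]) -> Dict[str, int]:
    counts: Dict[str, int] = {}
    for row in ctx["data"]:
        counts[row["server"]] = counts.get(row["server"], 0) + 1
    return counts


result = run_chain(
    "Show me my backup failures grouped by server",
    [("route", identify_route), ("data", call_api), ("table", transform)],
)
print(result)
```

Because each step is a plain callable, it can be developed and evaluated on its own before being wired into the chain.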

Stream 1: Identify the API route

Out of the hundreds of APIs that power Druva products, we needed to match the exact API the application needs to call to answer the user question. For example, “Show me my backup failures for the past 72 hours, grouped by server.” Similar names and synonyms in API routes make this retrieval problem more complex.

Originally, we formulated this task as a retrieval problem. We tried different methods, including k-nearest neighbor (k-NN) search of vector embeddings, BM25 with synonyms, and a hybrid of both across fields including API routes, descriptions, and hypothetical questions. We found that the simplest and most accurate way was to formulate it as a classification task to the FM. We curated a small list of examples in question-API route pairs, which helped improve the accuracy and make the output format more consistent.

Stream 2: Generate and invoke private API calls

Next, we generate an API call with the correct parameters and invoke it. FM hallucination of parameters, particularly those that take a free-form JSON object, is one of the major challenges in the whole workflow. For example, an unsupported key such as server can appear in the generated parameters.

We tried different prompting techniques, such as few-shot prompting and chain of thought (CoT), but the success rate was still unsatisfactory. To make API call generation and invocation more robust, we separated this task into two steps:

- First, we used an FM to generate parameters in a JSON dictionary instead of full API request headers and body.

- Afterwards, we wrote a postprocessing function to remove parameters that didn’t conform to the API schema.

This method provided a successful API invocation, at the expense of getting more data than required for downstream processing.
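To make the trade-off concrete, here is a minimal sketch of such a postprocessing function. The `ALLOWED` schema, the parameter names, and `prune_parameters` are assumptions made up for illustration; they do not describe a real Druva API.

```python
from typing import Any, Dict, Optional, Set

# Hypothetical schema: parameter name -> keys allowed inside its free-form
# JSON object, or None when the parameter is a plain value.
ALLOWED: Dict[str, Optional[Set[str]]] = {
    "hours": None,
    "filter": {"status", "job_type"},
}


def prune_parameters(
    generated: Dict[str, Any],
    allowed: Dict[str, Optional[Set[str]]] = ALLOWED,
) -> Dict[str, Any]:
    """Drop parameters and nested keys that the schema does not define."""
    cleaned: Dict[str, Any] = {}
    for name, value in generated.items():
        if name not in allowed:
            continue  # parameter is not part of the API schema
        allowed_keys = allowed[name]
        if allowed_keys is not None:
            if not isinstance(value, dict):
                continue  # expected a JSON object, got something else
            value = {k: v for k, v in value.items() if k in allowed_keys}
        cleaned[name] = value
    return cleaned


generated = {
    "hours": 72,
    "filter": {"status": "failed", "server": "xyz"},  # "server" is unsupported
    "group_by": "server",                             # not an API parameter
}
print(prune_parameters(generated))
# {'hours': 72, 'filter': {'status': 'failed'}}
```

In this sketch the call succeeds, but the dropped `server` filter means the API returns rows for every server, which is the extra data that the downstream transformation step then has to narrow down.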

Stream 3: Generate and run data transformation Python code

Next, we took the response from the API call and transformed it to answer the user question. For example, “Create a pandas dataframe and group it by server column.” Similar to stream 2, FM hallucination is again an obstacle. Generated code can contain syntax errors, such as confusing PySpark functions with pandas functions.

After trying many different prompting techniques without success, we looked at the reflection pattern, asking the FM to self-correct code in a loop. This improved the success rate at the expense of more FM invocations, which were slower and more expensive. We found that although smaller models are faster and more cost-effective, at times they had inconsistent results. Anthropic’s Claude 2.1 on Amazon Bedrock gave more accurate results on the second try.
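One way to picture this retry flow is a loop that starts with a faster model and, on failure, feeds the error back to a stronger model. The sketch below is only an assumption about how that could look: the model callables are stand-ins rather than Amazon Bedrock calls, and the check only compiles the code instead of running it.

```python
from typing import Callable, List, Optional

Model = Callable[[str], str]  # prompt -> generated code (stand-in for an FM call)


def syntax_error(code: str) -> Optional[str]:
    """Return an error message if the code does not compile, else None."""
    try:
        compile(code, "<generated>", "exec")
        return None
    except SyntaxError as exc:
        return f"{exc.msg} (line {exc.lineno})"


def generate_with_reflection(
    task: str,
    models: List[Model],
    check: Callable[[str], Optional[str]] = syntax_error,
) -> Optional[str]:
    """Try each model in order, passing the previous failure into the next prompt."""
    prompt = task
    for model in models:
        code = model(prompt)
        error = check(code)
        if error is None:
            return code
        prompt = (
            f"{task}\n\nPrevious attempt:\n{code}\n\n"
            f"It failed with: {error}\nReturn corrected code only."
        )
    return None  # every attempt failed; the caller decides on a fallback


# Hypothetical models: the small one returns broken code, the larger one
# returns valid code once it sees the error feedback.
def small_model(prompt: str) -> str:
    return "df.groupBy('server'"


def large_model(prompt: str) -> str:
    return "result = 1 + 1" if "It failed with" in prompt else "oops("


print(generate_with_reflection("Group rows by server", [small_model, large_model]))
```

Ordering the list from cheapest to most capable keeps the common case fast and reserves the slower model for retries.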

Model choices

Druva selected Amazon Bedrock for several compelling reasons, with security and latency being the most important. A key factor in this decision was the seamless integration with Druva’s services. Using Amazon Bedrock aligned naturally with Druva’s existing environment on AWS, maintaining a secure and efficient extension of their capabilities.

Additionally, one of our primary challenges in developing Dru involved selecting the optimal FMs for specific tasks. Amazon Bedrock effectively addresses this challenge with its extensive array of available FMs, each offering unique capabilities. This variety enabled Druva to conduct rapid and comprehensive testing of various FMs and their parameters, facilitating the selection of the most suitable one. The process was streamlined because Druva didn’t need to delve into the complexities of running or managing these diverse FMs, thanks to the robust infrastructure provided by Amazon Bedrock.

Through the experiments, we found that different models performed better in specific tasks. For example, Meta Llama 2 performed better at code generation tasks; Anthropic Claude Instant was good at efficient and cost-effective conversation; and Anthropic Claude 2.1 was good at getting the desired responses in retry flows.

These were the latest models from Anthropic and Meta at the time of this writing.

Solution overview

The following diagram shows how the three streams work together as a single workflow to answer user questions with tabular data.

The following are the steps of the workflow:

1. The authenticated user submits a question to Dru, for example, “Show me my backup job failures for the last 72 hours,” as an API call.

2. The request arrives at the microservice on our existing Amazon Elastic Container Service (Amazon ECS) cluster. This process consists of the following steps:

   a. A classification task using the FM provides the available API routes in the prompt and asks for the one that best matches the user question.

   b. An API parameters generation task using the FM gets the corresponding API swagger, then asks the FM to suggest key-value pairs for the API call that can retrieve data to answer the question.

   c. A custom Python function verifies, formats, and invokes the API call, then passes the data in JSON format to the next step.

   d. A Python code generation task using the FM samples a few records of data from the previous step, then asks the FM to write Python code to transform the data to answer the question.

   e. A custom Python function runs the Python code and returns the answer in tabular format.

To maintain user and system security, we make sure in our design that:

- The FM can’t directly connect to any Druva backend services.

- The FM resides in a separate AWS account and virtual private cloud (VPC) from the backend services.

- The FM can’t initiate actions independently.

- The FM can only respond to questions sent from Druva’s API.

- Normal customer permissions apply to the API calls made by Dru.

- The call to the API (Step 1) is only possible for authenticated users. The authentication component lives outside the Dru solution and is used across other internal solutions.

- To avoid prompt injection, jailbreaking, and other malicious activities, a separate module checks for these before the request reaches this service (Amazon API Gateway in Step 1).

For more details, refer to Druva’s Secret Sauce: Meet the Technology Behind Dru’s GenAI Magic.

Implementation details

In this section, we discuss Steps 2a–2e in the solution workflow.

2a. Look up the API definition

This step uses an FM to perform classification. It takes the user question and a full list of available API routes with meaningful names and descriptions as the input, and responds with the route that best matches the question. The following is a sample prompt:
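As an illustration only, a classification prompt of this kind could be assembled as in the following sketch. The route names, descriptions, example pairs, and prompt wording are hypothetical and are not Druva's actual prompt; `parse_route` additionally rejects any answer that is not one of the known routes.

```python
from typing import Dict, List, Optional, Tuple


def build_route_prompt(
    question: str,
    routes: Dict[str, str],
    examples: List[Tuple[str, str]],
) -> str:
    """Build a classification prompt from the route list and a few examples."""
    route_lines = "\n".join(f"- {route}: {desc}" for route, desc in routes.items())
    example_lines = "\n\n".join(f"Question: {q}\nRoute: {r}" for q, r in examples)
    return (
        "Pick the single API route that best answers the question.\n"
        "Respond with the route only.\n\n"
        f"Available routes:\n{route_lines}\n\n"
        f"Examples:\n{example_lines}\n\n"
        f"Question: {question}\nRoute:"
    )


def parse_route(response: str, routes: Dict[str, str]) -> Optional[str]:
    """Accept the FM answer only if it is one of the known routes."""
    candidate = response.strip()
    return candidate if candidate in routes else None


# Hypothetical routes and question-route pairs.
routes = {
    "/v1/backup-jobs": "List backup jobs with status and timestamps",
    "/v1/retention-policies": "List retention policies",
}
examples = [("Which policies keep data for a year?", "/v1/retention-policies")]

prompt = build_route_prompt("Show me my backup failures", routes, examples)
print(prompt)
print(parse_route(" /v1/backup-jobs\n", routes))
```

Treating the answer as a closed-set label makes it easy to detect a hallucinated route and take an error path instead of calling a nonexistent API.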

2b. Generate the API call

This step uses an FM to generate API parameters. It first looks up the corresponding swagger for the API route (from Step 2a). Next, it passes the swagger and the user question to an FM, which responds with key-value pairs for the API route that can retrieve relevant data. The following is a sample prompt:
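A minimal sketch of this step, under the assumption that the FM is asked for a single JSON object, might look as follows. The prompt wording and the swagger excerpt are hypothetical; `parse_parameters` tolerates extra text around the JSON and returns an empty dictionary when nothing parses.

```python
import json
from typing import Any, Dict


def build_parameter_prompt(question: str, swagger: Dict[str, Any]) -> str:
    """Ask for key-value pairs grounded in the API definition."""
    return (
        "Given this API definition (Swagger excerpt):\n"
        f"{json.dumps(swagger, indent=2)}\n\n"
        "Suggest parameters for the call as one JSON object of key-value "
        "pairs. Use only parameters defined above.\n\n"
        f"Question: {question}\nJSON:"
    )


def parse_parameters(response: str) -> Dict[str, Any]:
    """Extract the first JSON object from the FM response, or {} if none parses."""
    start = response.find("{")
    if start == -1:
        return {}
    try:
        obj, _ = json.JSONDecoder().raw_decode(response[start:])
    except json.JSONDecodeError:
        return {}
    return obj if isinstance(obj, dict) else {}


# Hypothetical swagger excerpt and FM response.
swagger = {"parameters": [{"name": "hours", "in": "query", "type": "integer"}]}
print(build_parameter_prompt("Failures in the last 72 hours?", swagger))
print(parse_parameters('Here you go: {"hours": 72, "status": "failed"} Hope it helps'))
```

Asking only for a parameter dictionary keeps the FM output small and leaves headers, authentication, and request formatting to deterministic code.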

2c. Validate and invoke the API call

In the previous step, even with an attempt to ground responses with swagger, the FM can still hallucinate wrong or nonexistent API parameters. This step uses a programmatic way to verify, format, and invoke the API call to get data. The following is the pseudo code:
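The following sketch shows one possible shape for this programmatic check, assuming Swagger 2.0 style parameter definitions (`name`, `type`, `required`). The `send` callable is a hypothetical stand-in for an HTTP client that calls the API with the user's own credentials; none of these names come from Druva's code.

```python
from typing import Any, Callable, Dict, List

# Casts for simple Swagger 2.0 types; other types are passed through unchanged.
CASTS: Dict[str, Callable[[Any], Any]] = {
    "integer": int,
    "number": float,
    "string": str,
}


def validate_and_invoke(
    route: str,
    generated: Dict[str, Any],
    parameter_specs: List[Dict[str, Any]],
    send: Callable[[str, Dict[str, Any]], Any],
) -> Any:
    """Keep only known parameters, coerce their types, then invoke the API."""
    specs = {spec["name"]: spec for spec in parameter_specs}
    params: Dict[str, Any] = {}
    for name, value in generated.items():
        spec = specs.get(name)
        if spec is None:
            continue  # hallucinated parameter: drop it
        cast = CASTS.get(spec.get("type", ""))
        try:
            params[name] = cast(value) if cast else value
        except (TypeError, ValueError):
            continue  # wrong type: drop rather than send a bad value
    missing = [n for n, s in specs.items() if s.get("required") and n not in params]
    if missing:
        raise ValueError(f"missing required parameters: {missing}")
    return send(route, params)


# Hypothetical spec and a fake transport that records what would be sent.
specs = [
    {"name": "hours", "in": "query", "type": "integer", "required": True},
    {"name": "status", "in": "query", "type": "string"},
]
sent = []


def fake_send(route: str, params: Dict[str, Any]) -> List[Dict[str, str]]:
    sent.append((route, params))
    return [{"server": "a", "status": "failed"}]


data = validate_and_invoke(
    "/v1/backup-jobs",
    {"hours": "72", "server": "xyz", "status": "failed"},
    specs,
    fake_send,
)
print(sent)
```

Raising on a missing required parameter gives the workflow an explicit error path instead of an opaque API failure.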

2d. Generate Python code to transform data

This step uses an FM to generate Python code. It first samples a few records of input data to reduce input tokens. Then it passes the sample data and the user question to an FM, which responds with a Python script that transforms data to answer the question. The following is a sample prompt:
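For illustration, the sampling and prompt assembly could be sketched as below. The prompt wording, the `transform(records)` contract, and the default sample size are assumptions for this example, not Druva's actual prompt.

```python
import json
from typing import Any, Dict, List


def build_transform_prompt(
    question: str,
    records: List[Dict[str, Any]],
    sample_size: int = 3,
) -> str:
    """Show the FM only a few records so the prompt stays small."""
    sample = records[:sample_size]
    return (
        "The variable `records` is a list of dictionaries shaped like this "
        "sample:\n"
        f"{json.dumps(sample, indent=2)}\n\n"
        "Write Python code using pandas that defines `transform(records)` and "
        "returns a DataFrame answering the question. Return code only.\n\n"
        f"Question: {question}"
    )


# Hypothetical API response with 100 rows; only the first 3 reach the prompt.
records = [{"server": f"srv-{i}", "status": "failed"} for i in range(100)]
prompt = build_transform_prompt("Group failures by server", records)
print(prompt)
```

The FM only needs the shape of the data to write the transformation, so the full result set never has to pass through the model.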

2e. Run the Python code

This step involves a Python script, which imports the generated Python package, runs the transformation, and returns the tabular data as the final response. If an error occurs, it will invoke the FM to try to correct the code. When everything fails, it returns the input data. The following is the pseudo code:
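The control flow described here can be sketched as follows. This is a simplified assumption: it runs the generated code with `exec` instead of importing a package, it expects the code to define `transform(records)`, and `fix_code` stands in for the FM call that corrects the code. The demo uses plain Python rather than pandas to stay dependency-free.

```python
import traceback
from typing import Any, Callable, Dict, List

Records = List[Dict[str, Any]]


def run_transform(code: str, records: Records) -> Any:
    """Execute generated code and call the transform() function it defines."""
    namespace: Dict[str, Any] = {}
    # Assumption: generated code is only ever executed inside a sandbox.
    exec(code, namespace)
    return namespace["transform"](records)


def answer(
    code: str,
    records: Records,
    fix_code: Callable[[str, str], str],
    max_fixes: int = 1,
) -> Any:
    """Run the code, ask for a fix on error, and fall back to the input data."""
    for attempt in range(max_fixes + 1):
        try:
            return run_transform(code, records)
        except Exception:
            if attempt == max_fixes:
                break
            # Hypothetical FM call: receives the code and the traceback.
            code = fix_code(code, traceback.format_exc())
    return records  # everything failed: return the untransformed input


records = [{"server": "a"}, {"server": "b"}, {"server": "a"}]
broken = "def transform(records):\n    return records.groupBy('server')\n"
fixed = (
    "from collections import Counter\n"
    "def transform(records):\n"
    "    counts = Counter(r['server'] for r in records)\n"
    "    return [{'server': s, 'failures': n} for s, n in sorted(counts.items())]\n"
)

print(answer(broken, records, lambda code, error: fixed))
# [{'server': 'a', 'failures': 2}, {'server': 'b', 'failures': 1}]
```

Returning the input data as a last resort means the user still sees the relevant rows even when the transformation cannot be produced.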

Conclusion

Using Amazon Bedrock for the solution foundation led to remarkable achievements in accuracy, as evidenced by the following metrics in our evaluations using an internal dataset:

- Stream 1: Identify the API route – Achieved a perfect accuracy rate of 100%

- Stream 2: Generate and invoke private API calls – Maintained this standard with a 100% accuracy rate

- Stream 3: Generate and run data transformation Python code – Attained a highly commendable accuracy of 90%

These results are not just numbers; they are a testament to the robustness and efficiency of the Amazon Bedrock-based solution. With such high levels of accuracy, Druva is now poised to confidently broaden their horizons. Our next goal is to extend this solution to encompass a wider range of APIs across Druva products. The next expansion will scale up usage and substantially enrich the experience of Druva customers. By integrating more APIs, Druva will offer a more seamless, responsive, and contextual interaction with Druva products, further enhancing the value delivered to Druva users.

To learn more about Druva’s AI solutions, visit the Dru solution page, where you can see some of these capabilities in action through recorded demos. Visit the AWS Machine Learning blog to see how other customers are using Amazon Bedrock to solve their business problems.

About the Authors

David Gildea is the VP of Product for Generative AI at Druva. With over 20 years of experience in cloud automation and emerging technologies, David has led transformative projects in data management and cloud infrastructure. As the founder and former CEO of CloudRanger, he pioneered innovative solutions to optimize cloud operations, later leading to its acquisition by Druva. Currently, David leads the Labs team in the Office of the CTO, spearheading R&D into generative AI initiatives across the organization, including projects like Dru Copilot, Dru Investigate, and Amazon Q. His expertise spans technical research, commercial planning, and product development, making him a prominent figure in the field of cloud technology and generative AI.

Tom Nijs is an experienced backend and AI engineer at Druva, passionate about both learning and sharing knowledge. With a focus on optimizing systems and using AI, he’s dedicated to helping teams and developers bring innovative solutions to life.

Corvus Lee is a Senior GenAI Labs Solutions Architect at AWS. He is passionate about designing and developing prototypes that use generative AI to solve customer problems. He also keeps up with the latest developments in generative AI and retrieval techniques by applying them to real-world scenarios.

Fahad Ahmed is a Senior Solutions Architect at AWS and assists financial services customers. He has over 17 years of experience building and designing software applications. He recently found a new passion of making AI services accessible to the masses.
