As digital experiences become increasingly personalized, recommendation systems have become essential in domains from e-commerce to media streaming. Yet they still struggle to simulate users' preferences well enough to make better recommendations. Conventional models do not capture the subtle reasons behind user-item interactions, so they end up producing generalized recommendations. Large language model (LLM) agents face a similar limitation: they act only on basic item descriptions and users' past interactions, without the depth needed to interpret and reason about user preferences. This limited rationale leaves the user profiles that the agents maintain incomplete or insufficiently specific, which makes it difficult for the agents to produce recommendations that are both accurate and context-rich. Effectively modeling such intricate preferences therefore plays an important role in enhancing recommendation accuracy and improving user satisfaction.

Classic methods such as BPR and state-of-the-art deep learning frameworks such as SASRec improve the prediction of user preferences, but they do so in a non-interpretable manner, without any rationale-driven understanding of user behavior. Traditional models rely either on interaction matrices or on simple textual similarity, which severely limits the insight they can offer into user motivation. Deep learning methods are powerful at capturing sequential user interactions but fall short when reasoning is required. LLM-based systems are more capable, yet they rely primarily on item descriptions that do not capture the full rationale behind user preferences. This gap points to the need for a new approach built on a structured, interpretable basis for capturing and simulating complex user-item interactions.

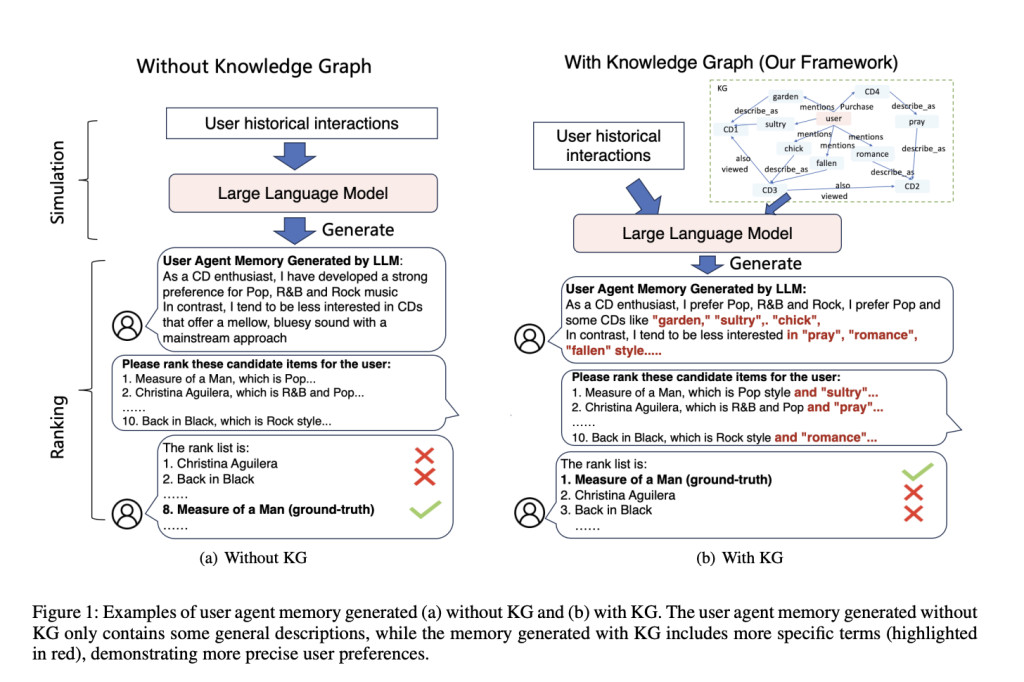

To address these gaps, researchers from the University of Notre Dame and Amazon introduce Knowledge Graph Enhanced Language Agents (KGLA), a framework that enriches language agents with the contextual depth of knowledge graphs (KGs) to simulate more accurate, rationale-based user profiles. In KGLA, KG paths are expressed as natural language descriptions that give the language agents the rationale behind user preferences, making the simulations more meaningful and closer to real-world behavior. KGLA consists of three main modules: Path Extraction, which discovers paths in the KG that connect users and items; Path Translation, which converts these connections into understandable, language-based descriptions; and Path Incorporation, which integrates those descriptions into the agent simulations. Because KGLA uses KG paths to explain user choices, agents can learn fine-grained profiles that reflect user preferences more precisely than previous methods, addressing the limitations of both traditional and language model-based approaches.
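Path Extraction can be pictured as a bounded search over the KG. The sketch below is a minimal illustration under stated assumptions, not the authors' code: the toy triples, the `inverse_` prefix used for reversed edges, and the helper names `build_kg` and `extract_paths` are all hypothetical. It enumerates every simple path of at most three hops from a user to a candidate item using a depth-first search.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Triple = Tuple[str, str, str]             # (head, relation, tail)
Path = List[Triple]                       # a user-to-item path as a list of triples
Graph = Dict[str, List[Tuple[str, str]]]  # node -> [(relation, neighbor), ...]

# Hypothetical toy KG: users, items, a record label, and a keyword.
TOY_TRIPLES: List[Triple] = [
    ("user_1", "purchased", "cd_A"),
    ("cd_A", "produced_by", "label_X"),
    ("cd_B", "produced_by", "label_X"),
    ("user_1", "mentions", "jazz"),
    ("cd_B", "described_as", "jazz"),
]


def build_kg(triples: List[Triple]) -> Graph:
    """Store each triple in both directions so paths can run from user to item.
    The 'inverse_' naming for reversed edges is an assumption of this sketch."""
    graph: Graph = defaultdict(list)
    for head, rel, tail in triples:
        graph[head].append((rel, tail))
        graph[tail].append((f"inverse_{rel}", head))
    return graph


def extract_paths(graph: Graph, user: str, item: str, max_hops: int = 3) -> List[Path]:
    """Depth-first enumeration of simple paths from user to item with <= max_hops edges."""
    results: List[Path] = []

    def dfs(node: str, path: Path, visited: set) -> None:
        if node == item:
            results.append(list(path))  # copy, because `path` is mutated below
            return
        if len(path) == max_hops:
            return
        for rel, nxt in graph.get(node, []):
            if nxt in visited:
                continue  # keep paths simple (no repeated nodes)
            visited.add(nxt)
            path.append((node, rel, nxt))
            dfs(nxt, path, visited)
            path.pop()
            visited.remove(nxt)

    dfs(user, [], {user})
    return results


if __name__ == "__main__":
    kg = build_kg(TOY_TRIPLES)
    for p in extract_paths(kg, "user_1", "cd_B"):
        print(f"{len(p)}-hop:", p)
```

On the toy graph this finds one 2-hop path (through the shared keyword `jazz`) and one 3-hop path (through the shared label `label_X`). Exhaustive search grows quickly with graph size, so a real system would likely need to cap or sample paths.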

In the paper, KGLA is evaluated on three benchmark recommendation datasets, each paired with a structured knowledge graph containing entities such as users, items, and product features, and relations such as "produced by" or "belongs to." For every user-item pair, the Path Extraction module retrieves 2-hop and 3-hop paths that capture detailed preference information. These paths are then converted into natural language descriptions that are much shorter than the raw paths, reducing token length by about 60% for 2-hop paths and by up to 98% for 3-hop paths. As a result, the language model can process the descriptions in a single pass without exceeding its token limit. Path Incorporation embeds these descriptions directly into the user-agent profiles, enriching the simulations with both positive and negative samples to build well-rounded profiles. This structure lets user agents make preference-based selections backed by detailed rationales and refine their profiles across interactions with items that differ in their attributes.
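Path Translation and Path Incorporation can be illustrated in the same toy setting. The article does not spell out how the descriptions become shorter, so the sketch below assumes one plausible mechanism: merging 3-hop paths that share the same bridging attribute into a single sentence instead of spelling out every triple. The relation phrases, the `naive_text`, `translate_3hop`, and `build_update_prompt` helpers, and the prompt wording are hypothetical and not taken from the paper, and the toy example does not reproduce the reported reductions.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Triple = Tuple[str, str, str]
Path = List[Triple]

# Hypothetical verb phrases for KG relations; KGLA's actual templates may differ.
PHRASES: Dict[str, str] = {
    "produced_by": "is produced by",
    "belongs_to": "belongs to",
}

# Two toy 3-hop paths: user -> purchased item -> shared label -> candidate.
TOY_PATHS: List[Path] = [
    [("user_1", "purchased", "cd_A"), ("cd_A", "produced_by", "label_X"),
     ("label_X", "inverse_produced_by", "cd_B")],
    [("user_1", "purchased", "cd_C"), ("cd_C", "produced_by", "label_X"),
     ("label_X", "inverse_produced_by", "cd_B")],
]


def naive_text(paths: List[Path]) -> str:
    """Verbose baseline: spell out every triple of every path."""
    return " ".join("; ".join(f"{h} {r} {t}" for h, r, t in p) + "." for p in paths)


def translate_3hop(paths: List[Path]) -> List[str]:
    """Assumed compaction: merge 3-hop paths (user -> history item -> attribute
    -> candidate) that share the same candidate, relation, and attribute."""
    groups: Dict[Tuple[str, str, str], List[str]] = defaultdict(list)
    for (_, _, hist_item), (_, rel, attr), (_, _, candidate) in paths:
        groups[(candidate, rel, attr)].append(hist_item)
    sentences = []
    for (candidate, rel, attr), hist_items in groups.items():
        phrase = PHRASES.get(rel, rel.replace("_", " "))
        sentences.append(f"{candidate} {phrase} {attr}, like the previously "
                         f"purchased {', '.join(hist_items)}.")
    return sentences


def build_update_prompt(profile: str, pos_item: str, neg_item: str,
                        pos_facts: List[str], neg_facts: List[str]) -> str:
    """Hypothetical incorporation step: place the translated paths for a
    positive and a negative sample into a profile-update prompt."""
    return "\n".join([
        f"Current user profile: {profile}",
        f"The user chose {pos_item} over {neg_item}.",
        "Knowledge about the chosen item: " + " ".join(pos_facts),
        "Knowledge about the rejected item: " + (" ".join(neg_facts) or "none"),
        "Update the profile to explain this choice.",
    ])


if __name__ == "__main__":
    compact = translate_3hop(TOY_PATHS)
    print(len(naive_text(TOY_PATHS).split()), "->", len(" ".join(compact).split()), "words")
    print(build_update_prompt("Enjoys jazz CDs.", "cd_B", "cd_Z", compact, []))
```

Here two 3-hop paths (18 words when spelled out) collapse into one 11-word sentence; under this scheme, the more paths share a bridging entity, the larger the saving.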

KGLA achieves substantial improvements over existing models on all tested datasets, including a 95.34% gain in NDCG@1 on the CDs dataset. The authors attribute these gains to the enriched user-agent profiles: adding KG paths lets agents better simulate real-world user behavior by supplying interpretable rationales for preferences. Accuracy also increases incrementally as 2-hop and then 3-hop KG paths are included, indicating that the multi-hop approach improves recommendation precision, especially in scenarios with sparse data or complex user interactions.
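For readers unfamiliar with the metric: NDCG@k discounts each relevant item by the logarithm of its rank, DCG@k = Σ rel_i / log2(i + 1), and normalizes by the best achievable DCG. Assuming binary relevance and a single positive item per user's candidate list (a common setup; the paper's exact evaluation protocol may differ), NDCG@1 reduces to the fraction of users whose top-ranked item is the positive one. The sketch computes NDCG@k and the relative gain; the rankings are made-up values, not results from the paper.

```python
import math
from typing import List, Sequence, Set


def ndcg_at_k(ranked: Sequence[str], relevant: Set[str], k: int) -> float:
    """Binary-relevance NDCG@k: DCG of the ranking divided by the ideal DCG."""
    # Ranks are 1-based, so 0-based position i is discounted by log2(i + 2).
    dcg = sum(1.0 / math.log2(i + 2)
              for i, item in enumerate(ranked[:k]) if item in relevant)
    ideal_hits = min(len(relevant), k)
    idcg = sum(1.0 / math.log2(i + 2) for i in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0


def mean_ndcg(rankings: List[List[str]], targets: List[str], k: int) -> float:
    """Average NDCG@k over users, each with exactly one held-out positive item."""
    scores = [ndcg_at_k(r, {t}, k) for r, t in zip(rankings, targets)]
    return sum(scores) / len(scores)


def relative_gain(new: float, base: float) -> float:
    """Relative improvement in percent, e.g. 0.5 vs 0.25 -> 100.0."""
    return (new - base) / base * 100.0


if __name__ == "__main__":
    # Invented rankings for four users; "x" is a non-relevant candidate.
    targets = ["i1", "i2", "i3", "i4"]
    baseline = [["i1", "x"], ["x", "i2"], ["x", "i3"], ["x", "i4"]]
    improved = [["i1", "x"], ["i2", "x"], ["x", "i3"], ["x", "i4"]]
    base = mean_ndcg(baseline, targets, k=1)  # 0.25
    new = mean_ndcg(improved, targets, k=1)   # 0.5
    print(f"NDCG@1 {base:.2f} -> {new:.2f}, gain {relative_gain(new, base):.2f}%")
```

Under this definition, a 95.34% relative gain means the new NDCG@1 is roughly 1.95 times the baseline value.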

In summary, KGLA represents a novel approach to recommendation systems by combining structured knowledge from knowledge graphs with language-based simulation agents to enrich user-agent profiles with meaningful rationales. The framework’s components—Path Extraction, Path Translation, and Path Incorporation—work cohesively to enhance recommendation accuracy, outperforming traditional and LLM-based methods on benchmark datasets. By introducing interpretability into user preference modeling, KGLA offers a robust foundation for the development of rationale-driven recommendation systems, moving the field closer to personalized, context-rich digital interactions.

Check out the Paper. All credit for this research goes to the researchers of this project.


The post Knowledge Graph Enhanced Language Agents (KGLA): A Machine Learning Framework that Unifies Language Agents and Knowledge Graph for Recommendation Systems appeared first on MarkTechPost.

