This is a guest post by Paulo Ruy, Vagner Chagas, Database Engineers at Dafiti; along with Lucas Ferrari, Solutions Architect at AWS, and Sandro Mendes, Technical Account Manager at AWS.

In the dynamic world of digital retail, performance, resilience, and availability are not merely desirable qualities; they are essential. Recently, Dafiti, a leading fashion and lifestyle ecommerce conglomerate operating in Brazil, Argentina, Chile, and Colombia, undertook a significant transformation of its critical database infrastructure by migrating from self-managed MySQL Server 5.7 on Amazon Elastic Compute Cloud (Amazon EC2) to Amazon Aurora MySQL-Compatible Edition. This strategic move improved the resiliency and efficiency of its database operations.

At Dafiti, the decision to migrate to Amazon Aurora was driven by several critical business needs. The core database, which handles all purchasing transactions for digital platforms and businesses, needs to be scalable and highly resilient to support ongoing and future business growth. As a 100% digital company, Dafiti needed a database solution that could deliver transaction performance, provide high availability, and increase operational efficiency.

The architectural redesign decoupled the application layer from the database layer, with the primary goal of streamlining database management tasks. It also took advantage of features such as automatic failover and read replicas to improve reliability and optimize performance at the database layer.

In this post, we show you why we chose Aurora MySQL-Compatible and how we migrated our critical database infrastructure.

The migration strategy

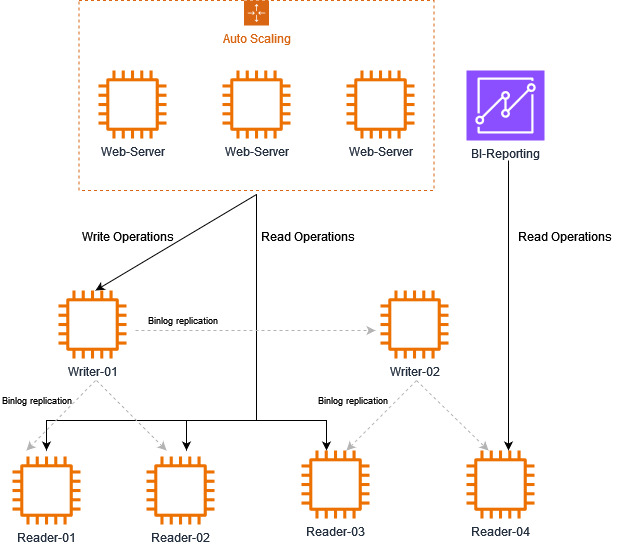

To understand the challenges of migration, let’s review the previous MySQL architecture running on Amazon EC2, as shown in the following figure.

The architecture consisted of the following key components:

- Writer-01 – Used for processing write traffic, including transactions from the ecommerce platform, and replicating data to other database nodes

- Writer-02 – Hot standby, used for manual failover of Writer-01, in a different Availability Zone

- Reader-01, Reader-02, Reader-03 – Used for read transactions from shopping platforms

- Reader-04 – Used for business intelligence (BI) workloads, monitoring, and observability

The existing database environment on EC2 instances faced numerous obstacles. As a self-managed database, it offered no easy way to scale or to gain visibility through monitoring. Database management tasks had to be performed manually, and some of them were complex and time-consuming. During critical situations, promptly pinpointing and addressing bottlenecks was challenging because it was difficult to maintain an adequate performance baseline. Furthermore, the effort required to maintain and manage this environment became increasingly unsustainable.

Given these challenges, we looked for a solution that could simplify database management tasks while offering advanced features like automatic failover and read replicas for improved reliability and performance. Amazon Aurora MySQL emerged as a promising option because it provides these capabilities natively. We decided to test Aurora MySQL to evaluate its potential to meet our critical business requirements.

The migration to Aurora MySQL was planned and deployed in three phases to allow for full testing and validation:

- Creating a hybrid environment – This process involved three key steps:

  - We used Percona XtraBackup to perform an initial backup to Amazon Simple Storage Service (Amazon S3). For larger databases, this option is faster than migrating data with mysqldump.

  - We created a new Aurora MySQL cluster by restoring from Amazon S3. This option is available in the Amazon Relational Database Service (Amazon RDS) console, or through the AWS Command Line Interface (AWS CLI) with the restore-db-cluster-from-s3 command.

  - We established asynchronous binary log replication from the MySQL database on Amazon EC2 to Aurora, keeping the two in near-real-time sync while preserving the existing MySQL configuration. During this process, we validated the data to confirm consistency and accuracy between the source and target databases. Validation included row counts, in which we compared the total number of rows in corresponding tables between the source and target databases by running a simple SELECT COUNT(*) query on each table. It also included data sampling, in which we selected a subset of data from both databases and compared them to confirm that they matched. Additionally, we continuously monitored binary log replication lag metrics to assess the performance of the replication setup, and replication lag was consistently kept under 1 second.

- Redirecting read traffic – For the first 20 days, read transactions were redirected to Aurora reader nodes using custom endpoints, while writes continued to go to the MySQL database on Amazon EC2. This allowed Dafiti to monitor the performance and behavior of applications such as analytics and observability tools. This strategy allowed the technology team to demonstrate improvements in performance and stability and to gain confidence before moving on to the last stage.

- Redirecting write traffic – Within the planned maintenance window, the write transactions were redirected to the Aurora MySQL cluster. The migration was completed with minimal interruption time for the digital platforms (website and mobile apps).
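The row-count and sampling checks from the hybrid-environment step can be sketched in Python as follows. This is a minimal illustration, not Dafiti's tooling: it uses in-memory SQLite databases (Python's standard sqlite3 module) as stand-ins for the MySQL source and the Aurora target, and the orders table, its id key, and all helper names are hypothetical. With real MySQL connections the same SELECT COUNT(*) and keyed sampling queries apply, although many MySQL drivers use %s rather than ? as the placeholder. The lag_ok helper mirrors the under-1-second target, treating an unknown lag as a failure because MySQL reports NULL for Seconds_Behind_Master when replication is not running.

```python
import sqlite3


def row_counts(conn, tables):
    """Run SELECT COUNT(*) on each table and return {table: row_count}."""
    counts = {}
    for table in tables:
        # Table names come from a trusted, hard-coded list, never from user input.
        (count,) = conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()
        counts[table] = count
    return counts


def sample_rows(conn, table, key, sample_keys):
    """Fetch the rows whose primary key is in sample_keys, ordered by that key."""
    if not sample_keys:
        return []
    placeholders = ", ".join("?" for _ in sample_keys)
    query = f"SELECT * FROM {table} WHERE {key} IN ({placeholders}) ORDER BY {key}"
    return conn.execute(query, list(sample_keys)).fetchall()


def validate(source, target, tables, key, sample_keys):
    """Compare row counts and a sample of rows; return a list of mismatches."""
    problems = []
    src_counts = row_counts(source, tables)
    tgt_counts = row_counts(target, tables)
    for table in tables:
        if src_counts[table] != tgt_counts[table]:
            problems.append(
                f"{table}: row count {src_counts[table]} != {tgt_counts[table]}"
            )
        src_sample = sample_rows(source, table, key, sample_keys)
        tgt_sample = sample_rows(target, table, key, sample_keys)
        if src_sample != tgt_sample:
            problems.append(f"{table}: sampled rows differ")
    return problems


def lag_ok(lag_seconds, threshold=1.0):
    """True when replication lag is known and below the threshold (1 s by default).

    None stands for MySQL reporting NULL lag, i.e. replication not running.
    """
    return lag_seconds is not None and lag_seconds < threshold


def make_db(rows):
    """Build an in-memory stand-in database with a hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    return conn


if __name__ == "__main__":
    source = make_db([(1, 10.0), (2, 20.0), (3, 30.0)])
    target = make_db([(1, 10.0), (2, 20.0), (3, 30.0)])
    print(validate(source, target, ["orders"], "id", [1, 3]))  # []
    target.execute("DELETE FROM orders WHERE id = 3")
    print(validate(source, target, ["orders"], "id", [1, 3]))
    print(lag_ok(0.4), lag_ok(None))  # True False
```

Sampling by primary key keeps the comparison deterministic; in practice the sample keys would be drawn across the key range of each large table rather than hard-coded.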

The following figure represents the hybrid architecture used during the migration, with write operations in MySQL running on Amazon EC2 and read operations in Aurora MySQL.

The following figure represents the final architecture in Aurora after migration, with 100% of writes and reads in Aurora MySQL.

In the custom endpoints layer, we fine-tuned the configuration to achieve the desired failover behavior, making sure the application's availability and scalability requirements were met. We used failover tiers to set the failover priority, assigning failover tier 0 (the highest priority) to both Aurora MySQL Writer-1 and Aurora MySQL Reader-1. Additionally, we created custom endpoints to separate the BI-Reporting workload from the read operations of the web servers.
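Aurora chooses which replica to promote using its promotion tier, where 0 is the highest priority and 15 the lowest. Among the replicas in the best tier, it prefers the largest instance, and if several share both tier and size it promotes an arbitrary one of them. The following Python sketch models that rule so you can sanity-check a tier layout such as the one described here. It is a simplified model, not Aurora's implementation, and size_rank is a hypothetical stand-in for instance class size. In practice, the tier is set per instance, for example with the --promotion-tier option of the aws rds modify-db-instance command.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replica:
    name: str
    promotion_tier: int  # 0 (highest priority) through 15 (lowest priority)
    size_rank: int  # hypothetical: a larger number means a larger instance class


def failover_candidates(replicas):
    """Return the sorted names of the replicas Aurora might promote.

    Lowest tier number wins; ties go to the largest instance; if several
    replicas still tie, Aurora promotes an arbitrary one, so all are returned.
    """
    if not replicas:
        return []
    best_tier = min(r.promotion_tier for r in replicas)
    in_tier = [r for r in replicas if r.promotion_tier == best_tier]
    largest = max(r.size_rank for r in in_tier)
    return sorted(r.name for r in in_tier if r.size_rank == largest)


if __name__ == "__main__":
    # Hypothetical layout: while Writer-1 is the writer, Reader-1 is the only tier-0 replica.
    readers = [
        Replica("reader-1", 0, 2),
        Replica("reader-2", 1, 2),
        Replica("reader-3", 1, 2),
        Replica("bi-reader", 15, 3),
    ]
    print(failover_candidates(readers))  # ['reader-1']
```

Giving the writer and one specific reader the same top tier makes the failover target predictable, and once the old writer rejoins as a reader it is again a tier-0 candidate.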

Each custom endpoint has an associated type that determines which DB instances are eligible to be associated with that endpoint. As of this writing, the type can be READER or ANY.

When the endpoint type is set to READER, the endpoint membership is automatically adjusted during failovers and promotions. Therefore, if a reader node is promoted to writer, it is no longer part of that custom endpoint, and the previous writer node, now a reader, becomes part of that custom endpoint.
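The membership rule for a READER custom endpoint can be modeled in a few lines of Python. This is a simplified sketch, not Aurora's implementation: it assumes the endpoint is defined either by a static member list or by an excluded-member list, that only instances currently acting as readers are eligible, and it uses hypothetical instance names. Recomputing membership after a failover shows the promoted reader dropping out and the former writer joining.

```python
def reader_endpoint_members(writer, instances, static=(), excluded=()):
    """Return the sorted members of a READER-type custom endpoint.

    Only readers (every instance except the current writer) are eligible.
    A non-empty static list wins; otherwise all readers not excluded are members.
    """
    readers = set(instances) - {writer}
    if static:
        return sorted(readers & set(static))
    return sorted(readers - set(excluded))


if __name__ == "__main__":
    cluster = ["writer-1", "reader-1", "reader-2", "reader-3", "bi-reader"]
    # Web endpoint before failover: every reader except the hypothetical BI replica.
    print(reader_endpoint_members("writer-1", cluster, excluded={"bi-reader"}))
    # ['reader-1', 'reader-2', 'reader-3']
    # After reader-1 is promoted, it leaves the endpoint and writer-1 joins it.
    print(reader_endpoint_members("reader-1", cluster, excluded={"bi-reader"}))
    # ['reader-2', 'reader-3', 'writer-1']
```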

In our case, the custom endpoint was set up with the READER type, and we excluded read replicas from the custom endpoint used for reporting, to isolate its read operations from those of the ecommerce platform.
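One way to express this kind of setup with the AWS CLI is sketched below in Python, which only assembles and prints aws rds create-db-cluster-endpoint commands rather than calling AWS. The cluster and instance identifiers are hypothetical, and the split shown (the web servers' endpoint excludes a BI replica, while the BI-Reporting endpoint lists it as a static member) is an assumed configuration, not necessarily Dafiti's exact one. The --db-cluster-identifier, --db-cluster-endpoint-identifier, --endpoint-type, --static-members, and --excluded-members options belong to that CLI command; excluded members only apply when the static list is empty, so the sketch rejects the ambiguous combination.

```python
import shlex


def create_reader_endpoint_cmd(cluster_id, endpoint_id, *, static=(), excluded=()):
    """Build an `aws rds create-db-cluster-endpoint` argv for a READER endpoint."""
    if static and excluded:
        # Excluded members are only relevant when there are no static members.
        raise ValueError("use either static or excluded members, not both")
    cmd = [
        "aws", "rds", "create-db-cluster-endpoint",
        "--db-cluster-identifier", cluster_id,
        "--db-cluster-endpoint-identifier", endpoint_id,
        "--endpoint-type", "READER",
    ]
    if static:
        cmd += ["--static-members", *static]
    if excluded:
        cmd += ["--excluded-members", *excluded]
    return cmd


if __name__ == "__main__":
    # Hypothetical identifiers for illustration only.
    web = create_reader_endpoint_cmd(
        "my-aurora-cluster", "web-readers", excluded=["bi-reader"]
    )
    bi = create_reader_endpoint_cmd(
        "my-aurora-cluster", "bi-reporting", static=["bi-reader"]
    )
    for cmd in (web, bi):
        print(shlex.join(cmd))
```

Using an exclusion list for the web servers means any new reader added later (for example, by auto scaling) joins the web endpoint automatically, while the BI endpoint stays pinned to its static member.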

Results

The benefits of migrating to Aurora MySQL were immediate:

- Automatic failover in up to 30 seconds. Previously, the manual process took 30 minutes.

- The auto scaling capability of Amazon Aurora improved the availability and scalability of the database environment, dynamically adjusting resources to accommodate fluctuating workloads without compromising performance or uptime.

- Most maintenance routines for the environment were carried out online and automatically.

- The creation of new Aurora reader nodes was drastically accelerated, reducing the time required from several hours on self-managed MySQL to a fraction of that duration on Aurora MySQL.

- Performance Insights and Enhanced Monitoring have significantly improved the observability of the environment by providing detailed insights into performance bottlenecks and comprehensive metrics.

- Future upgrades can use Amazon RDS Blue/Green Deployments, which lower risk and simplify rollback.

- Right-sizing instances: Amazon Aurora on AWS Graviton3 delivered 30% better performance compared with our MySQL on Amazon EC2, which was deployed on Graviton2 instances.
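To put the failover improvement in perspective: the previous manual process took 30 minutes, and Aurora's automatic failover takes up to 30 seconds, so the reduction is at least sixty-fold.

```latex
\frac{30\ \text{min}}{30\ \text{s}} = \frac{1800\ \text{s}}{30\ \text{s}} = 60
```

Because 30 seconds is an upper bound for the Aurora failover, 60 is a lower bound on the speedup.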

Conclusion

The success of this migration project has motivated Dafiti to expedite the transition of other databases from Amazon EC2 to Amazon Aurora MySQL. Encouraged by the positive outcomes, we plan to apply a similar strategic approach to migrate additional database workloads, leveraging the lessons learned and best practices established during this initial migration.

Do you want to know more about what we’re doing in the data area at Dafiti? Check out the following resources:

- Migrate Amazon Redshift from DC2 to RA3 to accommodate increasing data volumes and analytics demands

- How Dafiti made Amazon QuickSight its primary data visualization tool

- (Portuguese) AWS Summit SP 2022 – Dafiti, habilitando uma Empresa Data Driven na AWS

- (Portuguese) Caso de sucesso: Dafiti reduz custos em 70% e otimiza tomadas de decisões com dados na nuvem da AWS

About the Authors

Paulo Ruy is a LATAM Database Reliability Engineer at Dafiti, responsible for maintaining, designing, and sustaining all relational and nonrelational databases using AWS solutions and partners.

Vagner Chagas is a LATAM Database Reliability Engineer at Dafiti, responsible for maintaining, designing, and sustaining all relational and nonrelational databases using AWS solutions and partners.

Lucas Ferrari is an expert database solutions architect at AWS, where he helps customers design and implement cloud database solutions. He has been working with databases since 2008 and his main specializations are Amazon DynamoDB and Amazon Aurora.

Sandro Mendes is a Technical Account Manager at AWS, with experience in IT management and project management. He works with AWS Enterprise Support customers, supporting them on their cloud journey.
