Amazon SageMaker Ground Truth enables the creation of high-quality, large-scale training datasets, essential for fine-tuning across a wide range of applications, including large language models (LLMs) and generative AI. By integrating human annotators with machine learning, SageMaker Ground Truth significantly reduces the cost and time required for data labeling. Whether it’s annotating images, videos, or text, SageMaker Ground Truth allows you to build accurate datasets while maintaining human oversight and feedback at scale. This human-in-the-loop approach is crucial for aligning foundation models with human preferences, enhancing their ability to perform tasks tailored to your specific requirements.

To support various labeling needs, SageMaker Ground Truth provides built-in workflows for common tasks like image classification, object detection, and semantic segmentation. It also lets you create custom workflows, in which you design your own UI templates for specialized data labeling tasks.

Previously, setting up a custom labeling job required specifying two AWS Lambda functions: a pre-annotation function, which is run on each dataset object before it’s sent to workers, and a post-annotation function, which is run on the annotations of each dataset object and consolidates multiple worker annotations if needed. Although these functions offer valuable customization capabilities, they also add complexity for users who don’t require additional data manipulation. In these cases, you would have to write functions that merely returned your input unchanged, increasing development effort and the potential for errors when integrating the Lambda functions with the UI template and input manifest file.

Today, we’re pleased to announce that you no longer need to provide pre-annotation and post-annotation Lambda functions when creating custom SageMaker Ground Truth labeling jobs. These functions are now optional on both the SageMaker console and the CreateLabelingJob API. This means you can create custom labeling workflows more efficiently when you don’t require extra data processing.

In this post, we show you how to set up a custom labeling job without Lambda functions using SageMaker Ground Truth. We guide you through configuring the workflow using a multimodal content evaluation template, explain how it works without Lambda functions, and highlight the benefits of this new capability.

Solution overview

When you omit the Lambda functions in a custom labeling job, the workflow simplifies:

- No pre-annotation function – The data from the input manifest file is inserted directly into the UI template. You can reference the data object fields in your template without needing a Lambda function to map them.

- No post-annotation function – Each worker’s annotation is saved directly to your specified Amazon Simple Storage Service (Amazon S3) bucket as an individual JSON file, with the annotation stored under a worker-response key. Without a post-annotation Lambda function, the output manifest file references these worker response files instead of including all annotations directly within the manifest.

In the following sections, we walk through how to set up a custom labeling job without Lambda functions using a multimodal content evaluation template, which allows you to evaluate model-generated descriptions of images. Annotators can review an image, a prompt, and the model’s response, then evaluate the response based on criteria such as accuracy, relevance, and clarity. This provides crucial human feedback for fine-tuning models using Reinforcement Learning from Human Feedback (RLHF) or evaluating LLMs.

Prepare the input manifest file

To set up our labeling job, we begin by preparing the input manifest file that the template will use. The input manifest is a JSON Lines file where each line represents a dataset item to be labeled. Each line contains a source field for embedded data or a source-ref field for references to data stored in Amazon S3. These fields are used to provide the data objects that annotators will label. For detailed information on the input manifest file structure, refer to Input manifest files.

For our specific task—evaluating model-generated descriptions of images—we structure the input manifest to include the following fields:

- “source” – The prompt provided to the model

- “image” – The S3 URI of the image associated with the prompt

- “modelResponse” – The model’s generated description of the image

By including these fields, we’re able to present both the prompt and the related data directly to the annotators within the UI template. This approach eliminates the need for a pre-annotation Lambda function because all necessary information is readily accessible in the manifest file.

The following code is an example of what a line in our input manifest might look like:
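
As a minimal sketch, a manifest line with the three fields described above could be produced in Python as shown here. The bucket name, image key, prompt, and response text are hypothetical placeholders, and the helper names `build_manifest_line` and `write_manifest` are illustrative only.

```python
import json


def build_manifest_line(prompt: str, image_s3_uri: str, model_response: str) -> str:
    """Serialize one dataset item as a single JSON Lines record."""
    record = {
        "source": prompt,                 # the prompt given to the model
        "image": image_s3_uri,            # S3 URI of the image for this prompt
        "modelResponse": model_response,  # the model's description of the image
    }
    # json.dumps emits no raw newlines, so each record stays on one line.
    return json.dumps(record)


def write_manifest(items, path: str) -> None:
    """Write (prompt, image_s3_uri, model_response) tuples as a JSON Lines file."""
    with open(path, "w", encoding="utf-8") as manifest:
        for prompt, image_s3_uri, model_response in items:
            manifest.write(build_manifest_line(prompt, image_s3_uri, model_response) + "\n")


# Hypothetical example values, for illustration only.
example_line = build_manifest_line(
    "Describe the image in one sentence.",
    "s3://amzn-s3-demo-bucket/images/dog.jpg",
    "A brown dog runs across a grassy field.",
)
print(example_line)
```

Each call to `build_manifest_line` yields one line of the manifest; `write_manifest` writes one such line per dataset item to a file that you can then upload to Amazon S3.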

Insert the prompt in the UI template

In your UI template, you can insert the prompt using {{ task.input.source }}, display the image using an <img> tag with src="{{ task.input.image | grant_read_access }}" (the grant_read_access Liquid filter provides the worker with access to the S3 object), and show the model’s response with {{ task.input.modelResponse }}. Annotators can then evaluate the model’s response based on predefined criteria, such as accuracy, relevance, and clarity, using tools like sliders or text input fields for additional comments. You can find the complete UI template for this task in our GitHub repository.
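
To make the placeholders concrete, the following sketch stores a stripped-down template as a Python string and writes it to a local file for upload. It is not the complete template from the repository: the slider names, ranges, and layout are assumptions chosen for illustration, and `save_template` is a hypothetical helper.

```python
from pathlib import Path

# Minimal illustrative template. The three criteria and the 1-5 range are assumptions.
TEMPLATE = """\
<script src="https://assets.crowd.aws/crowd-html-elements.js"></script>
<crowd-form>
  <p><strong>Prompt:</strong> {{ task.input.source }}</p>
  <img src="{{ task.input.image | grant_read_access }}" alt="Image to evaluate" style="max-width: 100%">
  <p><strong>Model response:</strong> {{ task.input.modelResponse }}</p>

  <p>Accuracy (1-5)</p>
  <crowd-slider name="accuracy" min="1" max="5" step="1" pin="true" required></crowd-slider>
  <p>Relevance (1-5)</p>
  <crowd-slider name="relevance" min="1" max="5" step="1" pin="true" required></crowd-slider>
  <p>Clarity (1-5)</p>
  <crowd-slider name="clarity" min="1" max="5" step="1" pin="true" required></crowd-slider>

  <crowd-text-area name="comments" placeholder="Optional comments"></crowd-text-area>
</crowd-form>
"""


def save_template(path: str) -> Path:
    """Write the template to disk so it can be uploaded to the console or to S3."""
    target = Path(path)
    target.write_text(TEMPLATE, encoding="utf-8")
    return target
```

The field names after `task.input.` match the keys in each manifest line, which is why no pre-annotation function is needed to map them.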

Create the labeling job on the SageMaker console

To configure the labeling job using the AWS Management Console, complete the following steps:

- On the SageMaker console, under Ground Truth in the navigation pane, choose Labeling job.

- Choose Create labeling job.

- Specify your input manifest location and output path.

- Select Custom as the task type.

- Choose Next.

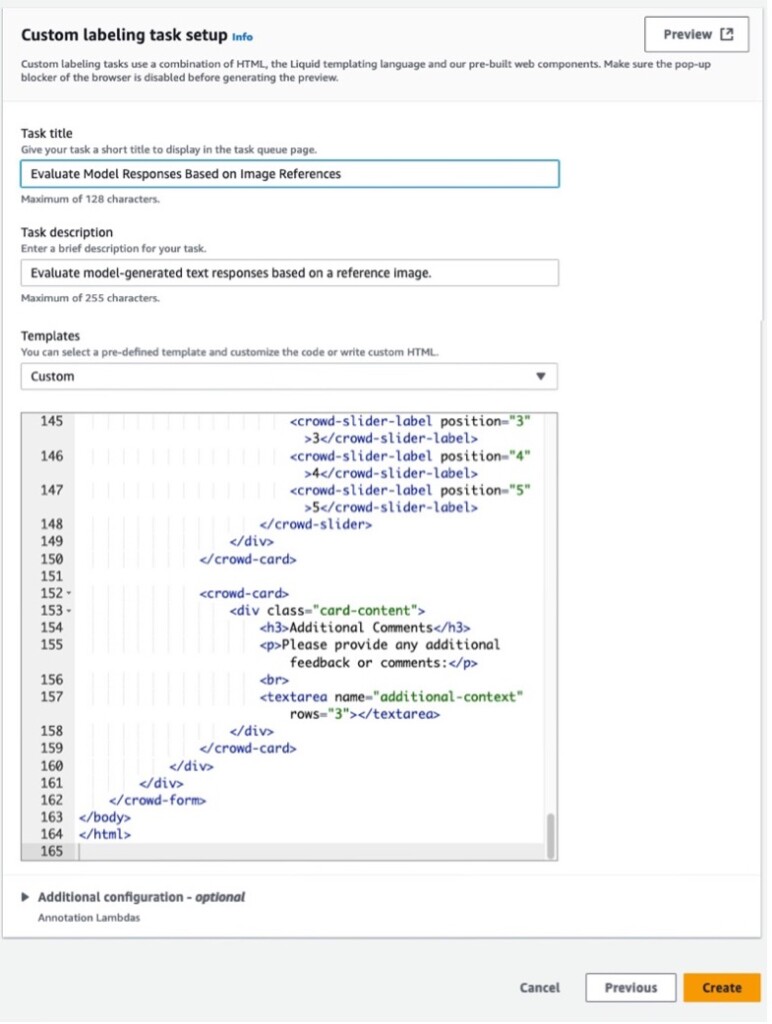

- Enter a task title and description.

- Under Template, upload your UI template.

The annotation Lambda functions are now an optional setting under Additional configuration.

- Choose Preview to display the UI template for review.

- Choose Create to create the labeling job.

Create the labeling job using the CreateLabelingJob API

You can also create the custom labeling job programmatically by using the AWS SDK to invoke the CreateLabelingJob API. After uploading the input manifest file and UI template to an S3 bucket and setting up a work team, you can define your labeling job in code, omitting the Lambda function parameters if they’re not needed. This section uses Python and Boto3.

In the API, the pre-annotation Lambda function is specified using the PreHumanTaskLambdaArn parameter within the HumanTaskConfig structure. The post-annotation Lambda function is specified using the AnnotationConsolidationLambdaArn parameter within the AnnotationConsolidationConfig structure. With the recent update, both PreHumanTaskLambdaArn and AnnotationConsolidationConfig are now optional. This means you can omit them if your labeling workflow doesn’t require additional data preprocessing or postprocessing.

The following code is an example of how to create a labeling job without specifying the Lambda functions:
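
A minimal sketch of such a request follows. The job name, S3 URIs, ARNs, label attribute name, task title, and time limit are all hypothetical placeholders, and `build_labeling_job_request` and `create_labeling_job` are illustrative helper names. The request is built separately from the API call so that it can be inspected without AWS credentials.

```python
def build_labeling_job_request(job_name, manifest_s3_uri, output_s3_uri,
                               template_s3_uri, role_arn, workteam_arn):
    """Build CreateLabelingJob arguments with no annotation Lambda functions."""
    return {
        "LabelingJobName": job_name,
        "LabelAttributeName": "evaluation",  # hypothetical attribute name
        "InputConfig": {
            "DataSource": {"S3DataSource": {"ManifestS3Uri": manifest_s3_uri}}
        },
        "OutputConfig": {"S3OutputPath": output_s3_uri},
        "RoleArn": role_arn,
        "HumanTaskConfig": {
            "WorkteamArn": workteam_arn,
            "UiConfig": {"UiTemplateS3Uri": template_s3_uri},
            "TaskTitle": "Evaluate model-generated image descriptions",
            "TaskDescription": "Rate the response for accuracy, relevance, and clarity.",
            "NumberOfHumanWorkersPerDataObject": 1,
            "TaskTimeLimitInSeconds": 3600,
            # PreHumanTaskLambdaArn and AnnotationConsolidationConfig are
            # intentionally omitted: both are optional for custom jobs.
        },
    }


def create_labeling_job(request):
    """Submit the request. Needs boto3, AWS credentials, and a configured Region."""
    import boto3  # imported here so the builder above works without the SDK

    client = boto3.client("sagemaker")
    return client.create_labeling_job(**request)


# Hypothetical values, for illustration only.
request = build_labeling_job_request(
    job_name="image-description-evaluation",
    manifest_s3_uri="s3://amzn-s3-demo-bucket/input/manifest.jsonl",
    output_s3_uri="s3://amzn-s3-demo-bucket/output/",
    template_s3_uri="s3://amzn-s3-demo-bucket/templates/evaluation.liquid.html",
    role_arn="arn:aws:iam::111122223333:role/GroundTruthExecutionRole",
    workteam_arn="arn:aws:sagemaker:us-east-1:111122223333:workteam/private-crowd/my-team",
)
# response = create_labeling_job(request)  # uncomment to submit the job
```

An SDK release that predates this change may still treat PreHumanTaskLambdaArn as required during client-side validation, so this sketch assumes a recent Boto3 version.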

When the annotators submit their evaluations, their responses are saved directly to your specified S3 bucket. The output manifest file includes the original data fields and a worker-response-ref that points to a worker response file in S3. This worker response file contains all the annotations for that data object. If multiple annotators have worked on the same data object, their individual annotations are included within this file under an answers key, which is an array of responses. Each response includes the annotator’s input and metadata such as acceptance time, submission time, and worker ID.

This means that all annotations for a given data object are collected in one place, allowing you to process or analyze them later according to your specific requirements, without needing a post-annotation Lambda function. You have access to all the raw annotations and can perform any necessary consolidation or aggregation as part of your post-processing workflow.
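
One way to do that consolidation yourself is sketched below: it averages each criterion across all annotators in a single worker response file. Only the answers key comes from the description above. The per-answer field names (`answerContent`, `workerId`, `acceptanceTime`, `submissionTime`), the criterion names, and the sample values are assumptions for illustration, so check them against an actual response file from your job.

```python
import json
from statistics import mean


def consolidate_scores(worker_response, criteria=("accuracy", "relevance", "clarity")):
    """Average each criterion over every annotator's answer for one data object."""
    answers = worker_response.get("answers", [])
    summary = {}
    for criterion in criteria:
        values = [
            float(answer["answerContent"][criterion])
            for answer in answers
            if criterion in answer.get("answerContent", {})
        ]
        if values:  # skip criteria that no annotator rated
            summary[criterion] = mean(values)
    return summary


# Hypothetical worker response document with two annotators.
sample_response = json.loads("""
{
  "answers": [
    {
      "acceptanceTime": "2024-01-01T10:00:00.000Z",
      "submissionTime": "2024-01-01T10:02:30.000Z",
      "workerId": "worker-a",
      "answerContent": {"accuracy": 4, "relevance": 5, "clarity": 4, "comments": "Mostly right."}
    },
    {
      "acceptanceTime": "2024-01-01T11:00:00.000Z",
      "submissionTime": "2024-01-01T11:01:45.000Z",
      "workerId": "worker-b",
      "answerContent": {"accuracy": 5, "relevance": 3, "clarity": 4, "comments": ""}
    }
  ]
}
""")

summary = consolidate_scores(sample_response)
print(summary)
```

In a real workflow, you would first download the file that worker-response-ref points to from Amazon S3 and pass its parsed JSON to `consolidate_scores`. Averaging is only one possible strategy; majority vote or a per-worker weighting would fit in the same place.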

Benefits of labeling jobs without Lambda functions

Creating custom labeling jobs without Lambda functions offers several benefits:

- Simplified setup – You can create custom labeling jobs more quickly by skipping the creation and configuration of Lambda functions when they’re not needed.

- Time savings – Reducing the number of components in your labeling workflow saves development and debugging time.

- Reduced complexity – Fewer moving parts mean a lower chance of encountering configuration errors or integration issues.

- Cost reduction – By not using Lambda functions, you reduce the associated costs of deploying and invoking these resources.

- Flexibility – You retain the ability to use Lambda functions for preprocessing and annotation consolidation when your project requires these capabilities. This update offers simplicity for straightforward tasks and flexibility for more complex requirements.

This feature is currently available in all AWS Regions that support SageMaker Ground Truth. In the future, look out for built-in task types that don’t require annotation Lambda functions, providing a simplified experience for SageMaker Ground Truth across the board.

Conclusion

Making the pre-annotation and post-annotation Lambda functions optional for custom labeling jobs in SageMaker Ground Truth significantly simplifies the data labeling process. You can now set up custom labeling jobs faster, with fewer opportunities for configuration errors.

This update maintains the flexibility of custom workflows while removing unnecessary steps for those who don’t require specialized data processing. Whether you’re conducting simple labeling tasks or complex multi-stage annotations, SageMaker Ground Truth now offers a more streamlined path to high-quality labeled data.

We encourage you to explore this new feature and see how it can enhance your data labeling workflows. To get started, check out the following resources:

- Browse over 80 available UI templates to suit your labeling needs on GitHub

- Follow the step-by-step guide on creating custom labeling workflows to tailor your data labeling tasks

About the Authors

Sundar Raghavan is an AI/ML Specialist Solutions Architect at AWS, helping customers leverage SageMaker and Bedrock to build scalable and cost-efficient pipelines for computer vision applications, natural language processing, and generative AI. In his free time, Sundar loves exploring new places, sampling local eateries and embracing the great outdoors.

Alan Ismaiel is a software engineer at AWS based in New York City. He focuses on building and maintaining scalable AI/ML products, like Amazon SageMaker Ground Truth and Amazon Bedrock Model Evaluation. Outside of work, Alan is learning how to play pickleball, with mixed results.

Yinan Lang is a software engineer at AWS Ground Truth. He has worked on Ground Truth, Mechanical Turk, and Bedrock infrastructure, as well as customer-facing projects for Ground Truth Plus. He also focuses on product security, where he has worked on fixing risks and creating security tests. In his leisure time, he is an audiophile and particularly loves to practice keyboard compositions by Bach.

George King is a summer 2024 intern at Amazon AI. He studies Computer Science and Math at the University of Washington and is currently between his second and third year. George loves being outdoors, playing games (chess and all kinds of card games), and exploring Seattle, where he has lived his entire life.
