Migrating a MySQL database from an on-premises environment to AWS brings with it an array of advantages. However, it can be challenging, especially when dealing with legacy centralized databases that serve multiple applications. One of the key steps in this journey is making sure your cloud setup can handle real-world production traffic.

Realistically simulating data flows and query patterns can be a complex task that can delay a team’s ability to migrate confidently. Although synthetic tests can provide a good baseline to evaluate a new system, and provide a good first step in a migration process, it is best to supplement synthetic tests with real-world testing of your queries and workload. This provides a greater degree of confidence in the new platform’s ability to handle your real-world traffic, providing greater insights into how your workload will perform in the new environment.

In this series of posts, we dive deep into performance testing of MySQL environments being migrated from on-premises to AWS. In this post, we review two different approaches to testing migrated environments with traffic that is representative of real production traffic: capturing and replaying traffic using a playback application, and mirroring traffic as it comes in using a proxy. This means you’re validating your environment using realistic data access patterns.

Part 2 dives into the setup and configuration of query playback, and Part 3 dives into the setup and configuration of traffic mirroring.

For the purposes of this blog series we have focused on the migration of an environment from on-premises to AWS. However, pt-upgrade and ProxySQL mirroring can also be used for testing RDS MySQL to Amazon Aurora migrations, as well as testing how your workload performs against an upgraded engine version both for RDS MySQL and Amazon Aurora.

Query playback architecture

In this first solution, we discuss query playback. Query playback differs from mirroring because instead of mirroring traffic in parallel to a second environment, playback relies on capturing a time frame of your traffic, like a busy weekend or peak traffic event, and then replaying that recorded traffic against another environment at your team’s time of choice.

This solution provides more control over when you run tests, enabling teams to run their tests at convenient times. Because you can replay your captured traffic multiple times, the solution also allows for consistent and repeatable load testing that can enable iterative testing for optimization of your migration environment.

For query playback, we focus on the more recently updated Percona pt-upgrade tool; Percona also has an older tool, Query Playback, available.

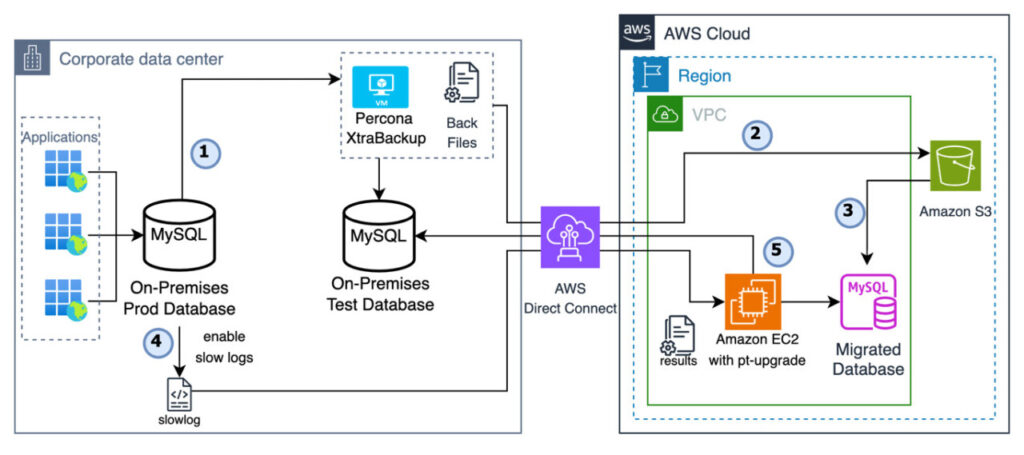

The following diagram illustrates the query playback architecture using the Percona pt-upgrade tool.

The workflow includes the following steps:

- Back up the production database.

- Upload the backup to Amazon Simple Storage Service (Amazon S3).

- Restore the backup as both a migrated database in AWS and an on-premises test database.

- Enable slow logs on the production database and copy the slow logs to an Amazon Elastic Compute Cloud (Amazon EC2) instance or the virtual machine (VM) running pt-upgrade.

- Run pt-upgrade against both the migrated database and the on-premises test database.

Capturing the set of queries that will be used for testing is the first step, which can be accomplished by enabling slow query logs on the source database. Enabling slow query log on your production database can have performance impact, so please review the “Overall considerations†section of the document for more details. Next, the Percona pt-upgrade tool needs to be set with the slow logs as input and run against the on-premises test database and the migrated database instance. This generates the results showcasing the performance differences. There will be a small latency overhead for one of the database connections based on where you want to host the pt-upgrade tool. For this example, we showcase queries being tested against an Amazon Aurora MySQL-Compatible Edition database, but the pattern is the same for migrations to Amazon Relational Database Service (Amazon RDS) for MySQL, MySQL on Amazon EC2, and other MySQL-compatible options.

In Part 2 of the series, we focus on the use of Percona pt-upgrade.

Query playback considerations

In order to get consistent results for workloads that aren’t read only, you need to be able to guarantee a consistent starting point for your database before you begin a replay test. This can be accomplished by reloading your migration environment’s database from a snapshot or from your source database’s mysql dump. We have achieved this in our architecture using Percona XtraBackup.

In addition to replaying traffic to the target migration environment in AWS, we also set up a test environment on-premises with a copy of the production database. Replaying to this copy enables a cleaner comparison of performance between the target AWS environment and on-premises test environment, removing the cost of processes like logging that run on the production database from consideration in the comparison.

Database traffic mirroring architecture

For our second solution, we discuss mirroring SQL query traffic using ProxySQL from an on-premises production workload into a migration environment in AWS. The following diagram illustrates an example architecture.

The workflow includes the following steps:

- Integrate ProxySQL between your production traffic and your production database.

- Configure the host group and query rules on the ProxySQL cluster.

- Mirror traffic to the migrated database and the on-premises test database.

For this example, we showcase traffic being mirrored to an Aurora MySQL database. The pattern is the same for migrations to Amazon RDS for MySQL, MySQL on Amazon EC2, and other MySQL-compatible options.

As in query playback architecture, we set up a test environment on-premises with a copy of the production database. Mirroring to this copy and the AWS migration environment enables a cleaner comparison of performance between the target AWS environment and on-premises test environment, removing the cost of processes like logging that run on the production database from consideration in the comparison.

We also use AWS Direct Connect to provide guaranteed bandwidth for our data connection to not introduce unwanted variables into the load testing.

This approach requires that your migration environment is synchronized to your on-premises environment before mirroring, either through binary log replication or through other data migration tooling.

In Part 3 of this series, we focus on how to set up traffic mirroring from on premises with ProxySQL.

Mirroring considerations

It’s important to thoroughly test and set up monitoring to understand the impact of ProxySQL’s mirroring overhead on the latency of your application. This helps make sure you can take full advantage of the benefits ProxySQL provides, without unexpected degradation of performance. By proactively evaluating the effects on latency, you can make informed decisions and adjustments to optimize your application’s performance and deliver a seamless user experience, even with the addition of the ProxySQL layer.

To maintain the validity and success of your migration process, it is crucial to establish data consistency between your source and target environments before you commence mirroring. By verifying that the data in both environments is aligned, you can have confidence that any subsequent evaluation of your migration environment will provide accurate, actionable insights. This proactive step helps lay a strong foundation for the migration, allowing you to identify and address any discrepancies early on. Prioritizing data consistency upfront is an essential best practice that will pay dividends throughout the migration journey.

ProxySQL’s ability to mirror traffic to multiple targets provides valuable flexibility during your migration process. This capability allows you to create a local copy of your production environment, as well as a separate copy hosted on Amazon RDS. By having these two isolated environments, you can thoroughly test your application’s behavior and performance under realistic conditions, without risking disruption to your live production system.

The effectiveness of this approach is directly influenced by the characteristics of your production traffic. By basing your testing and evaluation on the actual traffic sources, you can gain valuable insights that closely resemble real-world conditions. This approach makes sure your migration planning and preparations are grounded in the realities of your live environment. However, if your peak traffic loads occur infrequently, you may need to take additional steps or perform testing during different windows in order to provide broad coverage.

Overall considerations

When choosing your strategy, consider the following:

- Determine the query set used for the test – Using slow query logging to capture queries is a common strategy. Although setting the long_query_time parameter to 0 has the advantage of capturing all queries, it’s important to be mindful of the potential impact on your production instance’s performance. Please note, if the

long_query_timeparameter is not set to 0, you may not capture all queries that are executed against your production database. In that scenario the replay is not identical to your original production traffic. By using the on-premises test database, you can still get a like for like comparison between the on-premises test database and the migrated database instance, albeit with a subset of production traffic. As a best practice, we suggest starting with a higher value forlong_query_time. This more conservative approach allows you to monitor the effect on your production environment and make sure there are no unexpected performance issues. If you find that the higherlong_query_timevalue is not significantly impacting your production instance, you can consider gradually reducing the value to capture a larger volume of queries. This iterative process enables you to strike the right balance between comprehensive data gathering and maintaining the stability of your live environment. - Maintain data and security compliance during your testing – Organizations often have different security and compliance regulations or standards for their production and testing environments. By taking proactive steps to align your migration testing environment to your production system, you can have confidence that your testing activities are being conducted in a secure and compliant manner.

- High-level differentiation – Note the following for these two solutions:

- Mirroring – You should validate the migration environment against production traffic before cutting over for peace of mind. ProxySQL mirroring doesn’t provide the same level of transactional consistency as MySQL replication and the testing environment should not be used for production cut over.

- Playback – For iterative optimization efforts, you should replay captured production queries as a baseline performance load test.

Conclusion

In this post, we reviewed two approaches to replay and mirror production traffic to a newly migrated environment, allowing for direct comparison of all or part of the on-premises environment’s workload. This makes sure performance has been properly validated before cutting over traffic from on premises. These methods empower you to take a proactive, data-driven approach to your migration project. Rather than relying on assumptions or guesswork, you can make informed decisions based on performance comparisons between the source and the target environments. In the following posts in this series, we dive deeper into each tool. For more details about query playback, see Part 2. For traffic mirroring, see Part 3.

For more information about traffic mirroring between AWS environments, refer to Mirror production traffic to test environment with VPC Traffic Mirroring. To learn more about ProxySQL, visit the ProxySQL webpage. For details on the Percona Query Playback tool, visit the GitHub repo.

About the Authors

Arnab Ghosh is a Sr. Solutions Architect for AWS in North America, helping enterprise customers build resilient and cost-efficient architectures. He has over 15 years of experience in architecting, designing, and developing enterprise applications solving complex business problems.

Arnab Ghosh is a Sr. Solutions Architect for AWS in North America, helping enterprise customers build resilient and cost-efficient architectures. He has over 15 years of experience in architecting, designing, and developing enterprise applications solving complex business problems.

Patrick Gryczka is a Sr. Solutions Architect specializing in serverless technologies and the sports industry. Beyond the cloud, Patrick fills his time and space with cats, science fiction, Python, and Rust.

Patrick Gryczka is a Sr. Solutions Architect specializing in serverless technologies and the sports industry. Beyond the cloud, Patrick fills his time and space with cats, science fiction, Python, and Rust.

Simon Stewart is a Database Specialist Solutions Architect at AWS, focusing on MySQL and Amazon Aurora MySQL. Simon helps AWS customers with their architecture design and provides efficient solutions to run their database workloads on AWS. When not helping customers with their databases on AWS, he enjoys tinkering with his homelab.

Simon Stewart is a Database Specialist Solutions Architect at AWS, focusing on MySQL and Amazon Aurora MySQL. Simon helps AWS customers with their architecture design and provides efficient solutions to run their database workloads on AWS. When not helping customers with their databases on AWS, he enjoys tinkering with his homelab.

Source: Read More