In Part 1 of this post, we covered how Retrieval Augmented Generation (RAG) can be used to enhance responses in generative AI applications by combining domain-specific information with a foundation model (FM). However, we stayed focused on the semantic search aspect of the solution, assuming that our vector store was already built and fully populated. In this post, we explore how to generate vector embeddings on Wikipedia data stored in a SQL Server database hosted on Amazon Relational Database Service (Amazon RDS). We also use Amazon Bedrock to invoke the appropriate FM APIs and an Amazon SageMaker Jupyter Notebook to help us orchestrate the overall process.

Amazon RDS for SQL Server and Amazon Bedrock

Before we explore vector embeddings, let’s discuss two key Amazon Web Services (AWS) services in this solution: Amazon RDS for SQL Server and Amazon Bedrock.

Amazon RDS for SQL Server is a fully managed database service that simplifies the setup, operation, and scaling of SQL Server databases in the cloud. By using RDS for SQL Server, we can store and manage our sample Wikipedia data, including vector embeddings, and achieve high availability, durability, and performance.

Amazon Bedrock is a fully managed service that offers a choice of industry leading foundation models (FMs) along with a broad set of capabilities that you need to build generative AI applications, simplifying development with security, privacy, and responsible AI. With the comprehensive capabilities of Amazon Bedrock, you can experiment with a variety of top FMs, customize them privately with your data using techniques such as fine-tuning and RAG, and create managed agents that execute complex business tasks—from booking travel and processing insurance claims to creating advertising campaigns and managing inventory—all without writing any code. Because Amazon Bedrock is serverless, you don’t have to manage any infrastructure, and you can securely integrate and deploy generative AI capabilities into your applications using the AWS services that you’re already familiar with. It supports a wide range of data sources, including RDS for SQL Server instances, and provides built-in capabilities for generating vector embeddings using state-of-the-art language models supported on AWS.

Integrate RDS for SQL Server and Amazon Bedrock

The first step is to establish a connection between the RDS for SQL Server instance and Amazon Bedrock. This integration facilitates access to the Wikipedia data stored in the RDS for SQL Server database using the capabilities of Amazon Bedrock, making subsequent analysis straightforward.

Solution overview

Tokenizing and vectorizing content data into vector embeddings can be accomplished through various approaches. Regardless of the vector data store employed, it’s the responsibility of an individual such as a data engineer, database developer, or data scientist to convert the original data into a compatible vector format.

In this example, we have a modest dataset sourced from Wikipedia, stored in a SQL Server database table consisting of 25,000 rows. This table comprises columns labeled id, title, contents, and url. We want to incorporate a new column named contents_vector into the existing table to facilitate vector similarity searches. We run the code in Jupyter Notebook so you can see step-by-step instructions and the corresponding results from each step.

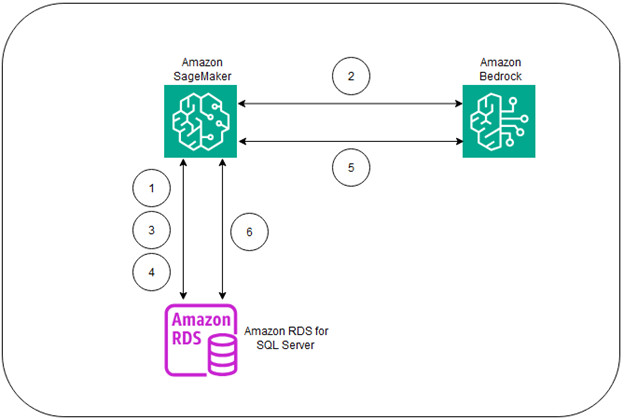

The following diagram shows the flow of steps in the solution.

The flow of the steps is as follows:

- Get raw data from RDS for SQL Server

- Generate vector embeddings of the raw data using Amazon Bedrock through Amazon SageMaker

- Store the embeddings into RDS for SQL Server

- Create a vector table and

columnstoreindex - Generate embeddings from the prompts

- Perform similarity search on RDS for SQL Server

Prerequisites

This post assumes that you’re familiar with navigating the AWS Management Console. For this example, you need the following resources and services enabled in your AWS account:

- Amazon RDS for SQL Server instance (vector data store)

- Amazon SageMaker sample notebook

- Amazon Bedrock Python library

- Amazon Bedrock. You need to request access to use specific FMs in Amazon Bedrock. In this post, we use Amazon Titan Embeddings G1 – Text.

- Set up Amazon Bedrock API

To use RDS for SQL Server as a vector data store to implement a generative AI use case that involves similarity search, follow the steps in Part 1. Data embedding takes approximately 90 minutes to complete.

Generate vector embeddings

Follow these steps to generate vector embeddings and perform a similarity search on RDS for SQL Server:

- In the SageMaker console, when the notebook status is In Service, choose Open JupyterLab. You will be redirected to the Jupyter Lab IDE, as shown in the following screenshot. Select the Terminal

- Run the following code in the Linux terminal window to install the Microsoft Open Database Connectivity (ODBC) driver for SQL Server (Linux).

- Upload the SageMaker notebook file provided in the prerequisites section (ipynb). Choose the Open JupyterLab link at the right of your SageMaker notebook instance. Choose the folder icon located in the navigation pane of your JupyterLab window. Select the upload files icon, browse to the Downloads folder, and select the file to upload. After the file is uploaded, you can continue to the embedding process.

- Install the prerequisites using the following pip

- Import the necessary modules.

- Assign the start time and the Amazon Bedrock runtime variable. Start time is used to calculate total time taken towards the end to perform embedding.

- In the Wikipedia article source data provided in the prerequisites section, select all article-related data into a DataFrame, then apply the embedding algorithm to some of the columns.

- Read the data from Amazon RDS.

- Convert the data into a DataFrame format.

- Remove all single quotation marks from the column as part of the data cleansing process. This is to minimize syntax errors when you form the INSERT command later.

- Fill the NaN column to 0 and set the

content_idto integer type.

The next section in the notebook embeds the data by calling Amazon Titan Text Embeddings. The execution takes approximately 90 minutes to be completed. You can see the progress of the embedding as the code is executed.

The progress of the embedding will look similar to the following figure. As shown in the figure, the script skips articles that have a text length of more than 20,000.

- Convert the DataFrame data into a CSV file, which will be stored locally in SageMaker.

- Remove all single quotation marks in the file.

- Delete rows where the content and

content_idcolumns are equal to 0.0 and 0.

- Match the columns in the Pandas frame to the columns in the table and rename the Pandas DataFrame columns in place.

- You can use the following command to display the cleansed DataFrame. We show only one record instead of all.

- Instead of inserting the embeddings back into the same table, in this example, we created a new table using the following command.

- Prepare the data for insertion and insert the data into the new table by forming an INSERT statement.

- Create a vector table and a

columnstoreindex for efficient vector similarity search.

- To test the vector similarity search, create a prompt to input a search string to search for the keyword, Warrior.

- Using the prompt, connect to Amazon Bedrock to convert the string into an embedding using Amazon Titan Text Embeddings.

- Convert the text embedding into a JSON string.

- Connect to RDS for SQL Server to perform the similarity search and display the result.

We recognize the limitations of the code, which is neither perfect nor efficient, but it serves the purpose of illustrating potential challenges that might arise during even basic data cleansing and transformation tasks. This code snippet serves as a quick test that uses an Amazon Bedrock embedding model to vectorize 25,000 Wikipedia articles and validates a vector similarity search within RDS for SQL Server. The execution time will vary based on your computing instance. The entire process, from vectorizing 25,000 Wikipedia articles to inserting vector data into SQL Server tables and creating columnar indexes, might take approximately 2 hours. We use the Amazon Bedrock embed function to generate embeddings for the content column of the data.

You can choose from various pre-trained models such as bert-base-uncased or roberta-base, but be aware that these haven’t been tested for this solution. The embed function uses the power of pre-trained language models like bert-base-uncased and roberta-base to generate dense vector representations of your textual data. These embeddings capture the semantic relationships between words, phrases, and concepts, and with them, you can perform a wide range of analyses and tasks.

Cleanup

Running this solution created a few AWS resources:

- An RDS for SQL Server database instance

- An Amazon SageMaker Notebook instance

If you don’t need these resources going forward, delete the RDS for SQL Server and SageMaker Notebook instances to avoid unnecessary charges by following the instructions in the provided URLs.

Conclusion

This post has provided a comprehensive overview of how to generate vector embeddings, from setting up the environment and generating embeddings to exploring their applications and advanced techniques. As the field of natural language processing continues to evolve, new techniques and models will emerge, further enhancing the ability to extract insights and knowledge from textual data. With the right tools and techniques, you can use this vast repository of knowledge to discover actionable insights that drive innovation and effective decision-making across various domains. Try out this solution and if you have any comments or questions, leave them in the comments section.

About the Authors

Joshua Jin is a database benchmark engineer at Amazon Web Services (AWS), where he specializes in meticulously evaluating the performance of databases.

Joshua Jin is a database benchmark engineer at Amazon Web Services (AWS), where he specializes in meticulously evaluating the performance of databases.

Sudarshan Roy is a Senior Database Specialist Cloud Solution Architect with World Wide AWS Database Services Organization (WWSO). He has led large scale database migration and modernization engagements for enterprise customers and is passionate about solving complex migration challenges while moving database workloads to the AWS Cloud.

Sudarshan Roy is a Senior Database Specialist Cloud Solution Architect with World Wide AWS Database Services Organization (WWSO). He has led large scale database migration and modernization engagements for enterprise customers and is passionate about solving complex migration challenges while moving database workloads to the AWS Cloud.

Barry Ooi is a Senior Database Specialist Solution Architect at AWS. His expertise is in designing, building and implementing data platforms cloud native services for customers as part of their journey on AWS. His areas of interest include data analytics and visualization.

Barry Ooi is a Senior Database Specialist Solution Architect at AWS. His expertise is in designing, building and implementing data platforms cloud native services for customers as part of their journey on AWS. His areas of interest include data analytics and visualization.

Source: Read More