This is a guest post co-written with Itay Kirshenbaum from Claroty.

Claroty is a leading provider of industrial cybersecurity solutions, protecting cyber-physical systems (CPS), such as industrial control systems, operational technology networks, and healthcare networks from cyber threats. With a strong focus on innovation and customer satisfaction, Claroty relies on its database infrastructure to power its applications and deliver high-performance services to its customers. As a fast-growing company, Claroty needs to make sure its database infrastructure can scale to meet the demands of its growing customer base while keeping costs under control.

Amazon Aurora is a highly scalable and high-performance relational database engine that is fully compatible with MySQL and PostgreSQL. It is designed to deliver the performance and reliability of high-end commercial databases at a fraction of the cost.

In this post, we share how Claroty improved database performance and scaled Claroty xDome using the advanced features of Aurora.

Claroty’s business case and technical challenges

Claroty’s business depends on efficiently managing large volumes of data and running complex queries to ensure a great user experience for its customers, who are reducing security risks to cyber-physical systems. One key workload involves an API that provides users with an interface to extract device, alert, and vulnerability data from the Claroty xDome dashboard, enabling seamless integration into their own data stores. The users at Claroty’s customers are security, risk, and IT teams. They use this data to conduct advanced analytics, gain deeper insights into their security posture, and strengthen their cybersecurity measures through asset visibility, exposure management, network protection, secure access, and threat detection.

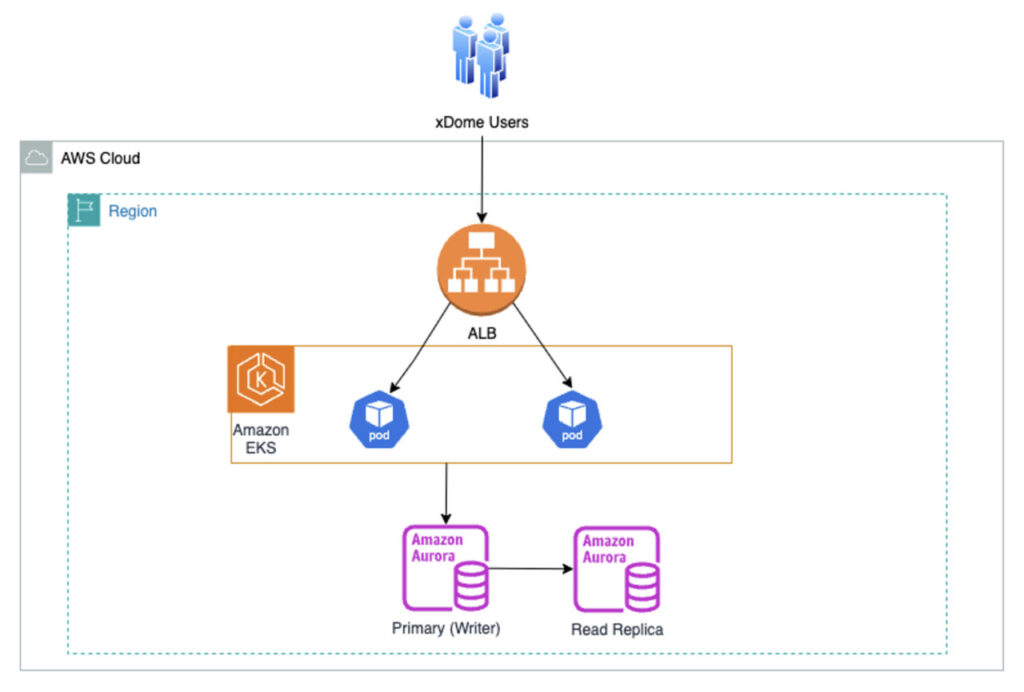

The following diagram presents a simplified architecture, illustrating the interaction between xDome users, a public-facing Application Load Balancer (ALB), a group of API pods within an Amazon Elastic Kubernetes Service (Amazon EKS) cluster, and an Aurora cluster comprising a writer instance and a read replica.

The data is extensive, with millions of rows and hundreds of columns, and the API lets users create compound filters across numerous attributes. The resulting SQL queries sort, group, and join large datasets. Database systems like PostgreSQL try to perform these operations in memory for optimal performance, but when the intermediate results don’t fit, they must use a temporary work area on disk, for example to hold intermediate results while sorting or grouping records. In Amazon Aurora, temporary objects that don’t fit into memory are written to and read from Amazon Elastic Block Store (Amazon EBS). For this workload, the substantial read and write I/O on temporary objects in Amazon EBS adds latency and can saturate the temporary storage bandwidth, creating a bottleneck. The saturation is visible as a flat peak in the os.diskio.rdstemp.readkbps and os.diskio.rdstemp.writekbps metrics in Amazon RDS Performance Insights.
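The flat peak described above can also be checked programmatically once the metric samples have been exported. The following is a minimal sketch, not Claroty’s tooling: the function names, the 5% tolerance, and the minimum run length are assumptions chosen for illustration, and the sample values are made up.

```python
from typing import Sequence


def longest_plateau(samples: Sequence[float], tolerance: float = 0.05) -> int:
    """Length of the longest run of consecutive samples that stay within
    `tolerance` (a fraction) of the highest value in the series."""
    if not samples:
        return 0
    peak = max(samples)
    if peak <= 0:
        return 0
    threshold = peak * (1.0 - tolerance)
    longest = current = 0
    for value in samples:
        if value >= threshold:
            current += 1
            longest = max(longest, current)
        else:
            current = 0
    return longest


def looks_saturated(samples: Sequence[float],
                    tolerance: float = 0.05,
                    min_run: int = 5) -> bool:
    """Heuristic: a throughput series that sits at its ceiling for at least
    `min_run` consecutive samples is probably bandwidth-limited."""
    return longest_plateau(samples, tolerance) >= min_run


# Made-up throughput samples (KB/s), for illustration only.
spiky = [10, 80, 20, 300, 15, 40]
flat_peak = [50, 200, 498, 500, 499, 500, 497, 500, 120]

print(looks_saturated(spiky))      # short spikes, no sustained ceiling
print(looks_saturated(flat_peak))  # sustained run near the maximum
```

A short spike reaches the maximum only briefly, while a saturated device holds the same ceiling for many samples in a row, which is the pattern this heuristic looks for.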

The business task Claroty is trying to accomplish is to sync important data into its customers’ own data stores and data lakes. This syncing happens through APIs, which provide access to Claroty’s data for internal reporting or analysis and help Claroty’s customers, specifically security, risk, and IT teams, better understand and manage security risks.

However, when these API requests saturate the system, likely because many customers are using them simultaneously, each request takes longer to process. As a result, customers experience delays or even API timeouts, meaning they are unable to receive the data they need in real time. This disruption impacts their ability to quickly analyze security data, delaying important decision-making and reporting.

The traditional approach of scaling up to a larger instance type, such as moving from the original r6g.8xlarge to r6g.12xlarge, would result in a substantial cost increase that Claroty hoped to avoid.

Aurora Optimized Reads and Aurora I/O Optimized

Among the many advanced features available in Aurora, Aurora Optimized Reads and Aurora I/O Optimized stand out for their ability to significantly enhance database performance and cost-efficiency.

Aurora Optimized Reads improves query performance by using locally attached NVMe SSDs. It supports two capabilities:

Tiered cache – This allows you to extend your DB instance caching capacity by utilizing the local NVMe storage. It automatically caches database pages about to be evicted from the in-memory database buffer pool, offering up to eight times better latency for queries that were previously fetching data from Aurora storage.

Temporary objects – These are hosted on local NVMe storage instead of Amazon Elastic Block Store (Amazon EBS)-based storage. This enables better latency and throughput for queries that sort, join, or merge large volumes of data that don’t fit within the memory configured for those operations.
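To make the tiered cache idea concrete, here is a toy model in Python. It is a sketch of the general technique only (pages evicted from a small in-memory LRU tier are kept in a larger local tier instead of being dropped) and is not how Aurora implements it. The class name, the tier sizes, and the `read_from_storage` callback are all hypothetical.

```python
from collections import OrderedDict
from typing import Callable, Dict


class TieredCache:
    """Toy two-tier LRU cache: a small 'memory' tier backed by a larger
    'local' tier that receives pages evicted from memory."""

    def __init__(self, memory_pages: int, local_pages: int,
                 read_from_storage: Callable[[int], str]) -> None:
        self.memory: "OrderedDict[int, str]" = OrderedDict()
        self.local: "OrderedDict[int, str]" = OrderedDict()
        self.memory_pages = memory_pages
        self.local_pages = local_pages
        self.read_from_storage = read_from_storage
        self.hits: Dict[str, int] = {"memory": 0, "local": 0, "storage": 0}

    def get(self, page_id: int) -> str:
        if page_id in self.memory:
            # Fastest path: page is still in the in-memory tier.
            self.memory.move_to_end(page_id)
            self.hits["memory"] += 1
            return self.memory[page_id]
        if page_id in self.local:
            # Second tier: cheaper than going back to remote storage.
            data = self.local.pop(page_id)
            self.hits["local"] += 1
        else:
            data = self.read_from_storage(page_id)
            self.hits["storage"] += 1
        self._admit(page_id, data)
        return data

    def _admit(self, page_id: int, data: str) -> None:
        self.memory[page_id] = data
        if len(self.memory) > self.memory_pages:
            # Demote the least recently used page instead of discarding it.
            evicted_id, evicted = self.memory.popitem(last=False)
            self.local[evicted_id] = evicted
            if len(self.local) > self.local_pages:
                self.local.popitem(last=False)


cache = TieredCache(memory_pages=2, local_pages=4,
                    read_from_storage=lambda page_id: f"page-{page_id}")
for page in [1, 2, 3, 1]:
    cache.get(page)
print(cache.hits)
```

In this run, the second request for page 1 is served from the local tier rather than from storage. Setting `local_pages=0` in the same run sends that request back to storage, which is the difference the tiered cache is meant to remove.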

Aurora I/O Optimized is designed to optimize the cost structure for I/O-intensive workloads. By decoupling I/O costs from storage costs, it offers a predictable and lower total cost of ownership (TCO) for businesses with heavy I/O requirements. This feature allows you to scale your database operations without the financial unpredictability typically associated with I/O-intensive tasks. For an in-depth look at Aurora I/O Optimized, see New – Amazon Aurora I/O-Optimized Cluster Configuration with Up to 40% Cost Savings for I/O-Intensive Applications.
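The trade-off can be reasoned about with a simple model: the standard configuration bills compute, storage, and I/O requests separately, while I/O Optimized removes the I/O charge in exchange for higher compute and storage rates. The sketch below assumes that model. The uplift parameters and all dollar figures are illustrative placeholders, not published prices, so take real rates from the current Aurora pricing page for your Region.

```python
def monthly_cost_standard(compute: float, storage: float, io: float) -> float:
    """Standard configuration: compute, storage, and I/O are billed separately."""
    return compute + storage + io


def monthly_cost_io_optimized(compute: float, storage: float,
                              compute_uplift: float,
                              storage_uplift: float) -> float:
    """I/O Optimized: no I/O charge, but compute and storage cost more.
    Uplifts are fractions (0.30 means 30% more) and are inputs, not facts."""
    return compute * (1 + compute_uplift) + storage * (1 + storage_uplift)


def break_even_io(compute: float, storage: float,
                  compute_uplift: float, storage_uplift: float) -> float:
    """Monthly I/O spend above which I/O Optimized is cheaper in this model."""
    return compute * compute_uplift + storage * storage_uplift


# Illustrative monthly figures in USD; every value here is an assumption.
compute, storage, io = 1000.0, 100.0, 900.0
compute_uplift, storage_uplift = 0.30, 1.25

standard = monthly_cost_standard(compute, storage, io)
optimized = monthly_cost_io_optimized(compute, storage,
                                      compute_uplift, storage_uplift)
print(f"standard={standard:.2f} io_optimized={optimized:.2f}")
print(f"break-even I/O spend={break_even_io(compute, storage, compute_uplift, storage_uplift):.2f}")
```

The model ignores backups, data transfer, and reserved pricing, so treat it as a first-pass screen rather than a cost estimate.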

The tiered cache feature of Aurora Optimized Reads is only available when your Amazon Aurora cluster storage is configured as I/O Optimized.

Why Claroty chose Aurora Optimized Reads and Aurora I/O Optimized

To address these challenges, Claroty chose to use Aurora Optimized Reads instead of immediately upgrading to a larger instance. By deploying db.r6gd instances with locally attached NVMe SSDs, they could significantly reduce the latency of I/O and temporary storage operations by serving more data from the local cache rather than from storage. This setup provided the necessary flexibility to manage their working dataset beyond the memory limitations of even the largest database instances. Claroty gained better latency and throughput for queries that sort, join, or merge large volumes of data that don’t fit within the memory configured for those operations. This eliminated the flat peak in the os.diskio.rdstemp.readkbps/writekbps metric observed in Amazon RDS Performance Insights.

In addition, Claroty adopted the Aurora I/O Optimized feature. Given that I/O operations constituted the most significant component of their costs, this feature was particularly beneficial. It offered predictable and lower I/O pricing, enabling Claroty to manage their expenses better while scaling their workload efficiently and lowering TCO.
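For readers who want to try the same two changes, the following sketch builds the request parameters for the Amazon RDS API using boto3. It is not Claroty’s actual procedure. The cluster and instance identifiers are hypothetical, the db.r6gd.8xlarge size is an assumption (the post only states that db.r6gd instances were used), and the calls themselves are kept inside a function that is not invoked here.

```python
from typing import Any, Dict


def instance_class_change(instance_id: str, instance_class: str) -> Dict[str, Any]:
    """Parameters for rds.modify_db_instance to move an instance to a class
    with local NVMe storage (for example, the db.r6gd family)."""
    return {
        "DBInstanceIdentifier": instance_id,
        "DBInstanceClass": instance_class,
        # False defers the change to the next maintenance window.
        "ApplyImmediately": False,
    }


def storage_type_change(cluster_id: str) -> Dict[str, Any]:
    """Parameters for rds.modify_db_cluster to switch the cluster storage
    configuration to Aurora I/O-Optimized."""
    return {
        "DBClusterIdentifier": cluster_id,
        "StorageType": "aurora-iopt1",
    }


def apply_changes(cluster_id: str, instance_id: str, instance_class: str) -> None:
    """Send both modifications. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the helpers above work without it

    rds = boto3.client("rds")
    rds.modify_db_cluster(**storage_type_change(cluster_id))
    rds.modify_db_instance(**instance_class_change(instance_id, instance_class))


# Hypothetical identifiers, for illustration only.
print(instance_class_change("example-reader-1", "db.r6gd.8xlarge"))
print(storage_type_change("example-cluster"))
```

Changing the instance class restarts the instance, so in practice you would typically modify readers first and fail over before changing the writer.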

Positive impact on cost and performance

The adoption of Aurora Optimized Reads had a transformative impact on Claroty’s database performance. This improvement was evident in Amazon RDS Performance Insights, where it not only eliminated spikes in temporary storage bandwidth but also significantly reduced the IO:DataFileRead wait event. This event was replaced by IO:AuroraOptimizedReadsCacheRead, indicating that I/O operations were now occurring on local NVMe storage rather than Aurora storage, resulting in reduced latency.
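Outside Performance Insights, a rough view of the same shift can be obtained by sampling wait events from `pg_stat_activity` and comparing their shares before and after the change. The sketch below assumes you collect the samples yourself with the query shown. The helper name is hypothetical and the sample lists are made up for illustration, not Claroty’s measurements.

```python
from collections import Counter
from typing import Dict, Iterable

# Query to run periodically against the database; each row is one sample.
ACTIVE_WAITS_SQL = """
SELECT wait_event_type || ':' || wait_event AS wait
FROM pg_stat_activity
WHERE state = 'active' AND wait_event IS NOT NULL
"""


def wait_share(samples: Iterable[str]) -> Dict[str, float]:
    """Fraction of samples attributed to each wait event."""
    counts = Counter(samples)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {name: count / total for name, count in counts.items()}


# Made-up samples, for illustration only.
before = ["IO:DataFileRead"] * 3 + ["IO:BufFileWrite"]
after = ["IO:AuroraOptimizedReadsCacheRead"] * 3 + ["IO:DataFileRead"]

print(wait_share(before))
print(wait_share(after))
```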

The reduction in the IO:DataFileRead wait event was also reflected in the ReadThroughput CloudWatch metric, which showed a decrease in the number of bytes read from Aurora storage per second.

This reduced the application’s API processing time, significantly enhancing the user experience for Claroty’s clients. The following screenshot shows an example of API requests taking over 30 seconds before moving to an Aurora Optimized Reads instance.

The following screenshot shows an example of API requests after moving to an Aurora Optimized Reads instance where the same queries are now processed in less than a second on average.

Claroty’s API monitoring revealed a significant improvement in query performance, particularly in reducing the maximum query duration and minimizing query timeout errors. Previously, these timeouts occurred when queries reached their maximum allowed duration. However, by leveraging Optimized Reads, query processing times were reduced, effectively eliminating the timeout errors that had previously disrupted the system.

The following graph shows improvements in query performance:

The following graph shows improvements in query timeout errors:

Moreover, the implementation of Aurora I/O Optimized led to substantial cost savings. This change resulted in a 50% reduction in their Aurora-related expenses. The financial savings allowed Claroty to invest in other critical areas, such as launching a cloud-managed secure access product and fostering further innovation and development in their cybersecurity solutions.

Conclusion

Claroty’s strategic use of Aurora Optimized Reads and Aurora I/O Optimized demonstrates the significant benefits of using advanced database features to overcome performance bottlenecks and reduce operational costs. By addressing the challenges of write-heavy workloads and I/O-intensive operations, Claroty not only improved its application performance, but also achieved substantial cost savings. These enhancements have strengthened Claroty’s ability to provide robust and reliable cybersecurity solutions that help protect critical infrastructure worldwide.

If your business faces similar challenges, consider taking advantage of the advanced features of Aurora to optimize database performance and improve cost-efficiency. Learn more about Amazon Aurora features.

Claroty has redefined cyber-physical systems (CPS) protection with an unrivaled industry-centric platform built to secure mission-critical infrastructure. The Claroty Platform provides the deepest asset visibility and the broadest, built-for-CPS solution set in the market comprising exposure management, network protection, secure access, and threat detection – whether in the cloud with Claroty xDome or on-premise with Claroty Continuous Threat Detection (CTD). Backed by award-winning threat research and a breadth of technology alliances, The Claroty Platform enables organizations to effectively reduce CPS risk, with the fastest time-to-value and lower total cost of ownership. Claroty is deployed by hundreds of organizations at thousands of sites globally. The company is headquartered in New York City and has a presence in Europe, Asia-Pacific, and Latin America. To learn more, visit claroty.com.

About the Authors

Itay Kirshenbaun is Chief Architect at Claroty. Itay was also the Co-Founder and VP R&D of Medigate, Cyber-Physical Security in the Modern Healthcare Network, which was acquired by Claroty in 2022.

Pini Dibask is a Senior Database Solutions Architect at AWS, bringing 20 years of experience in database technologies, with a focus on relational databases. In his role, Pini serves as a trusted advisor, helping customers architect, migrate, and optimize their databases on AWS.
