Generative artificial intelligence (AI) models are designed to create realistic, high-quality data, such as images, audio, and video, based on patterns in large datasets. These models can imitate complex data distributions, producing synthetic content that resembles real samples. One widely recognized class of generative models is the diffusion model. It has succeeded in image and video generation by starting from noise and gradually reversing a noising process until a high-fidelity output is achieved. However, diffusion models typically require dozens to hundreds of steps to complete the sampling process, demanding extensive computational resources and time. This challenge is especially pronounced in applications where quick sampling is essential or where many samples must be generated simultaneously, such as in real-time scenarios or large-scale deployments.

A significant limitation in diffusion models is the computational load of the sampling process, which involves systematically reversing a noising sequence. Each step in this sequence is computationally expensive, and the process introduces errors when discretized into time intervals. Continuous-time diffusion models offer a way to address this, as they eliminate the need for these intervals and thus reduce sampling errors. However, continuous-time models have not been widely adopted because of inherent instability during training. The instability makes it difficult to train these models at large scales or with complex datasets, which has slowed their adoption and development in areas where computational efficiency is critical.
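The discretization error described above can be illustrated with a small sketch. It does not use a diffusion model: as a stand-in, it integrates the toy ODE dx/dt = -x (an assumption chosen because its exact solution is known) with a fixed-step Euler solver, and shows how the error shrinks only as the number of steps, and therefore the compute, grows.

```python
import math

def euler_solve(rate: float, x0: float, t_end: float, n_steps: int) -> float:
    """Integrate dx/dt = -rate * x from t=0 to t_end with n_steps Euler steps."""
    dt = t_end / n_steps
    x = x0
    for _ in range(n_steps):
        x = x + dt * (-rate * x)  # one function evaluation per step
    return x

def discretization_errors(step_counts):
    """Absolute error of the Euler solution at t=1 for each step count."""
    exact = math.exp(-1.0)  # closed-form x(1) for rate=1, x0=1
    return {n: abs(euler_solve(1.0, 1.0, 1.0, n) - exact) for n in step_counts}

errors = discretization_errors([2, 10, 100])
for n, err in errors.items():
    print(f"{n:>4} steps: |error| = {err:.5f}")
```

In a diffusion sampler, each of those steps would be a full forward pass through a large network, which is why reducing the step count without paying in accuracy matters.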

Researchers have recently developed methods to make diffusion models more efficient, with approaches such as direct distillation, adversarial distillation, progressive distillation, and variational score distillation (VSD). Each method has shown potential in speeding up the sampling process or improving sample quality. However, these techniques encounter practical challenges, including high computational overhead, complex training setups, and limitations in scalability. For instance, direct distillation requires training from scratch, adding significant time and resource costs. Adversarial distillation inherits the difficulties of GAN (Generative Adversarial Network) training, which often struggles with stability and consistency in output. Also, although effective for few-step models, progressive distillation and VSD tend to produce results with limited diversity or overly smooth, less detailed samples, especially at high guidance levels.

A research team from OpenAI introduced a new framework called TrigFlow, designed to simplify, stabilize, and scale continuous-time consistency models (CMs) effectively. The proposed solution specifically targets the instability issues in training continuous-time models and streamlines the process by incorporating improvements in model parameterization, network architecture, and training objectives. TrigFlow unifies diffusion and consistency models by establishing a new formulation that identifies and mitigates the main causes of instability, enabling the model to handle continuous-time tasks reliably. This allows the model to achieve high-quality sampling with minimal computational costs, even when scaled to large datasets like ImageNet. Using TrigFlow, the team successfully trained a 1.5 billion-parameter model with a two-step sampling process that reached high-quality scores at lower computational costs than existing diffusion methods.
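To make the two-step sampling process concrete, here is a minimal scalar sketch. Several things are assumptions for illustration rather than details from this article: the consistency parameterization `f(x_t, t) = cos(t) * x_t - sin(t) * sigma_d * F(x_t / sigma_d, t)`, the intermediate time `T_MID = 1.1`, the value of `SIGMA_D`, and the helper `make_oracle_F`, a hypothetical stand-in for a trained network that is exact only when all data sits at a single point `mu`.

```python
import math
import random

SIGMA_D = 0.5          # assumed data standard deviation (hypothetical value)
T_MAX = math.pi / 2    # assumed time range [0, pi/2]; pi/2 is pure noise
T_MID = 1.1            # assumed intermediate time for the second step

def consistency_fn(F, x_t, t):
    """Map a noisy point x_t at time t directly to a data estimate."""
    return math.cos(t) * x_t - math.sin(t) * SIGMA_D * F(x_t / SIGMA_D, t)

def two_step_sample(F, rng):
    # Step 1: start from pure noise and jump straight to a data estimate.
    x = SIGMA_D * rng.gauss(0.0, 1.0)
    x0 = consistency_fn(F, x, T_MAX)
    # Step 2: re-noise the estimate to T_MID, then jump to data again.
    z = SIGMA_D * rng.gauss(0.0, 1.0)
    x_mid = math.cos(T_MID) * x0 + math.sin(T_MID) * z
    return consistency_fn(F, x_mid, T_MID)

def make_oracle_F(mu):
    """Hypothetical 'perfect network' for data concentrated at the point mu."""
    def F(x_scaled, t):
        x_t = x_scaled * SIGMA_D
        return (math.cos(t) * x_t - mu) / (math.sin(t) * SIGMA_D)
    return F

rng = random.Random(0)
sample = two_step_sample(make_oracle_F(1.5), rng)
print(sample)
```

With the oracle, both steps land on `mu` up to floating-point error; a real model would replace `F` with a neural network operating on image tensors, so the whole sampler costs two network evaluations.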

At the core of TrigFlow is a mathematical redefinition that simplifies the probability flow ODE (Ordinary Differential Equation) used in the sampling process. This improvement incorporates adaptive group normalization and an updated objective function that uses adaptive weighting. These features help stabilize the training process, allowing the model to operate continuously without discretization errors that often compromise sample quality. TrigFlow’s approach to time-conditioning within the network architecture reduces the reliance on complex calculations, making it feasible to scale the model. The restructured training objective progressively anneals critical terms in the model, enabling it to reach stability faster and at an unprecedented scale.
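For readers who want the equations behind this description, the following summarizes the TrigFlow formulation as we understand it from the paper; the notation ($\sigma_d$ for the data standard deviation, $F_\theta$ for the network, $c_{\text{noise}}$ for the time embedding input) is our transcription and should be checked against the paper itself.

$$
\begin{aligned}
x_t &= \cos(t)\,x_0 + \sin(t)\,z, \qquad z \sim \mathcal{N}(0, \sigma_d^2 I),\quad t \in \left[0, \tfrac{\pi}{2}\right],\\
v_t &= \cos(t)\,z - \sin(t)\,x_0,\\
\frac{\mathrm{d}x_t}{\mathrm{d}t} &= \sigma_d\,F_\theta\!\left(\frac{x_t}{\sigma_d},\, c_{\text{noise}}(t)\right),\\
f_\theta(x_t, t) &= \cos(t)\,x_t - \sin(t)\,\sigma_d\,F_\theta\!\left(\frac{x_t}{\sigma_d},\, c_{\text{noise}}(t)\right).
\end{aligned}
$$

The first line is the noising process, the second is the velocity target that the network is trained to predict, the third is the resulting probability flow ODE, and the fourth is the consistency model parameterization. Setting $t = 0$ gives $f_\theta(x_0, 0) = x_0$, so the boundary condition holds without any extra coefficients, which is part of what makes the formulation simpler than earlier parameterizations.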

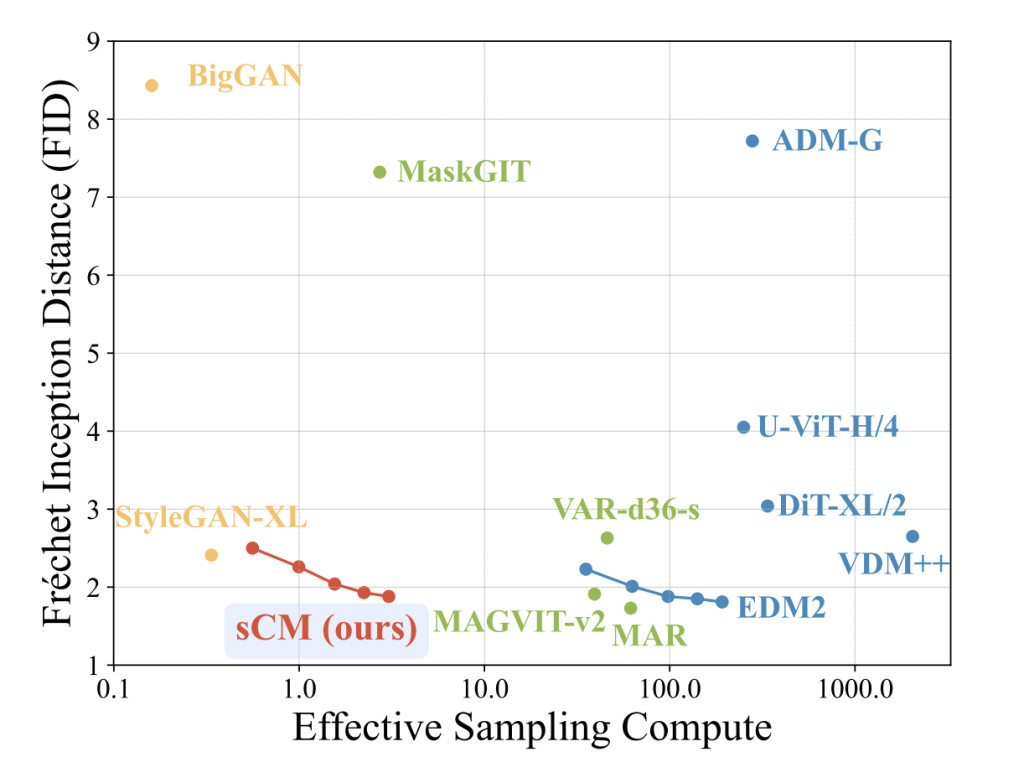

The model, named “sCM” for simple, stable, and scalable Consistency Model, demonstrated results comparable to state-of-the-art diffusion models. For instance, it achieved a Fréchet Inception Distance (FID) of 2.06 on CIFAR-10, 1.48 on ImageNet 64×64, and 1.88 on ImageNet 512×512 using only two sampling steps, narrowing the FID gap with the best existing diffusion models to within 10%, even though those models require many more sampling steps. This marks a substantial increase in sampling efficiency. The TrigFlow framework represents an important advance in model scalability and computational efficiency.

This research demonstrates how the computational inefficiencies and limitations of traditional diffusion models can be addressed through a carefully structured continuous-time model. By implementing TrigFlow, the researchers stabilized continuous-time CMs and scaled them to larger datasets and parameter sizes with minimal computational trade-offs.

The key takeaways from the research include:

Stability in Continuous-Time Models: TrigFlow introduces stability to continuous-time consistency models, a historically challenging area, enabling training without frequent destabilization.

Scalability: The model successfully scales up to 1.5 billion parameters, the largest among its peers for continuous-time consistency models, allowing its use in high-resolution data generation.

Efficient Sampling: With just two sampling steps, the sCM model reaches FID scores comparable to models requiring extensive compute resources, achieving 2.06 on CIFAR-10, 1.48 on ImageNet 64×64, and 1.88 on ImageNet 512×512.

Computational Efficiency: Adaptive weighting and simplified time conditioning within the TrigFlow framework make the model resource-efficient, reducing the demand for compute-intensive sampling, which may improve the applicability of diffusion models in real-time and large-scale settings.
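The adaptive weighting mentioned above can be sketched as follows. This is one common form of learned, uncertainty-style weighting, `exp(w) / D * error - w` with a learnable log-weight `w` per time step; treating it as the form used in the paper is an assumption, and the raw error values below are hypothetical numbers chosen only to show the effect.

```python
import math

def adaptive_weighted_loss(sq_error: float, log_weight: float, dim: int) -> float:
    """Loss for one time step: exp(w) / D * ||error||^2 - w, with learnable w."""
    return math.exp(log_weight) / dim * sq_error - log_weight

def optimal_log_weight(sq_error: float, dim: int) -> float:
    """Closed-form minimizer over w: exp(w) = D / ||error||^2."""
    return math.log(dim / sq_error)

dim = 4  # hypothetical data dimensionality
raw_errors = {"t=0.2": 0.08, "t=0.8": 2.0, "t=1.4": 40.0}  # hypothetical values

scaled_errors = {}
for label, err in raw_errors.items():
    w = optimal_log_weight(err, dim)
    # At the optimal weight, the scaled error term is the same for every t.
    scaled_errors[label] = math.exp(w) / dim * err
    print(f"{label}: raw error = {err:>5}, log-weight = {w:+.3f}, "
          f"scaled error = {scaled_errors[label]:.3f}")
```

The design point is that the learned weights equalize the loss contribution across time steps, so no single noise level dominates the gradient even when raw errors differ by orders of magnitude.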

In conclusion, this study represents a pivotal advancement in generative model training, addressing stability, scalability, and sampling efficiency through the TrigFlow framework. The OpenAI team’s TrigFlow architecture and sCM model effectively tackle the critical challenges of continuous-time consistency models, presenting a stable and scalable solution that rivals the best diffusion models in performance and quality while significantly lowering computational requirements.

The post OpenAI Stabilizing Continuous-Time Generative Models: How TrigFlow’s Innovative Framework Narrowed the Gap with Leading Diffusion Models Using Just Two Sampling Steps appeared first on MarkTechPost.
