Federated learning is a distributed approach to machine learning that protects user privacy by keeping data on client devices rather than centralizing it on a server. The technique has been applied successfully in domains that handle sensitive data, such as healthcare and banking. In classical federated learning, each client updates all model parameters locally in every training round. When local training finishes, the clients send these parameters to a central server, which averages them to produce a new global model. The server then sends this model back to the clients, and the next training round begins.
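The server-side averaging step can be sketched in a few lines of Python. This is a minimal illustration, not code from the paper: the `Model` type, the `average_models` function, and the toy layer names are hypothetical, and the average is unweighted, whereas practical systems often weight each client by its dataset size.

```python
from typing import Dict, List

# Assumption: a model is a mapping from layer name to a flat list of weights.
Model = Dict[str, List[float]]


def average_models(client_models: List[Model]) -> Model:
    """Average every layer element-wise across clients (unweighted)."""
    n = len(client_models)
    global_model: Model = {}
    for layer in client_models[0]:
        # zip groups the i-th weight of this layer from every client.
        columns = zip(*(m[layer] for m in client_models))
        global_model[layer] = [sum(col) / n for col in columns]
    return global_model


# Two hypothetical clients that each trained ALL layers locally.
clients = [
    {"conv1": [1.0, 2.0], "fc": [0.0]},
    {"conv1": [3.0, 4.0], "fc": [2.0]},
]
new_global = average_models(clients)
print(new_global)  # {'conv1': [2.0, 3.0], 'fc': [1.0]}
```

Every layer is overwritten by the average in every round, which is the full-update behavior the rest of the article contrasts with partial updates.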

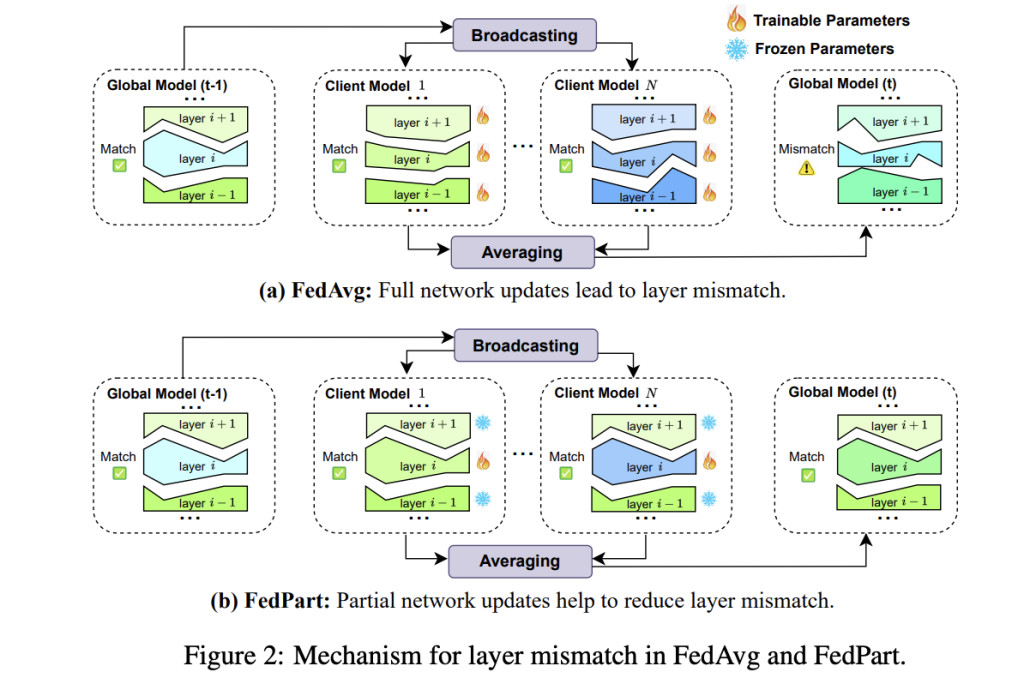

The full-update method lets every layer learn from a variety of client data, but it also causes a persistent problem called layer mismatch. Averaging disrupts the balance that the layers of each local model have established with one another, so after each round of parameter averaging the layers of the global model may no longer cooperate well. As a result, the global model’s performance can suffer and convergence slows, meaning that more rounds are needed to reach a good solution.

FedPart was developed to address this issue. Rather than updating all layers, FedPart updates only one layer or a small subset of layers in each training round. Restricting updates in this way reduces layer mismatch, because each trainable layer is more likely to stay aligned with the rest of the model. This targeted strategy keeps the layers working together more smoothly, which improves overall model performance.

FedPart uses two strategies to keep knowledge acquisition effective: sequential updating, which trains layers in a fixed order from the shallowest to the deepest, and a multi-round cycle, which repeats this procedure over several training rounds. With this cycling, shallow layers capture simple features while deeper layers pick up more intricate patterns, which preserves each layer’s functional role.
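The sequential, cyclic schedule and the resulting partial aggregation might look roughly like the sketch below. It assumes exactly one trainable layer per round and an unweighted average; the function names, layer names, and weights are hypothetical and are not taken from the FedPart code base.

```python
from typing import Dict, List

Model = Dict[str, List[float]]


def layer_for_round(round_idx: int, layer_order: List[str]) -> str:
    """Pick one trainable layer per round, cycling from shallow to deep."""
    return layer_order[round_idx % len(layer_order)]


def partial_aggregate(
    global_model: Model, client_updates: List[List[float]], layer: str
) -> Model:
    """Average only the selected layer; all other layers keep their global values."""
    n = len(client_updates)
    updated = {name: list(weights) for name, weights in global_model.items()}
    updated[layer] = [sum(col) / n for col in zip(*client_updates)]
    return updated


layer_order = ["conv1", "conv2", "fc"]  # hypothetical layers, shallowest first
schedule = [layer_for_round(r, layer_order) for r in range(6)]
print(schedule)  # ['conv1', 'conv2', 'fc', 'conv1', 'conv2', 'fc']

global_model = {"conv1": [0.0, 0.0], "conv2": [1.0], "fc": [5.0]}
# In round 0, two clients trained only "conv1" and send back just that layer.
round0 = partial_aggregate(global_model, [[1.0, 2.0], [3.0, 4.0]], schedule[0])
print(round0)  # {'conv1': [2.0, 3.0], 'conv2': [1.0], 'fc': [5.0]}
```

Because the untouched layers are identical on every client, the selected layer is always trained against the same surrounding model, which is the intuition behind the reduced layer mismatch described above.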

Experiments show that FedPart not only improves the global model’s accuracy and convergence speed but also substantially reduces the communication and computation load on client devices. This efficiency makes FedPart particularly well suited to edge devices, where network bandwidth is often limited and resources are scarce. Taken together, these results position FedPart as a strong improvement over conventional federated learning for distributed, privacy-sensitive applications.
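To see where the communication savings come from, a back-of-the-envelope count of uploaded parameters is enough. The layer sizes below are invented for illustration, and the sketch assumes one layer is uploaded per round; it does not reproduce any figure reported in the paper.

```python
from typing import Dict


def upload_per_cycle(layer_sizes: Dict[str, int], partial: bool) -> int:
    """Parameters one client uploads over a cycle of len(layer_sizes) rounds."""
    total = sum(layer_sizes.values())
    rounds = len(layer_sizes)
    # Partial updates send each layer once per cycle;
    # full updates send every layer in every round.
    return total if partial else total * rounds


layer_sizes = {"conv1": 1_000, "conv2": 10_000, "fc": 50_000}  # hypothetical
full = upload_per_cycle(layer_sizes, partial=False)
part = upload_per_cycle(layer_sizes, partial=True)
print(full, part)  # 183000 61000
```

Under these assumptions, a client uploads 1/L of the full-update volume over a cycle of L rounds, where L is the number of layers. Actual savings depend on how many layers are trained per round and on how many rounds each method needs to converge.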

The team has summarized their primary contributions as follows.

The study introduces FedPart, a technique that updates only specific layers in each round, together with strategies for selecting which layers to train, in order to combat layer mismatch.

FedPart’s convergence rate is analyzed in a non-convex setting, demonstrating potential advantages over conventional full-network updates.

Extensive experiments demonstrate FedPart’s performance improvements. Additional ablation and visualization studies shed light on how FedPart improves effectiveness and convergence.

The post FedPart: A New AI Technique for Enhancing Federated Learning Efficiency through Partial Network Updates and Layer Selection Strategies appeared first on MarkTechPost.
