Purge is a housekeeping operation in a MySQL database. The InnoDB storage engine relies on it to clean up undo logs and delete-marked table records that are no longer needed for multiversion concurrency control (MVCC) or rollback operations. While our applications pursue a database design that aims to provide the highest write throughput upfront, it’s equally important to confirm that purge can proceed in a timely fashion in the background. The imbalance between a large amount of data changes and a slow advancement of purge can cause poor database performance.

This post outlines a set of design and tuning strategies for a high-speed purge in an Amazon Relational Database Service (Amazon RDS) for MySQL DB instance and Amazon Aurora MySQL-Compatible Edition DB cluster. It starts with a brief introduction to how purge works by harnessing our understanding of MySQL database internals. Then, it discusses common factors that pose challenges to purge and optimization techniques you can use. This post is based on MySQL 8.0 and most recommendations are applicable to a general MySQL database. It also gives specific considerations to Amazon managed database services.

Understand how purge works

Like many other mainstream relational database management systems (RDBMS), MySQL has implemented MVCC to enable concurrent read and write operations to access data. The core idea of MVCC is having the database engine create a new version of data in the table when a transaction updates a table record. The old data version isn’t physically removed but marked as deleted. Queries can choose an appropriate data version to construct their own view of the database at a desired isolation level. A great benefit of doing so is to avoid the blocking situation between reads and writes. Reads can always access old data versions while writes are working on new versions. The capability of keeping multiple versions of a table record also makes it convenient for a database engine to perform rollback operations either within a transaction or during crash recovery.

As a scalable solution, MVCC has an inherent constraint: the delete-marked table records need to be garbage collected. Various database engines are equipped with their own flavors of version tracking and garbage collection mechanism. A common challenge can come when a high volume of transactions produces old data versions too fast for garbage collection to catch up, resulting in a huge backlog of old data versions in the table structure. On the surface this directly causes unexpected growth in space usage. Underneath that, extra I/O operations need to be taken to perform read operations by checking the old versions. This increased I/O consumption competes for system resources, potentially degrading overall database performance.

In a MySQL database, InnoDB uses undo logs as the key data structure to support MVCC and rollback operations. When a table record is changed, its old data version is stored in an undo log. All undo logs related to the same table record are linked together to form a version chain. Purge, as the flip side of the coin, is the garbage collection process. It’s responsible for cleaning up not only undo logs, but also the delete-marked table records that they refer to. In essence, purge is considered as an integral part of the InnoDB transaction system.

The following graph illustrates a high-level design idea and the three-step workflow of InnoDB purge.

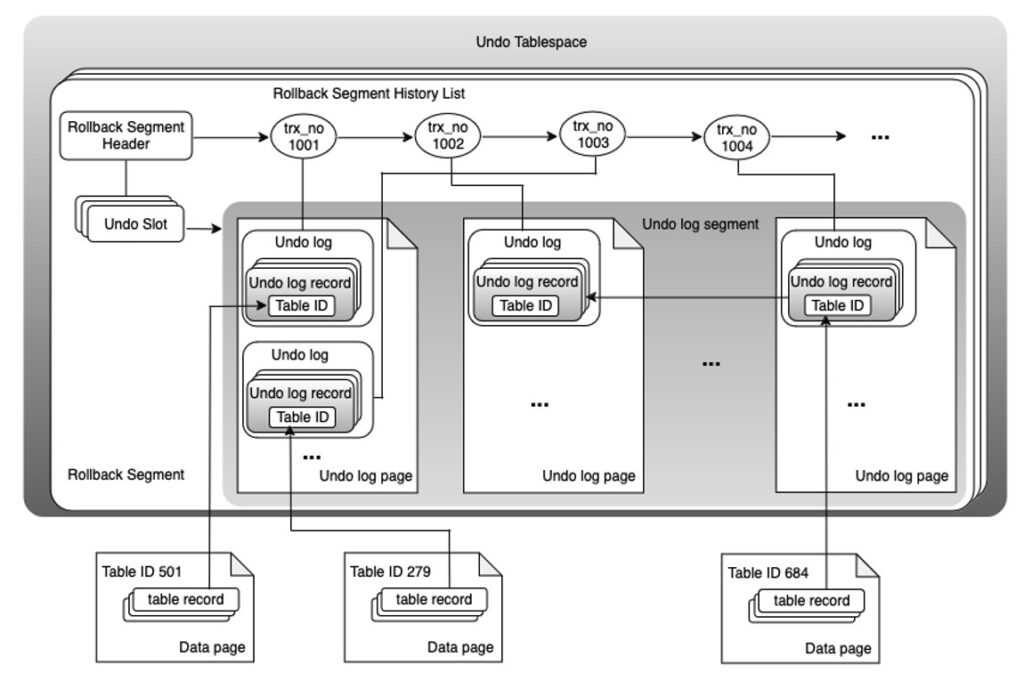

When a transaction starts, it allocates a rollback segment. A rollback segment is composed of many undo log pages in an undo tablespace. Like data pages where table records are stored, undo log pages need to be loaded into an InnoDB buffer pool to read or write.

When a transaction changes table data, it creates undo log records. An undo log record contains relevant information needed to roll back the change made to a table record, for example, table ID, clustered index, and the old data version before the change. There are different types of undo log record for INSERT, DELETE, or UPDATE statements. They will be grouped into separate undo logs based on whether they need to be purged later.

During commit, a transaction gets a transaction serialization number (trx_no) and writes it to its undo logs. If there are undo logs that need to be purged, they are added to the rollback segment history list that is ordered by trx_no. The InnoDB transaction system uses trx_no to track the sequence of all committed transactions. In that sense, the history list is a database-wide global list.

Purge is a multithreaded operation. The number of purge threads is set using innodb_purge_threads. Usually, there is one purge coordinator and several worker threads. These threads continuously repeat the purge operation, which involves three steps.

The purge coordinator thread checks if there are undo logs ready to be purged. If it finds any, it fetches a batch, parses the undo records, and sorts them by table ID. Then, it assigns each group to a worker thread for processing.

Purge worker threads process undo log records by table ID in parallel. Using the information in undo log records, they identify and remove delete-marked records, including those in clustered indexes, secondary indexes, and BLOB columns. If a data page has too little data after cleanup, it’s merged with another page to optimize storage.

After several batches of undo logs are processed, the purge coordinator thread removes them from rollback segment history list to free up rollback segments. MySQL provides an option called innodb_undo_log_truncate to automatically truncate the undo This is to shrink the physical storage space when a undo tablespace exceeds the size limit specified by innodb_max_undo_log_size and all the rollback segments contained inside are freed. After this step is completed, the purge coordinator thread will start another purge cycle.

The automatic undo tablespace truncation option is available in Aurora MySQL-Compatible version 3.06.0 and above.

Balance read and write workload

When a transaction uses data manipulation language (DML) statements such as INSERT, DELETE, or UPDATE to modify records, InnoDB creates different types of undo log records. However, not all types of undo log records need to be purged. Undo logs from INSERT statements don’t contain old data versions, so they are removed immediately after the transaction commits and not added to the rollback segment history list.

Undo log records produced by DELETE and UPDATE statements store the old data versions before table records are deleted or updated and, therefore, are the target of purge operations. Other concurrent SELECT queries or open transactions might need to access them to construct a consistent read for the purpose of MVCC. InnoDB uses read view as a mechanism to track the visibility of undo logs for active queries and transactions. It’s also used by the purge coordinator thread to decide if it’s safe to remove an undo log.

Database workloads, which both create and use undo logs, directly affect how purge threads operate. Undo logs are purged from the rollback segment history list in ascending order of trx_no. If an undo log can’t be purged, it’ll block other logs with higher trx_no from being purged, even if they belong to different tables. That is, purge is a database-wide global operation and one long running query or transaction will block the cleanup of undo records for all transactions that started after the long running one. In a MySQL database where purge is always of concern, it’s recommended you review the timing, concurrency, and transaction characteristics of your database workload.

One strategy is to choose a DROP PARTITION or DROP TABLE statement over DELETE because they don’t generate undo logs. Purge can be avoided if you partition a table so that you can remove a subset of data through DROP PARTITION, as the following diagram illustrates. When you want to delete a large amount of data from a table, it’s always worth considering the approach of creating a new table, copying data over, and dropping the old table.

Another strategy is to avoid long-running SELECT queries during a high volume of DELETE or UPDATE statements because their read views can hold undo logs and block purge. You can use max_execution_time to automatically stop SELECT queries that run too long by setting a maximum time limit. You can also switch the transaction isolation level from REPEATABLE READ to READ COMMITTED. This reduces the scope of the read view created by SQL statements, lowering the chances of blocking undo logs from being purged.

Aurora MySQL is a clustered database that consists of one or more DB instances that all use the same shared cluster storage volume. The implementation of InnoDB purge is influenced by its clustering topology. When you use an Aurora MySQL DB cluster, it has the following differences when compared with an Amazon RDS for MySQL DB instance:

The concept that purge is a database-wide global operation is consistent throughout the database architecture of Aurora MySQL. Purge runs on the primary (writer) DB instance. However, it can be blocked by SELECT queries on Aurora replica DB instances because they create read views. In an Aurora global database, SELECT queries on the secondary DB clusters also have the potential to block purge on the primary DB cluster.

On Aurora replica DB instances, READ COMMITTED isolation level is optimized to reduce the impact of long-running SELECT queries on purge threads and has Aurora MySQL specific behavior. While the query results might differ slightly from those of the primary DB instance using MySQL’s native READ COMMITTED level, it still complies with the ANSI SQL standard. You can enable this feature by setting aurora_read_replica_read_committed to be ON in either DB cluster parameter group or at session level.

Optimize table and index structure

In addition to undo logs, table records that are marked as deleted in an InnoDB table are also the target of purge operation. In a general sense, table records refer to various data structures that store or point to table row data, for example, clustered index, secondary index, and variable-length columns stored externally according to the InnoDB row format. Because undo log records only contain table ID and clustered index, purge threads will need to identify other relevant delete-marked table records and remove them if they exist. This can become the most heavy-duty part of purge operation.

Consider this example of how a secondary index impacts purge operation. The following graph compares Amazon CloudWatch metric RollbackSegmentHistoryListLength of two Aurora MySQL DB clusters. Both clusters have an r7g.2xlarge primary (writer) DB instance and use the Sysbench oltp_write_only.lua prepare workload to load one table of 80 GB data. One cluster (Cluster-A) runs the oltp_update_non_index.lua workload to update a column that isn’t covered by a secondary index. The other cluster (Cluster-B) runs the oltp_update_index.lua workload to update a column that has a secondary index built on top of it. Both Sysbench workloads use –rate to generate transactions at the same rate.

You can observe the following:

DMLThroughput shows the same pattern on both clusters, indicating they run a similar amount of UPDATE statements.

RollbackSegmentHistoryListLength goes above three million at peak time on the cluster that runs the oltp_update_index.lua workload. However, it stays close to zero on the other cluster. This shows purge threads can slow down substantially when they process undo logs that involve a secondary index. Using a secondary index isn’t necessarily a problem. Both clusters have the same table structure and both tables have a secondary index. Secondary indexes create a challenge for purge threads only when they are modified by the database workload.

The vertical line at 11:52 shows when we dropped the secondary index on Cluster-B. Dropping the secondary index immediately speeds up the purge operation because purge no longer has to find and clean up records in the secondary index. You can see that RollbackSegmentHistoryListLength drops to zero in a few minutes after dropping the secondary index. You can use the schema_unused_indexes view in SYS schema to identify secondary indexes that aren’t being used and evaluate if they are necessary.

Starting from MySQL 8.0, purge worker threads are designed to work on different tables in parallel to clean up delete-marked table records. The efficiency of parallelism depends on a couple of factors. The following graph shows an example of the correlation between the number of purge worker threads and the number of tables that have their undo logs waiting to be purged.

The data is collected from another test on the previous two Aurora MySQL DB clusters. One cluster (Cluster-A) continues using one table of 80 GB data, and the other cluster (Cluster-B) has a total of 80 GB data spreading out to 10 tables of 8 GB data each. Both clusters run the Sysbench oltp_update_index.lua workload and use –rate to generate transactions at the same rate. Because DB instance in both clusters is an r7g.2xlarge, innodb_purge_threads is set to three by default. That is, two purge worker threads (and the purge coordinator) can run simultaneously.

You can observe the following:

DMLThroughput shows the same pattern on both clusters, indicating they run a similar number of UPDATE statements.

RollbackSegmentHistoryListLength reaches around 500K at peak time on Cluster-B that has 10 tables, compared with three million on Cluster-A that is loaded with one table. The faster purge speed comes from two factors: two worker threads working in parallel and smaller size table for each worker thread to work on. Only one worker thread can purge one table at a time. To take full advantage of parallelism, it’s ideal to spread out data changes evenly to a greater or equal number of tables than purge worker threads.

innodb_purge_threads is a factor that contributes to the speed of purge operation. It might help speed up purge threads when there are other factors in play.

Choose the right instance class

By design, Purge is nonintrusive. Purge threads run in the background and operate asynchronously to user transactions. It’s expected they consume the least possible system resources to get their job done within a reasonable delay. MySQL defines the maximum value of innodb_purge_threads as 32. That is, you can configure up to 32 purge threads on a MySQL database. Such a setting isn’t intended to provide purge threads a competitive advantage when compared with max_connections, which allows thousands of concurrent user connections to land in a busy production database.

When purge threads process undo logs or delete-marked table records, they need to read data from undo log pages and data pages in InnoDB buffer pool. When those data pages are not in the buffer pool, they will issue I/O calls to fetch them from storage. The system resource, such as CPU, memory, and IO bandwidth of an RDS DB instance, can significantly impact the speed of purge operation.

A high-speed purge operation needs capacity planning not only for purge threads but also for overall database workload as well. On an DB instance that is under-resourced or experiencing heavily utilized system resource from user transactions, purge threads will slow down due to resource contention and purge lag can show up as a surprise. The following graphs come from a test conducted on an Aurora MySQL DB cluster that has a primary (writer) DB instance on r7g.2xlarge, to show an example of this type of situation.

The test starts with loading two different Sysbench datasets in a sequence. First, the cluster is loaded with 10 Sysbench tables with 8 GB data each, and secondly, it’s loaded with another table of 34 GB data. After data load, the test runs oltp_read_only.lua workload against the 34 GB table. This 34 GB table data is fully cached in the InnoDB buffer pool because innodb_buffer_pool_size is set to 42 GB by default. At the same time, most data of the other 10 tables are evicted out of buffer pool. Before the read only workload completes, the test kicks off oltp_update_index.lua workload on the other 10 tables.

You can observe the following:

SelectThroughput and DMLThroughput show that the two different types of workloads compete for the InnoDB buffer pool to load their own dataset.

RollbackSegmentHistoryListLength reaches over 5 million at the end of oltp_update_index.lua workload, which isn’t expected when we compare with the previous test. In that test, we ran the same oltp_update_index.lua workload at a higher rate (15K vs 10K) and only reached a maximum RollbackSegmentHistoryListLength value of about 3.2 million.

BufferCacheHitRatio has a sharp drop when oltp_update_index.lua workload starts due to the contention on buffer pool. It continues to be low even after the workload is completed, indicating purge threads is bottlenecked in the I/O path when they fetch undo logs or table records into buffer pool.

Adequate memory allocation to the InnoDB buffer pool can significantly affect the speed of purge operation. When the necessary undo logs or table data aren’t in the buffer pool, the performance of purge threads is correlated with IO latency.

In a MySQL database, you can configure the number of purge threads by changing the innodb_purge_threads parameter. If you use RDS for MySQL, the default value is 1, the same as MySQL Community Edition and you can change it in the DB parameter group. If you use Aurora MySQL, the default is a formula that increases as the size of the DB instance increases and you can change it in the Cluster parameter group. The following table lists the effective values for purge related settings based on the default formula for r7g instance type.

RDS instance type

vCPU

Memory (GiB)

innodb_buffer_pool_size (GiB)

innodb_purge_threads

innodb_purge_batch_size

db.r7g.large

2

16

7.76

1

600

db.r7g.xlarge

4

32

19.36

1

600

db.r7g.2xlarge

8

64

42.59

3

1800

db.r7g.4xlarge

16

128

89.11

3

1800

db.r7g.8xlarge

32

256

182.06

6

3600

db.r7g.12xlarge

48

384

275.11

12

7200

db.r7g.16xlarge

64

512

368.13

12

20000

With an Aurora Serverless v2 instance type, this setting is configured automatically as the instance scales up or down and cannot be modified by a parameter group.

Monitoring and alarm

The well-known metric to monitor InnoDB purge is the length of rollback segment history list, also called purge lag, which tells how many undo logs are waiting to be purged. In a MySQL database, you can run SHOW ENGINE INNODB STATUS to check History list length directly. Aurora MySQL provides RollbackSegmentHistoryListLength as a CloudWatch metric in all 3.0 releases. Amazon RDS for MySQL provides metric trx_rseg_history_len in Performance Insights, and you can publish it to CloudWatch.

It’s a good practice to set up a CloudWatch alarm on this metric to detect the situation where purge lag is far behind and can cause database performance issues. You can set the alarming threshold based on the historical normal value that your database has ever reached and action items that you’ll take when the alarm triggers.

If you notice that purge lag is high due to a database workload that generates too much undo logs for purge threads to process quickly enough, increase the size of your database instance to allocate more system resources for purge threads. Or you can use database sharding architecture to spread workload across multiple database shards. MySQL also provides innodb_max_purge_lag to set a threshold for the length of rollback segment history list. When it’s breached, an internal throttling kicks in for INSERT, DELETE, and UPDATE statements by introducing a delay up to innodb_max_purge_lag_delay. You can test these options to verify which one best fits your use cases.

Summary

Improving InnoDB purge efficiency involves a combination of workload optimization, database capacity planning, and configurations. This post provides a guideline to help you to do that in an RDS for MySQL DB instance, Aurora MySQL DB cluster, or a MySQL database of other flavors.

About the author

Lei Zeng is a Database Engineer at AWS.

Source: Read More