Amazon Aurora PostgreSQL-Compatible Edition supports the pgvector extension, which lets you store and query vector embeddings directly in your relational database. Amazon Bedrock is a fully managed AWS service that offers a choice of foundation models (FMs), including Amazon Titan Text Embeddings. By combining Aurora with Amazon Bedrock, you can generate embeddings, store them alongside your relational data, and then analyze and visualize them, in particular to explore semantic similarity.

In this post, we show how you can visualize vector embeddings and explore semantic similarities.

FMs such as amazon.titan-embed-text-v1 accept up to 8,000 tokens of input and output a vector of 1,536 dimensions. Because a plot can show at most three spatial dimensions, you can't visualize these embeddings directly. You first need to project the high-dimensional embeddings into a lower-dimensional space. Techniques for this dimensionality reduction include principal component analysis (PCA), linear discriminant analysis (LDA), and t-distributed stochastic neighbor embedding (t-SNE).

In this post, we use PCA for dimensionality reduction. PCA is a well-known dimensionality reduction technique that transforms high-dimensional data into a lower-dimensional space while preserving as much of the original variance as possible. By projecting data onto orthogonal axes called principal components, PCA enables you to visualize the underlying structure of the data in a more manageable form.
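To make the idea of projecting onto principal components concrete, the following is a minimal sketch of PCA written with NumPy's singular value decomposition. It is an illustration only, not the code used later in this post; the function name `pca_project` and the random toy data are assumptions made for the example.

```python
import numpy as np

def pca_project(data, n_components=3):
    """Project the rows of `data` onto the top principal components."""
    data = np.asarray(data, dtype=float)
    centered = data - data.mean(axis=0)  # PCA operates on mean-centered data
    # Rows of vt are orthonormal principal axes, ordered by variance explained
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    explained = singular_values**2 / np.sum(singular_values**2)
    return centered @ components.T, components, explained[:n_components]

rng = np.random.default_rng(0)
toy_embeddings = rng.normal(size=(60, 16))  # toy stand-in for 60 embeddings
projected, components, explained = pca_project(toy_embeddings)
print(projected.shape)   # (60, 3)
print(explained.sum())   # fraction of total variance kept by three components
```

The explained-variance ratios indicate how much of the original structure survives the projection. A low total suggests the three-dimensional view hides a lot of detail.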

Solution overview

The following steps provide a high-level overview of how to perform PCA with Amazon Bedrock and Aurora:

Prepare your dataset for generating vector embeddings. In this post, we use a sample dataset with product categories.

Generate vector embeddings of product descriptions using the Amazon Bedrock FM amazon.titan-embed-text-v1.

Store the product data and vector embeddings in an Aurora PostgreSQL database with the pgvector extension.

Import the libraries needed for PCA.

Convert high-dimensional vector embeddings into three-dimensional embeddings using PCA.

Generate a scatter plot of the three-dimensional embeddings and visualize semantic similarities in the data.

Prerequisites

To complete this solution, you need the following:

An Aurora PostgreSQL-Compatible cluster created in your AWS account.

Aurora PostgreSQL-Compatible credentials in AWS Secrets Manager. For more information, see Supported Regions and Aurora DB engines for Secrets Manager integration.

Model access enabled in Amazon Bedrock for the Amazon Titan Embeddings G1 – Text model.

An Amazon SageMaker notebook instance for running the Python code in a Jupyter notebook. For more details, see Create a Jupyter notebook in the SageMaker notebook instance.

Basic knowledge of pandas, NumPy, plotly, and scikit-learn. These are essential Python libraries for data analytics and machine learning (ML).

Implement the solution

With the prerequisites in place, complete the following steps to implement the solution:

Sign in to the Jupyter notebook instance with a Python kernel. In this example, we use the conda_python3 kernel on a notebook instance created on SageMaker.

Install the required binaries and import the libraries:
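The exact install commands are not reproduced here. As a hedged sketch, the following check reports which libraries still need to be installed before you continue. The module list is an assumption based on the libraries named in this post, plus `boto3` and `psycopg2` for calling Amazon Bedrock and connecting to PostgreSQL.

```python
import importlib.util

# Assumed module names; the matching pip packages would typically be
# boto3, pandas, numpy, scikit-learn, plotly, and psycopg2-binary.
REQUIRED_MODULES = ["boto3", "pandas", "numpy", "sklearn", "plotly", "psycopg2"]

def missing_modules(module_names):
    """Return the modules from `module_names` that cannot be imported."""
    return [name for name in module_names
            if importlib.util.find_spec(name) is None]

missing = missing_modules(REQUIRED_MODULES)
if missing:
    print("Install before continuing:", ", ".join(missing))
else:
    print("All required libraries are available.")
```

In a notebook, you can install anything reported as missing with `%pip install` followed by the package names.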

Import the sample product catalog data:
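The sample data file and its location are not given here, so the following sketch builds a small pandas DataFrame inline from the first five rows described in this post. The descriptions are kept truncated exactly as they appear; the variable names are assumptions.

```python
import pandas as pd

# First five sample rows; descriptions are left truncated ("…") as in the post
sample_rows = [
    ("Fruit", "Apple", "Juicy and crisp apple, perfect for snacking or…"),
    ("Fruit", "Banana", "Sweet and creamy banana, a nutritious addition…"),
    ("Fruit", "Mango", "Exotic and flavorful mango, delicious eaten fr…"),
    ("Fruit", "Orange", "Refreshing and citrusy orange, packed with vit…"),
    ("Fruit", "Pineapple", "Fresh and tropical pineapple, known for its sw…"),
]

df = pd.DataFrame(sample_rows, columns=["category", "name", "description"])
print(df.head())
```

With the full dataset, you would more likely load a file, for example with `pd.read_csv`, and get the same three columns.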

In this sample data, we have 60 product samples with different categories like Fruit, Sport, Furniture, and Electronics. The resulting table looks like the following example.

| | category | name | description |
|---|---|---|---|
| 0 | Fruit | Apple | Juicy and crisp apple, perfect for snacking or… |
| 1 | Fruit | Banana | Sweet and creamy banana, a nutritious addition… |
| 2 | Fruit | Mango | Exotic and flavorful mango, delicious eaten fr… |
| 3 | Fruit | Orange | Refreshing and citrusy orange, packed with vit… |
| 4 | Fruit | Pineapple | Fresh and tropical pineapple, known for its sw… |

Use the Amazon Bedrock amazon.titan-embed-text-v1 model to generate vector embeddings of the product descriptions.

The first step is to create an Amazon Bedrock runtime client. You use this client later to invoke the text embeddings model. See the following code:
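A minimal sketch of creating the client with boto3 follows. The function name `create_bedrock_client` and the default Region are assumptions; use the Region where you enabled model access.

```python
def create_bedrock_client(region_name="us-east-1"):
    """Create an Amazon Bedrock runtime client.

    The default Region is an assumption for this sketch.
    """
    # Imported inside the function so this definition loads even before
    # boto3 is installed in the notebook environment.
    import boto3

    return boto3.client("bedrock-runtime", region_name=region_name)
```

The `bedrock-runtime` service name is the one used for model invocation; the separate `bedrock` client covers management operations such as listing models.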

Create a function to generate text embeddings. In this example, we pass the Amazon Bedrock client and text data to the function:
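One way such a function might look is sketched below. It assumes the client is a boto3 Bedrock runtime client and that the Titan text embeddings model takes an `inputText` field and returns an `embedding` field; the function name and the default `model_id` are choices made for this example.

```python
import json

def generate_embeddings(bedrock_client, text,
                        model_id="amazon.titan-embed-text-v1"):
    """Return the embedding vector for `text` as a list of floats."""
    response = bedrock_client.invoke_model(
        modelId=model_id,
        body=json.dumps({"inputText": text}),
        accept="application/json",
        contentType="application/json",
    )
    # The response body is a stream containing JSON with an "embedding" key
    payload = json.loads(response["body"].read())
    return payload["embedding"]
```

Passing the client in as a parameter keeps the function easy to test with a stub and lets you reuse one client across many calls.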

Generate embeddings for each product description:
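The following sketch applies an embedding function to every description in a DataFrame. To stay runnable offline, it uses a stand-in embedder (`fake_embed`) in place of a real Amazon Bedrock call; the helper name `add_embeddings` and the column names are assumptions.

```python
import pandas as pd

def add_embeddings(df, embed_fn, text_column="description",
                   target_column="embeddings"):
    """Return a copy of `df` with one embedding per row of `text_column`."""
    result = df.copy()
    result[target_column] = result[text_column].apply(embed_fn)
    return result

# Stand-in embedder so the sketch runs without AWS access. In the notebook,
# pass a function that calls your Amazon Bedrock embedding function instead.
def fake_embed(text):
    return [float(len(text)), float(text.count(" "))]

products = pd.DataFrame(
    {"description": ["Juicy and crisp apple", "Sweet and creamy banana"]}
)
products = add_embeddings(products, fake_embed)
print(products)
```

Each description results in one model invocation, so for 60 products this is 60 sequential calls.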

Alternatively, you can load this sample data first into an Aurora PostgreSQL database. Then you can install the Aurora machine learning extension on your Aurora PostgreSQL database and call the aws_bedrock.invoke_model_get_embeddings function to generate embeddings.
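A hedged sketch of this in-database alternative follows. It assumes the `aws_ml` extension name, that the cluster is already permitted to call Amazon Bedrock, and a DB-API cursor that uses `%s` placeholders (as psycopg2 does). The helper name `embed_in_database` is hypothetical.

```python
import json

# Run once per database to install Aurora machine learning (assumed name: aws_ml)
INSTALL_AWS_ML_SQL = "CREATE EXTENSION IF NOT EXISTS aws_ml CASCADE;"

EMBEDDING_SQL = """
SELECT aws_bedrock.invoke_model_get_embeddings(
    model_id     := 'amazon.titan-embed-text-v1',
    content_type := 'application/json',
    json_key     := 'embedding',
    model_input  := %s
) AS embedding;
"""

def embed_in_database(cursor, text):
    """Ask Aurora to call Amazon Bedrock and return the embedding for `text`."""
    cursor.execute(EMBEDDING_SQL, (json.dumps({"inputText": text}),))
    return cursor.fetchone()[0]
```

With this approach, the embeddings are generated by the database itself and never pass through the notebook.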

Create the pgvector extension in your Aurora PostgreSQL database and create a table. Load the product catalog data, including vector embeddings, into this PostgreSQL table:
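The following sketch shows one way to do this through a DB-API connection such as one from psycopg2. The table name `products`, the index name, and the `lists = 10` setting are assumptions for illustration; the column names match the result tables in this post, and the vector size matches the 1,536-dimension model output.

```python
CREATE_SQL = [
    "CREATE EXTENSION IF NOT EXISTS vector;",
    """CREATE TABLE IF NOT EXISTS products (
           id bigserial PRIMARY KEY,
           p_category text,
           p_name text,
           p_embeddings vector(1536),
           p_description text
       );""",
]
INSERT_SQL = """INSERT INTO products
                    (p_category, p_name, p_embeddings, p_description)
                VALUES (%s, %s, %s::vector, %s);"""
INDEX_SQL = """CREATE INDEX IF NOT EXISTS products_embeddings_idx ON products
               USING ivfflat (p_embeddings vector_l2_ops) WITH (lists = 10);"""

def to_vector_literal(embedding):
    """Format a list of numbers in pgvector's text form, e.g. '[0.1,0.2]'."""
    return "[" + ",".join(str(float(x)) for x in embedding) + "]"

def load_products(connection, rows):
    """Load rows of (category, name, embedding, description) into the table."""
    cursor = connection.cursor()
    for statement in CREATE_SQL:
        cursor.execute(statement)
    for category, name, embedding, description in rows:
        cursor.execute(
            INSERT_SQL,
            (category, name, to_vector_literal(embedding), description),
        )
    # Build the IVFFlat index after loading so its lists reflect the data
    cursor.execute(INDEX_SQL)
    connection.commit()
    cursor.close()
```

Sending the embedding as a text literal cast to `vector` avoids needing a client-side type adapter.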

pgvector supports Inverted File with Flat Compression (IVFFlat) and Hierarchical Navigable Small World (HNSW) index types. In this example, we use the IVFFlat index because we’re operating on a small dataset and IVFFlat offers faster build times and uses less memory. To learn more about these index techniques, see Optimize generative AI applications with pgvector indexing: A deep dive into IVFFlat and HNSW techniques.

Read the records from the PostgreSQL table for visualization:
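A sketch of reading the rows back into a pandas DataFrame is shown below. It assumes a DB-API connection and a table named `products` (a hypothetical name) with the columns shown in the results table. The embeddings are selected as text and parsed in Python.

```python
import json
import pandas as pd

SELECT_SQL = """SELECT p_category, p_name, p_embeddings::text, p_description
                FROM products;"""
COLUMNS = ["p_category", "p_name", "p_embeddings", "p_description"]

def read_products(connection):
    """Fetch all product rows and return them as a DataFrame."""
    cursor = connection.cursor()
    cursor.execute(SELECT_SQL)
    rows = cursor.fetchall()
    cursor.close()
    frame = pd.DataFrame(rows, columns=COLUMNS)
    # pgvector's text form "[0.1,0.2,...]" is also valid JSON,
    # so json.loads turns it into a list of floats
    frame["p_embeddings"] = frame["p_embeddings"].apply(json.loads)
    return frame
```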

The following table shows an example of our results.

| | p_category | p_name | p_embeddings | p_description |
|---|---|---|---|---|
| 0 | Fruit | Apple | [-0.41796875, 0.7578125, -0.16308594, 0.045898… | Juicy and crisp apple, perfect for snacking or… |
| 1 | Fruit | Banana | [0.8515625, 0.036376953, 0.31835938, 0.1318359… | Sweet and creamy banana, a nutritious addition… |
| 2 | Fruit | Mango | [0.6328125, 0.73046875, 0.3046875, -0.72265625… | Exotic and flavorful mango, delicious eaten fr… |

Use the PCA technique to perform dimensionality reduction on the vector embeddings:
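The following sketch uses scikit-learn's `PCA` to reduce each embedding to three components and stores the result in a `pca_embed` column, matching the column name in the results table. The helper name `add_pca_embeddings` and the random demo data are assumptions.

```python
import numpy as np
import pandas as pd
from sklearn.decomposition import PCA

def add_pca_embeddings(df, source_column="p_embeddings",
                       target_column="pca_embed", n_components=3):
    """Return a copy of `df` with a column of PCA-reduced embeddings."""
    # Stack the per-row lists into a matrix of shape (rows, dimensions)
    matrix = np.array(df[source_column].tolist(), dtype=float)
    reduced = PCA(n_components=n_components).fit_transform(matrix)
    result = df.copy()
    result[target_column] = pd.Series(reduced.tolist(), index=result.index)
    return result

# Random vectors stand in for real embeddings so the sketch runs offline
rng = np.random.default_rng(42)
demo = pd.DataFrame({
    "p_name": [f"item_{i}" for i in range(10)],
    "p_embeddings": rng.normal(size=(10, 32)).tolist(),
})
demo = add_pca_embeddings(demo)
print(demo["pca_embed"].iloc[0])
```

PCA is fitted on the whole dataset at once, so the three axes depend on which products are included. Adding or removing rows changes the projection.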

The following table shows our results.

| | p_category | p_name | p_description | p_embeddings | pca_embed |
|---|---|---|---|---|---|
| 0 | Fruit | Apple | Juicy and crisp apple, perfect for snacking or… | [-0.41796875, 0.7578125, -0.16308594, 0.045898… | [0.35626856571655474, 11.501643004047386, 4.42… |
| 1 | Fruit | Banana | Sweet and creamy banana, a nutritious addition… | [0.8515625, 0.036376953, 0.31835938, 0.1318359… | [-0.3547466621907463, 10.105496442467032, 2.81… |
| 2 | Fruit | Mango | Exotic and flavorful mango, delicious eaten fr… | [0.6328125, 0.73046875, 0.3046875, -0.72265625… | [0.17147068159548648, 11.720291050641865, 4.28… |
| 3 | Fruit | Orange | Refreshing and citrusy orange, packed with vit… | [0.921875, 0.69921875, 0.29101562, 0.061523438… | [0.8320213087523731, 10.913051113510148, 3.717… |
| 4 | Fruit | Pineapple | Fresh and tropical pineapple, known for its sw… | [0.33984375, 0.70703125, 0.24707031, -0.605468… | [-0.0008173639438334911, 11.01867977558647, 3…. |

Plot a three-dimensional graph of the newly generated vector embeddings:
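A sketch of the plotting step with Plotly Express follows. It assumes a `pca_embed` column holding three values per row, plus `p_category` and `p_name` columns for coloring and hover labels. The helper names and the marker size are choices made for this example.

```python
import pandas as pd

def to_plot_frame(df, embed_column="pca_embed"):
    """Split the three PCA values into separate x, y, and z columns."""
    coords = pd.DataFrame(
        df[embed_column].tolist(), columns=["x", "y", "z"], index=df.index
    )
    return pd.concat([df.drop(columns=[embed_column]), coords], axis=1)

def plot_embeddings(df, color_column="p_category", label_column="p_name"):
    """Build a 3D scatter plot with one point per product."""
    # Imported here so to_plot_frame can be used without plotly installed
    import plotly.express as px

    fig = px.scatter_3d(
        to_plot_frame(df), x="x", y="y", z="z",
        color=color_column, hover_name=label_column,
    )
    fig.update_traces(marker={"size": 4})
    return fig
```

In a notebook, `plot_embeddings(df).show()` renders an interactive figure that you can rotate to inspect how the categories separate.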

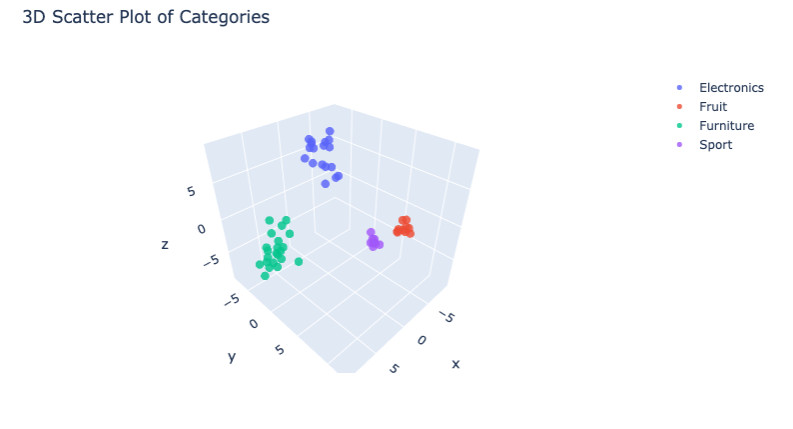

The resulting three-dimensional scatter plot will look like the following figure. Product items with similar meanings are clustered closely in the embedding space. This proximity makes sure that when we perform a semantic search on this dataset for a specific item, it returns products with similar semantics.

For a step-by-step demo of this solution, refer to the following GitHub repo.

Clean up

To avoid incurring charges, delete the resources you created as part of this post:

Delete the SageMaker Jupyter notebook instance.

Delete the Aurora PostgreSQL cluster if you no longer need it.

Conclusion

Support for vector data types in Aurora PostgreSQL-Compatible, through pgvector, makes it possible to explore semantic similarities and visualize high-dimensional data alongside your relational data. With a dimensionality reduction technique such as PCA, you can project embeddings into three dimensions, see how related items cluster, and uncover patterns that are hard to spot in the raw vectors. As a next step, try this approach on your own data, and consider experimenting with the other dimensionality reduction techniques mentioned in this post.

About the Author

Ravi Mathur is a Sr. Solutions Architect at AWS. He works with customers providing technical assistance and architectural guidance on various AWS services. He brings several years of experience in software engineering and architecture roles for various large-scale enterprises.
