In this post, we highlight common challenges encountered during homogeneous database migrations and how using AWS Database Migration Service (AWS DMS) homogeneous migration can help address them. Although this feature supports migrating from specific versions of multiple source engines such as PostgreSQL, MySQL, MariaDB, and MongoDB, in this post, we primarily discuss migrating from an on-premises PostgreSQL database engine to Amazon RDS for PostgreSQL and performing a major version upgrade of an Amazon RDS for PostgreSQL database.

Common challenges of homogeneous migration

During homogeneous data migrations from either on-premises infrastructure to AWS or between AWS services, you can encounter challenges such as extended downtime, tool selection, and orchestration.

Conventional migration techniques use native features or replication tools specific to the database engine being migrated, or in some cases third-party tools, to replicate the data. Using these tools requires expertise, time, and effort. Even though native tools are efficient and proven, in many situations it is complex to orchestrate and implement the migration solution if there are constraints such as any of the following:

The need to minimize downtime during the migration to avoid impacting business operations

Overhead of provisioning local storage while performing dumps or upgrades

Setting up and scaling migration infrastructure

Need to selectively migrate one or multiple databases in parallel

The need to implement encryption of connections to ensure compliance during data copy to the target over network

The cost of operational and monitoring overhead during migration

AWS DMS homogeneous migration overview

Homogeneous data migrations in AWS DMS make it straightforward to move on-premises databases to Amazon RDS. AWS DMS uses PostgreSQL’s native database tools to provide efficient, like-for-like migrations with minimal effort to set up and run the migration solution.

Homogeneous data migrations are serverless, which means that AWS DMS automatically scales the resources that are required for your migration. With homogeneous data migrations, you can migrate data, table partitions, data types, and secondary objects such as functions and stored procedures.

AWS DMS homogeneous migration offers several benefits:

Low-touch migration – AWS DMS orchestrates native database client tools to provide straightforward and high-performing like-to-like migrations. In addition, you can migrate secondary objects including views, indexes, and stored procedures without needing separate configuration or additional steps.

Pay-as-you-go – Because homogeneous migration uses AWS DMS Serverless, you pay only for the resources you use, billed by the hour, for the duration of the migration.

Seamlessly migrate supported engines – As of this writing, homogeneous migration supports PostgreSQL, MySQL, MariaDB, and MongoDB as source databases. For supported database versions, see Source data providers for DMS homogeneous data migrations.

Data load optimization – To speed up the data loading process, multi-threaded loading is supported and the number of jobs is configurable.

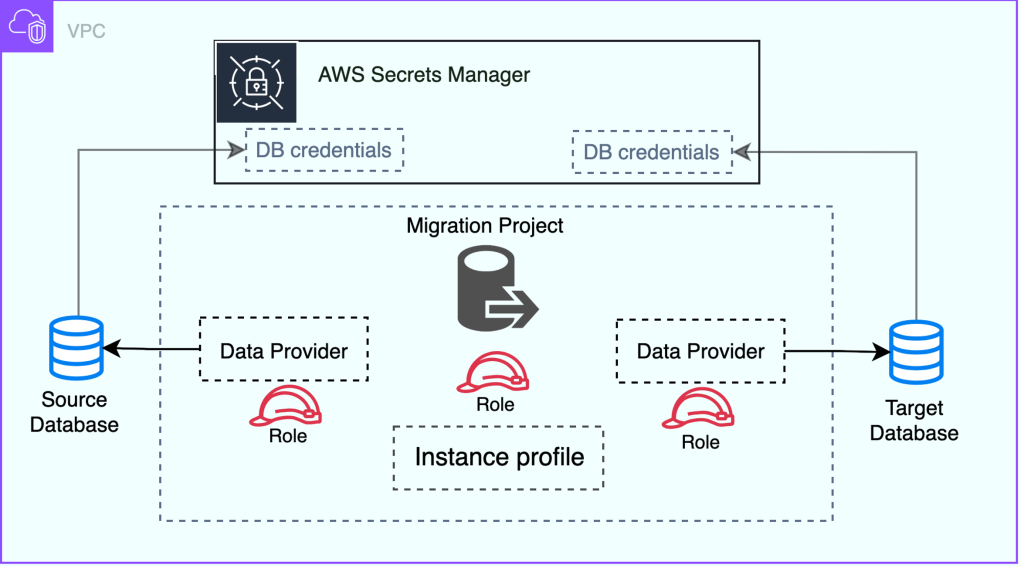

Highly secure – AWS DMS maintains high AWS standards for security and protecting your data. It integrates with several AWS security features: AWS Secrets Manager stores the source and target database credentials, AWS Identity and Access Management (IAM) roles and policies secure the resources used by the instance profile, SSL can be enabled to secure connections between the source and target databases, and all resources are contained within an Amazon Virtual Private Cloud (Amazon VPC).

Solution overview

At a high level, homogeneous data migrations operate with instance profiles, data providers, and migration projects. When you create a migration project with the compatible source and target data providers of the same type, AWS DMS deploys a serverless environment where your data migration runs. Next, AWS DMS connects to the source data provider, reads the source data, puts the files on the disk, and restores the data using native database tools.

The following diagram illustrates the high-level architecture of homogeneous data migration.

For the purposes of this post, we demonstrate two prominent use cases that can benefit from AWS DMS homogeneous data migration:

Database migration from self-managed PostgreSQL to Amazon RDS for PostgreSQL, or Amazon Aurora PostgreSQL-Compatible.

Database major version upgrade from Amazon RDS for PostgreSQL v11.x to v16.x. Note that PostgreSQL versions from 10.4 through 15.x are supported as a source for homogeneous data migration.

The following diagram shows the process of using homogeneous data migrations in AWS DMS to migrate a PostgreSQL database to Amazon RDS for PostgreSQL or Amazon Aurora PostgreSQL-Compatible.

Homogeneous data migration supports different types of replication when using PostgreSQL as the source database, as shown in the previous diagram.

For full load homogeneous data migrations, AWS DMS uses pg_dump to read data from your source database and store it on the disk attached to the serverless environment. After AWS DMS reads your source data, it uses pg_restore in the target database to restore your data.

For full load and change data capture (CDC) homogeneous data migrations, AWS DMS uses pg_dump to take a schema backup and pg_restore to restore it in the target database. After the schema restore is complete, AWS DMS automatically switches to logical replication, which performs the initial data synchronization for the full load and then the ongoing CDC. In this model, one or more subscribers subscribe to one or more publications on a publisher node.

For CDC homogeneous data migrations, AWS DMS requires the native start point to start the replication. If you provide the native start point, AWS DMS captures changes from that point. Alternatively, you can choose Immediately in the data migration settings to automatically capture the start point for the replication when the actual data migration starts.

Use case 1: Database migration from self-managed PostgreSQL to Amazon RDS for PostgreSQL, or Amazon Aurora PostgreSQL-Compatible

In this scenario, we cover the migration of an on-premises PostgreSQL database to Amazon RDS for PostgreSQL or Amazon Aurora PostgreSQL-Compatible. The sample database comprises business logic in the form of stored procedures, functions, and dummy data. The data is migrated using the different replication modes available, and the migration also covers secondary objects such as stored procedures and partitions.

To migrate a database from self-managed PostgreSQL to Amazon RDS for PostgreSQL, or Amazon Aurora PostgreSQL-Compatible, follow these high-level steps:

Create the IAM resources

Set up the network

Configure your source and target database DMS users with minimum permissions

Store your source and target database credentials in Secrets Manager

Create source and target data providers

Create an instance profile

Create a migration project

Create, configure, and start a data migration

Monitor the data migration status and progress

For more information, see Migrating PostgreSQL databases to Amazon RDS for PostgreSQL with DMS homogeneous data migrations.

Create IAM resources

Before commencing with homogeneous data migrations, set up an IAM policy and an IAM role in your account. The following screenshot shows an IAM policy created with the name HomogeneousDataMigrationPolicy with required permissions.

Associate the HomogeneousDataMigrationPolicy created in the previous step with the IAM role. The following screenshot shows the associated policy.

Make sure the trust policy is updated with the correct AWS Region information. In the following screenshot, the us-east-1 Region is used to set up homogeneous replication.
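Because the trust policy is Region-specific, it can help to generate it rather than edit it by hand. The following is a minimal sketch, not the exact policy from the screenshot: the helper name build_trust_policy is hypothetical, and the two service principals are assumptions based on the pattern in the AWS DMS documentation for homogeneous data migrations, so verify them against the current user guide before use.

```python
import json


def build_trust_policy(region: str) -> dict:
    """Return an IAM trust policy document for the given AWS Region.

    Assumption: the role is assumed by a global DMS data migrations
    principal and a Region-specific DMS principal.
    """
    if not region:
        raise ValueError("region must be a non-empty string such as 'us-east-1'")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Service": [
                        # Assumed principal names; confirm in the AWS DMS docs.
                        "dms-data-migrations.amazonaws.com",
                        f"dms.{region}.amazonaws.com",
                    ]
                },
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy for the Region used in this post.
    print(json.dumps(build_trust_policy("us-east-1"), indent=2))
```

You can paste the printed JSON into the trust relationship editor of the IAM role.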

Set up the network

AWS DMS creates a serverless environment for homogeneous data migrations in a virtual private cloud (VPC) based on the Amazon VPC service. For more information, see Setting up a network for homogeneous data migrations in AWS DMS. To migrate a source database that is not publicly accessible, see Migrate an on-premises MySQL database to Amazon Aurora MySQL over a private network using AWS DMS homogeneous data migration and Network Load Balancer.

Complete the following steps to set up a subnet group:

On the AWS DMS console, choose Subnets in the navigation pane.

Choose Create subnet group.

In the Subnet group configuration section, provide a name, description, and VPC.

For more information about creating a subnet group, see Creating a subnet group for an AWS DMS migration project.

In the Add subnets section, associate the subnets to create the subnet group.

Choose Create subnet group.

Configure your source and target database users with minimum permissions

In this step, you create a new user on the source and target database to configure the data replication. For more information on configuring the source database, see Configure Your Source Database. For configuring the target database, see Create Your Target Amazon RDS for PostgreSQL Database.

In the following section, a user named rep_admin is created with superuser privileges to use with homogeneous database migration and assigned SELECT permission on all the tables in the public schema. For additional information on user creation and the privileges required, see Using a PostgreSQL database as a source for homogeneous data migrations in AWS DMS.

Store your source and target database credentials in Secrets Manager

To be able to connect to your source and target database in an AWS DMS migration, store your credentials in Secrets Manager and make sure you replicate these to your Regions. For more information, see Store Database Credentials in AWS Secrets Manager.

The following screenshot shows the Secrets Manager console page with details of the source DB instance secret.

After you add the secret for the target instance in Secrets Manager, you should see the details of the target DB instance secret, as shown in the following screenshot.

Create source and target data providers

In this step, you create the PostgreSQL source database provider. Complete the following steps:

On the AWS DMS console, choose Data providers in the navigation pane.

Choose Create data provider.

For Name, provide a custom name for your source database, such as pgsource.

For Engine type, choose PostgreSQL.

There is no distinction between source and target data providers. After the data provider is created, it can be used as either source data provider or target data provider.

For Server name, enter the source database server name (DNS name) or IP address manually.

For Database name, enter the name of the database to be migrated. For this post, we migrate the database postgres.

For Secure Sockets Layer (SSL) mode, select the required SSL mode based on your company policies so the data is not compromised during migration. For this post, we select verify-full to test the migration procedure.

Depending on the SSL mode selected, you can choose an existing CA certificate or create a new certificate.

To create the PostgreSQL target database provider, provide a custom name for the target data provider and select appropriate engine type.

For Engine configuration, select RDS database instance and choose the RDS instance available in the account.

Complete the remaining steps as you did for the source data provider, and select the appropriate options for SSL mode.

Select the existing CA or upload a custom CA.

Choose Create data provider.

The new data providers appear under Data providers on the AWS DMS console, as shown in the following screenshot.

Create an instance profile

You create an instance profile for each migration project to help with the one-time configuration of your network type, VPC, subnet, and VPC security groups. This profile can then be reused for the given migration project. For more information, see Creating instance profiles for AWS Database Migration Service. The following screenshot shows the custom name exped-ins-profile for the instance profile. The instance profile name should be unique in an account.

If the source databases are outside the VPC (on premises), you need to assign a public IP to the instance profile. Select the option appropriately. In the following screenshot, we have enabled a public IP while configuring the instance profile.

In the additional settings, choose your AWS Key Management Service (AWS KMS) key and create the instance profile.

The new instance profile can be seen on the Instance profiles pages of the AWS DMS console, as shown in the following screenshot.

Configure a PostgreSQL source DB instance

AWS DMS requires certain permissions on the source DB instance to copy or replicate the data to the target instance. For instructions to configure the source DB instance, refer to Using a PostgreSQL database as a source for homogeneous data migrations in AWS DMS.

Create a migration project

You create a migration project by using the resources from the previous steps. Before you proceed, make sure you have the data providers, secrets with database credentials stored in Secrets Manager, IAM role that provides access to Secrets Manager, and instance profile with network and security settings. For more information, see Creating migration projects in AWS Database Migration Service.

As shown in the following screenshot, we created a migration project called pulsar-migration and used the instance profile exped-ins-profile we created in the previous section.

Choose the source and target data providers and the IAM role created in the previous section.

The following screenshot shows the target database provider details.

You can view the details of the new project on the AWS DMS console, as shown in the following screenshot.

Create, configure, and start a data migration

After you create the migration project, you can start creating the data migration. This involves creating, configuring, and maintaining migration tasks. For more information, see Creating a data migration in AWS DMS.

Before you start the data migration, make sure you create all the users and roles on the target database, because homogeneous migration jobs do not copy users and roles to the target. On a self-managed PostgreSQL source, use the pg_dumpall command with the -g option to dump the global objects, which include users and roles, so you can recreate them on the target. If you are using an Amazon RDS or Amazon Aurora database as the source, also pass the --no-role-passwords option when you run pg_dumpall with the -g option. For additional commands to copy roles and users, refer to the instructions in Managing PostgreSQL users and roles. For additional information on using pg_dump and pg_restore, refer to Best practices for migrating PostgreSQL databases to Amazon RDS and Amazon Aurora.
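As an illustration of the pg_dumpall invocation described above, the following sketch assembles the argument list for both cases. The function name, host, user, and output file are hypothetical placeholders. The flags themselves (--globals-only is the long form of -g, and --no-role-passwords) are standard pg_dumpall options.

```python
import shlex


def build_globals_dump_command(host, user, outfile, managed_source=False, port=5432):
    """Build a pg_dumpall command that dumps only global objects (roles, tablespaces).

    Set managed_source=True for Amazon RDS or Aurora sources, where role
    password hashes cannot be read and --no-role-passwords is needed.
    """
    cmd = [
        "pg_dumpall",
        "--globals-only",          # same as -g
        "--host", host,
        "--port", str(port),
        "--username", user,
        "--file", outfile,
    ]
    if managed_source:
        cmd.append("--no-role-passwords")
    return cmd


if __name__ == "__main__":
    # Hypothetical on-premises source; pass the list to subprocess.run() to execute it.
    print(shlex.join(build_globals_dump_command("onprem-db.example.com", "postgres", "globals.sql")))
```

Review the generated globals.sql before applying it to the target, because roles dumped without passwords need new passwords set on the target.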

In this section, we create a data migration from an on-premises source DB instance (source data provider) to an RDS for PostgreSQL instance (target data provider).

We migrate the DB instance by creating two migration tasks: a full load task and a CDC task.

We recommend migrating the data in two steps: copy the large datasets first, and then configure the CDC task to migrate ongoing changes. This provides a way to verify the full data load before you continue with the data changes. AWS DMS also provides a combined full load and CDC option. With that option, AWS DMS automatically creates the replication slots and retains changes until the full load is finished.

Create a full load data migration

To start using homogeneous data migrations, create a new data migration. You can create several homogeneous data migrations of different types in a single migration project.

On the AWS DMS console, choose Migration projects in the navigation pane.

Open your migration project.

On the Data migrations tab, choose Create data migration.

For Name, enter a custom name for the data migration.

For Replication type, select Full load.

Select Turn on CloudWatch logs to store data migration logs in Amazon CloudWatch.

If this option is enabled, AWS DMS writes the logs to CloudWatch so you can see the status of the migration and troubleshoot a failed migration.

Under Advanced settings, for Number of jobs, enter the number of parallel threads that AWS DMS can use to migrate your source data to the target (for this post, 8).

For IAM service role, choose the AWS DMS service role you created earlier.

Complete creating the data migration.

After you create the data migration, its status shows as Ready, as shown in the following screenshot.

To start migrating your data, select your migration and on the Actions menu, choose Start.

For more information, see Managing data migrations in AWS DMS.

The first run of the homogeneous data migration task requires some initial internal setup of creating a serverless environment; this process may take up to 15 minutes.

AWS DMS supports selection rules for homogeneous data migrations when using a PostgreSQL or MongoDB-compatible database as a source.

Create a CDC-only data migration

At this point, you have to manually create a replication slot in the source database or use an existing replication slot to migrate the data. Along with the logical replication slot name, you need the confirmed_flush_lsn value of the replication slot to configure ongoing replication. The full load with CDC option automatically creates the replication slot for you.

The native start point is the confirmed_flush_lsn value of the logical replication slot. To get it, create a logical replication slot on the source PostgreSQL database and then query the slot for confirmed_flush_lsn.
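The original SQL is not reproduced here, so the following is a sketch of what the two statements could look like. The helper name native_start_point_sql and the slot name are hypothetical, and the output plugin pgoutput (the plugin built into PostgreSQL for logical replication) is an assumption; check the AWS DMS documentation for the plugin your migration expects. The helper validates the slot name first, because PostgreSQL only allows lowercase letters, numbers, and underscores in slot names.

```python
import re

# PostgreSQL slot names: lowercase letters, digits, underscore; at most 63 characters.
_IDENT = re.compile(r"[a-z0-9_]{1,63}")


def native_start_point_sql(slot_name, plugin="pgoutput"):
    """Return (create_slot_sql, read_lsn_sql) for a logical replication slot.

    Assumption: 'pgoutput' is the output plugin; replace it if your setup differs.
    """
    for value in (slot_name, plugin):
        if not _IDENT.fullmatch(value):
            raise ValueError(f"invalid identifier: {value!r}")
    create_slot_sql = (
        f"SELECT * FROM pg_create_logical_replication_slot('{slot_name}', '{plugin}');"
    )
    read_lsn_sql = (
        "SELECT slot_name, confirmed_flush_lsn "
        f"FROM pg_replication_slots WHERE slot_name = '{slot_name}';"
    )
    return create_slot_sql, read_lsn_sql


if __name__ == "__main__":
    # Run the printed statements on the source database with psql or another client.
    for statement in native_start_point_sql("dms_cdc_slot"):
        print(statement)
```

The confirmed_flush_lsn returned by the second statement is the value to enter as the native start point.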

Follow the procedure discussed in the previous section to create the CDC task, with the following changes:

For Replication type, select Change data capture (CDC).

For Start mode, select Using a native start point to start the ongoing replication from a specified point.

For Slot name, enter the name of the logical replication slot.

For Native start point, enter the transaction log sequence number (LSN) you captured earlier.

Because we’re configuring ongoing replication, we don’t specify a stop mode.

To run your migration task, select your migration and on the Actions menu, choose Start.

Monitor the data migration status and progress

The AWS DMS console shows the status of the load and task status and other information. The following screenshot shows the task type and current status of the task.

Data migration task logs show the progress or latency of the task in terms of amount of data to be replicated. The latency depends on the performance of logical replication on the PostgreSQL end.

This latency can also be due to long-running queries or large amounts of data being inserted on the source PostgreSQL database. You can check the replication lag in bytes for each server by comparing the current WAL position on the source with the position confirmed by the replication slot.
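To make the byte-level lag concrete, the following sketch shows one possible query (an assumption, not necessarily the query the authors used) together with the arithmetic behind it. An LSN such as 16/B374D848 is two hexadecimal numbers: the high 32 bits and the low 32 bits of a 64-bit WAL position. The function names are hypothetical.

```python
# One possible lag query for the source database (PostgreSQL 10 and later).
LAG_QUERY = """
SELECT slot_name,
       pg_wal_lsn_diff(pg_current_wal_lsn(), confirmed_flush_lsn) AS lag_bytes
FROM pg_replication_slots;
"""


def lsn_to_int(lsn: str) -> int:
    """Convert a textual LSN ('high/low' in hex) to a 64-bit byte position."""
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def lag_bytes(source_lsn: str, confirmed_lsn: str) -> int:
    """Bytes of WAL the replication slot still has to confirm."""
    return lsn_to_int(source_lsn) - lsn_to_int(confirmed_lsn)


if __name__ == "__main__":
    # Hypothetical LSN values for illustration only.
    print(lag_bytes("0/20", "0/10"))
```

A lag that keeps growing while the source is busy suggests the target cannot keep up; a lag that returns to near zero indicates the target has caught up.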

Refer to PostgreSQL bi-directional replication using pglogical for more information on how to check replication lag or latency from the database directly.

In the previous section, we demonstrated how to create the required resources, configure the databases, and migrate a self-managed on-premises PostgreSQL database to Amazon RDS for PostgreSQL using full load and CDC-only migration tasks. In the following section, we walk through the procedure for a major version upgrade of your Amazon RDS for PostgreSQL databases.

Use case 2: Upgrade Amazon RDS for PostgreSQL v11.22 to v16.1

Database major version upgrades are critical in nature and require meticulous planning. The complexity of upgrades increases with the size of the database and downtime that’s available. You can choose from a few different options for database major version upgrades; for more information, see Using Amazon RDS Blue/Green Deployments for database updates, Achieving minimum downtime for major version upgrades in Amazon Aurora for PostgreSQL using AWS DMS, and Best practices for upgrading Amazon RDS to major and minor versions of PostgreSQL.

Some of these options require additional effort and expertise. However, the AWS DMS homogeneous data migration feature is straightforward and requires less effort. This feature helps you set up and copy the initial data and incremental data in just a few steps. In this section, we illustrate a use case of upgrading from Amazon RDS for PostgreSQL v11.22 to Amazon RDS for PostgreSQL v16.1.

We focus only on data migration using AWS DMS in this post. Before starting a major version upgrade, it’s highly recommended to follow the process as outlined in How to perform a major version upgrade (until Step 9). Assuming that a few iterations of the upgrade will be performed in a non-production environment, you should plan the detailed steps of your migration and fine-tune them before implementing them in a production environment.

The following are the high-level steps for copying the data for the upgrade using AWS DMS homogeneous data migration:

Complete the initial setup of creating IAM resources, setting up the network, and creating source and target data providers.

Configure replication by configuring a full load and change data capture (CDC) task.

Complete post-migration checks (as detailed in Step 14 of How to perform a major version upgrade).

Complete the initial setup

Follow the procedures discussed in the previous sections to create an IAM policy, IAM role, AWS DMS instance profile, subnet group, and source and target database providers. The following screenshot shows the DB instances created. These instances are used as source and target for the upgrade.

Create data providers as discussed in the previous sections. The providers are listed on the Data providers page of the AWS DMS console as shown below.

Configure the data migration

For this major version upgrade, we use the full load and CDC option of the AWS DMS homogeneous data migration feature. This task copies the data using native database options:

Schema load is performed using Postgres native utilities – pg_dump and pg_restore

Full load and CDC are performed using logical replication, which is a native PostgreSQL feature

Both are built into PostgreSQL, so the migration doesn’t depend on third-party replication tools.

The following screenshot shows the configuration section for creating the data migration. Select the “Full load and change data capture (CDC)” replication type to perform the initial data copy and change data capture in a single task.

For Stop mode, select Don’t stop CDC so that all changes on the source database continue to be synchronized to the target.

After you create the data migration, you can start the data migration from the Actions menu.

Complete post-migration steps

Make sure all the schema objects are copied and verified, because AWS DMS doesn’t support copying all the objects. Refer to Best practices for AWS Database Migration Service for more information. Follow the post-migration steps including VACUUM ANALYZE of the target database along with data validation.

To monitor the homogeneous data migration status and progress, navigate to the Data migrations tab on the Migration projects page of the AWS DMS console.

For your data migration, see the Status column. For more information about values in this column, see Statuses of homogeneous data migrations in AWS DMS.

Monitoring

You can monitor the data migration task’s progress on the AWS DMS console. For example, the following screenshot shows the migration task status and its progress.

When the initial copy is complete, the task status on the AWS DMS console should look like the following screenshot.

At this point, CDC is in progress and changes are being replicated to the target host.

AWS DMS provides two metrics to monitor CDC latency and storage consumption:

OverallCDCLatency – This measures the overall latency during the CDC phase. For PostgreSQL databases, this metric shows the time that passes between last_msg_receipt_time and last_msg_send_time from the pg_stat_subscription view, measured in seconds.

StorageConsumption – This metric shows the storage that your data migration consumes, measured in bytes.
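To illustrate how the OverallCDCLatency description above translates into a number, the following sketch computes the gap between the two pg_stat_subscription timestamps. It is only an illustration of the arithmetic, not how AWS DMS computes the metric internally; the function name and the sample timestamps are hypothetical.

```python
from datetime import datetime, timezone


def cdc_latency_seconds(last_msg_send_time: datetime, last_msg_receipt_time: datetime) -> float:
    """Seconds between when the publisher sent the last message and when the
    subscriber received it, as read from pg_stat_subscription on the target."""
    return (last_msg_receipt_time - last_msg_send_time).total_seconds()


if __name__ == "__main__":
    # Hypothetical timestamps as they might be returned by a database client.
    sent = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
    received = datetime(2024, 1, 1, 12, 0, 2, 500000, tzinfo=timezone.utc)
    print(cdc_latency_seconds(sent, received))
```

Both timestamps should carry the same time zone information, which is the case when they come from the same pg_stat_subscription row.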

Manually verify data synchronization and check catalog views

When CDC is in progress, you can manually insert a test record on the source and verify it on the target side. The following screenshot shows a test insert on a table in the source database successfully replicated to the target database.

On source database

On target database

You can use additional catalog tables and queries to monitor the replication status. Homogeneous replication creates a logical replication slot in the source database to track changes and replicate them using a publisher and subscriber. The following screenshot shows the confirmed_flush_lsn of the created replication slot using the pg_replication_slots view.

Use the following query to capture the confirmed_flush_lsn of a replication slot:

The pg_stat_replication view shows the details of the WAL LSN to track the progress of CDC. You can see the replication lag using the LSNs from this view.

Make sure that the WAL locations are the same for sent_lsn, write_lsn, and replay_lsn (these columns were named sent_location, write_location, and replay_location before PostgreSQL 10). This indicates that the target database is at the same LSN position as the source database.

On the target side, you can find the details of the subscription name and other details of its publication using the following query:

The following query is useful to check the active queries on the database instance.

In the previous section, we discussed the process to configure and perform a major version upgrade of an Amazon RDS for PostgreSQL database using AWS DMS homogeneous migration.

Best practices for migration

The following are best practices for migration:

Configure the multi-threaded option and jobs in the advanced settings when performing full load only. Use the optimum number of threads while configuring the full load based on the capacity available on your source database in terms of CPU and network. This helps copy the data faster.

Clean up or archive unwanted data before the migrations. This helps minimize the initial data copy and reduces the CDC sync time significantly on busy production database systems.

Analyze the database and tables before you use the new target database and promote it to production use. This helps make sure PostgreSQL has updated statistics and provides optimum performance.

Refrain from running DDLs on the source database during the data replication. At the time of writing, DDL changes are not supported by homogeneous replication. If DDLs are run, the replication task results in an error.

Limitations

There are a few notable limitations with this feature:

You can’t use homogeneous data migrations in AWS DMS to migrate data from a higher database version to a lower database version

There’s no built-in tool or feature for data validation within a homogeneous migration project. However, you can use AWS DMS to configure a validation-only task.

Migration of global objects, such as users and roles, is not supported

For more information, see the Limitations for homogeneous data migrations.

Cleanup

AWS resources created by the AWS DMS homogeneous migration incur costs as long as they are in use. When you no longer need the resources, clean them up by deleting the associated data migration under the migration project, along with the migration project.

Conclusion

In this post, we introduced the AWS DMS homogeneous data migration feature and used it to migrate to the AWS Cloud and perform a database major version upgrade for a supported engine. Both use cases are complex and critical in nature, and require meticulous planning and thorough testing. It’s highly recommended that you test this feature for your respective database in a non-production environment before running it in production.

We value your feedback. Share any questions or suggestions in the comments section.

About the authors

HariKrishna Boorgadda is a Senior Consultant with the Professional Services team at Amazon Web Services. He focuses on database migrations to AWS and works with customers to design and implement Amazon RDS and Amazon Aurora architectures.

Sharath Gopalappa is a Sr. Product Manager at Amazon Web Services (AWS) with focus on helping organizations modernize their technology investments with AWS Databases & Analytics services.

Kumar Babu P G is a Lead Consultant with the Professional Services team at Amazon Web Services. He has worked on multiple databases as DBA and is skilled in SQL performance tuning and database migrations. He works closely with customers to help migrate and modernize their databases and applications to AWS.
