Generative AI models have become prominent in recent years for their ability to generate new content, such as text, images, audio, or video, from existing data. One family, diffusion models, produces high-quality outputs by iteratively transforming noisy data into structured samples. Despite these advances, standard diffusion models have little control over which corrupted data points are corrected and when, which leads to suboptimal and slower generation. A team of researchers from MIT, the University of Oxford, and NVIDIA Research has proposed Discrete Diffusion with Planned Denoising (DDPD) to handle noise in a more structured manner.

Existing methods include conventional diffusion models and post-processing techniques. Diffusion models use a forward process to add noise, and a reverse process then learns to remove it. This two-step scheme iteratively refines corrupted data and generates coherent outputs. Although effective, it offers little control over the denoising process and is computationally expensive because the reverse process is iterative, which can degrade output quality in complex scenarios like image generation. Post-processing techniques clean the data only after the outputs have been generated; handling all the noise at the end is inefficient and time-consuming.
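To make the forward (noising) step described above concrete for discrete data, here is a minimal sketch. It assumes uniform corruption, where each token is independently replaced by a random vocabulary item with a given probability; the function name `corrupt` and its parameters are illustrative and are not taken from the paper's code.

```python
import random

def corrupt(tokens, vocab, noise_level, rng):
    """Toy forward process (assumed uniform corruption).

    Each token is replaced by a uniformly random vocabulary item with
    probability `noise_level`. Returns the noisy sequence and a mask
    marking which positions were resampled.
    """
    noisy, mask = [], []
    for tok in tokens:
        if rng.random() < noise_level:
            # Resampled position; the draw may coincide with the original token.
            noisy.append(rng.choice(vocab))
            mask.append(True)
        else:
            noisy.append(tok)
            mask.append(False)
    return noisy, mask

if __name__ == "__main__":
    rng = random.Random(0)
    vocab = list("abcdefghijklmnopqrstuvwxyz ")
    noisy, mask = corrupt(list("planned denoising"), vocab, 0.3, rng)
    print("".join(noisy))
    print(sum(mask), "positions were resampled")
```

A reverse process would then have to undo this corruption step by step, which is where the cost of iterative refinement comes from.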

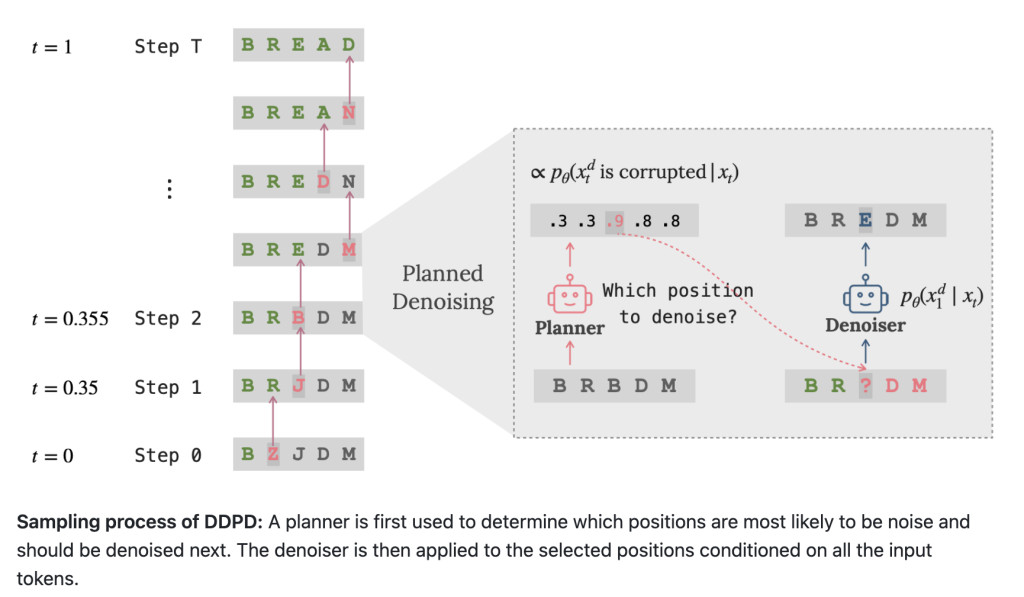

Suboptimal outputs and high resource consumption motivate a method that denoises corrupted data more efficiently. The proposed method, Discrete Diffusion with Planned Denoising, splits generation into two roles: a planning step that selects which positions in the sequence to refine next, according to how severely they are corrupted, and a denoising step that predicts new values for the selected positions. Techniques such as attention mechanisms are central to iteratively denoising the selected positions. Together, these steps give finer control over the denoising process during diffusion. This improves output quality and reduces reliance on post-processing, which in turn lowers computational cost.
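The select-then-denoise loop described above can be sketched as follows. This is a toy illustration under stated assumptions, not the authors' implementation: `planner` and `denoiser` are hypothetical callables standing in for learned models, the most suspicious position is picked greedily, and the stopping threshold is an assumption made for this example.

```python
from typing import Callable, List

# Hypothetical interfaces: a planner scores how likely each position is
# corrupted; a denoiser proposes a new token for one chosen position.
Planner = Callable[[List[str]], List[float]]
Denoiser = Callable[[List[str], int], str]

def planned_denoise(seq: List[str], planner: Planner, denoiser: Denoiser,
                    max_steps: int = 100, threshold: float = 0.5) -> List[str]:
    """Repeatedly fix the position the planner considers most corrupted."""
    seq = list(seq)  # work on a copy
    if not seq:
        return seq
    for _ in range(max_steps):
        scores = planner(seq)
        pos = max(range(len(seq)), key=scores.__getitem__)
        if scores[pos] < threshold:
            break  # planner sees nothing left to fix
        seq[pos] = denoiser(seq, pos)
    return seq

# Toy stand-ins for learned models: the "clean" data alternates a, b, a, b, ...
PATTERN = "ab"

def toy_planner(seq: List[str]) -> List[float]:
    return [0.0 if tok == PATTERN[i % 2] else 1.0 for i, tok in enumerate(seq)]

def toy_denoiser(seq: List[str], pos: int) -> str:
    return PATTERN[pos % 2]

if __name__ == "__main__":
    restored = planned_denoise(list("abxbaa"), toy_planner, toy_denoiser)
    print("".join(restored))  # ababab
```

Only the two corrupted positions are rewritten here; the positions the planner scores as clean are never touched, which is the kind of control the planning step is meant to provide.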

In applications like machine translation or text summarization, the ability to plan denoising can lead to more fluent and accurate sentences. Similarly, in image generation, DDPD could reduce artifacts and improve the sharpness of high-resolution images, which would make it useful for artistic style transfer or medical imaging. The novelty of the dual-model approach lies in strategically selecting, at generation time, which positions to denoise. Reported results show that DDPD lowers perplexity on benchmark datasets such as text8 and OpenWebText, narrowing the performance gap with autoregressive methods. Validation tests were carried out on datasets of more than a million sentences, and the DDPD methodology proved solid and efficient across multiple scenarios.
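For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-likelihood per token, so lower values mean the evaluated text is less "surprising". The sketch below shows only that standard formula; how the per-token log-probabilities are obtained (for example, which evaluation model scores the samples) is outside its scope, and the function name is illustrative.

```python
import math
from typing import Sequence

def perplexity(token_log_probs: Sequence[float]) -> float:
    """Perplexity from natural-log token probabilities.

    PPL = exp(-(1/N) * sum_i log p(token_i))
    """
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)

if __name__ == "__main__":
    # If every token is assigned probability 1/4, perplexity is
    # approximately 4.0 (up to floating-point rounding).
    print(perplexity([math.log(0.25)] * 4))
```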

In summary, DDPD addresses inefficient and inaccurate text generation by separating the generation process into planning and denoising. The paper's strengths include improved prediction accuracy with reduced computational overhead. However, validation in real-world applications is still needed to assess its practical applicability. Overall, this work is a significant advance in generative modeling, offers a promising path toward better natural language processing outcomes, and sets a benchmark for future research in this domain.

The post Discrete Diffusion with Planned Denoising (DDPD): A Novel Machine Learning Framework that Decomposes the Discrete Generation Process into Planning and Denoising appeared first on MarkTechPost.
