The growing reliance on large language models (LLMs) for coding support raises a significant question: how should their real-world impact on programmer productivity be assessed? Current approaches, such as static benchmarking on datasets like HumanEval, measure the correctness of generated code but cannot capture the dynamic, human-in-the-loop interaction of real programming work. With LLMs increasingly integrated into coding environments and deployed as real-time autocomplete or chat assistants, it is time to measure not only whether LLMs can complete tasks on their own but also how they affect human productivity. A more pragmatic evaluation framework is needed to check whether these LLMs actually improve coding productivity outside the lab.

Although many LLMs are designed for programming tasks, their evaluation still depends largely on static benchmarks such as HumanEval and MBPP, which judge models by the correctness of the code they generate on their own rather than by how well they assist human programmers. Correctness is essential to any quantitative benchmark, but these benchmarks generally neglect the practical aspects of real-world use. In practice, programmers of all kinds engage with LLMs continually and revise their work iteratively. Static benchmarks capture none of the key metrics here, such as how much time programmers spend coding, how frequently they accept LLM suggestions, or how much LLMs actually help with complex problems. This gap between benchmark rankings and practical usefulness calls the generalisability of static evaluation into question: it does not represent actual LLM use, so the real productivity gain remains hard to measure.

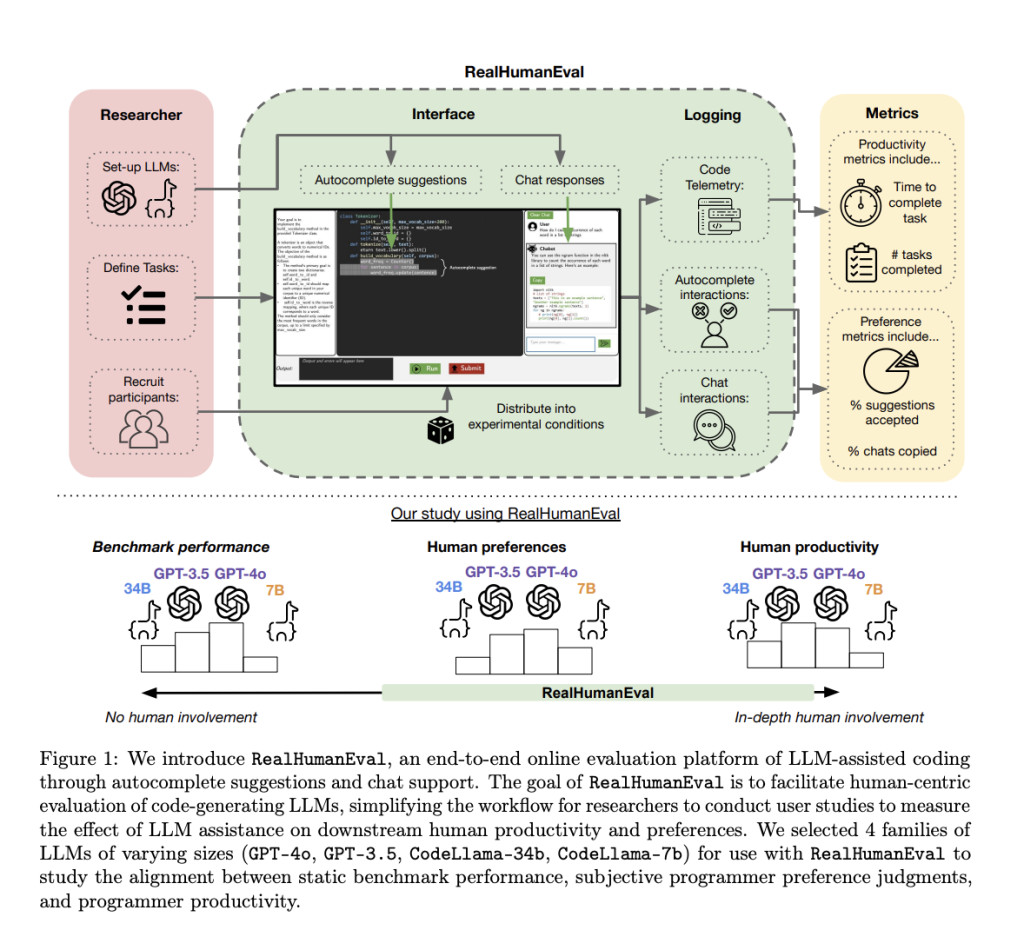

Researchers from MIT, Carnegie Mellon University, IBM Research, UC Berkeley, and Microsoft developed RealHumanEval, a platform designed for human-centric evaluation of LLMs in programming. It supports real-time evaluation of LLMs through two modes of interaction: autocomplete suggestions and chat-based assistance. The platform records detailed user interaction logs, including which code suggestions were accepted and the time taken to complete each task. RealHumanEval goes beyond static benchmarks by focusing on human productivity metrics, which give a much better picture of how well LLMs perform once integrated into real-world coding workflows. This helps bridge the gap between theoretical performance and practice, providing insight into how LLMs help or hinder the coding process.

RealHumanEval lets users interact with an LLM through either autocomplete or chat and records several aspects of these interactions. The current evaluation tested seven different LLMs, including models from the GPT and CodeLlama families, on a set of 17 coding tasks of varying complexity. The system logged several productivity metrics: completion time per task, number of tasks completed, and how often a user accepted LLM-suggested code. The experiment involved 243 participants, and the collected data was analyzed to see how much each LLM contributed to coding efficiency. The paper discusses these results in detail and analyzes the interactions to show how effective LLMs are in realistic coding environments, along with the nuances of human-LLM collaboration.
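To make the three logged metrics concrete, here is a minimal Python sketch of how they might be aggregated per model. The `TaskLog` schema, the field names, the `summarize` helper, and the sample rows are all hypothetical; they are assumptions for illustration and do not reflect RealHumanEval's actual log format or the study's data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskLog:
    """One participant's attempt at one task (hypothetical schema)."""
    participant: str
    model: str
    task_id: int
    seconds_spent: float
    completed: bool
    suggestions_shown: int
    suggestions_accepted: int


def summarize(logs):
    """Aggregate per-model productivity metrics from a list of TaskLog rows."""
    by_model = {}
    for log in logs:
        by_model.setdefault(log.model, []).append(log)

    summary = {}
    for model, rows in by_model.items():
        done = [r for r in rows if r.completed]
        shown = sum(r.suggestions_shown for r in rows)
        accepted = sum(r.suggestions_accepted for r in rows)
        summary[model] = {
            # Mean time over completed tasks only; None if nothing was completed.
            "mean_seconds_per_completed_task": (
                mean(r.seconds_spent for r in done) if done else None
            ),
            "tasks_completed": len(done),
            # Share of shown suggestions that the user accepted.
            "acceptance_rate": accepted / shown if shown else None,
        }
    return summary


# Made-up rows for illustration only; not data from the study.
logs = [
    TaskLog("p1", "model-a", 1, 300.0, True, 10, 4),
    TaskLog("p1", "model-a", 2, 500.0, True, 10, 2),
    TaskLog("p2", "model-b", 1, 450.0, True, 8, 2),
    TaskLog("p2", "model-b", 2, 600.0, False, 12, 2),
]
summary = summarize(logs)
```

Keeping completion time, task count, and acceptance rate as separate fields matters because, as the study's results suggest, they do not necessarily move together.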

Testing LLMs with RealHumanEval showed that models that perform better on benchmarks yield significant gains in coding productivity, above all by saving time. For example, programmers completed tasks 19% faster with GPT-3.5 and 15% faster with CodeLlama-34b than the baseline they were compared against. The gains were not uniform across models, however: for CodeLlama-7b, there was insufficient evidence of a positive effect. Also, although the time taken to complete tasks fell, the number of tasks completed did not change much, meaning LLMs speed up individual tasks but do not necessarily increase the total number of tasks finished in a given time frame. Code suggestion acceptance also differed across models; GPT-3.5 suggestions were accepted more often than those of the other models. These results highlight that while LLMs can foster productivity, their actual power to boost output is highly contextual.
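As a rough illustration of what a figure like "19% faster" can mean, the sketch below treats it as a relative reduction in mean task time against a baseline. This reading, the `percent_faster` helper, and the timings are assumptions: the numbers are invented so that the arithmetic reproduces the reported percentages, and they are not measurements from the study.

```python
def percent_faster(baseline_seconds: float, assisted_seconds: float) -> float:
    """Relative reduction in task time, as a percentage of the baseline time."""
    if baseline_seconds <= 0:
        raise ValueError("baseline_seconds must be positive")
    return 100.0 * (baseline_seconds - assisted_seconds) / baseline_seconds


# Hypothetical mean task times in seconds, chosen only to illustrate the formula.
baseline = 500.0
gain_a = percent_faster(baseline, 405.0)  # 95 s saved out of 500 s
gain_b = percent_faster(baseline, 425.0)  # 75 s saved out of 500 s
print(f"{gain_a:.0f}% and {gain_b:.0f}% faster")
```

Under this definition a per-task time reduction says nothing by itself about how many tasks get finished in a session, which is why the two metrics are reported separately.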

In conclusion, RealHumanEval is a landmark testbed for LLMs in programming: it focuses on human-centered productivity metrics rather than traditional static benchmarks and therefore offers a much-needed complementary view of how well LLMs support real-world programmers. It provides insight into efficiency gains and user interaction patterns that reveal the strengths and limitations of LLMs in coding environments. These insights can inform future research and development in AI-assisted programming and help optimize such tools for practical use.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post RealHumanEval: A Web Interface to Measure the Ability of LLMs to Assist Programmers appeared first on MarkTechPost.
