Point tracking is central to video analysis: from 3D reconstruction to editing tasks, precise point estimates are necessary to achieve quality results. Over time, trackers have adopted transformer-based and other neural network designs to track single points and many points simultaneously. However, these networks can only be fully exploited with high-quality training data. While there is an abundance of videos that could form a good training set, the point tracks in them need to be annotated manually. Synthetic videos seem an excellent substitute, but they are computationally expensive to produce and less valuable than real videos. In light of this, unsupervised learning on real videos shows great potential. This article looks at a new effort to advance the state of the art in tracking with a semi-supervised approach and a much simpler mechanism.

Meta has introduced CoTracker3, a new tracking model that can be trained on real videos without annotations, using pseudo-labels generated by off-the-shelf teachers. CoTracker3 eliminates components from previous trackers to achieve better results with a much smaller architecture and far less training data. Furthermore, it addresses the question of scalability. Although researchers have done great work in unsupervised tracking with real videos, the complexity and data requirements of those methods are open to question. The current state of the art in unsupervised tracking needs an enormous number of training videos alongside a complex architecture. The first question is, 'Are millions of training videos necessary for a tracker to be considered good?' Additionally, different researchers have each made improvements on previous work, yet it remains to be seen whether all of these design choices are required for good tracking or whether some can be eliminated or replaced with simpler substitutes.

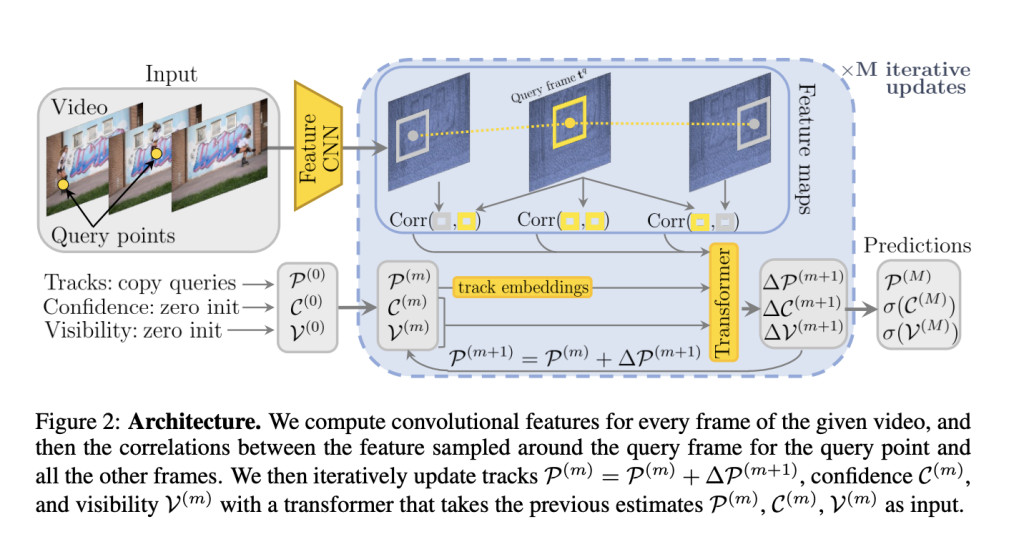

CoTracker3 combines ideas from previous works and improves on them. For instance, it takes iterative updates and convolutional features from PIPs, and unrolled training from its predecessor, CoTracker. The working methodology of CoTracker3 is straightforward. Given a query point, it predicts the corresponding point track in every frame of the video, along with a visibility flag and a confidence score. Visibility indicates whether the tracked point is visible or occluded, while confidence measures whether the network is confident that the tracked point is within a certain distance of the ground truth in the current frame. CoTracker3 comes in two versions: online and offline. The online version operates in a sliding window, processing the input video sequentially and tracking points forward only. In contrast, the offline version processes the entire video as a single window.
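The difference between the two versions can be illustrated with a minimal sketch of how frames might be grouped into windows. This is not the released implementation: the helper names, the default window length of 16 frames, and the half-window overlap are assumptions chosen purely for illustration.

```python
from typing import List, Tuple


def make_windows(num_frames: int, window_len: int, stride: int) -> List[Tuple[int, int]]:
    """Return half-open [start, end) frame ranges that cover the video in order."""
    if num_frames <= 0 or window_len <= 0 or stride <= 0:
        raise ValueError("num_frames, window_len and stride must be positive")
    if stride > window_len:
        raise ValueError("stride larger than window_len would skip frames")
    windows = []
    start = 0
    while True:
        end = min(start + window_len, num_frames)
        windows.append((start, end))
        if end == num_frames:  # the last window reaches the end of the video
            break
        start += stride
    return windows


def online_windows(num_frames: int, window_len: int = 16) -> List[Tuple[int, int]]:
    """Online mode: overlapping windows visited sequentially, forward only.

    The window length and half-window overlap are illustrative assumptions.
    """
    return make_windows(num_frames, window_len, stride=max(1, window_len // 2))


def offline_windows(num_frames: int) -> List[Tuple[int, int]]:
    """Offline mode: the whole video is treated as one window."""
    return make_windows(num_frames, window_len=num_frames, stride=num_frames)
```

Under these assumptions, a 40-frame video yields four overlapping windows in online mode, `(0, 16)`, `(8, 24)`, `(16, 32)`, `(24, 40)`, and a single window `(0, 40)` in offline mode. The online layout needs only one window of frames at a time, while the offline layout sees every frame at once.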

For training, the dataset consisted of around 100,000 videos. First, multiple teacher models were trained on synthetic data. Then, during training, a teacher is chosen at random to generate the pseudo-labels, and query points are selected from some video frames using SIFT detection sampling. On the technical side, convolutional networks extract a feature map from each frame, and the correlation between the resulting feature vectors is computed. This 4D correlation is then processed with an MLP. A transformer iteratively updates the estimates, with visibility and confidence initialized at 0.
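The pseudo-labelling step described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not the authors' training code: the `Tracker` type, the two stand-in teachers, and the mean-absolute-error loss are all hypothetical, and the real teachers are full tracking models rather than simple functions.

```python
import random
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Track = List[Point]  # one (x, y) position per frame
Tracker = Callable[[Sequence[object], Point], Track]  # (frames, query) -> track


def static_teacher(frames: Sequence[object], query: Point) -> Track:
    """Hypothetical teacher: predicts that the point never moves."""
    return [query for _ in frames]


def drifting_teacher(frames: Sequence[object], query: Point) -> Track:
    """Hypothetical teacher: predicts a drift of one pixel in x per frame."""
    x, y = query
    return [(x + float(t), y) for t in range(len(frames))]


def pseudo_label(frames: Sequence[object], queries: Sequence[Point],
                 teachers: Sequence[Tracker], rng: random.Random) -> List[Track]:
    """Pick one teacher at random and let it annotate every query point."""
    if not teachers:
        raise ValueError("at least one teacher is required")
    teacher = rng.choice(teachers)
    return [teacher(frames, q) for q in queries]


def track_loss(pred: Sequence[Track], target: Sequence[Track]) -> float:
    """Mean absolute coordinate error per point; a stand-in for the real loss."""
    total, count = 0.0, 0
    for p_track, t_track in zip(pred, target):
        for (px, py), (tx, ty) in zip(p_track, t_track):
            total += abs(px - tx) + abs(py - ty)
            count += 1
    return total / count if count else 0.0
```

In a training loop, the student's predicted tracks would be compared against the output of `pseudo_label` with a loss such as `track_loss`, so the unlabelled video never needs manual annotation. Sampling a different teacher each time exposes the student to several sources of supervision rather than to the errors of a single one.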

CoTracker3 is considerably leaner and faster than other trackers in this field. It has half as many parameters as its predecessor, CoTracker. It is also 27% faster than the fastest competing tracker, owing to its global matching strategy and use of an MLP. CoTracker3 is highly competitive with other trackers across various benchmarks, and in some cases it even surpasses state-of-the-art models. Comparing CoTracker3's two versions, the offline model tracked occluded points better, whereas the online model could track in real time without memory constraints.

CoTracker3 took inspiration from earlier models and combined their strengths into a smaller package. It used a simple semi-supervised training protocol in which videos were annotated by various off-the-shelf trackers and then used to fine-tune a model that outperformed all the other trackers, showing that beauty does lie in simplicity.


The post Meta AI Releases Cotracker3: A Semi-Supervised Tracker that Produces Better Results with Unlabelled Data and Simple Architecture appeared first on MarkTechPost.
