Deep neural networks are powerful tools that excel at learning complex patterns, but understanding how they efficiently compress input data into meaningful representations remains a challenging research problem. Researchers from the University of California, Los Angeles, and New York University propose a new metric, called local rank, to measure the intrinsic dimensionality of feature manifolds within neural networks. They show that as training progresses, particularly during the final stages, local rank tends to decrease, indicating that the network compresses its learned representations. The paper presents both theoretical analysis and empirical evidence for this phenomenon, and it links the reduction in local rank to the implicit regularization mechanisms of neural networks, offering a perspective that connects feature manifold compression to the Information Bottleneck framework.

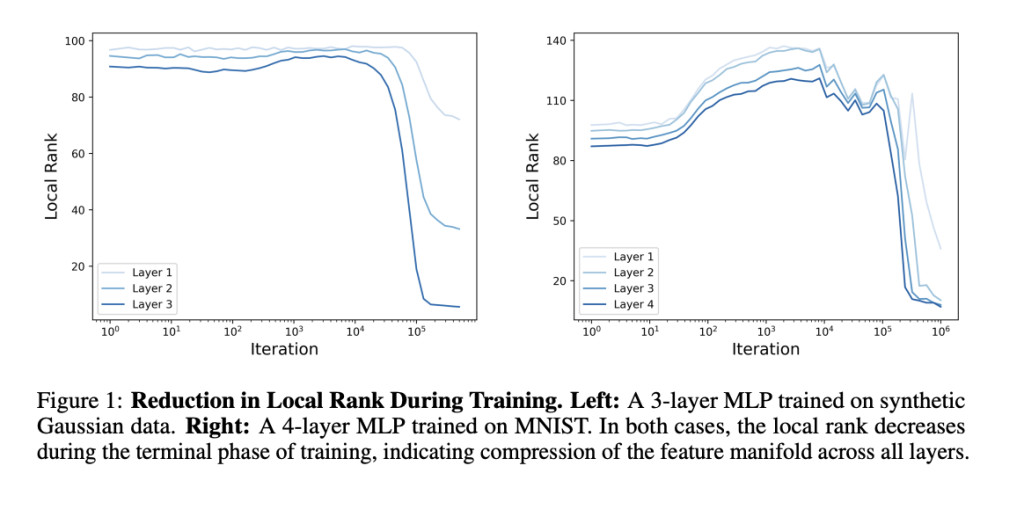

The proposed framework centers on the definition and analysis of local rank, defined as the expected rank of the Jacobian of a layer's pre-activations with respect to the network input. This metric captures the effective number of feature dimensions in each layer of the network. The theoretical analysis suggests that, under certain conditions, gradient-based optimization leads to solutions in which intermediate layers develop low local ranks, effectively forming bottlenecks. This bottleneck effect is an outcome of implicit regularization, which drives the network toward low-rank weight matrices as it learns to classify or predict. Empirical studies on both synthetic data and the MNIST dataset showed a consistent decrease in local rank across all layers during the final phase of training.
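
To make the definition concrete, here is a minimal sketch (not the authors' code) that estimates local rank for a small ReLU MLP. It assumes Gaussian-initialized weights, zero biases, synthetic Gaussian inputs, and a numerical rank tolerance of `1e-6`; the helper names `init_mlp`, `preactivation_jacobians`, and `local_rank` are hypothetical. The pre-activation Jacobians are built exactly with the chain rule, and the expectation over inputs is approximated by a Monte Carlo average.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def init_mlp(widths, rng):
    """Hypothetical helper: Gaussian-initialized (W, b) pairs for an MLP with the given widths."""
    params = []
    for d_in, d_out in zip(widths[:-1], widths[1:]):
        W = rng.normal(0.0, 1.0 / np.sqrt(d_in), size=(d_out, d_in))
        b = np.zeros(d_out)
        params.append((W, b))
    return params


def preactivation_jacobians(params, x):
    """Return dz_l/dx for every layer l at input x, where
    z_1 = W_1 x + b_1 and z_l = W_l relu(z_{l-1}) + b_l."""
    jacobians = []
    h = x
    dh_dx = np.eye(x.shape[0])  # Jacobian of the current layer's input w.r.t. x
    for W, b in params:
        z = W @ h + b
        dz_dx = W @ dh_dx  # chain rule through the affine map
        jacobians.append(dz_dx)
        h = relu(z)
        # The ReLU derivative is a 0/1 mask applied row-wise.
        dh_dx = (z > 0).astype(float)[:, None] * dz_dx
    return jacobians


def local_rank(params, inputs, tol=1e-6):
    """Monte Carlo estimate of E_x[rank(dz_l/dx)] for every layer l.
    Singular values below `tol` are treated as zero (an assumed threshold)."""
    totals = np.zeros(len(params))
    for x in inputs:
        for l, J in enumerate(preactivation_jacobians(params, x)):
            totals[l] += np.linalg.matrix_rank(J, tol=tol)
    return totals / len(inputs)


# Usage: a width-2 hidden layer acts as a hard bottleneck.
rng = np.random.default_rng(0)
params = init_mlp([10, 5, 2, 8], rng)
inputs = rng.normal(size=(200, 10))  # synthetic Gaussian inputs
print(local_rank(params, inputs))
```

In this toy network the first entry is 5 (a random 5×10 weight matrix has full rank), while every later entry is at most 2, because every path from the input passes through the width-2 layer. Real training dynamics, which the paper studies, are of course not captured by a randomly initialized network.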

The experiments reveal a consistent pattern: when training a 3-layer multilayer perceptron (MLP) on synthetic Gaussian data and a 4-layer MLP on the MNIST dataset, the researchers observed a significant reduction in local rank during the final training stages. The reduction occurred across all layers, aligning with the compression phase predicted by Information Bottleneck theory. The authors also tested deep variational information bottleneck (VIB) models and showed that local rank is closely linked to the IB trade-off parameter β, with clear phase transitions in local rank as β changes. These findings support the hypothesis that local rank indicates the degree of information compression occurring within the network.
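
For readers unfamiliar with how β enters a VIB model, the sketch below computes the commonly used deep VIB objective: a classification cross-entropy on a sampled code z plus β times the KL divergence from the encoder's Gaussian q(z|x) to a standard normal prior. This is an assumed, generic formulation rather than the paper's exact setup; the encoder outputs `mu` and `log_var`, the linear decoder `decoder_W`, and the function names are hypothetical.

```python
import numpy as np


def gaussian_kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions."""
    return 0.5 * np.sum(np.exp(log_var) + mu**2 - 1.0 - log_var, axis=-1)


def softmax_cross_entropy(logits, labels):
    """Per-example cross-entropy with integer class labels."""
    logits = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=-1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels]


def vib_loss(mu, log_var, decoder_W, labels, beta, rng):
    """One-sample Monte Carlo estimate of the deep VIB objective:
    mean over the batch of CE(y, decoder(z)) + beta * KL(q(z|x) || N(0, I)),
    where z = mu + sigma * eps (reparameterization trick)."""
    eps = rng.normal(size=mu.shape)
    z = mu + np.exp(0.5 * log_var) * eps
    logits = z @ decoder_W.T  # hypothetical linear decoder
    ce = softmax_cross_entropy(logits, labels)
    kl = gaussian_kl_to_standard_normal(mu, log_var)
    return np.mean(ce + beta * kl), np.mean(ce), np.mean(kl)
```

Larger β puts more weight on the KL term, pushing the encoder toward codes that carry less information about the input; this is the trade-off along which the authors report phase transitions in local rank.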

In conclusion, this research introduces local rank as a useful metric for understanding how neural networks compress learned representations. The theoretical insights, backed by empirical evidence, show that deep networks naturally reduce the dimensionality of their feature manifolds during training, which the authors tie to their ability to generalize. By relating local rank to Information Bottleneck theory, the authors provide a new lens through which to view representation learning. Future work could extend this analysis to other network architectures and explore practical applications in model compression and generalization.



The post Understanding Local Rank and Information Compression in Deep Neural Networks appeared first on MarkTechPost.
