Bayesian Optimization, widely used in experimental design and black-box optimization, traditionally relies on regression models that predict the performance of candidate solutions within a fixed search space. Many of these regressors are task-specific, however, because of their modeling assumptions and input constraints. The problem is most pronounced in learning-based regression, which depends on fixed-length tensor inputs. Recent advances in large language models (LLMs) offer a way around this: by representing search-space candidates as strings and embedding them, a single, more universal regressor can generalize across tasks instead of being tied to one input format.

Bayesian Optimization uses a regressor to solve black-box optimization problems while balancing exploration and exploitation. The field has traditionally been dominated by Gaussian Process (GP) regressors, and recent efforts have focused on improving GP hyperparameters through pretraining or feature engineering. Neural network approaches such as Transformers offer more flexibility, but they are limited by fixed input dimensions, which restricts them to tasks with structured inputs. More recent work proposes embedding string representations of search-space candidates instead. This lets an efficient, trainable regressor handle diverse inputs and longer sequences and make precise predictions across varying output scales, improving optimization performance.

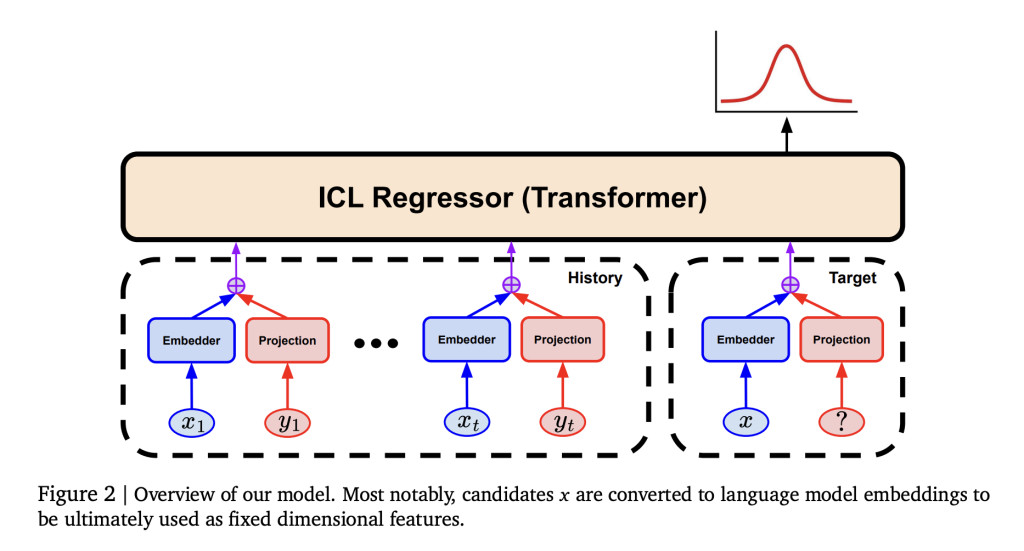

Researchers from UCLA, Google DeepMind, and Google propose the "Embed-then-Regress" paradigm for in-context regression using string embeddings from pretrained language models. Converting all inputs into string representations enables general-purpose regression for Bayesian Optimization across diverse tasks, including synthetic, combinatorial, and hyperparameter optimization. The framework uses LLM-based embeddings to map strings to fixed-length vectors, which can then be fed to tensor-based regressors such as Transformer models. Pretraining on large offline datasets allows the regressor to make uncertainty-aware predictions for unseen objectives. Combined with explore-exploit techniques, the framework delivers results comparable to state-of-the-art Gaussian Process-based optimization algorithms.
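To make the string-to-vector step concrete, here is a minimal sketch of the idea, not the researchers' implementation. It assumes a JSON serialization of candidates and uses a hypothetical `toy_embed` function (hashed character trigrams) as a stand-in for a pretrained language-model encoder. The point is only that candidates from very different search spaces end up as vectors of the same length.

```python
import hashlib
import json


def serialize(candidate: dict) -> str:
    """Render a candidate as a canonical string (sorted keys, compact JSON).

    The JSON format is an assumption made for this sketch.
    """
    return json.dumps(candidate, sort_keys=True, separators=(",", ":"))


def toy_embed(text: str, dim: int = 16) -> list:
    """Placeholder for a pretrained language-model encoder (hypothetical).

    Hashes character trigrams into `dim` buckets and L2-normalizes, so any
    string, whatever its length, maps to a vector of the same size.
    """
    vec = [0.0] * dim
    for i in range(max(len(text) - 2, 1)):
        gram = text[i:i + 3]
        bucket = int(hashlib.md5(gram.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = sum(v * v for v in vec) ** 0.5
    return [v / norm for v in vec]


# Two candidates from unrelated search spaces.
hyperparams = {"learning_rate": 3e-4, "optimizer": "adam", "layers": 4}
permutation = {"order": [2, 0, 3, 1]}

s1, s2 = serialize(hyperparams), serialize(permutation)
e1, e2 = toy_embed(s1), toy_embed(s2)

print(s1)
print(len(e1), len(e2))  # both vectors have the same fixed length
```

Because both candidates are reduced to strings first, the downstream regressor never needs to know whether it is looking at hyperparameters or a permutation.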

The method uses an embedding-based regressor for Bayesian optimization, mapping string inputs to fixed-length vectors via a language model. A Transformer processes these embeddings to predict outcomes, and its predictions feed an acquisition function that balances exploration and exploitation. The model is pretrained on offline tasks and conditions on the history of past evaluations to make uncertainty-aware predictions. At inference time, it outputs a mean and a deviation for each candidate, and these guide the optimization. The approach is computationally efficient: it pairs a T5-XL encoder with a smaller Transformer and requires only moderate GPU resources, keeping inference cost low while still scaling to many predictions.
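As an illustration of how a predicted mean and deviation can drive the explore-exploit trade-off, the sketch below scores candidates with an upper confidence bound (UCB), one common acquisition function. The article does not say which acquisition function the researchers use, so the UCB form, the `beta` parameter, and the hard-coded predictions are all assumptions for this example.

```python
def upper_confidence_bound(mean: float, std: float, beta: float) -> float:
    """Score a candidate: predicted value plus a bonus for uncertainty."""
    return mean + beta * std


def pick_next(predictions: dict, beta: float) -> str:
    """Return the candidate string with the highest UCB score.

    `predictions` maps each candidate string to a (mean, std) pair, standing
    in for the regressor's output (hypothetical values below).
    """
    return max(
        predictions,
        key=lambda c: upper_confidence_bound(*predictions[c], beta),
    )


# Hypothetical regressor outputs for three string-encoded candidates.
predictions = {
    "x=0.1": (0.50, 0.05),
    "x=0.5": (0.45, 0.30),  # lower mean, but highly uncertain
    "x=0.9": (0.60, 0.01),  # highest mean, almost no uncertainty
}

greedy_choice = pick_next(predictions, beta=0.0)       # pure exploitation
exploring_choice = pick_next(predictions, beta=1.0)    # rewards uncertainty

print(greedy_choice, exploring_choice)
```

With `beta=0.0` the rule simply takes the best predicted mean, while a larger `beta` shifts the choice toward candidates the model is unsure about.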

The experiments are designed to demonstrate the versatility of Embed-then-Regress across a wide range of tasks, emphasizing broad applicability over tuning for specific domains. The algorithm was evaluated on synthetic, combinatorial, and hyperparameter optimization problems, with performance averaged over multiple runs. The results show that the method handles mixes of continuous and categorical parameters effectively, offering a flexible solution for different problem types without domain-specific adjustments.

In conclusion, the Embed-then-Regress method showcases the flexibility of string-based in-context regression for Bayesian Optimization across diverse problems, achieving results comparable to standard GP methods while handling complex data types such as permutations and combinations. Future research could focus on developing a universal in-context regression model by pretraining across many domains and on improving architectural aspects, such as learning how to aggregate Transformer outputs. Additional applications could include prompt optimization and code search, which currently rely on less efficient algorithms. Applying the approach to process-based reward modeling and stateful environments in language modeling is another promising direction.


The post Embed-then-Regress: A Versatile Machine Learning Approach for Bayesian Optimization Using String-Based In-Context Regression appeared first on MarkTechPost.
