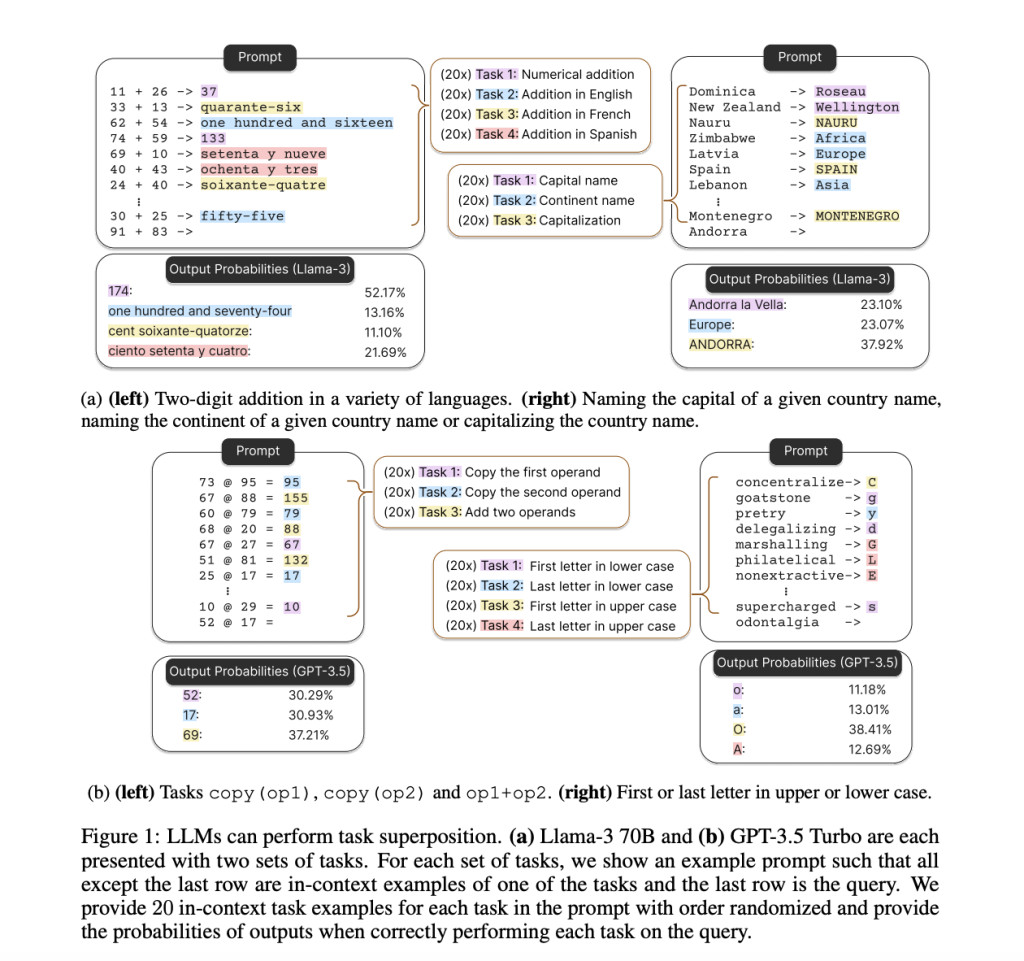

Large Language Models (LLMs) have demonstrated remarkable proficiency in In-Context Learning (ICL), the ability to complete a task from just a few examples included in the input prompt, with no further training. A notable feature of ICL is that a model can perform several computationally distinct ICL tasks simultaneously in a single inference call, a phenomenon called task superposition. When the prompt contains relevant examples for each of several tasks, the LLM can process them together and produce responses that correspond to those tasks.
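To make the setup concrete, here is a minimal sketch of a prompt that mixes examples from several tasks. The three toy tasks, the `->` separator, and the function names are illustrative assumptions, not the prompts used in the study.

```python
# Hypothetical toy tasks; names and examples are illustrative only.
TASKS = {
    "length": lambda w: str(len(w)),
    "reverse": lambda w: w[::-1],
    "uppercase": lambda w: w.upper(),
}


def build_mixed_prompt(words, query):
    """Build a few-shot prompt whose examples cycle through several tasks."""
    names = sorted(TASKS)
    lines = []
    for i, word in enumerate(words):
        task = names[i % len(names)]  # round-robin over the tasks
        lines.append(f"{word} -> {TASKS[task](word)}")
    lines.append(f"{query} ->")  # the model is asked to complete this line
    return "\n".join(lines)


def valid_completions(query):
    """Return every answer that would be correct under one of the tasks."""
    return {name: fn(query) for name, fn in TASKS.items()}


if __name__ == "__main__":
    print(build_mixed_prompt(["cat", "dog", "sun"], "bird"))
    print(valid_completions("bird"))
```

Under task superposition, a model given such a prompt would be expected to spread probability over several of the valid completions rather than commit to a single task.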

A recent study from the University of Wisconsin-Madison, the University of Michigan, and Microsoft Research provides empirical evidence of task superposition across different LLM families and scales. Even models trained to learn in context one task at a time exhibit this capacity to perform several tasks simultaneously. This suggests that simultaneous processing emerges during inference rather than depending directly on the type of training.

Theoretically, task superposition is consistent with the capabilities of transformer architectures, which underlie most contemporary LLMs. Self-attention lets a transformer attend to different segments of the input as needed, which is why these models handle intricate patterns and dependencies well. The same flexibility lets them represent and interpret task-specific information for several tasks within a single prompt and generate responses that address those tasks simultaneously.

The study also explores how LLMs handle task superposition internally. It examines how they combine task vectors, i.e., the internal representations that are specific to each task. In essence, the model balances these task-specific representations in its internal state during inference, which lets it generate valid outputs for each task type present in the input.
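One way to picture this balancing is a convex combination of task vectors, which the study reports can replicate the effect of superposition. The sketch below shows only the arithmetic: the vectors are made-up two-dimensional lists, whereas real task vectors would be extracted from a model's hidden states, and the function name is hypothetical.

```python
def convex_combination(task_vectors, weights, tol=1e-9):
    """Mix task vectors with non-negative weights that sum to 1.

    task_vectors: list of equal-length lists of floats (stand-ins for
    per-task internal representations; an assumption for illustration).
    weights: one weight per task vector.
    """
    if not task_vectors or len(task_vectors) != len(weights):
        raise ValueError("need exactly one weight per task vector")
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > tol:
        raise ValueError("weights must be non-negative and sum to 1")
    dim = len(task_vectors[0])
    if any(len(v) != dim for v in task_vectors):
        raise ValueError("all task vectors must have the same length")
    # Weighted sum, computed coordinate by coordinate.
    return [
        sum(w * v[i] for w, v in zip(weights, task_vectors))
        for i in range(dim)
    ]


if __name__ == "__main__":
    copy_task = [1.0, 0.0]      # toy vector for a hypothetical "copy" task
    reverse_task = [0.0, 1.0]   # toy vector for a hypothetical "reverse" task
    print(convex_combination([copy_task, reverse_task], [0.25, 0.75]))
```

Changing the weights shifts the mixed vector toward one task or the other, which is the intuition behind steering the balance between tasks.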

One of the study’s main conclusions is that larger LLMs are typically better at performing several tasks at once. As model size grows, the model can solve more tasks concurrently, and its output probabilities become better calibrated to the mix of tasks in the prompt. Larger models are therefore better at multitasking and produce more precise and dependable answers across the tasks they are given.
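Calibration in this sense can be illustrated by comparing the share of in-context examples per task with the probability the model assigns to each task's answer. The sketch below uses KL divergence for the comparison; that metric, the task labels, and the output probabilities are assumptions chosen for illustration, not measurements from the study.

```python
import math
from collections import Counter


def task_mixture(example_tasks):
    """Fraction of in-context examples that belong to each task."""
    counts = Counter(example_tasks)
    total = sum(counts.values())
    return {task: n / total for task, n in counts.items()}


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) over task labels; smaller means q tracks p more closely."""
    return sum(
        p_t * math.log(p_t / max(q.get(task, 0.0), eps))
        for task, p_t in p.items()
        if p_t > 0
    )


if __name__ == "__main__":
    # Half the prompt examples come from each of two hypothetical tasks.
    mixture = task_mixture(["reverse", "reverse", "uppercase", "uppercase"])
    # Made-up output probabilities for two hypothetical models.
    well_calibrated = {"reverse": 0.5, "uppercase": 0.5}
    poorly_calibrated = {"reverse": 0.9, "uppercase": 0.1}
    print(kl_divergence(mixture, well_calibrated))
    print(kl_divergence(mixture, poorly_calibrated))
```

A model whose output distribution matches the prompt's task mixture gets a divergence near zero, while one that collapses onto a single task gets a larger value.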

These findings shed light on the fundamental capabilities of LLMs and lend credence to the view that these models are a superposition of simulators. According to this view, an LLM can simulate a variety of task-specific models internally, which lets it respond flexibly depending on the input’s context. The results also raise interesting questions about how LLMs actually accomplish several tasks at once, including whether the ability is a result of training and optimization or stems from a deeper structural property of the model. A deeper understanding of these mechanisms may help identify the limitations and possible uses of LLMs on intricate, multifaceted tasks.

The team has summarized their primary contributions as follows.

- Through comprehensive experimental and theoretical analysis, the team shows that task superposition is a common phenomenon across different pretrained LLM families, including GPT-3.5, Llama-3, and Qwen.
- The team shows empirically that task superposition can arise even when the model is trained to learn in context only one task at a time, suggesting that this ability does not depend primarily on multi-task training.
- The team offers a theoretical framework showing that transformer models have an innate capacity to perform numerous tasks at once by using their structure for parallel task processing.
- The study explores how LLMs internally manage and mix task vectors and finds that convex combinations of these vectors can replicate the effect of superposition.
- Larger models are found to handle more tasks at once and to capture the distribution of in-context examples more accurately, which yields more accurate outputs.

The post How Large Language Models (LLMs) can Perform Multiple, Computationally Distinct In-Context Learning (ICL) Tasks Simultaneously appeared first on MarkTechPost.
