Generating effective agentic workflows for Large Language Models (LLMs) remains a challenge. Despite the remarkable capabilities LLMs show across diverse tasks, building workflows that combine multiple LLMs into coherent sequences is labor-intensive, which limits scalability and adaptability to new tasks. Existing efforts to automate workflow generation still depend on some human intervention, which makes broad generalization and effective skill transfer for LLMs difficult to achieve.

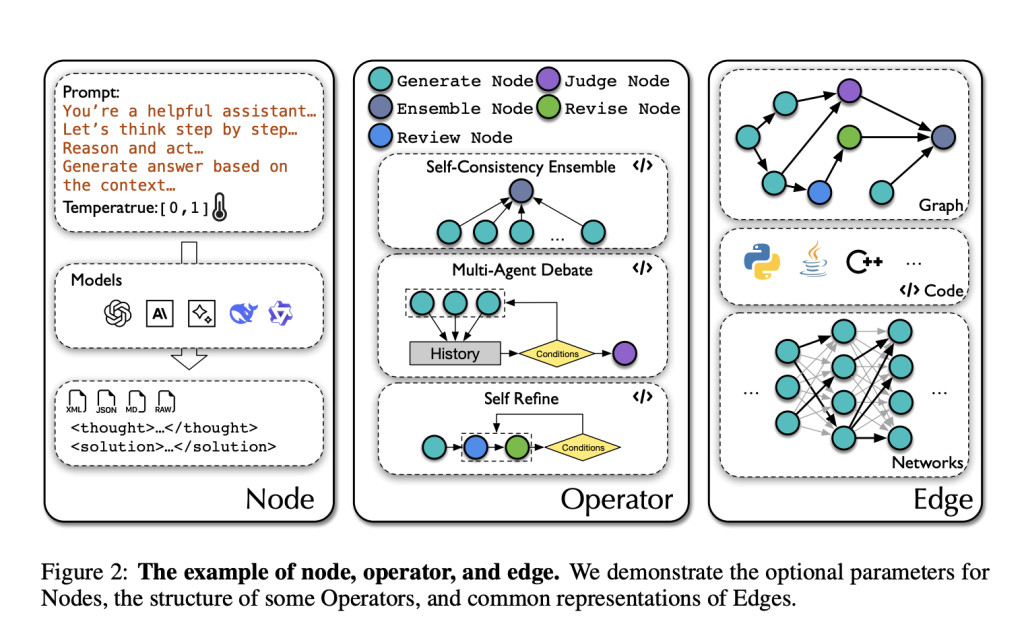

A team of researchers from DeepWisdom, The Hong Kong University of Science and Technology (Guangzhou), Renmin University of China, Nanjing University, Fudan University, King Abdullah University of Science and Technology, Université de Montréal & Mila, and The Hong Kong University of Science and Technology introduce AFlow, a novel framework for automating agentic workflow generation. AFlow addresses these challenges by framing workflow optimization as a search over code-represented workflows. Each workflow is modeled as a graph in which nodes represent LLM-invoking actions and edges represent the dependencies between those actions. Using Monte Carlo Tree Search (MCTS), AFlow optimizes workflows iteratively: it modifies a workflow, executes it, and refines the structure based on execution feedback.
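To make the modify-execute-refine loop concrete, here is a minimal Python sketch of MCTS over workflows. It assumes a heavily simplified setting: a workflow is just a tuple of operator names, `propose_modification` randomly inserts or drops an operator in place of AFlow's LLM-proposed code edits, and `toy_score` is a made-up scorer standing in for executing the workflow on a benchmark. Selection uses the standard UCT rule, which may differ from the selection strategy described in the paper; all names here are hypothetical.

```python
import math
import random
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Hypothetical simplification: a workflow is an ordered tuple of operator
# names rather than AFlow's full code representation.
Workflow = Tuple[str, ...]
OPERATORS = ("Generate", "Review&Revise", "Ensemble", "Test", "Format")
MAX_CHILDREN = 3  # modifications tried per workflow before descending further


@dataclass
class Node:
    workflow: Workflow
    parent: Optional["Node"] = None
    children: List["Node"] = field(default_factory=list)
    visits: int = 0
    total_score: float = 0.0

    def uct(self, c: float = 1.4) -> float:
        # Standard UCT: mean score plus an exploration bonus.
        if self.visits == 0:
            return float("inf")
        exploit = self.total_score / self.visits
        explore = c * math.sqrt(math.log(self.parent.visits) / self.visits)
        return exploit + explore


def propose_modification(wf: Workflow, rng: random.Random) -> Workflow:
    """Stand-in for an LLM-based optimizer: add or drop one operator."""
    if len(wf) > 1 and rng.random() < 0.3:
        i = rng.randrange(len(wf))
        return wf[:i] + wf[i + 1:]
    i = rng.randrange(len(wf) + 1)
    return wf[:i] + (rng.choice(OPERATORS),) + wf[i:]


def mcts_search(initial: Workflow, evaluate: Callable[[Workflow], float],
                iterations: int = 100, seed: int = 0) -> Tuple[Workflow, float]:
    rng = random.Random(seed)
    root = Node(initial)
    best = (initial, evaluate(initial))
    for _ in range(iterations):
        # 1. Selection: descend by UCT through fully expanded nodes.
        node = root
        while len(node.children) >= MAX_CHILDREN:
            node = max(node.children, key=lambda ch: ch.uct())
        # 2. Expansion: derive a modified workflow as a new child.
        child = Node(propose_modification(node.workflow, rng), parent=node)
        node.children.append(child)
        # 3. Evaluation: "execute" the workflow to obtain feedback.
        score = evaluate(child.workflow)
        if score > best[1]:
            best = (child.workflow, score)
        # 4. Backpropagation: propagate the score up to the root.
        n: Optional[Node] = child
        while n is not None:
            n.visits += 1
            n.total_score += score
            n = n.parent
    return best


def toy_score(wf: Workflow) -> float:
    """Hypothetical benchmark: reward useful operators, penalize length."""
    useful = {"Generate", "Ensemble", "Review&Revise"}
    return len(useful & set(wf)) - 0.1 * len(wf)


best_wf, best_score = mcts_search(("Generate",), toy_score)
print(best_wf, best_score)
```

Capping the number of children per node (`MAX_CHILDREN`) lets the search keep widening promising workflows instead of degenerating into a single chain of edits.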

AFlow is built to explore and optimize workflows efficiently with minimal human involvement. Representing workflows as nodes and edges lets it model complex relationships between LLM actions. The nodes are connected in a tree-like structure, enabling diverse configurations that adapt to tasks of varying complexity. AFlow uses predefined operators, such as “Ensemble” or “Review & Revise,” which serve as modular building blocks. Workflow optimization proceeds through a series of phases, including node exploration, expansion using LLM-based feedback, and experience backpropagation, so that AFlow refines its workflows with each iteration.
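Operators of this kind can be pictured as plain functions that wrap LLM calls and compose into larger workflows. The sketch below is illustrative only: `fake_llm` is a hypothetical scripted stand-in for a real model, and these function names and signatures are not AFlow's actual operator interface. "Ensemble" is approximated here by majority voting over sampled answers, and "Review & Revise" by a critique-then-rewrite loop.

```python
from collections import Counter
from typing import Callable

# Hypothetical LLM interface: prompt in, text out.
LLM = Callable[[str], str]


def generate(llm: LLM, task: str) -> str:
    """Basic node: a single LLM call that drafts an answer."""
    return llm(f"Solve: {task}")


def ensemble(llm: LLM, task: str, n: int = 3) -> str:
    """Ensemble operator (sketch): sample n candidates, keep the most common."""
    candidates = [generate(llm, task) for _ in range(n)]
    return Counter(candidates).most_common(1)[0][0]


def review_and_revise(llm: LLM, task: str, draft: str, rounds: int = 1) -> str:
    """Review & Revise operator (sketch): critique the draft, then rewrite it."""
    for _ in range(rounds):
        feedback = llm(f"Review this answer to '{task}': {draft}")
        if feedback == "OK":
            break
        draft = llm(f"Revise '{draft}' using feedback: {feedback}")
    return draft


def workflow(llm: LLM, task: str) -> str:
    """One possible composition: Ensemble feeds into Review & Revise."""
    draft = ensemble(llm, task)
    return review_and_revise(llm, task, draft)


def fake_llm(prompt: str) -> str:
    """Hypothetical scripted model: drafts '41', flags it, then revises to '42'."""
    if prompt.startswith("Solve:"):
        return "41"
    if prompt.startswith("Review"):
        return "OK" if "42" in prompt else "off by one"
    return "42"


print(workflow(fake_llm, "6*7"))  # prints 42
```

Because each operator only depends on the `llm` callable and its inputs, a search procedure can reorder, nest, or swap operators without touching their internals, which is what makes them usable as building blocks.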

The results of this study, based on six benchmark datasets (HumanEval, MBPP, MATH, GSM8K, HotPotQA, and DROP), demonstrate that AFlow significantly outperforms state-of-the-art manually designed workflows as well as existing automated optimization approaches. Specifically, AFlow achieves an average performance improvement of 5.7% over manually designed methods and a 19.5% improvement over existing automated systems such as ADAS. The researchers also report that AFlow can generate workflows that enable smaller LLMs to outperform larger models such as GPT-4o at only 4.55% of GPT-4o's inference cost, making it a cost-effective alternative for a wide variety of tasks.

In conclusion, AFlow makes significant strides in reducing the need for manual effort in designing agentic workflows, thereby expanding the potential for LLMs to solve a diverse array of tasks effectively. By using MCTS for workflow search and optimization, AFlow not only automates the process but also achieves better performance and cost-efficiency compared to existing methods. This advancement provides a strong foundation for future research in automating workflow generation, making LLMs more accessible and efficient for real-world applications.

Check out the Paper. All credit for this research goes to the researchers of this project.


The post AFlow: A Novel Artificial Intelligence Framework for Automated Workflow Optimization appeared first on MarkTechPost.
