In today’s data-driven world, personalized recommendations play a crucial role in enhancing user experiences and driving business growth. Amazon Personalize, a fully managed machine learning (ML) service, enables companies to build sophisticated recommendation systems tailored to their specific use cases. One of the powerful features of Amazon Personalize is BatchInference, which allows you to generate recommendations for a large number of users or items in a single batch operation.

Unlike real-time recommendations, which are generated on demand for one user at a time, BatchInference processes large datasets in a single job, making it ideal for scenarios where you need to generate recommendations in advance or offline. The following are some common use cases for Amazon Personalize BatchInference:

Email marketing campaigns – Generate personalized product recommendations for each user in your email marketing list, increasing the relevance and engagement of your campaigns

Catalog merchandising – Curate personalized product listings or category pages for your ecommerce website, tailoring the shopping experience to individual user preferences

Content recommendations – Recommend relevant articles, videos, or other content to users based on their browsing history and interests, enhancing engagement and user retention

Offline analysis – Generate recommendations for offline analysis, such as identifying cross-sell or upsell opportunities, optimizing inventory management, or conducting market research

Although Amazon Personalize provides a convenient way to generate recommendations, storing and retrieving them efficiently can be a challenge, especially for large datasets. We see that customers use Amazon DynamoDB, a serverless, NoSQL, fully managed database with single-digit millisecond performance at any scale, to solve this challenge. As the number of recommendations or users grows, DynamoDB automatically scales database throughput and storage to nearly unlimited capacity. DynamoDB is well suited for workloads that require consistent high performance, which is essential for providing a smooth user experience when retrieving and displaying personalized recommendations in real time. In this post, we explore how to import pre-generated Amazon Personalize recommendations into DynamoDB.

Solution overview

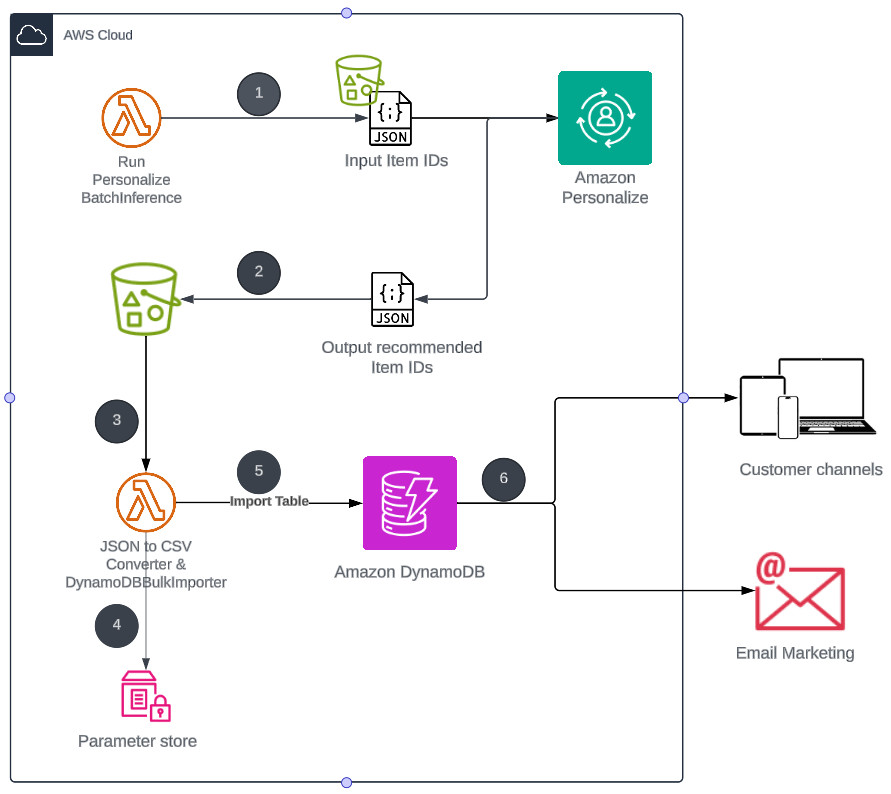

The solution uses Amazon Personalize for generating recommendations, Amazon Simple Storage Service (Amazon S3) for storing the output, AWS Lambda functions for orchestrating the workflow, and DynamoDB for storing and serving the recommendations efficiently. This setup allows for scalable, low-latency retrieval of product recommendations for customer-facing channels.

The preceding architecture diagram illustrates how to create a batch inference job in Amazon Personalize to generate recommendations and store them in DynamoDB.

The workflow consists of the following steps:

A Lambda function creates a batch inference job in Amazon Personalize. This function passes the necessary input parameters, such as the input JSON file or the Amazon Personalize solution Amazon Resource Name (ARN), and calls the Amazon Personalize CreateBatchInferenceJob API to create a batch inference job. Amazon Personalize runs the batch inference job on the supplied item IDs and generates recommendations for them based on the trained solution.

Amazon Personalize uploads the output JSON file containing the recommendations to an S3 bucket. The JSON file contains the seed product IDs and corresponding recommended product IDs.

The S3 bucket invokes another Lambda function using Amazon S3 Event Notifications when the JSON file is uploaded.

The Lambda function reads the output JSON file from Amazon S3, extracts the necessary data, and converts it into a CSV format suitable for importing into DynamoDB. It dynamically generates the table name and stores it in AWS Systems Manager Parameter Store. Because the table name lives in Parameter Store, the downstream application does not need to hardcode it. This decoupling makes maintenance easier, because the table name can change without modifying the application code.

The Lambda function then invokes the DynamoDB ImportTable API to insert bulk records into a DynamoDB table.

The recommendations stored in the DynamoDB table can now be used in various customer-facing channels. Applications or services can query the DynamoDB table to retrieve personalized recommendations for specific users or products, and display them on websites, mobile apps, or other customer touchpoints.
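The conversion in step 4 can be sketched as follows. This is a minimal illustration, not the code deployed by the stack. It assumes the batch output is in the JSON Lines layout that Amazon Personalize documents for item-based jobs (`input.itemId`, `output.recommendedItems`, `error`). The CSV column names `ItemId` and `Recommendations` and the `|` separator are assumptions made for this example.

```python
import csv
import io
import json


def batch_output_to_csv(json_lines: str, separator: str = "|") -> str:
    """Convert batch inference output (one JSON object per line) to CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    # Header row. These column names are assumptions for this sketch.
    writer.writerow(["ItemId", "Recommendations"])
    for line in json_lines.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        record = json.loads(line)
        if record.get("error"):
            continue  # skip rows for which no recommendations were produced
        seed_id = record["input"]["itemId"]
        recommended = record["output"]["recommendedItems"]
        # Pack all recommended IDs into one cell so each seed item is one row.
        writer.writerow([seed_id, separator.join(recommended)])
    return buffer.getvalue()
```

Packing the recommended IDs into a single cell keeps one row per seed item, which matters because a CSV import into DynamoDB stores non-key columns as strings.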

Note: During each Amazon S3 import process, DynamoDB creates a new target table into which the data is imported.
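Because every import creates a new table, the table name has to be generated for each run and published for readers. The following sketch shows one way to do that with the DynamoDB `ImportTable` and Systems Manager `PutParameter` operations. The timestamp-based naming scheme, the `ItemId` partition key, on-demand billing, and the parameter name are assumptions for illustration. The clients are expected to be `boto3.client("dynamodb")` and `boto3.client("ssm")`.

```python
from datetime import datetime, timezone
from typing import Optional


def make_table_name(now: datetime, prefix: str = "PersonalizeToDDB_") -> str:
    """Build a unique table name; the timestamp suffix is an assumed convention."""
    return prefix + now.strftime("%Y%m%d%H%M%S")


def build_import_request(bucket: str, key_prefix: str, table_name: str) -> dict:
    """Assemble keyword arguments for the DynamoDB ImportTable operation."""
    return {
        "S3BucketSource": {"S3Bucket": bucket, "S3KeyPrefix": key_prefix},
        "InputFormat": "CSV",  # the first CSV line is used as the header
        "InputCompressionType": "NONE",
        "TableCreationParameters": {
            "TableName": table_name,
            # "ItemId" as the partition key is an assumption for this sketch.
            "AttributeDefinitions": [
                {"AttributeName": "ItemId", "AttributeType": "S"}
            ],
            "KeySchema": [{"AttributeName": "ItemId", "KeyType": "HASH"}],
            "BillingMode": "PAY_PER_REQUEST",
        },
    }


def import_and_publish(dynamodb_client, ssm_client, bucket: str, key_prefix: str,
                       parameter_name: str, now: Optional[datetime] = None) -> str:
    """Start the import, then record the new table name in Parameter Store."""
    table_name = make_table_name(now or datetime.now(timezone.utc))
    dynamodb_client.import_table(
        **build_import_request(bucket, key_prefix, table_name)
    )
    # The import runs asynchronously, so the table may not be ready yet
    # when the parameter is updated.
    ssm_client.put_parameter(
        Name=parameter_name, Value=table_name, Type="String", Overwrite=True
    )
    return table_name
```

Since the import is asynchronous, a production version would likely confirm that the import has completed before pointing readers at the new table.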

Prerequisites

To deploy this solution, you must have the following:

An AWS account.

AWS Identity and Access Management (IAM) permissions to run an AWS CloudFormation template.

An Amazon Personalize dataset group and solution. Refer to Architecting near real-time personalized recommendations with Amazon Personalize to get started with Amazon Personalize.

Deploy the solution with AWS CloudFormation

Complete the following steps to create AWS resources to build a data import pipeline. For this post, we use the AWS Region us-east-1.

Launch the CloudFormation stack:

On the Create stack page, choose Next.

On the Specify stack details page, for PersonalizeSolutionVersionARN, enter the ARN of the solution version that you want to run BatchInference on, then choose Next.

On the Configure stack options page, keep the default selections and choose Next.

On the Review page, acknowledge that AWS CloudFormation might create IAM resources, then choose Submit.

It may take up to 10 minutes for the stack to deploy. After it is deployed, the stack status changes to CREATE_COMPLETE. You can check the Resources tab for the resources created by the CloudFormation stack.

Download the JSON file. This file contains the item IDs of various sample products that Amazon Personalize is trained on. We use this file as input for the Amazon Personalize CreateBatchInferenceJob API, enabling us to generate recommended product IDs tailored to the specified products.

Upload the JSON file to the S3 bucket created by the CloudFormation stack.

You can obtain the S3 bucket name on the Resources tab of the CloudFormation stack, for the resource PersonalizeToDDbS3Bucket.
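If you want to build your own input file instead of using the sample, the following sketch shows the shape Amazon Personalize expects for item-based batch jobs: one JSON object per line, each with an `itemId` key. The item IDs in the usage note are made up for illustration, and the contents of the downloadable sample file are not reproduced here.

```python
import json
from typing import Iterable


def build_batch_input(item_ids: Iterable) -> str:
    """Return JSON Lines text with one {"itemId": ...} object per line."""
    # Personalize expects IDs as strings, so coerce each value.
    return "".join(json.dumps({"itemId": str(item_id)}) + "\n" for item_id in item_ids)
```

For example, `build_batch_input(["105", "106"])` produces two lines, one per seed item, which you can save as the input file and upload to the S3 bucket.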

Test the solution

To test the solution, complete the following steps:

On the Lambda console, choose Functions in the navigation pane.

Choose the function PersonalizeToDDB_RunBatchInference.

On the Test tab, enter a name for the event (for example, BatchInferenceTest).

Choose Save, then choose Test.

Before you invoke the Lambda function, make sure you uploaded the BatchInference.json file to the S3 bucket as mentioned in the previous steps. The Lambda function starts the Amazon Personalize BatchInference job.

On the Amazon Personalize console, navigate to the batch inference jobs for your dataset group to check the status of the job.

It may take up to 30 minutes for the job to finish. The status will change to Active when the job is complete.

Upon completion, the job creates a BatchInference.json.out file and uploads it to the BatchInference folder of the same S3 bucket where the BatchInference.json file was uploaded.

As soon as BatchInferenceInput.json.out is uploaded to the S3 bucket, Amazon S3 invokes the PersonalizeToDDB_StoreModelCachetoDDB Lambda function. This function converts BatchInferenceInput.json.out to a CSV file, uploads it to the BatchInference subfolder of the S3 bucket, and creates a table in DynamoDB by invoking the ImportTable API.
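If you prefer to start and monitor the job from a script rather than the console, the steps above can be approximated as follows. This is a sketch under stated assumptions, not the code of the PersonalizeToDDB_RunBatchInference function. The job name, IAM role, S3 URIs, and polling settings are placeholders you supply, and `personalize_client` is expected to be `boto3.client("personalize")`. Polling like this suits a local script or notebook better than a Lambda function, because the job can outlast the Lambda timeout.

```python
import time
from typing import Callable


def build_job_request(job_name: str, solution_version_arn: str, role_arn: str,
                      input_uri: str, output_uri: str, num_results: int = 10) -> dict:
    """Assemble keyword arguments for CreateBatchInferenceJob."""
    return {
        "jobName": job_name,
        "solutionVersionArn": solution_version_arn,
        "roleArn": role_arn,  # role that Personalize assumes to access S3
        "numResults": num_results,
        "jobInput": {"s3DataSource": {"path": input_uri}},
        "jobOutput": {"s3DataDestination": {"path": output_uri}},
    }


def start_and_wait(personalize_client, request: dict, poll_seconds: int = 60,
                   max_polls: int = 60,
                   sleep: Callable[[float], None] = time.sleep) -> str:
    """Create the job, then poll until it is ACTIVE or has failed."""
    response = personalize_client.create_batch_inference_job(**request)
    job_arn = response["batchInferenceJobArn"]
    for _ in range(max_polls):
        job = personalize_client.describe_batch_inference_job(
            batchInferenceJobArn=job_arn
        )["batchInferenceJob"]
        if job["status"] == "ACTIVE":
            return job_arn  # job finished and output was written to S3
        if job["status"] == "CREATE FAILED":
            raise RuntimeError(f"Batch inference failed: {job.get('failureReason')}")
        sleep(poll_seconds)
    raise TimeoutError(f"Job {job_arn} did not finish after {max_polls} polls")
```

The `sleep` parameter exists only so the wait can be replaced in tests. In normal use the default `time.sleep` applies.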

Validate the data

To check the data, complete the following steps:

On the DynamoDB console, choose Tables in the navigation pane.

Choose the table starting with PersonalizeToDDB_.

On the table details page, choose Explore table items.

Confirm that item IDs are populated in the Recommendations attribute.

To retrieve recommended product IDs for a specific item from the DynamoDB table, you can use the GetItem API operation.
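A lookup along these lines might look like the following sketch. It reads the current table name from Parameter Store and then calls `GetItem`. The parameter name, the `ItemId` partition key, and the assumption that the `Recommendations` attribute holds a `|`-separated string are all illustrative and should be adjusted to match your table. The clients are expected to be `boto3.client("dynamodb")` and `boto3.client("ssm")`.

```python
from typing import List


def get_recommendations(dynamodb_client, ssm_client, parameter_name: str,
                        item_id: str, separator: str = "|") -> List[str]:
    """Return the recommended item IDs stored for one seed item."""
    # Resolve the table name at request time instead of hardcoding it.
    table_name = ssm_client.get_parameter(Name=parameter_name)["Parameter"]["Value"]
    response = dynamodb_client.get_item(
        TableName=table_name,
        Key={"ItemId": {"S": item_id}},  # assumed partition key name
    )
    item = response.get("Item")
    if item is None:
        return []  # no recommendations stored for this item
    # Assumes the attribute is a single string of separated IDs.
    return item["Recommendations"]["S"].split(separator)
```

In a latency-sensitive application you would probably cache the table name rather than read Parameter Store on every request.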

Clean up

To avoid incurring future charges, follow these steps to remove the resources:

On the Amazon S3 console, choose Buckets in the navigation pane.

Select the bucket created by the CloudFormation stack (the PersonalizeToDDbS3Bucket resource on the stack's Resources tab) and choose Empty.

On the Empty bucket page, enter permanently delete in the confirm deletion field and choose Empty.

On the AWS CloudFormation console, choose Stacks in the navigation pane.

Select the PersonalizeToDDB stack you created and choose Delete.

Conclusion

Combining the power of Amazon Personalize batch inference and DynamoDB offers a compelling solution for businesses seeking to deliver personalized experiences while providing optimal application latency. By pre-computing recommendations and using the high-performance capabilities of DynamoDB, you can provide a seamless and responsive user experience, ultimately driving customer satisfaction and loyalty. Whether you’re an ecommerce platform, a media streaming service, or any business that relies on personalized recommendations, embracing this solution can unlock new levels of efficiency and scalability. Stay ahead of the curve by using the cutting-edge technologies offered by AWS and redefine the boundaries of personalized experiences for your customers.

Do you have follow-up questions or feedback? Leave a comment. We’d love to hear your thoughts and suggestions. To get started with Amazon DynamoDB, refer to the Amazon DynamoDB Developer Guide.

About the Authors

Pritam Bedse is a Senior Solutions Architect at Amazon Web Services, helping Enterprise customers. His interests and experience include AI/ML, Analytics, Serverless Technology, and customer engagement platforms. Outside of work, you can find Pritam outdoors gardening and grilling.

Karthik Vijayraghavan is a Senior Manager of DynamoDB Specialist Solutions Architects at AWS. He has been helping customers modernize their applications using NoSQL databases. He enjoys solving customer problems and is passionate about providing cost-effective solutions that perform at scale. Karthik started his career as a developer building web and REST services with a strong focus on integration with relational databases, and he can relate to customers who are in the process of migrating to NoSQL.
