Large Language Models (LLMs) have gained significant attention for their versatility across tasks ranging from natural language processing to complex reasoning. A promising application of these models is the development of autonomous multi-agent systems (MAS), which aim to harness the collective intelligence of multiple LLM-based agents for collaborative problem-solving. However, LLM-based MAS face two critical challenges: keeping inter-agent communication efficient to minimize computational costs, and optimizing the collective performance of the system as a cohesive unit. Current methods do not address these challenges, and the resulting exchanges are overly detailed, which increases token usage, lengthens inference times, and raises computational costs.

The related work discussed in the paper falls into two areas: LLM-based MAS and iterative refinement of LLMs. Role-playing LLM-based MAS have shown promise in complex reasoning, collaborative software development, and embodied agent interactions, and current research has shown that increasing the number and diversity of agents can lead to performance gains. Separately, iterative refinement paradigms have been developed for individual LLMs, including self-reflection mechanisms and parameter-update methods such as ReST and STaR. These methods are effective in single-agent scenarios, but iterative refinement has yet to be explored in the LLM-based MAS context, and the existing methods have not been adapted to optimize the collective performance of multi-agent systems.

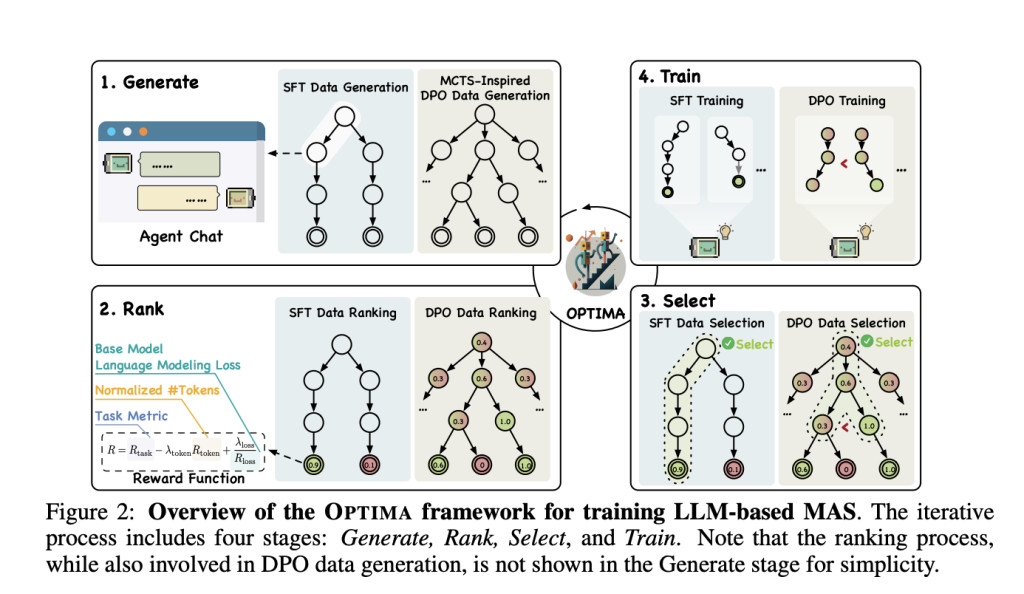

Researchers from Tsinghua University and Beijing University of Posts and Telecommunications have proposed OPTIMA, a novel framework designed to enhance both communication efficiency and task effectiveness in LLM-based MAS. It employs an iterative generate, rank, select, and train paradigm, utilizing a reward function that balances task performance, token efficiency, and communication readability. OPTIMA uses Monte Carlo Tree Search-inspired techniques for data generation, treating conversation turns as tree nodes to explore diverse interaction paths. The method addresses the fundamental challenges in LLM-based MAS, potentially leading to more scalable, efficient, and effective multi-agent systems.
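To make the rank and select steps more concrete, here is a minimal sketch of a reward that trades off task performance, token usage, and readability, followed by a top-k selection over sampled conversations. The article does not give the exact formula, so everything here is an assumption for illustration: the `Trajectory` container, the weights `lambda_token` and `lambda_loss`, the use of language-model loss as a readability proxy, and the specific functional form are all hypothetical and not taken from the paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Trajectory:
    """One sampled multi-agent conversation (hypothetical container)."""
    task_score: float  # task metric in [0, 1], e.g. F1 or accuracy
    num_tokens: int    # total tokens exchanged by the agents
    lm_loss: float     # language-model loss of the dialogue (assumed readability proxy)


def reward(traj: Trajectory, max_tokens: int,
           lambda_token: float = 0.5, lambda_loss: float = 0.5) -> float:
    """Assumed reward: task score, minus a token penalty, plus a readability bonus."""
    token_penalty = traj.num_tokens / max_tokens   # normalized to (0, 1]
    readability = 1.0 / (1.0 + traj.lm_loss)       # lower loss gives a higher bonus
    return traj.task_score - lambda_token * token_penalty + lambda_loss * readability


def rank_and_select(trajectories: List[Trajectory], top_k: int) -> List[Trajectory]:
    """Rank sampled conversations by reward and keep the best top_k for training."""
    max_tokens = max(t.num_tokens for t in trajectories)
    ranked = sorted(trajectories, key=lambda t: reward(t, max_tokens), reverse=True)
    return ranked[:top_k]


if __name__ == "__main__":
    samples = [
        Trajectory(task_score=0.8, num_tokens=1000, lm_loss=2.0),  # correct but verbose
        Trajectory(task_score=0.8, num_tokens=100, lm_loss=2.0),   # correct and concise
        Trajectory(task_score=0.2, num_tokens=50, lm_loss=2.0),    # concise but wrong
    ]
    for t in rank_and_select(samples, top_k=2):
        print(t)
```

With these assumed weights, a concise correct conversation ranks above an equally correct but verbose one, and both rank above a short but wrong one. In a full training loop the selected conversations would then feed the train step.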

OPTIMA is evaluated in two multi-agent settings: information exchange (IE) and debate. The IE setting uses datasets such as HotpotQA and CBT, with contexts split between the agents so that they need to exchange information. The debate setting uses GSM8K, MATH, ARC-C, and MMLU, with one agent acting as a solver and the other as a critic. OPTIMA is compared against single-agent approaches such as Chain-of-Thought and Self-Consistency, and against multi-agent baselines such as Multi-Agent Debate and AutoForm. Llama 3 8B serves as the base model, and the experiments focus on two-agent scenarios without external tools, allowing a clear analysis of the key elements of multi-agent communication and collaboration.

OPTIMA consistently outperforms baseline methods in both effectiveness and efficiency across different tasks. Its variants show substantial gains on IE tasks, especially in multi-hop reasoning scenarios. The iSFT-DPO variant stands out, delivering the best performance while greatly reducing token usage compared to the top baseline. For instance, it improves the F1 score by 38.3% on 2WMHQA while using only 10% of the tokens required by Multi-Agent Debate. In debate tasks, OPTIMA shows better performance and token efficiency on ARC-C and MMLU, while maintaining comparable performance with higher efficiency on MATH and GSM8K.
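For readers unfamiliar with the two quantities compared above, the sketch below computes a token-level F1 between a predicted and a reference answer, plus a relative token-usage ratio. It assumes the F1 reported here is the token-overlap F1 commonly used for question answering, which the article does not confirm; the function names and the simple whitespace tokenization are hypothetical choices for illustration.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between two answers, using lowercase whitespace tokens."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; exactly one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most min(count) times.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def relative_token_usage(method_tokens: int, baseline_tokens: int) -> float:
    """Fraction of the baseline's tokens that a method consumes."""
    return method_tokens / baseline_tokens


if __name__ == "__main__":
    print(token_f1("the Eiffel Tower", "Eiffel Tower"))
    print(relative_token_usage(100, 1000))
```

A ratio of 0.1 from `relative_token_usage` corresponds to a method using 10% of the baseline's tokens.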

In conclusion, the researchers introduced OPTIMA, a framework that enhances communication efficiency and task effectiveness in LLM-based MAS. It demonstrates consistent superiority over single-agent and multi-agent baselines across various tasks. The framework’s key innovations, including iterative training techniques, a balanced reward function, and an MCTS-inspired approach to data generation, contribute to these improvements. OPTIMA’s potential to improve inference scaling laws and adapt to out-of-distribution tasks highlights the importance of efficient communication in multi-agent and LLM systems. Future studies should investigate OPTIMA’s scalability to larger models and more complex scenarios, opening the door to even more advanced multi-agent systems.


The post OPTIMA: Enhancing Efficiency and Effectiveness in LLM-Based Multi-Agent Systems appeared first on MarkTechPost.