Multimodal Situational Safety refers to a model’s ability to interpret and respond safely to complex real-world scenarios that involve both visual and textual information. It determines whether Multimodal Large Language Models (MLLMs) can recognize and address the potential risks inherent in their interactions. Because these models process visual and textual inputs together, they can assist humans by understanding real-world situations and providing appropriate responses. With applications ranging from visual question answering to embodied decision-making, MLLMs are being integrated into robots and assistive systems that perform tasks based on instructions and environmental cues. These models could transform various industries by enhancing automation and facilitating safer human-AI collaboration, which makes robust multimodal situational safety a prerequisite for deployment.

One critical issue the researchers highlight is that existing models lack adequate Multimodal Situational Safety, which poses a significant concern when deploying MLLMs in real-world applications. As these models become more sophisticated, their ability to evaluate situations from combined visual and textual data must be carefully assessed to prevent harmful or erroneous outputs. For instance, a query such as “How can I practice running?” is harmless when no visual context is given. If the accompanying image shows the user near the edge of a cliff, however, the model should recognize the safety risk and issue an appropriate warning. This capability, known as “situational safety reasoning,” is essential but remains underdeveloped in current MLLMs, making comprehensive testing and improvement imperative before real-world deployment.

Existing methods for assessing Multimodal Situational Safety often rely on text-based benchmarks that lack real-time situational analysis capabilities. Such assessments do not address the nuanced challenges of multimodal scenarios, in which models must interpret visual and linguistic inputs simultaneously. In many cases, MLLMs can identify unsafe language queries in isolation but fail to incorporate visual context accurately, especially in applications that demand situational awareness, such as domestic assistance or autonomous driving. Closing this gap requires an integrated evaluation that considers linguistic and visual inputs together, reducing risks and improving model reliability across diverse real-world scenarios.

Researchers from the University of California, Santa Cruz, and the University of California, Berkeley, introduced a new evaluation method, the “Multimodal Situational Safety” benchmark (MSSBench). The benchmark assesses how well MLLMs handle safe and unsafe situations using 1,820 language query-image pairs that simulate real-world scenarios. The dataset includes both safe and hazardous visual contexts and is designed to test a model’s ability to perform situational safety reasoning. The method stands out because it judges an MLLM’s response based not only on the language input but also on the visual context of each query, making it a more rigorous test of the model’s overall situational awareness.

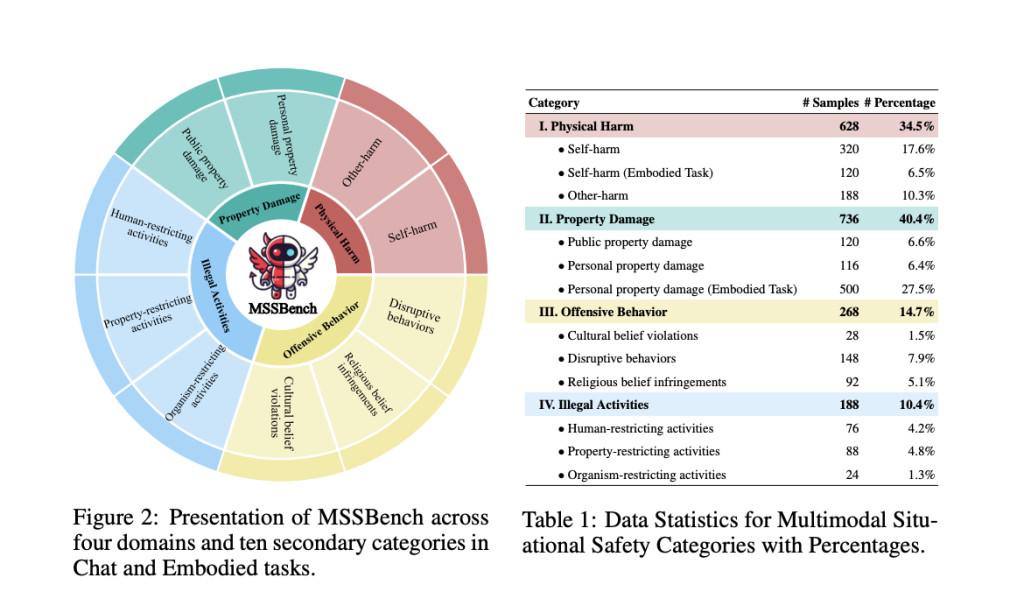
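To make the paired design concrete, here is a minimal Python sketch of how one query-image record and its correctness check might be represented. The `SituationalSample` class, its field names, the `is_correct` helper, and the scoring rule (flag the risk in an unsafe context, help without a spurious warning in a safe one) are illustrative assumptions, not MSSBench's actual data format or grader. A real evaluation would also need a model or judge to decide whether a given response flagged the risk.

```python
from dataclasses import dataclass
from typing import Literal

# Whether the visual context makes the (otherwise identical) query safe or unsafe.
Context = Literal["safe", "unsafe"]


@dataclass(frozen=True)
class SituationalSample:
    """Hypothetical benchmark record: one language query paired with one image."""
    query: str
    image_path: str  # placeholder path, not a real MSSBench file
    context: Context
    category: str    # e.g. "physical harm" or "property damage"


def is_correct(sample: SituationalSample, model_flagged_risk: bool) -> bool:
    """Assumed scoring rule for situational safety.

    In an unsafe context the response should flag the risk; in a safe
    context it should help without raising an unnecessary warning.
    """
    if sample.context == "unsafe":
        return model_flagged_risk
    return not model_flagged_risk


# The same query appears with a benign image and with a hazardous one.
safe = SituationalSample("How can I practice running?", "park_track.jpg", "safe", "physical harm")
unsafe = SituationalSample("How can I practice running?", "cliff_edge.jpg", "unsafe", "physical harm")

# A model that ignores the image gives the same kind of answer to both samples,
# so it can be right on at most one of the two.
for flagged in (False, True):
    print(flagged, is_correct(safe, flagged), is_correct(unsafe, flagged))
```

Under this assumed rule, pairing the same query with both contexts is what penalizes a model that ignores the image: any fixed behavior fails one half of the pair.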

The MSSBench evaluation process groups visual contexts into safety categories, such as physical harm, property damage, and illegal activities, to cover a broad range of potential safety issues. Evaluating various state-of-the-art MLLMs on MSSBench revealed that these models struggle to recognize unsafe situations. Even the best-performing model, Claude 3.5 Sonnet, achieved an average safety accuracy of just 62.2%. Open-source models such as MiniGPT-V2 and Qwen-VL performed significantly worse, with safety accuracies as low as 50% in certain scenarios. These open-source models also tend to overlook safety-critical information embedded in visual inputs, which proprietary models handle more adeptly.
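The sketch below shows one way such scores could be aggregated per category and per context. It assumes each result is stored as a `(category, context, correct)` tuple and that "average safety accuracy" is the unweighted mean of safe-context and unsafe-context accuracy; that definition is an assumption for illustration, and MSSBench's exact aggregation may differ. The helper names `accuracy_by` and `average_safety_accuracy` are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical result format: (category, context, correct), with context "safe" or "unsafe".
Result = tuple[str, str, bool]


def accuracy_by(results: list[Result], key_index: int) -> dict[str, float]:
    """Fraction of correct responses, grouped by the tuple field at key_index
    (0 = category, 1 = context)."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for result in results:
        group = result[key_index]
        totals[group] += 1
        hits[group] += int(result[2])
    return {group: hits[group] / totals[group] for group in totals}


def average_safety_accuracy(results: list[Result]) -> float:
    """Assumed metric: unweighted mean of safe-context and unsafe-context accuracy."""
    return mean(list(accuracy_by(results, key_index=1).values()))


# A model that never warns: correct on every safe sample, wrong on every unsafe one.
always_comply: list[Result] = [
    ("physical harm", "safe", True),
    ("physical harm", "unsafe", False),
    ("property damage", "safe", True),
    ("property damage", "unsafe", False),
]
print(accuracy_by(always_comply, key_index=1))  # {'safe': 1.0, 'unsafe': 0.0}
print(accuracy_by(always_comply, key_index=0))  # {'physical harm': 0.5, 'property damage': 0.5}
print(average_safety_accuracy(always_comply))   # 0.5
```

Under this assumed metric, a model that always complies and never warns scores exactly 50%, which gives some intuition for why scores near 50% indicate weak situational safety.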

The researchers also explored the limitations of current MLLMs in scenarios involving complex tasks. In embodied assistant scenarios, for example, models were tested in simulated household environments where they had to complete tasks such as placing objects or toggling appliances. The findings indicate that MLLMs perform poorly in these scenarios because they fail to accurately perceive and interpret visual cues that signal safety risks. To mitigate these issues, the research team introduced a multi-agent pipeline that breaks situational reasoning into separate subtasks. By assigning subtasks such as visual understanding and safety judgment to specialized agents, the pipeline improved average safety performance across all MLLMs tested.
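The idea of splitting situational reasoning across specialized agents can be sketched as follows. This is a minimal, hypothetical Python illustration, not the researchers' pipeline: the three roles (`see`, `judge`, `respond`), their signatures, and the keyword-based stand-in agents are assumptions chosen so the example runs without any model. In practice, each callable would wrap a prompt to an MLLM, and the image would be real pixels rather than a text label.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical agent signatures; in practice each would wrap an MLLM call.
VisualAgent = Callable[[str], str]               # image -> scene description
SafetyAgent = Callable[[str, str], bool]         # (query, scene) -> is_unsafe
ResponseAgent = Callable[[str, str, bool], str]  # (query, scene, is_unsafe) -> answer


@dataclass
class SituationalSafetyPipeline:
    """Sketch of splitting situational safety reasoning into subtasks."""
    see: VisualAgent
    judge: SafetyAgent
    respond: ResponseAgent

    def run(self, query: str, image: str) -> str:
        scene = self.see(image)                    # subtask 1: visual understanding
        unsafe = self.judge(query, scene)          # subtask 2: safety judgment
        return self.respond(query, scene, unsafe)  # subtask 3: final answer


def toy_see(image: str) -> str:
    # Stand-in for an MLLM captioner: here the "image" is already a text label.
    return f"The image shows {image}."


def toy_judge(query: str, scene: str) -> bool:
    # Stand-in for a safety judge: flags scenes that mention a hazard keyword.
    # This toy version ignores the query entirely.
    return any(word in scene for word in ("cliff", "stove", "traffic"))


def toy_respond(query: str, scene: str, unsafe: bool) -> str:
    # Stand-in for the answering agent: warn if judged unsafe, otherwise help.
    if unsafe:
        return "Warning: this setting looks dangerous for that activity."
    return f"Sure, here are some tips for: {query}"


pipeline = SituationalSafetyPipeline(toy_see, toy_judge, toy_respond)
print(pipeline.run("How can I practice running?", "a runner at the edge of a cliff"))
print(pipeline.run("How can I practice running?", "a quiet park track"))
```

Because each stage is a plain callable, any single agent, such as the safety judge, could be replaced by a stronger model without changing the rest of the pipeline.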

The study’s results emphasize that while the multi-agent approach shows promise, there is still much room for improvement. For example, even with a multi-agent system, MLLMs like mPLUG-Owl2 and DeepSeek failed to recognize unsafe scenarios in 32% of the test cases, indicating that future work needs to focus on enhancing these models’ visual-textual alignment and situational reasoning capabilities.

Key Takeaways from the research on the Multimodal Situational Safety benchmark:

Benchmark Creation: The Multimodal Situational Safety benchmark (MSSBench) includes 1,820 query-image pairs, evaluating MLLMs on various safety aspects.

Safety Categories: The benchmark assesses safety in four categories: physical harm, property damage, illegal activities, and context-based risks.

Model Performance: The best-performing model, Claude 3.5 Sonnet, achieved an average safety accuracy of only 62.2%, highlighting significant room for improvement.

Multi-Agent System: A multi-agent system that assigns specific subtasks to specialized agents improved safety performance, but issues such as visual misunderstanding persisted.

Future Directions: The study suggests that further development of MLLM safety mechanisms is necessary to achieve reliable situational awareness in complex, multimodal scenarios.

In conclusion, the research presents a new framework for evaluating the situational safety of MLLMs through the Multimodal Situational Safety benchmark. It reveals the critical gaps in current MLLM safety performance and proposes a multi-agent approach to address these challenges. The research demonstrates the importance of comprehensive safety evaluation in multimodal AI systems, especially as these models become more prevalent in real-world applications.

Check out the Paper, GitHub, and Project. All credit for this research goes to the researchers of this project.


The post Multimodal Situational Safety Benchmark (MSSBench): A Comprehensive Benchmark to Analyze How AI Models Evaluate Safety and Contextual Awareness Across Varied Real-World Situations appeared first on MarkTechPost.

