Multimodal foundation models such as GPT-4 and Gemini are effective tools for many applications because they can handle data formats beyond text, including images. However, they remain underutilized for analyzing large volumes of multidimensional time-series data, which is essential in fields like healthcare, finance, and the social sciences. Time-series data, meaning sequential measurements taken over time, is a rich source of information that current models do not fully exploit. This is a missed opportunity to extract deeper, more nuanced insights that could drive data-driven decision-making in these domains.

Recent research from Google AI proposes a simple solution to this challenge: use the vision encoders already present in multimodal models to “see” time-series data through plots. Instead of passing raw numerical sequences to the model as text, which frequently results in subpar performance, the method renders the time-series data as visual plots and feeds them to the model’s vision component. This requires no additional model training, which could be costly and time-consuming.
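As a rough illustration of the idea, the sketch below renders a numeric series as a line plot and packages the resulting PNG for a multimodal request. It assumes matplotlib is available; the function names `series_to_png` and `build_image_part` and the payload shape are hypothetical, chosen for illustration, and do not reflect the researchers' code or any specific model API.

```python
import base64
import io
import math

import matplotlib
matplotlib.use("Agg")  # headless backend, no display required
import matplotlib.pyplot as plt


def series_to_png(values, title="signal", dpi=100):
    """Render a 1-D series as a line plot and return the PNG bytes."""
    fig, ax = plt.subplots(figsize=(4, 3), dpi=dpi)
    ax.plot(range(len(values)), values, linewidth=1)
    ax.set_xlabel("time step")
    ax.set_ylabel("value")
    ax.set_title(title)
    buf = io.BytesIO()
    fig.savefig(buf, format="png")
    plt.close(fig)  # free the figure so repeated calls do not leak memory
    return buf.getvalue()


def build_image_part(png_bytes):
    """Hypothetical payload shape; real model APIs define their own format."""
    return {
        "mime_type": "image/png",
        "data": base64.b64encode(png_bytes).decode("ascii"),
    }


# Example: 500 samples of a sine wave become one image instead of 500 numbers.
values = [math.sin(0.1 * t) for t in range(500)]
png = series_to_png(values)
part = build_image_part(png)
```

The image part would then be sent alongside a text prompt (for example, a question about the trend in the plot) in place of the raw number sequence.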

Empirical evaluations in the research show that this visual technique is more effective than supplying raw time-series data in text format. A major additional benefit is the cost saving when using model APIs. Representing the data as plots requires far fewer tokens (the units of information the model processes) than a text sequence of the same data, resulting in up to a 90% decrease in model costs.

Where time-series data would normally take thousands of text tokens, a single plot can convey the same information with significantly fewer visual tokens, making the process both more efficient and more cost-effective.
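The token arithmetic can be sketched with a back-of-the-envelope estimate. Everything numeric here is an assumption made for illustration: the four-characters-per-token heuristic is not a real tokenizer, and `ASSUMED_IMAGE_TOKENS` is a placeholder, since the actual image token cost depends on the provider, model, and image size.

```python
ASSUMED_IMAGE_TOKENS = 258  # placeholder; check your provider's image pricing


def estimate_text_tokens(values, decimals=3, chars_per_token=4):
    """Rough token count for a comma-separated numeric sequence.

    chars_per_token is a heuristic assumption, not a real tokenizer.
    """
    text = ",".join(f"{v:.{decimals}f}" for v in values)
    return -(-len(text) // chars_per_token)  # ceiling division


def relative_saving(text_tokens, image_tokens):
    """Fraction of tokens saved by sending one image instead of the text."""
    return 1 - image_tokens / text_tokens


values = [0.5] * 1000  # 1000 readings, each formatted as "0.500"
text_tokens = estimate_text_tokens(values)
saving = relative_saving(text_tokens, ASSUMED_IMAGE_TOKENS)
print(f"text tokens: {text_tokens}, estimated saving: {saving:.1%}")
```

Under these assumptions the saving grows with the length of the series, because the text cost scales with the number of readings while the image cost stays roughly fixed.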

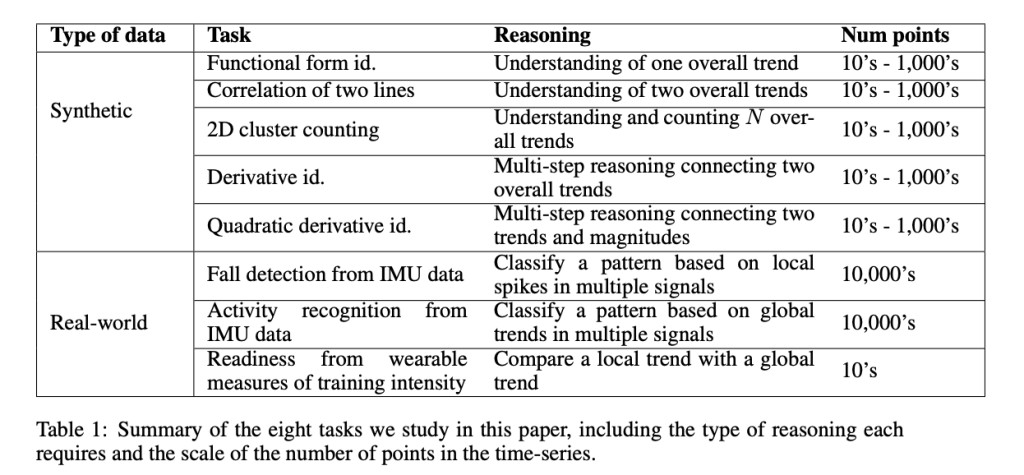

Experiments on synthetic data were used to validate the hypothesis that plotting time-series data improves model performance. They began with simple tasks, such as identifying the functional form of clean data, and progressed to harder ones, such as extracting meaningful trends from noisy scatter plots. The model’s performance in these controlled studies demonstrated the robustness of the technique.
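A synthetic benchmark of this kind could be generated along the following lines. This is a minimal sketch: the particular functional forms, the noise model, and the name `make_series` are assumptions for illustration, not the set used in the research.

```python
import math
import random

# Illustrative functional forms; the research's exact set is not given here.
FUNCTIONAL_FORMS = {
    "linear": lambda x: 2.0 * x + 1.0,
    "quadratic": lambda x: x * x,
    "sine": lambda x: math.sin(2 * math.pi * x),
    "exponential": lambda x: math.exp(x),
}


def make_series(form, n=100, noise_std=0.0, seed=0):
    """Sample a functional form on [0, 1], optionally adding Gaussian noise."""
    rng = random.Random(seed)  # seeded for reproducibility
    f = FUNCTIONAL_FORMS[form]
    xs = [i / (n - 1) for i in range(n)]
    ys = [f(x) + rng.gauss(0.0, noise_std) for x in xs]
    return xs, ys


# Clean data for the "identify the functional form" task.
clean_xs, clean_ys = make_series("linear", n=5)

# Noisy data for the harder "find the trend in a scatter plot" task.
noisy_xs, noisy_ys = make_series("linear", n=5, noise_std=0.3, seed=1)
```

Each generated series would be plotted and shown to the model, with the key in `FUNCTIONAL_FORMS` serving as the ground-truth label.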

To further verify that the approach generalizes beyond synthetic data, the researchers applied it to real-world consumer health tasks such as fall detection, activity recognition, and preparedness evaluation. To reach the right conclusions on these tasks, the model must perform multi-step reasoning over heterogeneous and noisy data. Even on these demanding tasks, the visual plot-based strategy continued to outperform the text-based one.

The results showed that visual representations of time-series data significantly improved performance on both synthetic and real-world tasks. On zero-shot synthetic tasks, in which the model is given no examples beforehand, performance increased by up to 120%. The improvement was even larger on real-world tasks such as activity recognition and fall detection, with up to a 150% performance increase over using raw text data.

In conclusion, these results demonstrate that complex time-series data can be handled by leveraging the native visual capabilities of multimodal models such as GPT and Gemini. Representing the data as plots both lowers costs and improves performance, making it a practical and scalable option for a variety of applications. This approach opens new ways to apply foundation models in fields where time-series data is essential, enabling more effective and efficient data-driven insights.

Check out the Paper. All credit for this research goes to the researchers of this project.


The post Enhancing Time-Series Analysis in Multimodal Models through Visual Representations for Richer Insights and Cost Efficiency appeared first on MarkTechPost.
