Recent Large Language Models (LLMs) perform well on sophisticated reasoning tasks such as coding, language comprehension, and mathematical problem-solving. Much less is known about how well these models plan, especially when a goal must be reached through a sequence of interdependent actions. Planning is harder for LLMs because it requires them to understand constraints, manage sequential decisions, operate in dynamic contexts, and keep track of previous actions.

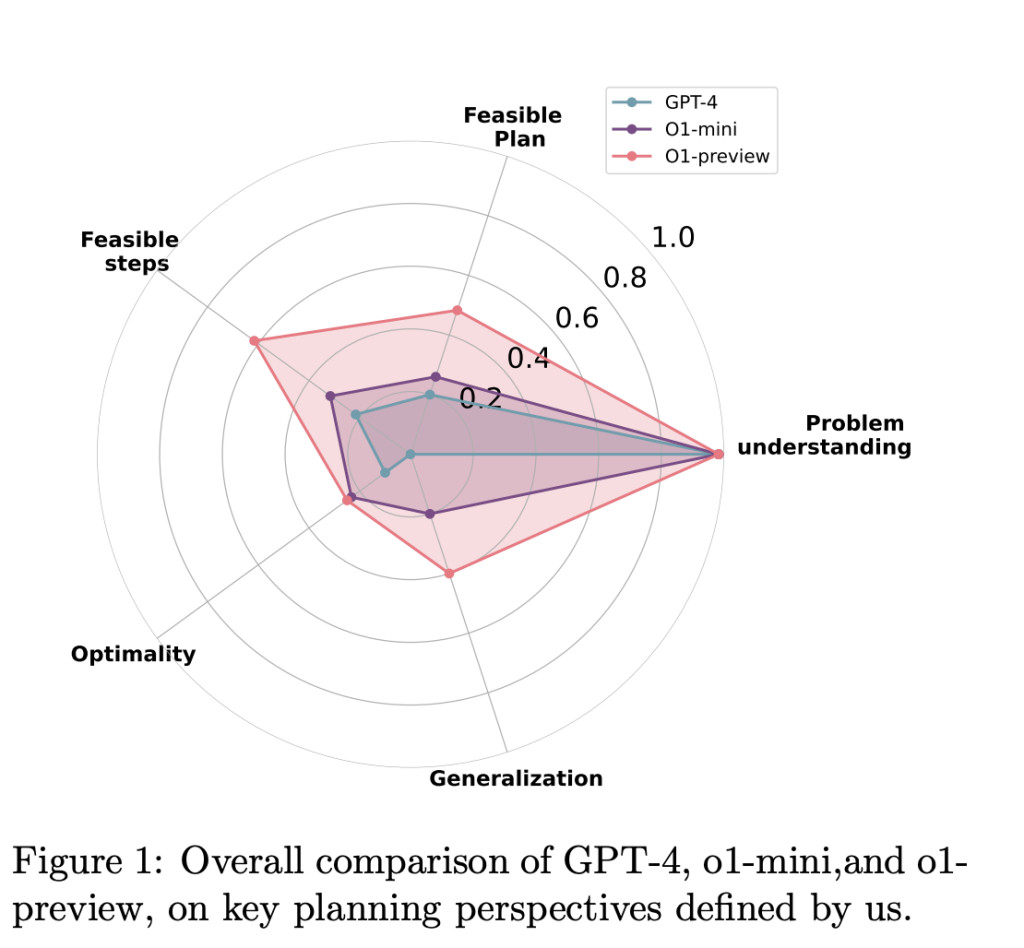

In recent research, a team from the University of Texas at Austin assessed the planning capabilities of OpenAI’s o1 model, a recent addition to the LLM field designed with improved reasoning capabilities. Using a variety of benchmark tasks, the study evaluated the model along three primary dimensions: feasibility, optimality, and generalizability.

Feasibility refers to the model’s ability to produce a plan that can actually be executed and that complies with the requirements and constraints of the task. For instance, tasks in environments like Barman and Tyreworld are heavily constrained: resources must be used and actions performed in a specified order, and a plan that violates these rules fails. In this regard, the o1-preview model showed notable strengths, especially in its capacity to self-evaluate its plans and adhere to task-specific constraints. This self-evaluation raises its likelihood of success by letting it determine more accurately whether the steps it generates comply with the task’s requirements.
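To make the notion of feasibility concrete, here is a minimal sketch of a plan checker for STRIPS-style actions. It is an illustration only, not the evaluation code used in the study: the `Action` class, the `check_feasibility` function, and the three wheel-changing actions are hypothetical and only loosely inspired by Tyreworld.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Sequence, Tuple


@dataclass(frozen=True)
class Action:
    """Hypothetical STRIPS-style action: applicable only if all preconditions hold."""
    name: str
    preconditions: FrozenSet[str]
    add_effects: FrozenSet[str]
    delete_effects: FrozenSet[str]


def check_feasibility(
    initial: FrozenSet[str], goal: FrozenSet[str], plan: Sequence[Action]
) -> Tuple[bool, Optional[int]]:
    """Simulate the plan; return (True, None) or (False, index of the failing step).

    If every step applies but the goal is not reached, the failing index
    is len(plan).
    """
    state = set(initial)
    for step, action in enumerate(plan):
        if not action.preconditions <= state:
            return False, step  # a precondition is violated at this step
        state -= action.delete_effects
        state |= action.add_effects
    if not goal <= state:
        return False, len(plan)
    return True, None


# Toy domain (an assumption for illustration, not the benchmark definition).
loosen = Action("loosen-nuts", frozenset({"wheel-on-ground", "nuts-tight"}),
                frozenset({"nuts-loose"}), frozenset({"nuts-tight"}))
jack_up = Action("jack-up", frozenset({"wheel-on-ground"}),
                 frozenset({"wheel-raised"}), frozenset({"wheel-on-ground"}))
remove = Action("remove-wheel", frozenset({"wheel-raised", "nuts-loose"}),
                frozenset({"wheel-removed"}), frozenset())

initial = frozenset({"wheel-on-ground", "nuts-tight"})
goal = frozenset({"wheel-removed"})

good_result = check_feasibility(initial, goal, [loosen, jack_up, remove])
bad_result = check_feasibility(initial, goal, [jack_up, loosen, remove])
print(good_result)  # (True, None)
print(bad_result)   # (False, 1): the nuts cannot be loosened once the wheel is raised
```

The second plan uses the same three actions in a different order and fails, which is the kind of ordering constraint the article describes.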

While producing workable plans is a vital first step, optimality, or how efficiently the model completes the task, is also essential. In many real-world scenarios, simply finding a solution is not enough: the solution must also be efficient in time, resources, and number of steps. The study found that although the o1-preview model outperformed GPT-4 in following constraints, it frequently produced suboptimal plans, often including unnecessary or redundant actions that made its solutions inefficient.

For example, in environments like Floortile and Grippers, which demand strong spatial reasoning and task sequencing, the model’s plans were workable but contained needless repetitions that a more optimized approach would have avoided.
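One simple way to quantify this kind of suboptimality is to compare the length of a feasible plan with the length of a shortest plan found by breadth-first search. The sketch below does this on a tiny, hypothetical gripper-like world (one robot, one ball, three rooms in a row). The domain, the function names, and the example plan are assumptions made for illustration and are not taken from the paper.

```python
from collections import deque
from typing import Callable, Iterator, Optional, Sequence, Tuple

State = Tuple[int, int, bool]  # (robot room, ball room, robot is holding the ball)
N_ROOMS = 3  # hypothetical toy map: rooms 0, 1, 2 in a row


def successors(state: State) -> Iterator[Tuple[str, State]]:
    """Yield (action name, next state) for every action applicable in `state`."""
    robot, ball, holding = state
    for name, delta in (("left", -1), ("right", 1)):
        new_robot = robot + delta
        if 0 <= new_robot < N_ROOMS:
            # A held ball moves together with the robot.
            yield name, (new_robot, new_robot if holding else ball, holding)
    if not holding and robot == ball:
        yield "pick", (robot, ball, True)
    if holding:
        yield "drop", (robot, ball, False)


def shortest_plan_length(start: State, is_goal: Callable[[State], bool]) -> Optional[int]:
    """Breadth-first search; returns the minimum number of actions, or None."""
    frontier = deque([(start, 0)])
    seen = {start}
    while frontier:
        state, depth = frontier.popleft()
        if is_goal(state):
            return depth
        for _, nxt in successors(state):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, depth + 1))
    return None


def execute(start: State, plan: Sequence[str]) -> Optional[State]:
    """Apply the plan step by step; return None if some action is not applicable."""
    state = start
    for action in plan:
        options = dict(successors(state))
        if action not in options:
            return None
        state = options[action]
    return state


def optimality_gap(start: State, is_goal: Callable[[State], bool], plan: Sequence[str]) -> int:
    """Number of extra actions in a feasible plan compared with a shortest plan."""
    final = execute(start, plan)
    if final is None or not is_goal(final):
        raise ValueError("plan is not feasible, so its optimality gap is undefined")
    optimal = shortest_plan_length(start, is_goal)
    assert optimal is not None  # a feasible plan exists, so BFS must find one
    return len(plan) - optimal


start: State = (0, 0, False)


def ball_delivered(state: State) -> bool:
    """Goal: the ball lies in room 2 and the robot has let go of it."""
    return state[1] == 2 and not state[2]


# A feasible but wasteful plan: the robot steps right, back left, then right again.
wasteful_plan = ["pick", "right", "left", "right", "right", "drop"]
gap = optimality_gap(start, ball_delivered, wasteful_plan)
print(gap)  # 2
```

Exhaustive search like this only scales to very small state spaces; for realistic benchmark instances, an optimal planner or a known reference solution would be needed to supply the baseline length.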

Generalization is a model’s ability to apply learned planning strategies to novel or unfamiliar problems for which it has not received explicit training. This is crucial in real-world applications, where tasks are frequently dynamic and call for flexible, adaptive planning. The o1-preview model had trouble generalizing in spatially complex environments like Termes, where tasks involve managing 3D spaces or many interacting objects. It could maintain structure in more familiar tasks, but its performance declined sharply in new, spatially dynamic ones.

The study’s findings highlight both the strengths and the weaknesses of the o1-preview model in planning. On the one hand, its advantages over GPT-4 are evident in its capacity to adhere to constraints, track state transitions, and assess the feasibility of its own plans. This makes it more dependable in structured settings where following rules is essential. On the other hand, the model still has substantial limitations in decision-making and memory management. In particular, on tasks requiring strong spatial reasoning, the o1-preview model often produces suboptimal plans and has difficulty generalizing to unfamiliar environments.

This pilot study lays the groundwork for future research aimed at overcoming these limitations of LLMs in planning tasks. The key areas for improvement are as follows.

Memory Management: Reducing the number of unnecessary steps and increasing work efficiency could be achieved by improving the model’s capacity to remember and make effective use of previous activities.

Decision-Making: More work is required to improve the sequential decisions made by LLMs, making sure that each action advances the model towards the objective in the best possible way.

Generalization: Improving abstract reasoning and generalization methods could improve LLM performance in novel situations, especially those involving symbolic reasoning or spatial complexity.
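As a rough illustration of how remembering previously visited states can cut unnecessary steps, the sketch below removes loops from a plan: whenever an action leads back to a state that was already visited, the detour in between is dropped. This is a generic post-processing idea shown under simplifying assumptions, not a technique proposed in the study. The function `prune_revisited_states` and the one-dimensional `move` domain are hypothetical.

```python
from typing import Callable, Hashable, List, Sequence, TypeVar

S = TypeVar("S", bound=Hashable)


def prune_revisited_states(
    start: S, plan: Sequence[str], apply: Callable[[S, str], S]
) -> List[str]:
    """Drop every sub-sequence of actions that returns to an already visited state.

    Assumes `apply` is deterministic and that every action in `plan` is applicable.
    """
    states = [start]       # states[i] is the state after the first i kept actions
    kept: List[str] = []
    for action in plan:
        nxt = apply(states[-1], action)
        if nxt in states:
            # We have been here before: discard the loop that led back.
            idx = states.index(nxt)
            del states[idx + 1:]
            del kept[idx:]
        else:
            states.append(nxt)
            kept.append(action)
    return kept


def move(position: int, action: str) -> int:
    """Hypothetical toy domain: an agent walking along a line of cells."""
    return position + (1 if action == "right" else -1)


pruned = prune_revisited_states(0, ["right", "left", "right", "right"], move)
print(pruned)  # ['right', 'right']
```

The pruned plan reaches the same final state as the original one. It contains no loops, but it is not guaranteed to be the shortest possible plan.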

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Evaluating the Planning Capabilities of Large Language Models: Feasibility, Optimality, and Generalizability in OpenAI’s o1 Model appeared first on MarkTechPost.
