Large language models (LLMs) have revolutionized natural language processing and artificial intelligence, enabling a variety of downstream tasks. However, most advanced models focus predominantly on English and a limited set of high-resource languages, leaving many European languages underrepresented. This lack of linguistic diversity creates significant barriers for non-English speakers and limits their access to AI technologies. To address this problem, a team of researchers from Unbabel, Instituto de Telecomunicações, Instituto Superior Técnico, Carnegie Mellon University, MICS, CentraleSupelec, Université Paris-Saclay, Illuin Technology, University of Edinburgh, Equall, and Aveni introduced the EuroLLM project, which aims to develop multilingual language models capable of understanding and generating text in all official European Union languages, as well as other relevant languages such as Arabic, Chinese, and Russian.

The EuroLLM project seeks to create LLMs that support all European Union languages, thereby bridging the gap left by predominantly English-focused open-weight LLMs. The project has developed two initial models: EuroLLM-1.7B and EuroLLM-1.7B-Instruct, which have shown promising results on multilingual benchmarks and machine translation tasks. This summary provides an overview of the EuroLLM project, detailing its data collection and filtering process, the development of a multilingual tokenizer, the model configurations, and evaluation results of its initial models.

Data Collection and Filtering

The EuroLLM models were trained on a diverse dataset collected from multiple sources to support all targeted languages. The final corpus was divided into four categories: web data, parallel data, code/math data, and high-quality data. The data collection process included deduplication, language identification, perplexity filtering, and heuristic filtering to ensure quality. For example, English web data was sourced from the FineWeb-edu dataset, while other high-resource languages utilized data from RedPajama-Data-v2. Additionally, parallel data was collected to improve alignment between languages and enhance the model’s machine translation capabilities.
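The filtering stages named above can be sketched as a small pipeline. This is only an illustration under stated assumptions, not the project's actual implementation: `lang_id` and `perplexity` are hypothetical callables standing in for a real language identifier and a language-model scorer, and the thresholds in `passes_heuristics` are placeholder values.

```python
import hashlib
from typing import Callable, Iterable, Iterator


def dedup_exact(docs: Iterable[str]) -> Iterator[str]:
    """Drop documents whose whitespace-normalized, lowercased text was already seen."""
    seen = set()
    for doc in docs:
        normalized = " ".join(doc.split()).lower()
        key = hashlib.sha1(normalized.encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            yield doc


def passes_heuristics(doc: str, min_words: int = 5,
                      max_symbol_ratio: float = 0.3) -> bool:
    """Placeholder heuristics: reject very short or symbol-heavy documents."""
    if len(doc.split()) < min_words:
        return False
    symbols = sum(1 for ch in doc if not (ch.isalnum() or ch.isspace()))
    return symbols / len(doc) <= max_symbol_ratio


def filter_corpus(docs: Iterable[str],
                  lang_id: Callable[[str], str],
                  perplexity: Callable[[str], float],
                  target_lang: str,
                  max_perplexity: float) -> Iterator[str]:
    """Apply deduplication, language ID, perplexity, and heuristic filters in turn."""
    for doc in dedup_exact(docs):
        if lang_id(doc) != target_lang:
            continue  # wrong language (hypothetical classifier)
        if perplexity(doc) > max_perplexity:
            continue  # text the scoring model finds too unlikely
        if not passes_heuristics(doc):
            continue
        yield doc
```

In practice the two callables would wrap trained models; keeping them as parameters makes the ordering of the stages easy to test with stubs.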

Data Mixture

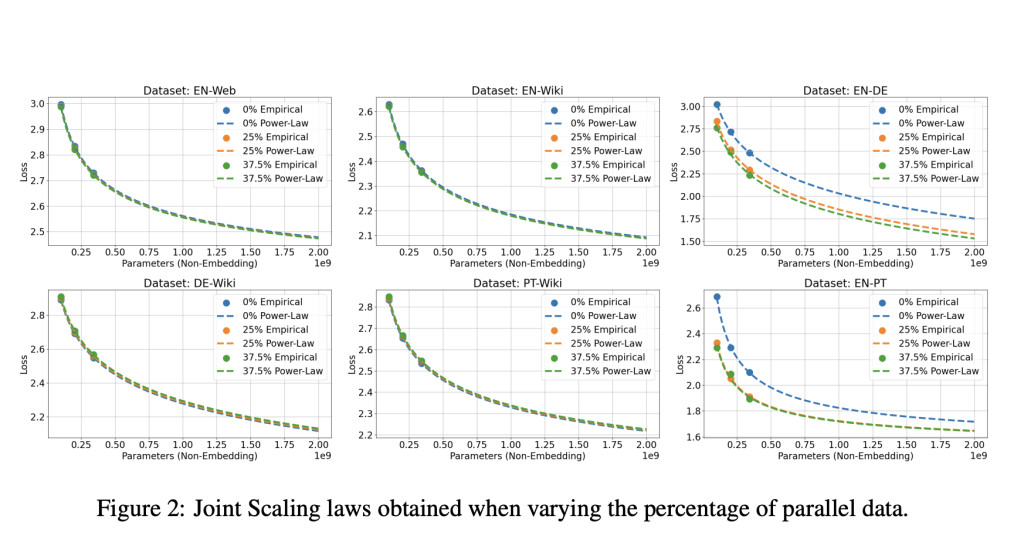

The training corpus was carefully curated to balance data from different languages and domains. English was allocated 50% of the total tokens in the initial training phase, with the remaining tokens distributed among other languages and code/math data. During the annealing phase, the proportion of English data was reduced to 32.5% to increase the model’s multilingual capabilities. The data mixture also included a significant amount of parallel data, which was set at 20% for each language, based on findings that it improved cross-language alignment without negatively impacting other domains.
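To make the rebalancing concrete, the following sketch fixes the English share and spreads the remaining token budget proportionally over the other categories. Only the 50% and 32.5% English shares come from the text above; the category names and relative weights in the usage example are hypothetical placeholders, not the project's real mixture.

```python
from typing import Dict


def build_mixture(english_share: float,
                  other_weights: Dict[str, float]) -> Dict[str, float]:
    """Return sampling proportions that sum to 1, with English fixed at english_share."""
    if not 0.0 <= english_share <= 1.0:
        raise ValueError("english_share must be between 0 and 1")
    total_weight = sum(other_weights.values())
    if total_weight <= 0:
        raise ValueError("other_weights must have a positive total")
    remaining = 1.0 - english_share
    mixture = {"en": english_share}
    for name, weight in other_weights.items():
        # Each non-English category gets a share proportional to its weight.
        mixture[name] = remaining * weight / total_weight
    return mixture


# Hypothetical relative weights, for illustration only.
weights = {"de": 2.0, "fr": 2.0, "code_math": 1.0}
initial_phase = build_mixture(0.50, weights)     # English share from the text
annealing_phase = build_mixture(0.325, weights)  # English share from the text
```

Lowering the English share from 0.50 to 0.325 raises every other category's proportion by the same factor, which is the effect the annealing phase is described as targeting.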

Tokenizer

The EuroLLM project developed a multilingual tokenizer with a vocabulary of 128,000 pieces, using the SentencePiece framework. The larger vocabulary allows the model to handle multiple languages efficiently, reducing fertility (the average number of pieces per word) compared to the tokenizers of Mistral and Llama 3. This tokenizer was essential for enabling effective multilingual support across a wide range of languages.
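Fertility is simple to measure for any tokenizer. The sketch below assumes words are whitespace-separated and uses a toy fixed-length chunker (`toy_chunk_tokenizer`, a hypothetical stand-in, not SentencePiece) so that it runs without external dependencies; any function that maps a string to a list of pieces could be passed instead.

```python
from typing import Callable, Iterable, List


def fertility(texts: Iterable[str],
              tokenize: Callable[[str], List[str]]) -> float:
    """Average number of tokenizer pieces per whitespace-separated word."""
    total_pieces = 0
    total_words = 0
    for text in texts:
        total_words += len(text.split())
        total_pieces += len(tokenize(text))
    if total_words == 0:
        raise ValueError("no words found in texts")
    return total_pieces / total_words


def toy_chunk_tokenizer(text: str, max_len: int = 4) -> List[str]:
    """Toy tokenizer: split every word into chunks of at most max_len characters."""
    pieces: List[str] = []
    for word in text.split():
        pieces.extend(word[i:i + max_len] for i in range(0, len(word), max_len))
    return pieces


sample = ["multilingual tokenizer"]
short_pieces = fertility(sample, toy_chunk_tokenizer)
long_pieces = fertility(sample, lambda t: toy_chunk_tokenizer(t, max_len=8))
```

Here the tokenizer with longer pieces yields the lower fertility, which loosely mirrors why a larger vocabulary (with longer, more language-specific pieces) tends to need fewer pieces per word.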

Model Configuration

EuroLLM-1.7B uses a standard dense Transformer architecture with several modifications to enhance performance. The model features grouped query attention (GQA) for increased inference speed, pre-layer normalization for improved training stability, and the SwiGLU activation function for better downstream results. The model was pre-trained on 4 trillion tokens using 256 Nvidia H100 GPUs, with a trapezoid learning rate scheduler: a warm-up phase, a constant plateau, and a final linear decay. This trapezoid scheduler was found to outperform a cosine scheduler on multilingual benchmarks and machine translation tasks.
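A trapezoid schedule can be written as a short function of the training step. This is a minimal sketch: the warm-up fraction, decay fraction, and final learning rate ratio are assumed placeholder defaults, since the text above does not state the values used for EuroLLM.

```python
def trapezoid_lr(step: int, total_steps: int, peak_lr: float,
                 warmup_frac: float = 0.1, decay_frac: float = 0.1,
                 final_ratio: float = 0.1) -> float:
    """Learning rate at `step`: linear warm-up, constant plateau, linear decay.

    warmup_frac, decay_frac, and final_ratio are illustrative assumptions.
    """
    if total_steps <= 0 or not 0 <= step <= total_steps:
        raise ValueError("step must lie in [0, total_steps]")
    warmup_steps = round(total_steps * warmup_frac)
    decay_steps = round(total_steps * decay_frac)
    decay_start = total_steps - decay_steps

    if step < warmup_steps:
        # Ramp linearly from 0 up to peak_lr.
        return peak_lr * step / warmup_steps
    if step < decay_start or decay_steps == 0:
        # Flat top of the trapezoid.
        return peak_lr
    # Decay linearly from peak_lr down to final_ratio * peak_lr.
    progress = (step - decay_start) / decay_steps
    return peak_lr * (1.0 - (1.0 - final_ratio) * progress)
```

Unlike a cosine schedule, the learning rate stays at its peak for most of training, and the decay is confined to the final phase.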

Post-Training and Fine-Tuning

To enable EuroLLM-1.7B to follow natural language instructions, the model was fine-tuned on the EuroBlocks dataset, which included human-written and synthetic data covering a wide range of languages and tasks. The resulting model, EuroLLM-1.7B-Instruct, was trained using supervised fine-tuning with cross-entropy loss, enabling it to become an instruction-following conversational model.
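The cross-entropy objective used in supervised fine-tuning can be illustrated in plain Python. This sketch averages the per-token loss over selected positions; the `loss_mask` argument (for example, to score only response tokens and skip the prompt) is an assumption made for illustration, as the text above does not say which tokens contribute to the loss.

```python
import math
from typing import Sequence


def token_cross_entropy(logits: Sequence[float], target: int) -> float:
    """Negative log-probability of `target` under softmax(logits)."""
    max_logit = max(logits)  # subtract the max for numerical stability
    log_sum_exp = max_logit + math.log(sum(math.exp(x - max_logit) for x in logits))
    return log_sum_exp - logits[target]


def sft_loss(logits_per_position: Sequence[Sequence[float]],
             targets: Sequence[int],
             loss_mask: Sequence[int]) -> float:
    """Mean cross-entropy over positions where loss_mask is nonzero."""
    total = 0.0
    count = 0
    for logits, target, keep in zip(logits_per_position, targets, loss_mask):
        if keep:
            total += token_cross_entropy(logits, target)
            count += 1
    if count == 0:
        raise ValueError("loss_mask selects no positions")
    return total / count
```

With uniform logits over a vocabulary of size V, the loss at each position equals log V, which is a useful sanity check when wiring up a training loop.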

Results

The EuroLLM models were evaluated on general benchmarks and machine translation tasks. On commonsense inference (HellaSwag) and science exam questions (ARC Challenge), EuroLLM-1.7B matched or outperformed comparable models such as Gemma-2b and TinyLlama for most languages. For machine translation, EuroLLM-1.7B-Instruct outperformed Gemma-2b and was competitive with Gemma-7b, despite having fewer parameters. These results indicate that the EuroLLM models are effective at both understanding and generating text across multiple languages.

Conclusion and Future Work

The EuroLLM project has successfully developed multilingual language models that support all European Union languages, addressing the need for inclusive LLMs beyond English. Future work will focus on scaling up the number of model parameters and further improving data quality to enhance the performance of multilingual LLMs for Europe.

Check out the Paper and Model on HF. All credit for this research goes to the researchers of this project.

The post EuroLLM Released: A Suite of Open-Weight Multilingual Language Models (EuroLLM-1.7B and EuroLLM-1.7B-Instruct) Capable of Understanding and Generating Text in All Official European Union languages appeared first on MarkTechPost.
