Recurrent neural networks (RNNs) have been foundational in machine learning for addressing various sequence-based problems, including time series forecasting and natural language processing. RNNs are designed to handle sequences of varying lengths by maintaining an internal state that captures information across time steps. However, these models often struggle with vanishing and exploding gradient issues, which reduce their effectiveness for longer sequences. To address this limitation, various architectural advancements have been developed over the years, enhancing the ability of RNNs to capture long-term dependencies and perform more complex sequence-based tasks.

A significant challenge in sequence modeling is the computational inefficiency of existing models, particularly for long sequences. Transformers have emerged as a dominant architecture, achieving state-of-the-art results in numerous applications such as language modeling and translation. However, their cost grows quadratically with sequence length, which makes them resource-intensive and impractical for many applications with long sequences or limited computational resources. This has led to renewed interest in models that balance performance and efficiency and scale without compromising accuracy.
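To make the quadratic-versus-linear contrast concrete, here is a rough back-of-the-envelope sketch. It only counts the entries of a single attention score matrix against the number of state updates in a recurrent model; the function names are illustrative, and real costs also depend on model width, number of layers, and hardware.

```python
def attention_score_entries(seq_len: int) -> int:
    """Entries in the seq_len x seq_len attention score matrix (one head, one layer)."""
    return seq_len * seq_len


def recurrent_state_updates(seq_len: int) -> int:
    """State updates a recurrent model performs: one per time step."""
    return seq_len


for n in (512, 4096):
    print(n, attention_score_entries(n), recurrent_state_updates(n))
```

Going from 512 to 4096 tokens is an 8x increase in length, which multiplies the number of state updates by 8 but the number of attention score entries by 64.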

Several methods have been proposed to tackle this problem. State-space models like Mamba use input-dependent transitions to manage sequences efficiently, while linear attention models reduce the computation required for longer sequences. Despite achieving performance comparable to transformers, these methods often involve complex algorithms and require specialized techniques for efficient implementation. Other approaches, such as the attention-based Aaren and the state-space model S4, have introduced innovative strategies to address these inefficiencies, but they still face limitations, such as increased memory usage and complexity in implementation.

Researchers at Borealis AI and Mila, Université de Montréal, have reexamined traditional RNN architectures, specifically the Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) models. They introduced simplified, minimal versions of these models, named minLSTM and minGRU, to address the scalability issues faced by their traditional counterparts. In these versions, the gates no longer depend on the previous hidden state, so the models do not require backpropagation through time (BPTT) and can be trained in parallel, significantly improving efficiency. This change enables the minimal RNNs to handle longer sequences at reduced computational cost, making them competitive with the latest sequence models.
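A minimal sketch of why this enables parallel training, assuming the minGRU update has the form h_t = (1 - z_t) * h_{t-1} + z_t * h̃_t, where both the gate z_t and the candidate h̃_t are computed from the input x_t alone. Every coefficient can then be computed up front, and the recurrence becomes a linear one, h_t = a_t * h_{t-1} + b_t, that prefix products and prefix sums can solve. The code follows a single hidden unit, omits biases, and uses a plain closed form for readability; it is not the authors' implementation, and a numerically robust version would work in log space. All function and variable names are illustrative.

```python
import math
import operator
import random
from itertools import accumulate


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def gates(x, w_z, w_h):
    """Per-step coefficients for one hidden unit: a_t = 1 - z_t, b_t = z_t * h_tilde_t.

    Both depend only on the input x_t, never on the previous hidden state.
    """
    a, b = [], []
    for x_t in x:
        z_t = sigmoid(sum(w * v for w, v in zip(w_z, x_t)))  # update gate
        h_tilde = sum(w * v for w, v in zip(w_h, x_t))       # candidate state
        a.append(1.0 - z_t)
        b.append(z_t * h_tilde)
    return a, b


def min_gru_sequential(a, b, h0):
    """Reference loop: h_t = a_t * h_{t-1} + b_t, one step at a time."""
    h, out = h0, []
    for a_t, b_t in zip(a, b):
        h = a_t * h + b_t
        out.append(h)
    return out


def min_gru_closed_form(a, b, h0):
    """Same values from prefix products and prefix sums (scan-friendly form).

    With A_t = a_1 * ... * a_t, h_t = A_t * (h0 + sum_{k<=t} b_k / A_k).
    """
    A = list(accumulate(a, operator.mul))
    S = list(accumulate(b_t / A_t for b_t, A_t in zip(b, A)))
    return [A_t * (h0 + S_t) for A_t, S_t in zip(A, S)]


random.seed(0)
x = [[random.gauss(0.0, 1.0) for _ in range(3)] for _ in range(12)]
a, b = gates(x, w_z=[0.5, -0.3, 0.2], w_h=[0.1, 0.4, -0.2])
seq = min_gru_sequential(a, b, h0=0.0)
par = min_gru_closed_form(a, b, h0=0.0)
print(max(abs(s - p) for s, p in zip(seq, par)))  # difference between the two forms
```

In a standard GRU the gate at step t needs h_{t-1}, so the coefficients a_t and b_t cannot be computed before the previous step has finished, and this rewriting is not available.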

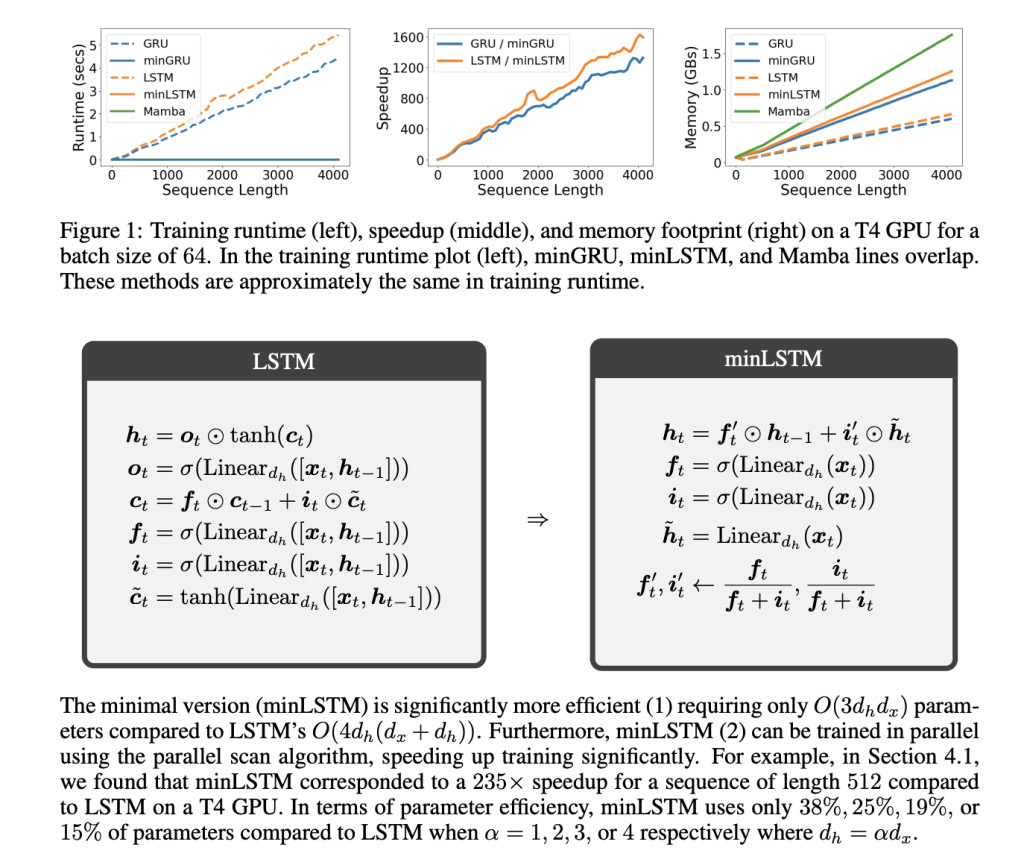

The proposed minimal LSTM and GRU models eliminate various gating mechanisms that are computationally expensive and unnecessary for many sequence tasks. By simplifying the architecture and ensuring the outputs are time-independent in scale, the researchers were able to create models that use substantially fewer parameters than traditional RNNs: counting weight matrices only, a minGRU layer needs about 2·d_h·d_x parameters versus about 3·d_h·(d_x + d_h) for a GRU, roughly one third as many when the hidden size d_h equals the input size d_x. Further, the modified architecture allows for parallel training, making these minimal models up to 175 times faster than standard LSTMs and GRUs when handling sequences of length 512. This improvement in training speed is crucial for scaling up the models for real-world applications that require handling long sequences, such as text generation and language modeling.
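One way to read "time-independent in scale" is sketched below, assuming the minLSTM update normalizes the forget and input gates so that they sum to one. Under that assumption each new state is a weighted average of the previous state and the candidate, so its magnitude does not drift with the number of steps. The code follows a single hidden unit, takes the gate pre-activations and candidate as given numbers, and uses illustrative names; it is a sketch under these assumptions rather than the authors' code.

```python
import math


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def min_lstm_step(h_prev: float, f_pre: float, i_pre: float, h_tilde: float) -> float:
    """One minLSTM-style update for a single hidden unit.

    f_pre and i_pre are gate pre-activations computed from the input only
    (assumed here to be given); h_tilde is the candidate state.
    """
    f = sigmoid(f_pre)          # forget gate
    i = sigmoid(i_pre)          # input gate
    f_norm = f / (f + i)        # normalized gates: f_norm + i_norm == 1
    i_norm = i / (f + i)
    return f_norm * h_prev + i_norm * h_tilde


# With equal gate pre-activations the update is a plain average of old and new.
print(min_lstm_step(h_prev=1.0, f_pre=0.0, i_pre=0.0, h_tilde=3.0))
```

Because the normalized gates are positive and sum to one, the result always lies between the previous state and the candidate.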

The minimal RNNs demonstrated substantial gains in training time and efficiency. For example, on a T4 GPU, the minGRU model achieved a 175x speedup in training time compared to the traditional GRU for a sequence length of 512, while minLSTM showed a 235x improvement over the traditional LSTM. For longer sequences of length 4096, the speedup was more pronounced still, with minGRU and minLSTM achieving speedups of 1324x and 1361x, respectively. These improvements make the minimal RNNs highly suitable for applications requiring fast and efficient training. The models also performed competitively with modern architectures like Mamba in empirical tests, showing that the simplified RNNs can achieve similar or even superior results with much lower computational overhead.

The researchers further tested the minimal models on reinforcement learning tasks and language modeling. In the reinforcement learning experiments, the minimal models outperformed existing methods such as Decision S4 and performed comparably with Mamba and Decision Transformer. For example, on the Hopper-Medium dataset, the minLSTM model achieved a performance score of 85.0, while the minGRU scored 79.4, indicating strong results across varying levels of data quality. Similarly, in language modeling tasks, minGRU and minLSTM achieved cross-entropy losses comparable to transformer-based models, with minGRU reaching a loss of 1.548 and minLSTM achieving a loss of 1.555 on the Shakespeare dataset. These results highlight the efficiency and robustness of the minimal models in diverse sequence-based applications.

In conclusion, the research team’s introduction of minimal LSTMs and GRUs addresses the computational inefficiencies of traditional RNNs while maintaining strong empirical performance. By simplifying the models and leveraging parallel training, the minimal versions offer a viable alternative to more complex modern architectures. The findings suggest that with some modifications, traditional RNNs can still be effective for long sequence modeling tasks, making these minimal models a promising solution for future research and applications in the field.


The post Revisiting Recurrent Neural Networks RNNs: Minimal LSTMs and GRUs for Efficient Parallel Training appeared first on MarkTechPost.
