The rapid advancement of generative AI has made image manipulation easier and tampered content harder to detect. Current Image Forgery Detection and Localization (IFDL) methods are effective but face two key challenges: their detection principles are a black box, and they generalize poorly across tampering methods such as Photoshop, DeepFake, and AIGC-Editing. Powerful image editing models have further blurred the line between real and fake content, raising risks such as misinformation and legal issues. To address these challenges, researchers are exploring Multimodal Large Language Models (M-LLMs) for more explainable IFDL, with the aim of clearer identification and localization of manipulated regions.

Current IFDL methods often focus on specific tampering types, while universal techniques aim to detect a wider range of manipulations by identifying image artifacts and irregularities. Models like MVSS-Net and HiFi-Net employ multi-scale feature learning and multi-branch modules to improve detection accuracy. Although these methods achieve satisfactory performance, they lack explainability and struggle to generalize across different datasets. Meanwhile, LLMs have demonstrated strong text-generation and visual understanding abilities. Recent studies have integrated LLMs with image encoders, but their use for universal tamper detection and localization remains largely unexplored.

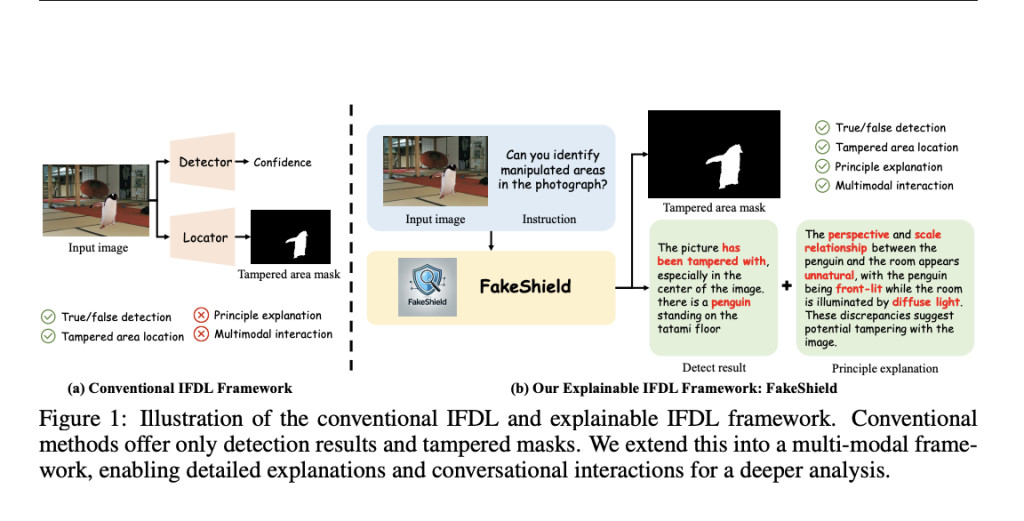

Researchers from Peking University and the South China University of Technology introduced FakeShield, an explainable Image Forgery Detection and Localization (e-IFDL) framework. FakeShield evaluates image authenticity, generates tampered region masks, and explains its verdict using pixel-level and image-level tampering clues. The researchers enhanced existing datasets using GPT-4o to create the Multi-Modal Tamper Description Dataset (MMTD-Set) for training. Additionally, they developed the Domain Tag-guided Explainable Forgery Detection Module (DTE-FDM) and the Multi-modal Forgery Localization Module (MFLM) to interpret different tampering types and align visual-language features. According to the researchers, extensive experiments show that FakeShield outperforms traditional IFDL techniques in detecting and localizing various tampering methods.
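The detect-then-localize flow described above can be sketched as a small interface. This is only an illustration, not the authors' implementation: the class names, the `analyze` function, and the stub modules are hypothetical. It also assumes that the localization step is conditioned on the explanation text and is skipped for images judged authentic, which the article does not spell out.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Mask = List[List[int]]  # binary mask: 1 = tampered pixel, 0 = untouched


@dataclass
class Verdict:
    is_tampered: bool
    domain_tag: str    # e.g. "photoshop", "deepfake", "aigc-editing"
    explanation: str   # natural-language description of the tampering clues


@dataclass
class ForgeryReport:
    verdict: Verdict
    mask: Optional[Mask]  # None when the image is judged authentic


def analyze(image: object,
            detect: Callable[[object], Verdict],
            localize: Callable[[object, str], Mask]) -> ForgeryReport:
    """Run detection first, then localize only if tampering was reported."""
    verdict = detect(image)
    # Assumption: the localizer receives the explanation text as guidance.
    mask = localize(image, verdict.explanation) if verdict.is_tampered else None
    return ForgeryReport(verdict, mask)


# Stub modules standing in for DTE-FDM and MFLM (hypothetical behavior).
def stub_detect(image: object) -> Verdict:
    return Verdict(True, "photoshop",
                   "The lower-right region shows inconsistent lighting.")


def stub_localize(image: object, explanation: str) -> Mask:
    return [[0, 0], [0, 1]]


report = analyze(image=None, detect=stub_detect, localize=stub_localize)
print(report.verdict.explanation)
print(report.mask)
```

Passing the two modules in as callables keeps the sketch independent of any particular model, so real detectors and localizers could be swapped in without changing `analyze`.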

The proposed MMTD-Set enhances traditional IFDL datasets by integrating text descriptions with visual tampering information. Using GPT-4o, tampered images and their corresponding masks are paired with detailed descriptions that focus on tampering artifacts. The FakeShield framework comprises two key modules: the DTE-FDM for tamper detection and explanation, and the MFLM for precise mask generation. These modules work together to improve detection accuracy and interpretability. Experiments show that FakeShield outperforms previous methods across Photoshop, DeepFake, and AIGC-Editing datasets in detecting and localizing image forgeries.
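As a rough picture of what one image-mask-description record in such a dataset might look like, here is a minimal sketch. The field names, the tag spellings, and the file paths are assumptions made for illustration; the article does not describe the actual MMTD-Set schema.

```python
from dataclasses import dataclass
from typing import List

# Assumed spellings for the three tampering domains named in the article.
DOMAIN_TAGS = {"photoshop", "deepfake", "aigc-editing"}


@dataclass(frozen=True)
class TamperSample:
    image_path: str    # tampered image
    mask_path: str     # binary mask of the tampered region
    domain_tag: str    # which tampering domain the image belongs to
    description: str   # text describing the tampering artifacts


def validate(sample: TamperSample) -> List[str]:
    """Return a list of problems; an empty list means the record is usable."""
    problems = []
    if sample.domain_tag not in DOMAIN_TAGS:
        problems.append(f"unknown domain tag: {sample.domain_tag}")
    if not sample.description.strip():
        problems.append("empty description")
    if not sample.mask_path:
        problems.append("missing mask path")
    return problems


# Hypothetical record; the paths and text are made up for the example.
sample = TamperSample(
    image_path="images/0001.png",
    mask_path="masks/0001.png",
    domain_tag="photoshop",
    description="The pasted object has sharper edges than its surroundings.",
)
print(validate(sample))
```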

The MMTD-Set uses Photoshop, DeepFake, and self-constructed AIGC-Editing tampered images for training and testing. The proposed FakeShield framework, incorporating the DTE-FDM and MFLM, is compared against state-of-the-art methods like SPAN, MantraNet, and HiFi-Net. The reported results show superior performance in detecting and localizing forgeries across multiple datasets. FakeShield’s use of GPT-4o-generated descriptions and domain tags enhances its ability to handle diverse tampering types, making it more robust and accurate than competing image forgery detection and localization methods.
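Localization comparisons of this kind are commonly scored by overlap between a predicted mask and the ground-truth mask. The article does not say which metrics were used, so the sketch below simply shows two standard choices, intersection over union (IoU) and pixel-level F1, on plain nested lists. Treating two empty masks as a perfect match is a convention chosen here, not something taken from the source.

```python
from typing import List, Tuple

Mask = List[List[int]]  # binary mask: 1 = tampered pixel, 0 = untouched


def confusion_counts(pred: Mask, truth: Mask) -> Tuple[int, int, int]:
    """Count true positives, false positives, and false negatives per pixel."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif t and not p:
                fn += 1
    return tp, fp, fn


def iou(pred: Mask, truth: Mask) -> float:
    """Intersection over union of the tampered regions."""
    tp, fp, fn = confusion_counts(pred, truth)
    denom = tp + fp + fn
    return tp / denom if denom else 1.0  # both masks empty: perfect match


def f1(pred: Mask, truth: Mask) -> float:
    """Pixel-level F1, the harmonic mean of precision and recall."""
    tp, fp, fn = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


pred = [[1, 1], [0, 0]]
truth = [[1, 0], [1, 0]]
print(iou(pred, truth))  # one shared pixel out of three marked: 1/3
print(f1(pred, truth))   # 2*1 / (2*1 + 1 + 1) = 0.5
```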

In conclusion, the study introduces FakeShield, a pioneering application of M-LLMs for explainable IFDL. FakeShield can detect manipulations, generate tampered region masks, and provide explanations by analyzing pixel-level and semantic clues. It leverages the MMTD-Set built using GPT-4o to enhance tampering analysis. By incorporating the DTE-FDM and the MFLM, FakeShield achieves robust detection and localization across diverse tampering types like Photoshop edits, DeepFake, and AIGC-based modifications, outperforming existing methods in explainability and accuracy.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post FakeShield: An Explainable AI Framework for Universal Image Forgery Detection and Localization Using Multimodal Large Language Models appeared first on MarkTechPost.
