Advancements in Extended Reality (XR) have allowed real-world entities to be fused into the virtual world. However, despite the many sensors, cameras, and expensive computer vision techniques involved, this integration raises two critical questions: 1) Does this blend truly capture the essence of real-world objects, or does it merely treat them as a backdrop? 2) If development continues at the current pace, will XR become “feasibly” accessible to the masses any time soon? Viewed on its own, without machine learning, the future of XR looks hazy: A) Current efforts bring surrounding objects into XR, but the integration is superficial and lacks meaningful interaction. B) Most people are not enthusiastic about working around the technological constraints required to experience the XR described in (A). Once AI applications such as real-time unsupervised segmentation and generative content creation enter the picture, XR has solid ground on which to achieve seamless integration.

A team of researchers at Google recently unveiled XR-Objects, which, in their own words, aims to make XR interaction feel like “right-clicking a digital file to open its context menu, but applied to physical objects.” The paper introduces ‘Augmented Object Intelligence’ (AOI), which uses AI to extract digital information from analog objects, a task previously regarded as difficult. AOI represents a shift towards seamless integration of real and virtual content and gives users access to context-appropriate digital interactions. The Google researchers combined AR advances in spatial understanding via SLAM with object detection and segmentation, integrated with a Multimodal Large Language Model (MLLM).

XR-Objects offers object-centric interaction, in contrast to the application-centric approach of Google Lens. Interactions are anchored directly to objects in the user’s environment and presented through a World-Space UI, which saves users the hassle of navigating through applications and manually selecting objects. To stay visually appealing and avoid clutter, digital information is shown in semi-transparent bubbles that serve as subtle, minimalist prompts.

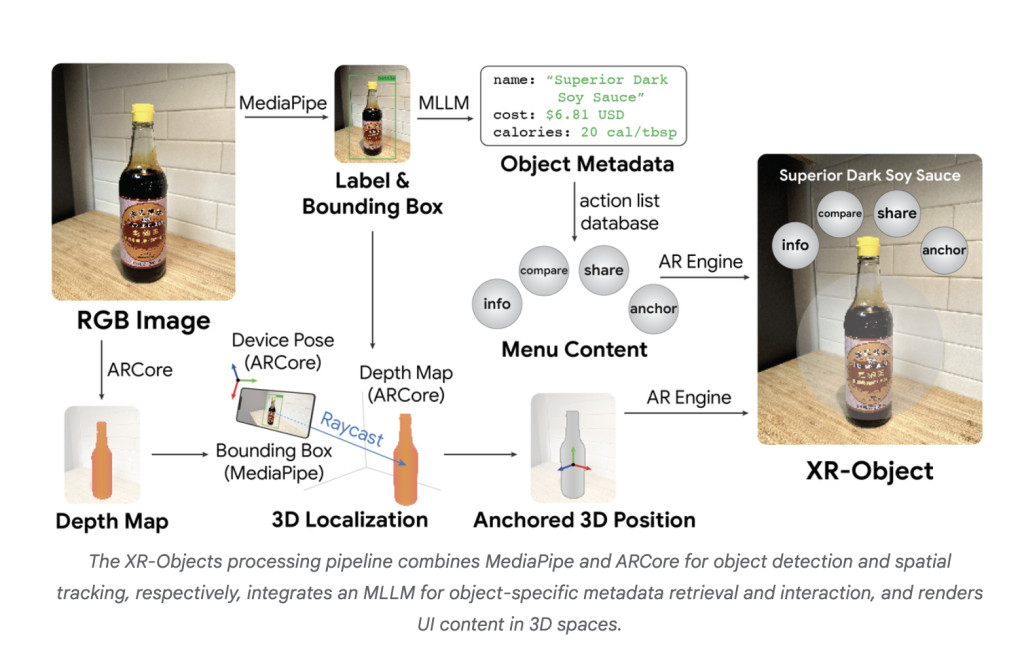

The framework behind this is straightforward. The four-step strategy is: A) object detection, B) localization and anchoring of objects, C) coupling each object with an MLLM, and D) action execution. Google’s MediaPipe library, which uses a mobile-optimized CNN, handles the first step and generates 2D bounding boxes that initiate AR anchoring and localization. Currently, this CNN is trained on the COCO dataset, which covers 80 object categories. Depth maps are then used for AR localization, and an object proxy template containing the object’s context menu is instantiated. Finally, an MLLM (PaLI) is coupled with each object, and the cropped bounding box from step A becomes the prompt. This is what lets the system identify “Superior Dark Soy Sauce” rather than just an ordinary bottle in your kitchen.
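
The four steps above can be sketched roughly as follows. This is a minimal illustration, not the XR-Objects implementation: it does not call MediaPipe, ARCore, or PaLI. The names `Detection`, `ObjectProxy`, `unproject`, `crop`, `build_proxy`, and `fake_mllm` are hypothetical, the pinhole camera model and its intrinsics are assumptions, and the menu entries are placeholders. It takes one 2D bounding box, lifts its centre into 3D using a depth map, and passes the cropped pixels to a stubbed MLLM call.

```python
from dataclasses import dataclass, field


@dataclass
class Detection:
    """A 2D detection: coarse class label plus pixel box (x0, y0, x1, y1)."""
    label: str
    box: tuple


@dataclass
class ObjectProxy:
    """Hypothetical stand-in for an anchored object proxy and its context menu."""
    label: str
    anchor_xyz: tuple
    menu: list = field(default_factory=list)


def unproject(u, v, depth_m, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) at depth_m into camera space."""
    return ((u - cx) * depth_m / fx, (v - cy) * depth_m / fy, depth_m)


def crop(image, box):
    """Cut the bounding box out of a row-major image (a list of rows)."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]


def build_proxy(det, image, depth_map, intrinsics, describe):
    """Steps B to D for one detection: anchor it, describe it, attach a menu."""
    x0, y0, x1, y1 = det.box
    u, v = (x0 + x1) // 2, (y0 + y1) // 2  # box centre in pixels
    anchor = unproject(u, v, depth_map[v][u], *intrinsics)
    # The cropped region acts as the visual prompt; `describe` is a stub
    # standing in for whatever MLLM is used.
    detail = describe(crop(image, det.box), det.label)
    return ObjectProxy(detail, anchor, ["Info", "Compare", "Ask a question"])


def fake_mllm(pixels, coarse_label):
    """Placeholder MLLM: reports the crop size instead of recognising anything."""
    return f"{coarse_label} ({len(pixels)}x{len(pixels[0])} px crop)"


# Toy inputs: a 6x8 image, a flat depth map at 2 m, made-up intrinsics.
image = [[0] * 8 for _ in range(6)]
depth_map = [[2.0] * 8 for _ in range(6)]
intrinsics = (4.0, 4.0, 2.0, 1.0)  # fx, fy, cx, cy (assumed values)
proxy = build_proxy(Detection("bottle", (2, 1, 6, 5)), image, depth_map,
                    intrinsics, fake_mllm)
print(proxy.label, proxy.anchor_xyz)
```

In a real system the detector would supply the `Detection`, the AR runtime would supply the depth map and camera intrinsics, and `describe` would be replaced by an actual MLLM query that turns the generic "bottle" label into a specific product name.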

Google ran a user study comparing XR-Objects against Gemini, and the results were unsurprising given the above. XR-Objects came out ahead on time taken and on form factor for the HMD. For the phone form factor, preferences were split between the chatbot and XR-Objects. HALIE survey results were similar for the chatbot and XR-Objects. Participants gave appreciative feedback on how helpful and efficient XR-Objects was, and also suggested ways to improve its ergonomics.

This new AOI paradigm is promising and should grow as LLM capabilities accelerate. It will be interesting to see whether its competitor Meta, which has made major strides in segmentation and LLMs, develops new solutions to surpass XR-Objects and take XR to a new zenith.

Check out the Paper and Details. All credit for this research goes to the researchers of this project.

The post XR-Objects: A New Open-Source Augmented Reality Prototype that Transforms Physical Objects into Interactive Digital Portals Using Real-Time Object Segmentation and Multimodal Large Language Models appeared first on MarkTechPost.
