Diagnostic errors are common and can cause significant harm to patients. Approaches such as education and reflective practice have been used to reduce these errors, but their success has been limited, especially at larger scale. Large language models (LLMs), which generate human-like responses from text prompts, have shown promise in handling complex cases and patient interactions. These models are beginning to be incorporated into healthcare, where they will likely enhance, rather than replace, human expertise. Further research is required to understand their effect on diagnostic reasoning and accuracy.

Researchers conducted a randomized clinical vignette study to assess how GPT-4, an AI language model, affects physicians’ diagnostic reasoning compared with traditional diagnostic resources. Physicians were randomized into two groups: one using GPT-4 alongside conventional resources and the other using only conventional resources. Results showed no statistically significant improvement in overall diagnostic performance for the GPT-4 group. Time spent per case was somewhat lower with GPT-4, but that difference was not statistically significant either. GPT-4 alone outperformed both physician groups in diagnostic performance. These findings suggest potential benefits of AI-physician collaboration, but further research is needed to optimize this integration in clinical settings.

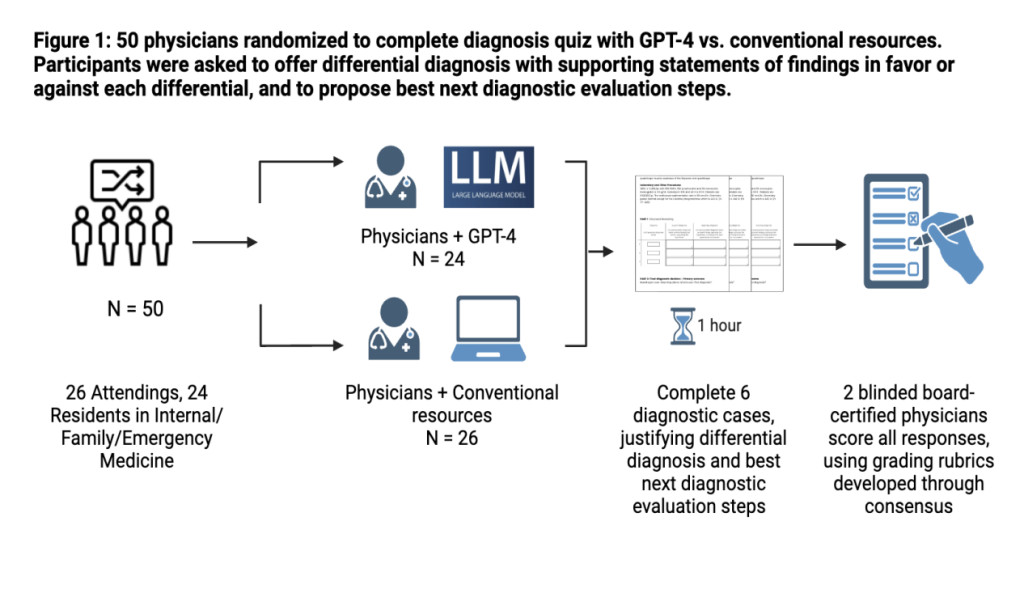

Participants were randomized into two groups: one with access to GPT-4 via the ChatGPT Plus interface in addition to conventional diagnostic resources, and the other using conventional diagnostic resources only. They were given one hour to complete up to six clinical vignettes adapted from real patient cases. The primary outcome was diagnostic reasoning, assessed with a structured reflection tool; secondary outcomes included diagnostic accuracy and time spent on each case. Participants were compensated for their involvement, with residents receiving $100 and attendings up to $200.

The vignettes were based on landmark studies and included patient history, physical exams, and lab results, ensuring relevance to modern medical practice. To evaluate diagnostic performance holistically, the researchers used a structured reflection grid in which participants recorded their reasoning and proposed the next diagnostic steps. Performance was scored on the correctness of the differential diagnoses, the supporting and opposing evidence cited for each, and the appropriateness of the next steps. Statistical analyses assessed differences between the GPT-4 and control groups, accounting for factors such as participant experience and case difficulty.
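To make the scoring idea concrete, here is a minimal sketch of how a structured reflection grid could be turned into a percentage score. The article does not give the study's actual rubric, so the point scale (`MAX_ITEM_POINTS`), the class names, and the equal weighting of items are all assumptions made for illustration only.

```python
from dataclasses import dataclass

# Hypothetical scale: each graded item earns 0, 1, or 2 points.
# The study's real rubric and weights are not described in this article.
MAX_ITEM_POINTS = 2


@dataclass
class DifferentialRow:
    """One row of the grid: a proposed diagnosis plus the evidence cited for it."""
    diagnosis_points: int   # how plausible/correct the proposed diagnosis is
    supporting_points: int  # quality of findings cited in favor
    opposing_points: int    # quality of findings cited against


@dataclass
class ReflectionGrid:
    rows: list[DifferentialRow]
    next_steps_points: int  # appropriateness of the proposed next diagnostic steps


def grid_score_percent(grid: ReflectionGrid) -> float:
    """Return the grid's score as a percentage of the maximum attainable points."""
    items = [grid.next_steps_points]
    for row in grid.rows:
        items += [row.diagnosis_points, row.supporting_points, row.opposing_points]
    for points in items:
        if not 0 <= points <= MAX_ITEM_POINTS:
            raise ValueError(f"item score {points} is outside 0..{MAX_ITEM_POINTS}")
    return 100.0 * sum(items) / (MAX_ITEM_POINTS * len(items))


# Made-up grades for a single vignette, not data from the study.
example = ReflectionGrid(
    rows=[DifferentialRow(2, 2, 1), DifferentialRow(1, 1, 0)],
    next_steps_points=2,
)
print(f"{grid_score_percent(example):.1f}%")
```

Normalizing to a percentage keeps scores comparable across vignettes even when participants list different numbers of candidate diagnoses, which is one plausible reason to report scores on a 0 to 100 scale.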

The study involved 50 US physicians (26 attendings, 24 residents) with a median of 3 years of practice. The GPT-4 group achieved a slightly higher diagnostic performance score than the conventional-resources group (median 76.3 vs. 73.7), but the difference was not statistically significant (p=0.6). Time spent per case was also somewhat lower with GPT-4 (519 vs. 565 seconds), though again not significantly so (p=0.15). Subgroup analyses showed similar trends. GPT-4 alone outperformed physicians using conventional resources, scoring significantly higher (p=0.03).
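For readers who want a feel for what "not statistically significant" means for a difference in median scores, the following is a minimal sketch of a two-sided permutation test. It is not the analysis the researchers ran: it ignores participant experience and case difficulty, the function name `permutation_test_median` is hypothetical, and the scores are invented for illustration.

```python
import random
from statistics import median


def permutation_test_median(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group medians.

    Returns (observed_difference, p_value).
    """
    rng = random.Random(seed)
    observed = median(group_a) - median(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        # Reassign group labels at random and recompute the difference.
        rng.shuffle(pooled)
        diff = median(pooled[:n_a]) - median(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # The add-one correction keeps the estimated p-value strictly positive.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value


# Invented scores, NOT data from the study.
llm_group = [76, 81, 68, 74, 90, 62, 79, 71]
control_group = [73, 70, 77, 65, 84, 69, 75, 66]

observed, p_value = permutation_test_median(llm_group, control_group)
print(f"difference in medians: {observed}, p = {p_value:.3f}")
```

A large p-value here would mean that a gap of this size arises often when group labels are shuffled at random, which is the sense in which a small difference between two groups of about 25 physicians each can fail to reach significance.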

The study found that giving physicians access to GPT-4 did not significantly improve their diagnostic reasoning on complex clinical cases, even though the LLM alone outperformed both physician groups. Time spent per case was slightly lower for those using GPT-4, but the difference was not statistically significant. Although GPT-4 showed potential for improving diagnostic accuracy and efficiency, more research is needed to optimize its integration into clinical workflows. The study emphasizes the need for better clinician-AI collaboration, including training in prompt engineering and exploring how AI can effectively support medical decision-making.

The post Evaluating the Impact of GPT-4 on Physician Diagnostic Reasoning: Insights and Future Directions for AI Integration in Clinical Practice appeared first on MarkTechPost.
