One of the critical challenges in developing and deploying Large Language Models (LLMs) is ensuring that they are aligned with human values. As LLMs are applied across diverse fields and tasks, the risk that they behave in ways that contradict ethical norms or propagate cultural biases becomes a significant concern. Addressing this challenge is essential for integrating AI systems into society safely and ethically, particularly in sensitive areas such as healthcare, law, and education, where value misalignment could have serious negative consequences. The central difficulty lies in capturing a diverse and comprehensive set of human values within these models, so that they behave consistently with ethical principles across different cultural contexts.

Current approaches to aligning LLMs with human values include techniques such as Reinforcement Learning from Human Feedback (RLHF), constitutional learning, and safety fine-tuning. These methods typically rely on human-annotated data and predefined ethical guidelines to instill desired behaviors in AI systems. However, they have significant limitations. RLHF, for example, is vulnerable to the subjective nature of human feedback, which can introduce inconsistencies and cultural biases into the training process. These approaches also tend to be computationally expensive, which makes them less viable for real-time applications. Most importantly, existing methods offer a limited view of human values and often fail to capture the complexity and variability inherent in different cultural and ethical systems.

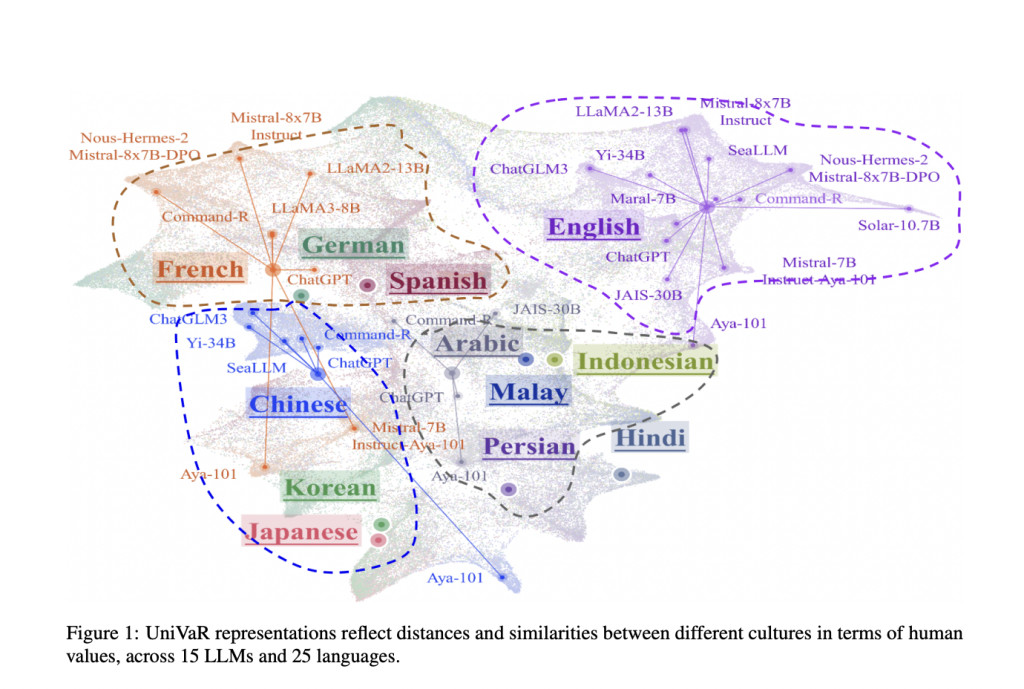

Researchers from the Hong Kong University of Science and Technology propose UniVaR, a high-dimensional neural representation of human values in LLMs. The method is independent of model architecture and training data, which makes it adaptable and scalable across applications. UniVaR is a continuous representation learned in a self-supervised manner from the value-relevant outputs of multiple LLMs, and it is evaluated across different models and languages. Its key contribution is capturing a broader, more nuanced spectrum of human values, which enables a more transparent and accountable analysis of how LLMs prioritize those values across cultural and linguistic contexts.

UniVaR learns a value embedding Z that represents the value-relevant factors of an LLM. It first elicits value-related responses from LLMs through a curated set of question-answer (QA) pairs. These QA pairs are then processed with multi-view learning, which compresses the information so that irrelevant factors are discarded while value-relevant ones are retained. The researchers used a dataset of approximately 1 million QA pairs, generated from 87 core human values and translated into 25 languages. The dataset was further processed to reduce linguistic variation, keeping the representation of values consistent across languages.
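The article does not spell out UniVaR's training objective, so the following is only a minimal sketch of one common way to implement multi-view learning: an InfoNCE-style contrastive loss. It assumes that two disjoint sets of QA pairs elicited from the same LLM form a positive pair of "views" and that views from other LLMs act as negatives; minimizing the loss pulls views of the same model together, so shared (value-relevant) factors are kept while view-specific noise is discarded. The function names, the temperature of 0.1, and the toy one-hot embeddings are illustrative assumptions, not details from the paper.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length (zero vectors are left unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def info_nce(view_a, view_b, temperature=0.1):
    """One-directional InfoNCE loss over a batch of paired views.

    Hypothetical setup: view_a[i] and view_b[i] are embeddings of two
    disjoint QA-pair sets from the same LLM i (the positive pair);
    every view_b[j] with j != i serves as a negative.
    """
    za = [l2_normalize(v) for v in view_a]
    zb = [l2_normalize(v) for v in view_b]
    n = len(za)
    total = 0.0
    for i in range(n):
        # Cosine similarity of view i against every candidate, scaled by temperature.
        logits = [dot(za[i], zb[j]) / temperature for j in range(n)]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_sum_exp - logits[i]  # negative log-softmax of the positive
    return total / n


# Toy example: three "LLMs" whose two views either match or are mismatched.
aligned = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
shuffled = aligned[1:] + aligned[:1]
print(info_nce(aligned, aligned))   # near 0: each view matches its own model
print(info_nce(aligned, shuffled))  # about 10: positives are dissimilar
```

In a real system the embeddings would come from a trainable encoder over the QA text and the loss would be minimized by gradient descent; the plain-Python version above only shows what the objective rewards.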

UniVaR captures and represents human values within LLMs substantially more accurately than existing representations. In value identification tasks it reaches a top-1 accuracy of 20.37%, far surpassing baseline encoders such as BERT and RoBERTa, whose accuracies range from 1.78% to 4.03%. It also outperforms these baselines in broader evaluations, reflecting its effectiveness at embedding and recognizing diverse human values across languages and cultural contexts. These results suggest that UniVaR offers a more reliable and nuanced way to analyze value alignment in AI than previously available methods.
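Top-1 accuracy counts a prediction as correct only when the single highest-scoring candidate is the right one; top-k relaxes this to "the right answer appears among the k best-scoring candidates." When there are many candidate classes, even a modest-looking top-1 figure can sit well above chance. The helper below is a generic sketch of the metric, not the paper's evaluation code; the function name and the toy scores are hypothetical.

```python
def top_k_accuracy(scores, labels, k=1):
    """Fraction of examples whose true label is among the k highest-scoring classes.

    scores[i][c] is the score for class c on example i;
    labels[i] is the index of the correct class for example i.
    """
    if not scores:
        raise ValueError("scores must be non-empty")
    hits = 0
    for row, label in zip(scores, labels):
        # Rank class indices by descending score and keep the best k.
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        hits += label in top_k
    return hits / len(scores)


# Toy example: 4 examples, 3 candidate classes.
scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5], [0.6, 0.1, 0.3]]
labels = [1, 1, 2, 2]
print(top_k_accuracy(scores, labels, k=1))  # 0.5
print(top_k_accuracy(scores, labels, k=2))  # 1.0
```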

The proposed method represents a significant advance in aligning LLMs with human values. UniVaR offers a high-dimensional framework that addresses the limitations of existing methods by providing a continuous, scalable, and culturally adaptable representation of human values. By delivering accurate and nuanced value representations across languages and cultures, UniVaR supports the ethical deployment of AI technologies, helping ensure that LLMs operate in a manner consistent with diverse human values and ethical principles.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Are Language Models Culturally Aware? This AI Paper Unveils UniVaR: a Novel AI Approach to High-Dimension Human Value Representation appeared first on MarkTechPost.