The research evaluates the reliability of large language models (LLMs) such as GPT, LLaMA, and BLOOM, which are used extensively across domains including education, medicine, science, and administration. As these models become more prevalent, understanding their limitations and potential pitfalls is crucial. The research highlights that as these models grow in size and complexity, their reliability does not necessarily improve. Instead, performance can decline on seemingly simple tasks, producing misleading outputs that may go unnoticed by human supervisors. This trend points to the need for a more thorough examination of LLM reliability beyond conventional performance metrics.

The central issue explored in the research is that while scaling up LLMs makes them more powerful, it also introduces unexpected behavioral patterns. Specifically, these models may become less stable and produce erroneous outputs that appear plausible at first glance. The issue is tied to the techniques used to enhance their performance: instruction fine-tuning, human feedback, and reinforcement learning. Despite these advancements, LLMs struggle to maintain consistent reliability across tasks of varying difficulty, which raises concerns about their robustness and suitability for applications where accuracy and predictability are critical.

Existing methodologies to address these reliability concerns include scaling up the models, which involves increasing the parameter count, training data, and computational resources. For example, GPT-3 models range from 350 million to 175 billion parameters, while LLaMA models range from 6.7 billion to 70 billion. Although scaling has improved the handling of complex queries, it has also caused failures on simpler instances that users would expect to be handled easily. Similarly, shaping the models with techniques like Reinforcement Learning from Human Feedback (RLHF) has shown mixed results, often producing models that generate plausible yet incorrect responses instead of simply avoiding the question.

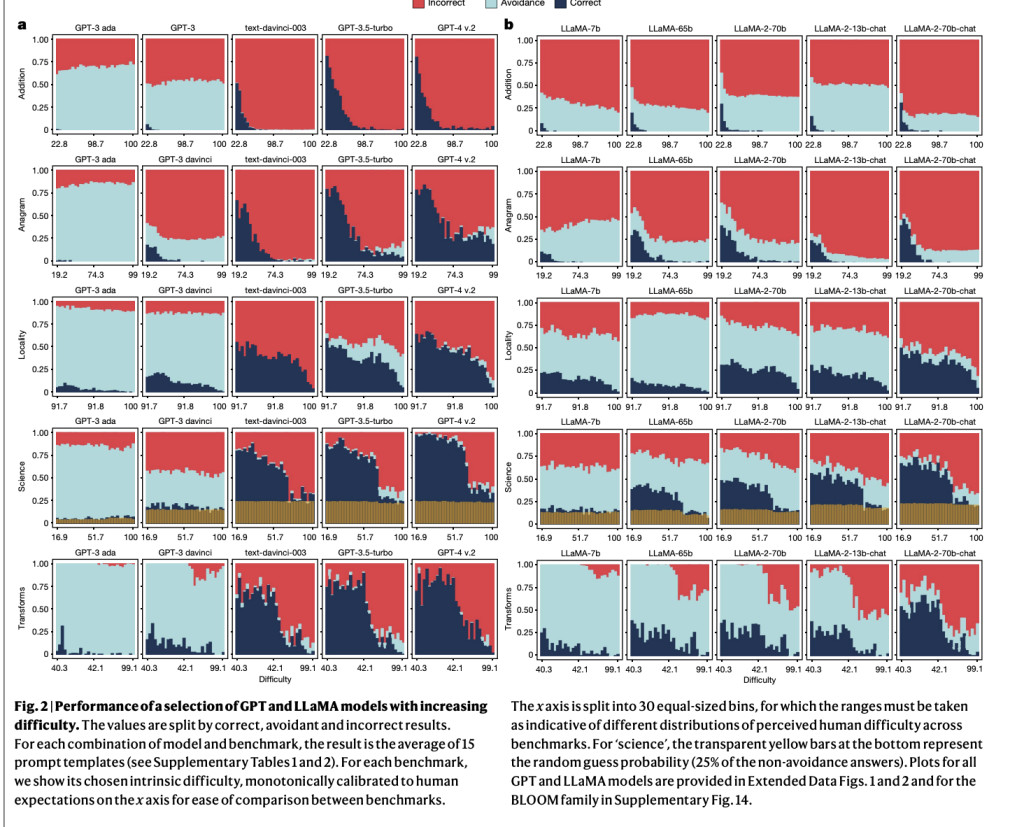

Researchers from Universitat Politècnica de València and the University of Cambridge introduced the ReliabilityBench framework to systematically evaluate the reliability of LLMs across five domains: numeracy (‘addition’), vocabulary reshuffle (‘anagram’), geographical knowledge (‘locality’), basic and advanced science questions (‘science’), and information-centric transformations (‘transforms’). For instance, in the ‘addition’ domain, models were tested on arithmetic ranging from simple one-digit sums to complex 100-digit additions. The LLMs often performed poorly on tasks involving carry operations, and their success rate dropped sharply for longer additions. Similarly, in the ‘anagram’ task, which consists of rearranging letters to form words, performance varied significantly with word length, reaching a 96.78% failure rate for the longest anagram tested. This domain-specific benchmarking reveals LLMs’ nuanced strengths and weaknesses, offering a deeper understanding of their capabilities.
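Carry operations and word length drive much of the difficulty in the ‘addition’ and ‘anagram’ domains. As a rough illustration, the hypothetical helpers below (not taken from ReliabilityBench) count the carries in a schoolbook addition and check whether a model's anagram answer uses exactly the scrambled letters and appears in a word list; the lowercase vocabulary set is an assumed input.

```python
def count_carries(a: int, b: int) -> int:
    """Count the carry operations in the schoolbook addition of two non-negative integers."""
    if a < 0 or b < 0:
        raise ValueError("only non-negative integers are supported")
    carries = 0
    carry = 0
    # Walk the digits from least to most significant, tracking the running carry.
    while a > 0 or b > 0:
        digit_sum = a % 10 + b % 10 + carry
        carry = 1 if digit_sum >= 10 else 0
        carries += carry
        a //= 10
        b //= 10
    return carries


def is_valid_anagram(scrambled: str, answer: str, vocabulary: set[str]) -> bool:
    """Accept an answer only if it rearranges exactly the scrambled letters
    and appears in the supplied (hypothetical, lowercase) word list."""
    word = answer.strip().lower()
    return sorted(scrambled.lower()) == sorted(word) and word in vocabulary


if __name__ == "__main__":
    print(count_carries(999, 1))
    print(is_valid_anagram("tca", "cat", {"cat", "act"}))
```

Counting carries gives one simple, checkable proxy for why two additions with the same number of digits can differ in difficulty.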

The research findings show that while scaling and shaping strategies improve LLM performance on complex questions, they often degrade reliability for simpler ones. For example, models like GPT-4 and LLaMA-2, which excel at answering complex scientific queries, still make basic errors in simple arithmetic or word reshuffling tasks. In addition, LLaMA-2’s performance on geographical knowledge questions, measured using a locality benchmark, indicated a high sensitivity to small variations in prompt phrasing. While the models displayed notable accuracy for well-known cities, they struggled significantly when dealing with less popular locations, resulting in an error rate of 91.7% for cities not found in the top 10% by population.
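To make the locality finding concrete, here is a minimal sketch of how error rates could be split by city popularity. It assumes each graded answer is stored together with the city's population and computes the top-10% cutoff within the evaluated set itself; the paper may define the cutoff differently, and `LocalityResult` and `error_rates_by_popularity` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class LocalityResult:
    """One graded answer to a hypothetical locality question about a city."""
    city: str
    population: int
    correct: bool


def error_rates_by_popularity(
    results: list[LocalityResult], top_fraction: float = 0.10
) -> dict[str, float]:
    """Return the error rate for the most populous `top_fraction` of the evaluated
    cities ("top") and for all remaining cities ("rest")."""
    if not results:
        raise ValueError("results must be non-empty")
    ranked = sorted(results, key=lambda r: r.population, reverse=True)
    # Assumption: the cutoff is computed within the evaluated set, keeping at least one city.
    cutoff = max(1, round(len(ranked) * top_fraction))
    groups = {"top": ranked[:cutoff], "rest": ranked[cutoff:]}
    return {
        name: sum(not r.correct for r in group) / len(group)
        for name, group in groups.items()
        if group  # skip an empty "rest" group when the set is tiny
    }
```

Reporting the two groups separately, rather than one overall accuracy, is what exposes the gap between well-known and less popular locations.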

The results indicate that shaped-up models are more prone to producing incorrect yet sensible-looking answers than their earlier counterparts, which more often avoided responding when uncertain. The researchers observed that avoidance, measured as the proportion of unanswered questions, was 15% higher in older models like GPT-3 than in the newer GPT-4, where it dropped to nearly zero. This reduction in avoidance, while potentially beneficial for user experience, led to more frequent incorrect responses, particularly on easy tasks. Consequently, the models’ apparent reliability decreased, undermining user confidence in their outputs.
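The distinction between incorrect and avoidant answers can be sketched as a three-way grading step. The version below is an illustrative assumption, not the researchers' grader: it treats empty replies or a small hypothetical list of refusal phrases as avoidance, uses exact string matching for correctness, and reports the share of each outcome.

```python
from collections import Counter
from enum import Enum


class Outcome(Enum):
    CORRECT = "correct"
    INCORRECT = "incorrect"
    AVOIDANT = "avoidant"


# Hypothetical refusal phrases; a real grader would need a far more robust check.
AVOIDANCE_MARKERS = ("i don't know", "i cannot", "i'm not sure", "unable to answer")


def classify(response: str, expected: str) -> Outcome:
    """Label one response as avoidant, correct (exact match), or incorrect."""
    text = response.strip().lower()
    if not text or any(marker in text for marker in AVOIDANCE_MARKERS):
        return Outcome.AVOIDANT
    if text == expected.strip().lower():
        return Outcome.CORRECT
    return Outcome.INCORRECT


def outcome_rates(pairs: list[tuple[str, str]]) -> dict[Outcome, float]:
    """Return the share of each outcome over (response, expected) pairs."""
    if not pairs:
        raise ValueError("pairs must be non-empty")
    counts = Counter(classify(response, expected) for response, expected in pairs)
    return {outcome: counts[outcome] / len(pairs) for outcome in Outcome}
```

Exact matching and keyword-based refusal detection are deliberately crude; they keep the example short but would misgrade paraphrased answers.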

In conclusion, the research underscores the need for a paradigm shift in designing and developing LLMs. The proposed ReliabilityBench framework provides a robust evaluation methodology that moves from aggregate performance scores to a more nuanced assessment of model behavior based on human difficulty levels. This approach allows for the characterization of model reliability, paving the way for future research to focus on ensuring consistent performance across all difficulty levels. The findings highlight that despite advancements, LLMs have not yet achieved a level of reliability that aligns with human expectations, making them prone to unexpected failures that must be addressed through refined training and evaluation strategies.
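Reporting results per difficulty level rather than as a single aggregate can be sketched by binning graded outcomes on a difficulty score. This sketch assumes a human-difficulty score on a 0 to 100 scale and five equal-width bins; both choices, and the `reliability_by_difficulty` name, are hypothetical rather than ReliabilityBench's actual calibration.

```python
from collections.abc import Iterable

OUTCOMES = ("correct", "incorrect", "avoidant")


def reliability_by_difficulty(
    records: Iterable[tuple[float, str]],
    num_bins: int = 5,
    max_difficulty: float = 100.0,
) -> dict[int, dict[str, float]]:
    """Group (difficulty, outcome) records into equal-width difficulty bins and
    return the share of each outcome per non-empty bin (hypothetical scale)."""
    counts: dict[int, dict[str, int]] = {}
    for difficulty, outcome in records:
        if outcome not in OUTCOMES:
            raise ValueError(f"unknown outcome: {outcome!r}")
        if not 0 <= difficulty <= max_difficulty:
            raise ValueError(f"difficulty out of range: {difficulty}")
        # The maximum difficulty falls into the last bin rather than an extra one.
        index = min(int(difficulty / max_difficulty * num_bins), num_bins - 1)
        bin_counts = counts.setdefault(index, dict.fromkeys(OUTCOMES, 0))
        bin_counts[outcome] += 1
    return {
        index: {outcome: n / sum(bin_counts.values()) for outcome, n in bin_counts.items()}
        for index, bin_counts in sorted(counts.items())
    }


if __name__ == "__main__":
    graded = [(5, "correct"), (10, "incorrect"), (95, "correct"), (100, "avoidant")]
    print(reliability_by_difficulty(graded))
```

Because each bin reports correct, incorrect, and avoidant shares separately, a model whose errors concentrate on easy items shows up directly in the low-difficulty bins, which a single overall accuracy figure would hide.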

Check out the Paper and HF Page. All credit for this research goes to the researchers of this project.


The post ReliabilityBench: Measuring the Unpredictable Performance of Shaped-Up Large Language Models Across Five Key Domains of Human Cognition appeared first on MarkTechPost.
