Amazon Timestream for LiveAnalytics is a fast, scalable, and serverless time-series database that makes it straightforward and cost-effective to store and analyze trillions of events per day. Customers across a broad range of industry verticals have adopted Amazon Timestream to derive real-time insights, monitor critical business applications, and analyze millions of real-time events across applications, websites, and devices, including Internet of Things (IoT) devices. Timestream automatically scales to meet the demands of your workload without requiring you to manage the underlying infrastructure, so you can focus on building your applications.

In response to customer feedback, we introduced a new pricing model designed to make querying time series data more cost-effective and predictable. The previous pricing model for queries charged based on the amount of data scanned, with a minimum of 10 MB per query, which could be expensive for use cases where queries often scanned only a few KB of time series data. In addition, it was challenging to limit query spend. To address these concerns, the new model charges for the compute resources used rather than the amount of data scanned. Based on Timestream Compute Units (TCUs), it aligns costs with the resources your queries actually use, and you can configure the maximum compute used for queries to help you stay within budget.

In this post, we provide an overview of TCUs, cost controls using TCUs, and how to estimate the desired compute units for your workload for optimal price-performance.

Timestream Compute Units

A TCU is a unit of serverless compute capacity allocated for your query needs in Timestream for LiveAnalytics. Each TCU comprises 4 vCPUs and 16 GB of memory. When you query your time series data, the service determines the number of TCUs required based primarily on the complexity of your queries (real-time or analytical) and the amount of data being processed, among other factors. Because each compute unit runs multiple queries concurrently, the service evaluates whether a query can be served by the TCUs already in use or whether additional compute units are required, and scales out on demand when they are. Your cost is determined by the number of TCUs and how long your query workload uses them, which makes query spend transparent and easier to manage.

Cost control

One of the benefits of TCUs is that they help you control costs and manage query spending more effectively. You can set MaxQueryTCU per account per AWS Region, and the service scales your query workload only up to that limit. The limit therefore acts as a cost ceiling that helps you stay within budget.

You can set the maximum number of compute units in multiples of 4. You are charged only for the compute resources you use: if you set the maximum to 128 TCUs but consistently use only 8 TCUs, you are charged only for the duration during which you used those 8 TCUs. Pay-per-use pricing combined with a configurable usage limit provides a high degree of cost transparency and predictability, enabling you to better plan and manage your query costs. When you onboard to the service, each AWS account has a default MaxQueryTCU limit of 200.

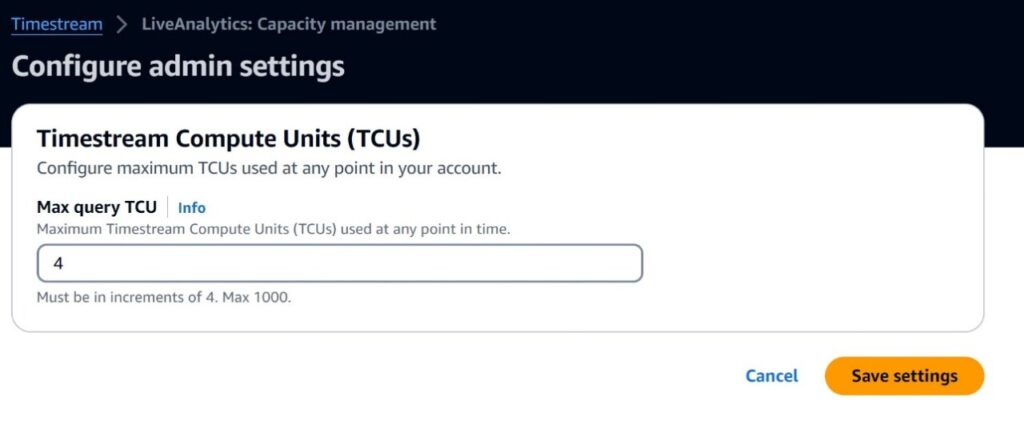

You can increase or decrease MaxQueryTCU with the UpdateAccountSettings API or on the Timestream console. To use the console, complete the following steps. We provide more details on how to estimate your TCU needs later in this post.

On the Admin dashboard of the Timestream console, choose Configure admin settings.

Set your desired value for Max query TCU and choose Save settings.

If your account is currently billed for the amount of data scanned by your queries, saving this setting is a one-time opt-in to TCUs. In the additional pop-up that appears, enter confirm and choose Confirm.

Depending on your current usage, a reduction in the maximum TCU limit might take up to 24 hours to take effect. You’re billed only for the TCUs that your queries actually consume, so a higher maximum limit doesn’t affect your costs unless your workload uses those TCUs.
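For the API route, the following is a minimal sketch using the AWS SDK for Python (boto3). It assumes boto3 is installed and that AWS credentials and a default Region are configured; the validation range (4 to 1000, in steps of 4) is taken from the limits described in this post rather than from the API itself, and the helper names are hypothetical.

```python
# Sketch: adjust MaxQueryTCU through the UpdateAccountSettings API with boto3.
# Assumptions: boto3 is installed, AWS credentials and a default Region are
# configured, and the valid range is 4-1000 in steps of 4 (as stated in this post).


def is_valid_max_query_tcu(value: int) -> bool:
    """True if value is a multiple of 4 between 4 and 1000 inclusive."""
    return isinstance(value, int) and 4 <= value <= 1000 and value % 4 == 0


def set_max_query_tcu(value: int, region_name=None) -> dict:
    """Set MaxQueryTCU for the current account in one Region and return the response."""
    if not is_valid_max_query_tcu(value):
        raise ValueError(f"MaxQueryTCU must be a multiple of 4 between 4 and 1000, got {value!r}")
    import boto3  # imported here so the validator above runs without boto3

    client = boto3.client("timestream-query", region_name=region_name)
    # Accounts still billed by bytes scanned may also need QueryPricingModel;
    # check the UpdateAccountSettings API reference before relying on this call.
    return client.update_account_settings(MaxQueryTCU=value)
```

For example, `set_max_query_tcu(8)` would cap query compute at 8 TCUs in the configured Region. Validating locally first keeps an obviously invalid value from ever reaching the API.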

Billing with TCUs

With TCUs, you’re billed per second for the compute resources used, with a minimum of 30 seconds, and usage is metered in TCU-hours. When your application submits queries to Timestream, the service checks whether it can process them on the compute units already in use. If not, it scales up by adding compute units to handle the queries. After running the queries, the service waits up to 10 seconds for additional queries; if none arrive within this period, it scales down the compute units in use to optimize resources and minimize costs.
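The billing rules above can be sketched as a small model. This is a simplification under stated assumptions, not the service’s metering logic: a burst of queries is assumed to keep the same TCUs busy from its first to its last second, the 10-second idle wait is always added before scale-down, and the 30-second minimum applies to the whole allocation.

```python
# Simplified model of the per-second TCU billing rules described above.
# Assumption: one burst of queries occupies a fixed number of TCUs continuously.

MIN_BILLED_SECONDS = 30  # per-second billing with a 30-second minimum
IDLE_WAIT_SECONDS = 10   # the service waits up to 10 seconds before scaling down


def billed_seconds(active_seconds: float) -> float:
    """Seconds billed for one burst of queries lasting active_seconds."""
    return max(MIN_BILLED_SECONDS, active_seconds + IDLE_WAIT_SECONDS)


def tcu_hours(tcus: int, active_seconds: float) -> float:
    """TCU-hours for one burst served on a fixed number of TCUs."""
    return tcus * billed_seconds(active_seconds) / 3600


print(billed_seconds(40))  # 50
print(billed_seconds(5))   # 30
```

A short burst of 5 seconds still pays for the 30-second minimum, while a 40-second burst is billed for 50 seconds once the idle wait is included.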

To illustrate billing with an example, assume your application sends queries that each complete in under 1 second, continuously from second t1 through second t40 of each minute, and sends no queries for the rest of the minute.

The compute units are billed for a minimum of 30 seconds, and the service scales them down only after the minimum time has elapsed and no queries have arrived for 10 seconds. Assume the service allocates 4 TCUs at t1 and places all subsequent queries on the same 4 TCUs. The TCUs stay in use from t1 through t40 plus the 10-second idle wait, so you are billed for 50 seconds (about 0.0138889 hours) of 4-TCU usage: 4 TCUs × 50 seconds ÷ 3,600 ≈ 0.05556 TCU-hours per minute. If this pattern repeats every minute for 8 hours a day, the total consumed is 0.05556 TCU-hours × 60 minutes × 8 hours ≈ 26.67 TCU-hours per day. With the TCU-hours in hand, you can look up the query price for your Region and calculate your cost accordingly.
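Because intermediate rounding can drift the daily total, the following sketch recomputes the worked example with exact fractions. It only encodes the assumptions stated in the example (4 TCUs, queries from t1 to t40 plus a 10-second idle wait, repeated every minute for 8 hours); the variable names are illustrative.

```python
from fractions import Fraction

TCUS = 4
BILLED_SECONDS_PER_MINUTE = 40 + 10  # queries t1..t40, then a 10-second idle wait
MINUTES_PER_DAY = 60 * 8             # the pattern repeats every minute for 8 hours

# Exact TCU-hours per minute and per day, with no intermediate rounding.
per_minute = Fraction(TCUS * BILLED_SECONDS_PER_MINUTE, 3600)
per_day = per_minute * MINUTES_PER_DAY

print(round(float(per_minute), 4))  # 0.0556
print(round(float(per_day), 2))     # 26.67
```

The exact daily value is 80/3 TCU-hours; multiplying that by the per-TCU-hour price for your Region gives the daily query cost for this pattern.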

Let’s walk through a couple more examples for a better understanding of billing:

Your workload uses 20 TCUs for 3 hours. You’re billed for 60 TCU-hours (20 TCUs x 3 hours).

Your workload uses 12 TCUs for 30 minutes, and then 20 TCUs for the next 30 minutes. You’re billed for 16 TCU-hours (12 TCUs x 0.5 hours + 20 TCUs x 0.5 hours).
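Both examples reduce to summing TCUs × hours over periods of steady usage. A minimal sketch follows; it ignores the per-second granularity and 30-second minimum, which are negligible at hour scale, and the function name is hypothetical.

```python
def tcu_hours_for_segments(segments):
    """Sum TCUs x hours over (tcus, hours) segments of steady usage."""
    return sum(tcus * hours for tcus, hours in segments)


print(tcu_hours_for_segments([(20, 3)]))               # 60
print(tcu_hours_for_segments([(12, 0.5), (20, 0.5)]))  # 16.0
```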

Estimating the number of TCUs

Timestream for LiveAnalytics automatically scales to adjust capacity and performance so you don’t need to manage the underlying infrastructure or provision capacity. It features a fully decoupled architecture where data ingestion, storage, and query can scale independently, allowing it to offer virtually infinite scale for an application’s needs.

The number of compute units required depends on the characteristics of the workload, such as query concurrency, data model, data scanned by queries, and others. To estimate the number of queries that can be handled, we simulated an infrastructure monitoring use case where the data is collected and analyzed in Timestream for LiveAnalytics and visualized through Grafana. To mirror real-world scenarios, we simulated two popular observability query patterns with multiple users monitoring the metrics, increasing the number of users from 1 to 21. We then varied the MaxQueryTCU setting (4 and 8) to observe the query volume handled and the corresponding latencies as users increased.

In this use case, we measured query volume and latency at 4 and 8 TCUs for two query patterns: a last point query that retrieves the latest memory utilization of a host (lastpoint-query.ipynb), and a query that bins CPU utilization over the last 10 minutes (single-groupby-orderby.ipynb). For each workload, we start with 1 user issuing queries to the service and scale up to 21 concurrent users. Each user configuration runs for 1 minute, and we record latencies (p50, p90, p99), queries per minute, and throttles (if any). Refer to README.md for additional details.

In the first simulation, we set MaxQueryTCU to 4 and run Query 1 (lastpoint-query.ipynb), which retrieves the most recent memory utilization for a given host.
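The exact query used in the simulation lives in lastpoint-query.ipynb and isn’t reproduced here. As a hypothetical illustration of the last point pattern, the following sketch builds a similar query and runs it with the boto3 Query paginator. The database, table, `hostname` dimension, `memory_utilization` measure name, and 15-minute lookback are all assumptions, and the string interpolation is for illustration only (validate inputs in real code).

```python
# Hypothetical "last point" query in the spirit of Query 1.
# Database, table, dimension, and measure names are assumptions.


def lastpoint_query(database: str, table: str, hostname: str) -> str:
    """Build a query for the most recent memory utilization of one host."""
    return f"""
        SELECT time, measure_value::double AS memory_utilization
        FROM "{database}"."{table}"
        WHERE hostname = '{hostname}'
          AND measure_name = 'memory_utilization'
          AND time > ago(15m)
        ORDER BY time DESC
        LIMIT 1
    """


def run_query(query_string: str) -> list:
    """Run a query with boto3 and collect the rows from every result page."""
    import boto3  # requires AWS credentials and a Region

    client = boto3.client("timestream-query")
    rows = []
    for page in client.get_paginator("query").paginate(QueryString=query_string):
        rows.extend(page["Rows"])
    return rows
```

Restricting the time range with `ago()` keeps the amount of data each lookup touches small, which matters when many dashboard users refresh the same panel concurrently.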

The following graphs show the latency percentiles (seconds), queries per minute, and throttling counts vs. number of users.

We start with one user and keep increasing the number of users accessing the time series data. With just 4 TCUs, the service supports 4,560 queries per minute, approximately 76 queries per second (QPS), with p99 latency under 160 milliseconds. As the number of Grafana users increases, latency rises and the number of queries per minute falls, but throttles remain minimal. This is because the SDK retries throttled queries (the default maximum of 3 retries is a best practice) before surfacing a throttling error. Because query latency includes retry time, p99 latency increases beyond 7 concurrent users (76 QPS), primarily due to multiple retry attempts. If sub-second query latency is acceptable for your use case, you can support up to 9 concurrent users and 4,853 queries per minute with 4 TCUs.

We increase MaxQueryTCU to 8 and rerun the test. Because the service allocates compute units on demand, it starts with 4 TCUs and allocates 4 additional TCUs as the workload increases. The following graphs show the metrics with 8 TCUs.

With 8 TCUs, the service supports 9,556 queries per minute (approximately 159 QPS) with p99 latency under 200 milliseconds. As the number of Grafana users increases, latency rises (while staying under 330 milliseconds), and the number of queries per minute decreases slightly, with no throttles. Notably, if sub-500-millisecond query latency is acceptable for your use case, you can support up to 15 concurrent users and 9,556 queries per minute with just 8 TCUs.

You can increase MaxQueryTCU up to 1,000 to scale as your user base grows. Depending on your desired spend, you can keep increasing MaxQueryTCU, or serve additional users with the existing TCUs if the latencies are acceptable for your use case.

Next, we repeat the simulation with Query 2 (single-groupby-orderby.ipynb), which performs binning, grouping, and ordering and is more resource-intensive than Query 1.
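As with Query 1, the simulation’s exact SQL is in the notebook. The following hypothetical sketch shows what a binning, grouping, and ordering query over the last 10 minutes of CPU utilization can look like in Timestream SQL; the table layout, `hostname` dimension, `cpu_utilization` measure name, and 1-minute bin size are assumptions.

```python
# Hypothetical binning/grouping/ordering query in the spirit of Query 2.
# Table layout, dimension, measure name, and bin size are assumptions.


def binned_cpu_query(database: str, table: str, bin_size: str = "1m") -> str:
    """Average CPU utilization per host in fixed-size bins over the last 10 minutes."""
    return f"""
        SELECT hostname,
               bin(time, {bin_size}) AS binned_time,
               avg(measure_value::double) AS avg_cpu_utilization
        FROM "{database}"."{table}"
        WHERE measure_name = 'cpu_utilization'
          AND time > ago(10m)
        GROUP BY hostname, bin(time, {bin_size})
        ORDER BY binned_time DESC
    """
```

Compared with a last point lookup, this query aggregates every record in the window across all hosts, which is why it needs more compute per query.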

The following graphs show the corresponding metrics for Query 2.

As in the earlier test, we start with one user and keep increasing the number of users accessing the data. With just 4 TCUs, you can support 3,310 queries per minute (approximately 55 QPS) for 6 concurrent users at 180 milliseconds of p99 latency. As the number of Grafana users increases, so does the latency, primarily due to retry overhead, resulting in fewer queries per minute.

The following graphs show the metrics with 8 TCUs.

With 8 TCUs, you can support 6,051 queries per minute (approximately 100 QPS) for 15 concurrent users at sub-500-millisecond p99 latency.

Choose the number of TCUs that meets your performance requirements. You could start with fewer TCUs and increase MaxQueryTCU in your account until you meet your performance criteria or find the right balance between price and performance. Time series data often arrives in high volume and at fine granularity. The scheduled query feature in Timestream is designed to support aggregation and rollup operations, which makes it well suited to feeding dashboards and visualizations from a derived table, where data is already aggregated and its volume reduced, for better performance. Refer to Improve query performance and reduce cost using scheduled queries in Amazon Timestream for more information.

As demonstrated in our simulations, 4 TCUs supported query volumes of 76 QPS and 55 QPS, roughly 197 million and 143 million queries per 30-day month, respectively. Supported query volumes depend on query complexity and could be higher than shown in these simulations. Notably, your spend for 76 QPS and 55 QPS is the same, because TCUs are charged based on the duration of compute usage, not the number of queries. To get the most from concurrent query runs, optimize your data model, queries, and workload to use the compute resources effectively, which can reduce costs, increase query throughput, and improve resource utilization.
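The conversions between queries per minute, QPS, and monthly query volume are simple arithmetic. The following sketch assumes a 30-day month; using an average month of about 730 hours instead gives totals roughly 1.4% higher.

```python
SECONDS_PER_30_DAY_MONTH = 30 * 24 * 60 * 60  # 2,592,000 seconds


def qps_from_per_minute(queries_per_minute: float) -> float:
    """Convert a measured queries-per-minute figure to queries per second."""
    return queries_per_minute / 60


def queries_per_month(qps: float) -> float:
    """Queries served in a 30-day month at a sustained QPS."""
    return qps * SECONDS_PER_30_DAY_MONTH


print(qps_from_per_minute(4_560))       # 76.0
print(f"{queries_per_month(76):,.0f}")  # 196,992,000
print(f"{queries_per_month(55):,.0f}")  # 142,560,000
```

Because the TCU charge depends on compute duration, both monthly volumes above would cost the same when served on the same 4 TCUs for the same amount of time.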

Estimating costs using the AWS Pricing Calculator

You can use the AWS Pricing Calculator to estimate your Timestream for LiveAnalytics monthly costs. In this section, we provide general guidance on what we consider real-time and analytical queries, so that you can estimate the query costs for your workload with the AWS Pricing Calculator.

Real-time queries – Queries that analyze measures within a small window (a few minutes, such as 5 or 15 minutes) or that typically scan a few hundred records.

Analytical queries – Queries that analyze measures across large time ranges (such as 12 hours, days, or months) and can include complex joins and subqueries, typically scanning a few thousand records. These queries need higher compute (CPU/memory) for aggregating and segmenting the data for better performance.

This is general guidance; some queries over a small window can still be costly, for example those that use interpolation, derivatives, or other complex functions, and may not qualify as real-time queries.
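If you want to sort an inventory of dashboard queries into these two buckets before filling in the calculator, a rough heuristic like the following can help. The thresholds (15 minutes, 500 records) are hypothetical values loosely derived from the guidance above, not service-defined limits, and the complex-functions flag reflects the caveat that costly functions can push a small-window query into the analytical bucket.

```python
from datetime import timedelta

# Hypothetical thresholds loosely based on the guidance above; tune for your workload.
REALTIME_MAX_WINDOW = timedelta(minutes=15)
REALTIME_MAX_RECORDS = 500


def classify_query(window: timedelta, records_scanned: int,
                   uses_complex_functions: bool = False) -> str:
    """Roughly label a query as 'real-time' or 'analytical' for cost estimation."""
    if uses_complex_functions:
        # Interpolation, derivatives, and similar functions can be costly even
        # over a small window, so treat them as analytical.
        return "analytical"
    if window <= REALTIME_MAX_WINDOW and records_scanned <= REALTIME_MAX_RECORDS:
        return "real-time"
    return "analytical"
```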

For simplicity, the pricing calculator assumes that 4 TCUs serve seven concurrent queries per second at a latency of 1 second. In our experiment, 4 TCUs handled 76 QPS with a p99 latency of less than 160 milliseconds. The pricing calculator assumes the queries are uniformly distributed across the chosen time unit (second, minute, hour, day, or month). When queries don’t run for more than 30 seconds (including the idle period), you’re not charged for the entire minute; the calculator doesn’t account for this, so your actual costs could be much lower than displayed. If you have a good understanding of your query workload, you can calculate costs more precisely using the examples shared earlier in this post.
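To see how conservative that assumption is, the following sketch converts a target QPS into TCUs under the stated 7-queries-per-second-per-4-TCUs rule, rounding up to a multiple of 4. It is an approximation of the assumption described above, not the calculator’s actual formula, and it ignores how the calculator spreads queries over longer time units.

```python
import math

QPS_PER_4_TCUS = 7  # simplifying assumption: 4 TCUs serve 7 QPS at 1-second latency


def estimated_tcus(target_qps: float) -> int:
    """Smallest multiple of 4 TCUs that covers target_qps under that assumption."""
    if target_qps <= 0:
        return 0
    return 4 * math.ceil(target_qps / QPS_PER_4_TCUS)
```

Under this rule, the 76 QPS measured for Query 1 would map to 44 TCUs, whereas the simulation served it with 4 TCUs, which illustrates why a measured estimate for your own queries can be much lower.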

Monitoring TCUs

To monitor your TCU usage effectively, Timestream for LiveAnalytics provides the Amazon CloudWatch metric QueryTCU. This metric measures the number of compute units used in a minute and is updated every minute. With this metric, you can track the maximum and minimum TCUs used in a minute, giving you insight into your compute usage. You can also set alarms on this metric to receive real-time notifications when your compute usage exceeds a certain threshold, helping you optimize your usage and lower your query costs.
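As a starting point for such an alarm, the following sketch builds the arguments for the CloudWatch PutMetricAlarm API and sends them with boto3. The `AWS/Timestream` namespace, the absence of dimensions, the 5-minute evaluation window, and the assumption that idle periods produce no datapoints are all assumptions to verify against the metric as it appears in your CloudWatch console; the SNS topic ARN is a placeholder you supply.

```python
# Sketch of a CloudWatch alarm on the QueryTCU metric.
# Assumptions: namespace "AWS/Timestream", no dimensions, and an existing SNS topic.


def query_tcu_alarm_params(alarm_name: str, threshold_tcus: float, sns_topic_arn: str) -> dict:
    """Keyword arguments for CloudWatch PutMetricAlarm on the QueryTCU metric."""
    return {
        "AlarmName": alarm_name,
        "Namespace": "AWS/Timestream",  # assumption: confirm namespace and dimensions
        "MetricName": "QueryTCU",
        "Statistic": "Maximum",         # highest TCU count observed in each period
        "Period": 60,                   # the metric is published every minute
        "EvaluationPeriods": 5,         # alarm after 5 consecutive breaching minutes
        "Threshold": threshold_tcus,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",  # assumption: idle periods emit no datapoints
        "AlarmActions": [sns_topic_arn],
    }


def create_query_tcu_alarm(alarm_name: str, threshold_tcus: float, sns_topic_arn: str) -> None:
    """Create or update the alarm; requires boto3, AWS credentials, and a Region."""
    import boto3

    boto3.client("cloudwatch").put_metric_alarm(
        **query_tcu_alarm_params(alarm_name, threshold_tcus, sns_topic_arn)
    )
```

Setting the threshold somewhat below MaxQueryTCU gives you a warning before queries start to queue or throttle at the configured ceiling.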

The following graph shows the maximum QueryTCU used over the last 3 hours.

The maximum TCUs don’t directly give you the price; instead, they show how many TCUs (maximum and minimum) are in use. To get the exact number of TCU-hours, open Cost Explorer from the AWS Cost Management console and filter by Timestream for LiveAnalytics.

All AWS accounts that onboard to the service after April 29, 2024, default to TCUs for query pricing. If you onboarded before April 29, 2024, you can make a one-time opt-in to TCUs through the API or the Amazon Timestream console, as demonstrated in the Cost control section. Transitioning to TCU-based pricing provides better cost management and removes the per-query minimum of bytes metered. Opting in is optional but irreversible: after you transition your account to TCUs for query pricing, you can’t switch back to bytes-scanned pricing.
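Because the opt-in can’t be undone, an API-based opt-in should check the current pricing model first and require an explicit confirmation. The following is a minimal boto3 sketch under that assumption; it uses the DescribeAccountSettings and UpdateAccountSettings operations with the `QueryPricingModel` values `BYTES_SCANNED` and `COMPUTE_UNITS`, and the helper names and `confirm` flag are hypothetical.

```python
# Sketch: check the query pricing model and, only with explicit confirmation,
# opt in to TCUs. The opt-in is irreversible.


def is_billed_by_bytes_scanned(settings: dict) -> bool:
    """True if DescribeAccountSettings output shows bytes-scanned query pricing."""
    return settings.get("QueryPricingModel") == "BYTES_SCANNED"


def opt_in_to_tcus(max_query_tcu: int, confirm: bool = False) -> dict:
    """Switch query pricing to TCUs; requires boto3, AWS credentials, and a Region."""
    import boto3

    client = boto3.client("timestream-query")
    current = client.describe_account_settings()
    if not is_billed_by_bytes_scanned(current):
        return current  # already on compute units (or model not reported); nothing to change
    if not confirm:
        raise RuntimeError("Opting in to TCUs is irreversible; pass confirm=True to proceed")
    return client.update_account_settings(
        MaxQueryTCU=max_query_tcu, QueryPricingModel="COMPUTE_UNITS"
    )
```

Requiring `confirm=True` mirrors the console’s confirmation pop-up, so an automation script can’t switch pricing models by accident.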

Conclusion

In this post, we discussed TCUs, billing, and cost estimation, and demonstrated query workload simulations with 4- and 8-TCU configurations. Because TCUs are billed by compute duration rather than by query count, effective resource utilization lets you serve more queries without increasing costs. Pay-per-use pricing combined with configurable TCU limits provides a high degree of cost transparency and predictability, enabling you to better plan and manage your query costs.

For more information, refer to the following resources:

Timestream Compute Unit (TCU)

Data Modeling Best Practices to Unlock the Value of your Time-series Data

Amazon Timestream pricing

We encourage you to start using Timestream for LiveAnalytics today and enjoy the many benefits it offers. If you want to learn more, reach out through your usual AWS Support contact or post your questions on AWS re:Post for Amazon Timestream.

About the Authors

Balwanth Reddy Bobilli is a Specialist Solutions Architect at AWS based out of Utah. Prior to joining AWS, he worked at Goldman Sachs as a Cloud Database Architect. He is passionate about databases and cloud computing. He has great experience in building secure, scalable, and resilient solutions in the cloud, specifically with cloud databases.

Praneeth Kavuri is a Senior Product Manager in AWS working on Amazon Timestream. He enjoys building scalable solutions and working with customers to help deploy and optimize database workloads on AWS.

Kuldeep Porwal is a Senior Software Engineer at Amazon Timestream. He has experience in building secure and scalable distributed systems across databases. He previously worked on Alexa and transportation orgs within Amazon.
