Training a Large CNN for Image Classification:

Researchers trained a large CNN to classify the 1.2 million high-resolution images of the ImageNet LSVRC-2010 contest into 1,000 categories. The model, which contains 60 million parameters and 650,000 neurons, achieved top-1 and top-5 error rates of 37.5% and 17.0% on the test data, considerably better than the previous state of the art. The architecture comprises five convolutional layers, some followed by max-pooling layers, and three fully connected layers, ending with a 1,000-way softmax. Non-saturating neurons and an efficient GPU implementation of convolution sped up training, while dropout reduced overfitting in the fully connected layers. A variant of the model entered the ILSVRC-2012 competition and achieved a winning top-5 test error rate of 15.3%, compared to 26.2% for the second-best entry.
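The top-1 and top-5 error rates quoted above count how often the true label is missing from the model's single best guess, or from its five best guesses. The following is a minimal sketch of that metric; the function name `top_k_error` and the toy scores are illustrative assumptions, not data or code from the paper.

```python
def top_k_error(scores, labels, k):
    """Fraction of examples whose true label is NOT among the k highest-scoring classes."""
    misses = 0
    for row, label in zip(scores, labels):
        # Class indices sorted from highest to lowest score, truncated to k entries.
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)


# Toy example: 4 images, 6 classes (made-up scores, not ImageNet results).
scores = [
    [0.10, 0.60, 0.15, 0.08, 0.04, 0.03],  # true class 1 is ranked 1st
    [0.30, 0.05, 0.45, 0.10, 0.06, 0.04],  # true class 0 is ranked 2nd
    [0.02, 0.03, 0.05, 0.20, 0.30, 0.40],  # true class 2 is ranked 4th
    [0.50, 0.20, 0.12, 0.08, 0.06, 0.04],  # true class 0 is ranked 1st
]
labels = [1, 0, 2, 0]

print(top_k_error(scores, labels, k=1))  # 0.5  (two of four top guesses are wrong)
print(top_k_error(scores, labels, k=3))  # 0.25 (only the third image misses the top 3)
```

On ImageNet the same computation is run with k=1 and k=5 over the 1,000 class scores produced by the softmax layer.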

The success of this model reflects a broader shift in computer vision towards machine learning approaches that leverage large datasets and computational power. Previously, researchers doubted that neural networks could solve complex visual tasks without hand-designed systems. However, this work demonstrated that with sufficient data and computational resources, deep learning models can learn complex features through a general-purpose algorithm like backpropagation. The CNN’s efficiency and scalability were made possible by advancements in GPU technology and larger datasets such as ImageNet, enabling the training of deep networks without significant overfitting issues. This breakthrough marks a paradigm shift in object recognition, paving the way for more powerful and data-driven models in computer vision.

Dataset and Network Architecture:

The researchers used ImageNet, a dataset of over 15 million labeled high-resolution images in approximately 22,000 categories, collected from the web and labeled via Amazon’s Mechanical Turk. For the ImageNet Large-Scale Visual Recognition Challenge (ILSVRC), held annually since 2010 as part of the Pascal Visual Object Challenge, they worked with a subset of ImageNet containing around 1.2 million training images, 50,000 validation images, and 150,000 test images, with roughly 1,000 images in each of 1,000 categories. Because the CNN requires a fixed input size, every image was rescaled so that its shorter side measured 256 pixels, and the central 256 × 256 patch was then cropped out. The only other preprocessing step was subtracting the mean activity over the training set from each pixel, so the network was trained on the centered raw RGB values.
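The resize-and-crop geometry and the mean subtraction described above can be sketched in plain Python. This is only an illustration under stated assumptions: the helper names are hypothetical, images are represented as flat lists of numbers, and the mean is taken per pixel position over the training set, as in the original paper.

```python
def resize_then_center_crop_box(width, height, target=256):
    """Return (new_width, new_height, crop_box) for 'shorter side to target, then center crop'.

    crop_box is (left, top, right, bottom) in the coordinates of the resized image.
    """
    scale = target / min(width, height)
    # max() guards against floating-point rounding shrinking the short side below target.
    new_w = max(target, round(width * scale))
    new_h = max(target, round(height * scale))
    left = (new_w - target) // 2
    top = (new_h - target) // 2
    return new_w, new_h, (left, top, left + target, top + target)


def subtract_training_mean(images):
    """Subtract, at every pixel position, the mean value over all images in the set."""
    n = len(images)
    mean = [sum(values) / n for values in zip(*images)]
    return [[v - m for v, m in zip(image, mean)] for image in images]


# A 500 x 375 landscape photo becomes 341 x 256, then the central 256 x 256 is kept.
print(resize_then_center_crop_box(500, 375))  # (341, 256, (42, 0, 298, 256))

# Two tiny "images" with two pixels each; the per-position means are 2 and 20.
print(subtract_training_mean([[0, 10], [4, 30]]))  # [[-2.0, -10.0], [2.0, 10.0]]
```

A real pipeline would perform the actual resampling with an image library; only the arithmetic is shown here.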

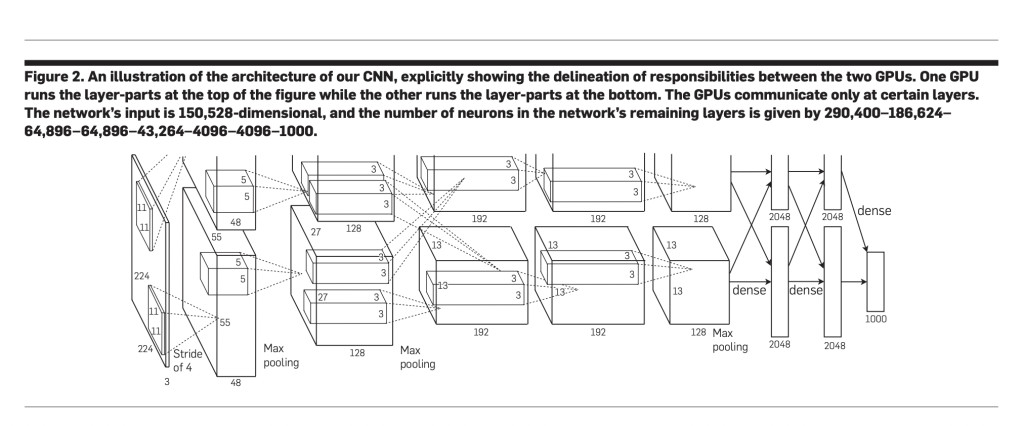

The CNN architecture developed by the researchers has eight learned layers: five convolutional layers followed by three fully connected layers, culminating in a 1,000-way softmax output. This deep network, containing 60 million parameters and 650,000 neurons, relied on several features that were new or unusual at the time. The researchers used Rectified Linear Units (ReLUs) instead of traditional tanh activations to accelerate training, and reported significantly faster convergence with ReLUs in an experiment on the CIFAR-10 dataset. To handle the computational demands, the network was split across two GTX 580 GPUs using a parallelization scheme in which the GPUs communicate only in certain layers. Local response normalization and overlapping pooling were added to improve generalization and reduce error rates. Training took five to six days and relied on an optimized GPU implementation of convolution to achieve state-of-the-art performance in object recognition.
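Two of the ideas in this paragraph are easy to see numerically: ReLU does not saturate for positive inputs, whereas the gradient of tanh vanishes for large inputs, and overlapping pooling uses a window wider than its stride. The sketch below is a one-dimensional illustration with hypothetical helper names. The window of 3 and stride of 2 are the values used in the original paper, which this summary does not state.

```python
import math


def relu(x):
    """Rectified Linear Unit: passes positive inputs through, zeroes the rest."""
    return max(0.0, x)


def relu_grad(x):
    """Derivative of ReLU: constant 1 for positive inputs, so it never saturates there."""
    return 1.0 if x > 0 else 0.0


def tanh_grad(x):
    """Derivative of tanh: shrinks toward 0 as |x| grows (saturation)."""
    return 1.0 - math.tanh(x) ** 2


def max_pool_1d(values, window, stride):
    """Max pooling over a 1-D list; windows overlap whenever window > stride."""
    return [max(values[i:i + window])
            for i in range(0, len(values) - window + 1, stride)]


# For a large input the tanh gradient is tiny while the ReLU gradient stays at 1.
print(relu_grad(5.0))         # 1.0
print(tanh_grad(5.0) < 1e-3)  # True

# Overlapping pooling: window 3, stride 2, so neighbouring windows share one element.
print(max_pool_1d([1, 3, 2, 5, 4, 6, 0], window=3, stride=2))  # [3, 5, 6]
```

The network itself pools over two-dimensional feature maps; the 1-D version only shows how window and stride interact.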

Reducing Overfitting in Neural Networks:

With 60 million parameters, the network is prone to overfitting: even 1.2 million training images are not enough to constrain that many parameters. The researchers apply two key techniques. First, data augmentation artificially enlarges the dataset through image translations, horizontal reflections, and PCA-based alterations of RGB intensities; the intensity alteration alone reduces the top-1 error rate by over 1%. Second, dropout is applied in the fully connected layers, randomly deactivating neurons during training to prevent co-adaptation and make the learned features more robust. Dropout increases the number of iterations needed to converge, but it substantially reduces overfitting at a far lower cost than training and combining many separate models.
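Both techniques can be sketched in a few lines of plain Python. This is an illustration rather than the authors' implementation: the function names are hypothetical, images are nested lists, and the drop probability of 0.5 with test-time scaling by 0.5 follows the original paper rather than this summary. The PCA-based color alteration is omitted.

```python
import random


def dropout(activations, p_drop=0.5, training=True, rng=random):
    """Zero each activation with probability p_drop during training.

    At test time every unit is kept and outputs are scaled by the keep
    probability (1 - p_drop), approximating an average over the thinned networks.
    """
    if training:
        return [0.0 if rng.random() < p_drop else a for a in activations]
    return [a * (1.0 - p_drop) for a in activations]


def random_crop_and_flip(image, crop, rng=random):
    """Take a random crop x crop patch (a translation) and mirror it half the time."""
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - crop)   # randint is inclusive on both ends
    left = rng.randint(0, width - crop)
    patch = [row[left:left + crop] for row in image[top:top + crop]]
    if rng.random() < 0.5:
        patch = [row[::-1] for row in patch]  # horizontal reflection
    return patch


rng = random.Random(0)  # seeded generator so repeated runs behave the same
print(dropout([1.0, 2.0, 3.0, 4.0], rng=rng))  # some entries zeroed at random
print(dropout([1.0, 2.0], training=False))     # [0.5, 1.0]

image = [[10 * r + c for c in range(6)] for r in range(6)]  # toy 6 x 6 "image"
print(random_crop_and_flip(image, crop=4, rng=rng))
```

In the paper the patches are 224 × 224 crops taken from the 256 × 256 images, so each training image yields many shifted and mirrored variants.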

Results on ILSVRC Competitions:

The CNN achieved top-1 and top-5 test error rates of 37.5% and 17.0% on the ILSVRC-2010 dataset, outperforming previous methods such as sparse coding (47.1% and 28.2%). On ILSVRC-2012, a single CNN reached a top-5 validation error rate of 18.2%, which improved to 16.4% when the predictions of five similar CNNs were averaged. Adding to that ensemble two CNNs pre-trained on the full ImageNet Fall 2011 release and then fine-tuned on ILSVRC-2012 reduced the top-5 test error to 15.3%. The second-best contest entry, which relied on classifiers trained on densely sampled features, reported a top-5 test error of 26.2%.
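Averaging the predictions of several CNNs means averaging their class-probability vectors for each image and then ranking classes by the averaged scores. Here is a minimal sketch with made-up probabilities for three hypothetical models and three classes; the function name is an assumption for illustration.

```python
def average_predictions(model_probs):
    """Average per-class probabilities across models for a single image.

    model_probs: one list of class probabilities per model, all the same length.
    """
    n_models = len(model_probs)
    return [sum(class_probs) / n_models for class_probs in zip(*model_probs)]


# Made-up softmax outputs from three models for one image (not real results).
model_probs = [
    [0.6, 0.3, 0.1],  # model A prefers class 0
    [0.2, 0.5, 0.3],  # model B prefers class 1
    [0.1, 0.6, 0.3],  # model C prefers class 1
]

averaged = average_predictions(model_probs)
predicted = max(range(len(averaged)), key=lambda c: averaged[c])
print(predicted)  # 1: the ensemble sides with the two models that agree
```

Because the models make partly different mistakes, the averaged scores tend to be more reliable than any single model, which is consistent with the drop from 18.2% to 16.4% reported above.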

Discussion:

The large, deep CNN achieved record-breaking performance on the challenging ImageNet dataset, with top-1 and top-5 error rates of 37.5% and 17.0%, respectively. Removing any single middle convolutional layer reduced top-1 performance by about 2%, which shows that depth matters. Unsupervised pre-training was not used, although the authors expected it could improve results further. In the years that followed, as hardware and techniques improved, error rates dropped by a factor of three, bringing CNNs closer to human-level performance. The model’s success spurred widespread adoption of deep learning at companies like Google, Facebook, and Microsoft, revolutionizing computer vision.

The post Revolutionizing Image Classification: Training Large Convolutional Neural Networks on the ImageNet Dataset appeared first on MarkTechPost.
