Previous research on reasoning frameworks in large language models (LLMs) has explored various approaches to enhance problem-solving capabilities. Chain-of-Thought (CoT) introduced articulated reasoning processes, while Tree-of-Thought (ToT) and Graph-of-Thought (GoT) expanded on this concept by incorporating branching possibilities and complex relationships between reasoning steps. Cumulative Reasoning (CR) introduced collaborative processes involving multiple specialized LLMs. These frameworks aimed to capture the non-linear and iterative nature of human reasoning but faced challenges in computational efficiency and implementation complexity.

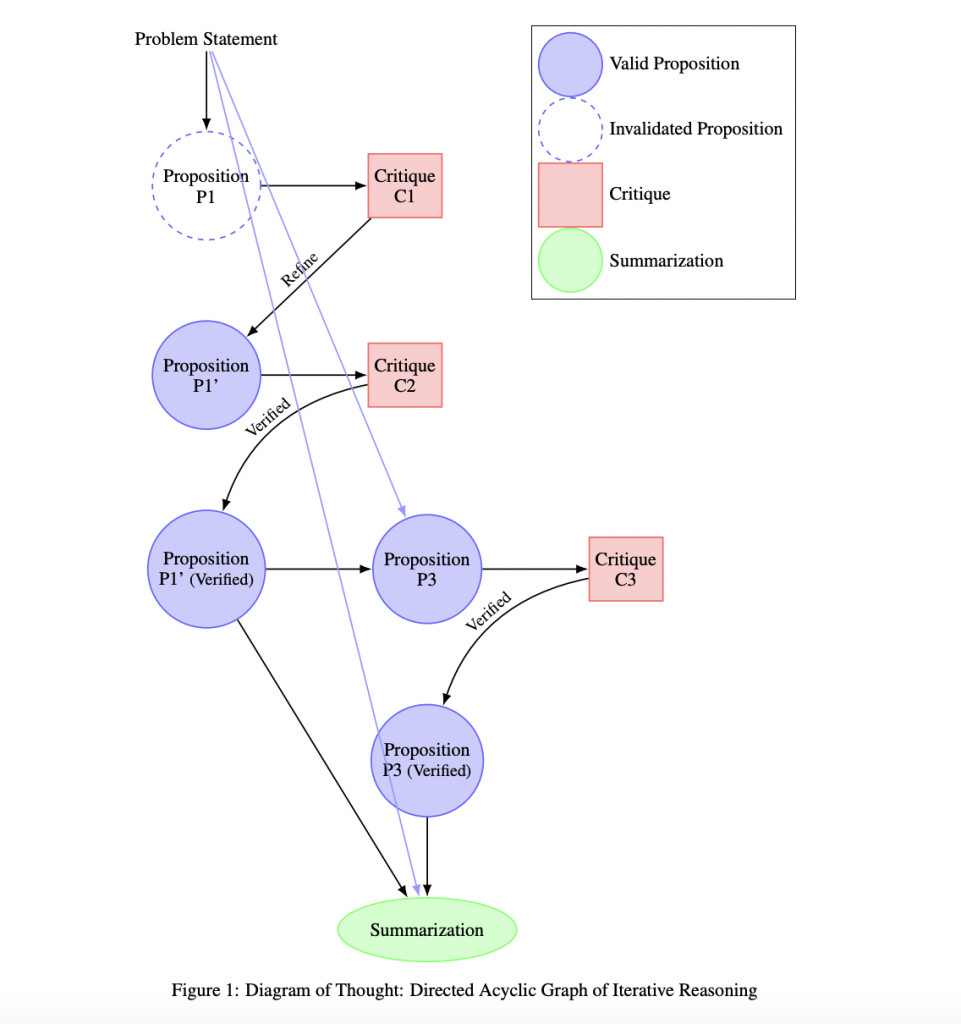

The Diagram of Thought (DoT) framework builds upon these prior approaches, integrating their strengths into a unified model within a single LLM. By representing reasoning as a directed acyclic graph (DAG), DoT captures the nuances of logical deduction while maintaining computational efficiency. This integration allows for a more coherent and streamlined reasoning process compared to earlier frameworks. DoT addresses the limitations of previous methods and provides a sophisticated model capable of handling the complexities of human-like reasoning in a computationally efficient manner.

The DoT framework aims to enhance reasoning in large language models by modeling iterative reasoning as a directed acyclic graph within a single LLM. It incorporates natural language critiques for richer feedback and uses auto-regressive next-token prediction with role-specific tokens. Its theoretical foundation in topos theory is intended to ensure logical consistency. By embedding the entire reasoning process within one model, DoT removes the complexities associated with multi-model collaboration. This approach addresses the limitations of previous frameworks, improves training efficiency, and supports the development of next-generation reasoning-specialized models for complex reasoning tasks.
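To make the role-token idea concrete, here is a minimal sketch of how a single generated stream might be split into role-tagged segments. The token strings `<proposer>`, `<critic>`, and `<summarizer>` and the helper `split_by_role` are assumptions chosen for illustration; the article does not give the exact token format.

```python
import re

# Hypothetical role tokens; the exact strings are an assumption.
ROLE_PATTERN = re.compile(r"<(proposer|critic|summarizer)>")


def split_by_role(stream):
    """Split one generated text stream into (role, text) segments."""
    # With one capturing group, re.split returns
    # [prefix, role_1, text_1, role_2, text_2, ...].
    parts = ROLE_PATTERN.split(stream)
    return [(role, text.strip()) for role, text in zip(parts[1::2], parts[2::2])]


stream = (
    "<proposer> x is even "
    "<critic> unclear: which x? "
    "<proposer> let x = 4; then x is even "
    "<summarizer> x = 4 is even"
)
segments = split_by_role(stream)
for role, text in segments:
    print(f"{role:>10}: {text}")
```

Because every role appears in the same token stream, a single model can alternate between proposing and critiquing without an external controller, which is the property the paragraph above describes.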

Researchers from Tsinghua University and Shanghai Artificial Intelligence Laboratory developed the DoT framework, constructing it as a DAG that integrates propositions, critiques, refinements, and verifications. The methodology employs role-specific tokens for proposing, critiquing, and summarizing, which supports iterative improvement of the reasoning. Auto-regressive next-token prediction lets the model move between proposing ideas and evaluating them critically, enriching the feedback loop without external intervention. This approach keeps the whole reasoning process within a single large language model (LLM), addressing the limitations of previous frameworks.
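The graph structure described above can be sketched as a small data structure. This is an illustrative sketch, not the authors' implementation: the class `ReasoningDAG`, its methods, and the example texts are all hypothetical. Acyclicity holds by construction here, because a new node may only point back to nodes that already exist.

```python
from dataclasses import dataclass, field

# Node kinds named in the article.
KINDS = {"proposition", "critique", "refinement", "verification"}


@dataclass
class ReasoningDAG:
    nodes: dict = field(default_factory=dict)    # node id -> (kind, text)
    parents: dict = field(default_factory=dict)  # node id -> list of parent ids

    def add(self, node_id, kind, text, parents=()):
        """Add a node that depends only on already-existing nodes."""
        if kind not in KINDS:
            raise ValueError(f"unknown node kind: {kind}")
        if node_id in self.nodes:
            raise ValueError(f"duplicate node id: {node_id}")
        missing = [p for p in parents if p not in self.nodes]
        if missing:
            raise KeyError(f"unknown parents: {missing}")
        self.nodes[node_id] = (kind, text)
        self.parents[node_id] = list(parents)

    def ancestors(self, node_id):
        """Return every node that the given node transitively depends on."""
        seen, stack = set(), list(self.parents[node_id])
        while stack:
            current = stack.pop()
            if current not in seen:
                seen.add(current)
                stack.extend(self.parents[current])
        return seen


dag = ReasoningDAG()
dag.add("p1", "proposition", "If n^2 is even, then n is even.")
dag.add("c1", "critique", "The argument skips the contrapositive.", parents=["p1"])
dag.add("r1", "refinement", "If n is odd, n^2 is odd; so n^2 even implies n even.",
        parents=["p1", "c1"])
dag.add("v1", "verification", "The refined argument is valid.", parents=["r1"])
print(sorted(dag.ancestors("v1")))
```

Tracking parents rather than children keeps the provenance of each refinement easy to query: the ancestors of a verification node are exactly the propositions and critiques that led to it.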

The DoT framework is formalized within Topos theory, providing a robust mathematical foundation that ensures logical consistency and soundness in the reasoning process. This formalism clarifies the relationship between reasoning processes and categorical logic, which is crucial for reliable outcomes in LLMs. While specific experimental results are not detailed, the integration of critiques and dynamic reasoning aspects aims to enhance the model’s ability to handle complex reasoning tasks effectively. The methodology focuses on improving both training and inference processes, potentially advancing the capabilities of next-generation reasoning-specialized models.

The DoT framework aims to strengthen reasoning in large language models through a directed acyclic graph structure. It supports iterative improvement of propositions via natural language feedback and role-specific contributions, and the topos-theoretic formalization is intended to ensure logical consistency and soundness. Implemented within a single model, DoT streamlines both training and inference, eliminating the need for multiple models or external control mechanisms. This design enables exploration of complex reasoning pathways, with the goal of reaching more accurate conclusions through more coherent reasoning. Although specific experimental results are not detailed, the framework is positioned as a significant step toward reasoning-specialized models for complex tasks.

In conclusion, the DoT framework represents iterative reasoning as a directed acyclic graph within a single large language model. It integrates propositions, critiques, refinements, and verifications, using role-specific tokens to move between stages of the reasoning process. The topos-theoretic formalization provides a mathematical foundation intended to ensure logical consistency and soundness. The Summarizer role synthesizes validated propositions into a coherent chain of thought, enhancing reliability. This approach bridges practical implementation with mathematical rigor, positioning DoT as a robust framework for developing next-generation reasoning-specialized models. The framework's design and theoretical grounding show significant potential for improving reasoning processes in large language models.
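One plausible way to read the Summarizer step is as a topological ordering of the validated propositions, so that each statement appears after everything it depends on. The sketch below uses Python's standard `graphlib` module; the function `summarize` and the sample graph are hypothetical and only illustrate the idea.

```python
from graphlib import TopologicalSorter


def summarize(parents, texts, verified):
    """Linearize verified propositions so dependencies come first.

    parents:  node id -> ids of the nodes it depends on
    texts:    node id -> proposition text
    verified: set of node ids that passed critique
    """
    order = TopologicalSorter(parents).static_order()
    return [texts[node] for node in order if node in verified]


# Hypothetical example graph: p3 was rejected by a critique.
parents = {"p1": [], "p2": ["p1"], "p3": ["p1"], "p4": ["p2"]}
texts = {
    "p1": "n^2 is even.",
    "p2": "If n were odd, n^2 would be odd.",
    "p3": "Therefore n is prime.",
    "p4": "Hence n is even.",
}
verified = {"p1", "p2", "p4"}

chain = summarize(parents, texts, verified)
print(" ".join(chain))
```

Dropping unverified nodes before emitting the chain keeps rejected branches out of the final answer while preserving the dependency order of what remains.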

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Diagram of Thought (DoT): An AI Framework that Models Iterative Reasoning in Large Language Models (LLMs) as the Construction of a Directed Acyclic Graph (DAG) within a Single Model appeared first on MarkTechPost.
