Advances in Large Language Models (LLMs) have led to agentic systems, which integrate tools and APIs to fulfill user requests through function calls. By interpreting natural language commands, these systems can perform sophisticated tasks independently, such as information retrieval and device control. However, little research has been done on running these LLMs locally, on laptops or smartphones, or at the edge. The primary limitation is the large size and high processing demands of these models, which usually require cloud-based infrastructure to function properly.

In recent research from UC Berkeley and ICSI, the TinyAgent framework has been introduced to fill this gap by training and deploying task-specific small language model agents. Because they can handle function calls themselves, these agents can operate on local devices without depending on cloud-based infrastructure. TinyAgent concentrates on smaller, more efficient models that preserve a key capability of bigger LLMs: carrying out user requests by coordinating other tools and APIs.

The TinyAgent framework starts with open-source models that must be adapted before they can execute function calls correctly. The LLMCompiler framework is used for this, and the models are fine-tuned so that they execute commands consistently. A crucial step in this approach is the methodical curation of a high-quality dataset designed specifically for function-calling tasks. Fine-tuning on this dataset yields two variants: TinyAgent-1.1B and TinyAgent-7B. Despite being much smaller than models like GPT-4-Turbo, they are highly accurate on the specific tasks they were trained for.

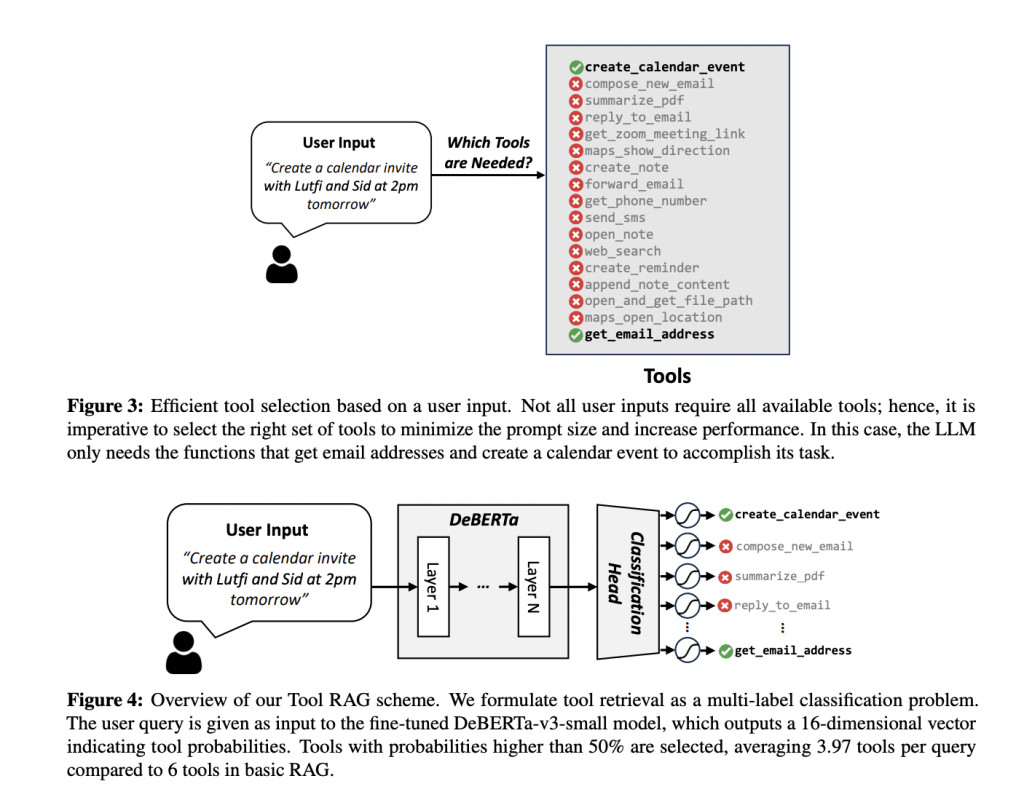

One of the main contributions of the TinyAgent framework is a tool retrieval technique that shortens the input prompt during inference. Instead of listing every available tool, the prompt includes only the tools relevant to the request, so the model can choose the right tool or function more quickly without being bogged down by extensive or unnecessary input. To further improve inference performance, TinyAgent also uses quantization, which stores model weights at lower numerical precision to reduce memory use and latency. These optimizations are essential for the compact models to run well on local devices with constrained computational resources.
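The idea behind tool retrieval can be illustrated with a minimal sketch. The article does not describe how TinyAgent scores tools, so this example substitutes simple keyword overlap as a stand-in; the tool names, descriptions, stopword list, and prompt layout are all hypothetical and are not taken from the TinyAgent code.

```python
import re

# Hypothetical tool catalog; names and descriptions are illustrative only.
TOOLS = {
    "open_app": "open or launch an application on the laptop",
    "create_reminder": "create a reminder with a title and due date",
    "search_files": "search local files and documents by name or keyword",
    "send_email": "compose and send an email message to a contact",
}

# Small, assumed stopword list so that words like "a" do not count as matches.
STOPWORDS = {"a", "an", "and", "or", "the", "to", "on", "with", "by", "for"}


def tokenize(text):
    """Lowercase the text and return its set of non-stopword words."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def retrieve_tools(query, tools, top_k=2):
    """Return up to top_k tool names whose descriptions share words with the query.

    Keyword overlap is a stand-in for whatever retriever a real system uses.
    """
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(desc)), name) for name, desc in tools.items()]
    # Highest overlap first; ties broken alphabetically for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for overlap, name in scored[:top_k] if overlap > 0]


def build_prompt(query, tools, top_k=2):
    """Build a prompt that lists only the retrieved tools, not the full catalog."""
    selected = retrieve_tools(query, tools, top_k)
    tool_lines = [f"- {name}: {tools[name]}" for name in selected]
    return "Available tools:\n" + "\n".join(tool_lines) + f"\nUser request: {query}"


if __name__ == "__main__":
    print(build_prompt("create a reminder for tomorrow", TOOLS))
```

With a catalog of four tools the savings are negligible, but the same filtering step matters more as the catalog grows, since every tool description left out is prompt text the small model does not have to process.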

To showcase real-world applications, the TinyAgent framework has been deployed as a local Siri-like system for the MacBook. Without requiring cloud access, this system can understand user commands given by text or voice and carry out actions like starting apps, creating reminders, and searching for information. Because user data stays on the device, this local deployment protects privacy and also removes the need for an internet connection, which is critical in situations where reliable access might not be available.

The reported results are strong. Despite their reduced size, the TinyAgent models have been shown to match, and in some cases exceed, the function-calling capabilities of much bigger models such as GPT-4-Turbo. This shows that smaller models can handle highly specialized tasks effectively and efficiently when they are trained and optimized with appropriate methods.

In conclusion, TinyAgent offers a practical way for edge devices to harness LLM-driven agentic systems. By optimizing smaller models for function calling and employing strategies like tool retrieval and quantization, TinyAgent provides an efficient, privacy-focused alternative to cloud-based AI systems while retaining strong performance in real-time applications.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post TinyAgent: An End-to-End AI Framework for Training and Deploying Task-Specific Small Language Model Agents appeared first on MarkTechPost.
