A major challenge in the field of Speech-Language Models (SLMs) is the lack of comprehensive evaluation metrics that go beyond basic textual content modeling. While SLMs have shown significant progress in generating coherent and grammatically correct speech, their ability to model acoustic features such as emotion, background noise, and speaker identity remains underexplored. Evaluating these dimensions is crucial, as human communication is heavily influenced by such acoustic cues. For example, the same phrase spoken with different intonations or in different acoustic environments can carry entirely different meanings. The absence of robust benchmarks to assess these features limits the practical applicability of SLMs in real-world tasks such as sentiment detection in virtual assistants or multi-speaker environments in live broadcasting systems. Overcoming these challenges is vital for advancing the field and enabling more accurate and context-aware speech processing.

Current evaluation techniques for SLMs primarily focus on semantic and syntactic accuracy through text-based metrics such as word prediction and sentence coherence. These methods include benchmarks like ProsAudit, which evaluates prosodic elements like natural pauses, and SD-eval, which assesses models’ ability to generate text responses that fit a given audio context. However, these methods have significant limitations. They either focus on a single aspect of acoustics (such as prosody) or rely on generation-based metrics that are computationally intensive, making them unsuitable for real-time applications. Additionally, text-based evaluations fail to account for the richness of non-linguistic information present in speech, such as speaker identity or room acoustics, which can drastically alter the perception of spoken content. As a result, existing approaches are insufficient for evaluating the holistic performance of SLMs in environments where both semantic and acoustic consistency are critical.

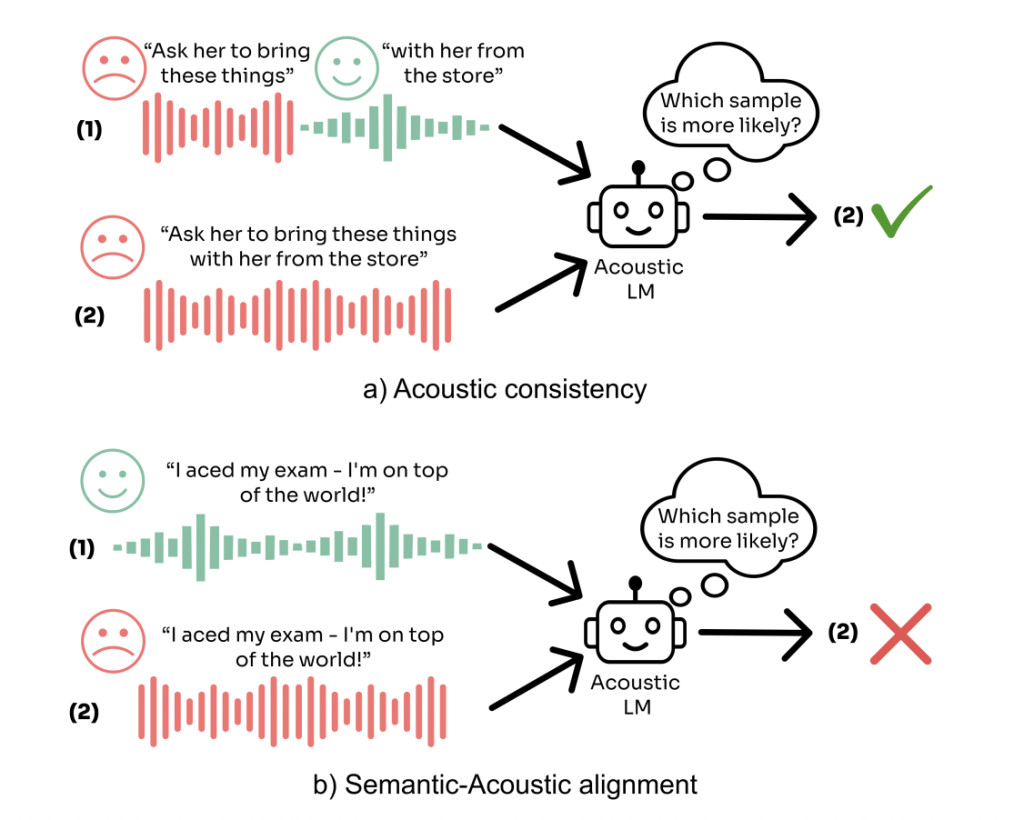

Researchers from the Hebrew University of Jerusalem introduce SALMON, an evaluation suite designed to assess how well SLMs model the acoustic side of speech. SALMON comprises two tasks: (i) acoustic consistency, which tests whether a model notices unnatural shifts in speaker identity, background noise, sentiment, or room acoustics within an audio clip, and (ii) acoustic-semantic alignment, which tests whether a model can tell when the acoustic properties of a recording match the spoken text. It uses a modeling-based approach: for each test item, the model is expected to assign a higher likelihood to the acoustically consistent sample than to the one with altered or misaligned features. Because this relies on likelihood scoring rather than generation, evaluation is fast and scales to large models. By covering sentiment, speaker identity, background noise, and room acoustics together, SALMON broadens SLM evaluation well beyond the single-aspect benchmarks that preceded it.
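The likelihood comparison described above can be sketched in a few lines. This is a minimal illustration, not SALMON's actual code: the function name `pairwise_accuracy`, the `score` callable (assumed to return a model's log-likelihood for one sample), the file names, and the toy scores are all hypothetical.

```python
from typing import Callable, Sequence, Tuple, TypeVar

Sample = TypeVar("Sample")


def pairwise_accuracy(
    pairs: Sequence[Tuple[Sample, Sample]],
    score: Callable[[Sample], float],
) -> float:
    """Fraction of (consistent, altered) pairs in which the consistent
    sample receives the strictly higher score."""
    if not pairs:
        raise ValueError("pairs must be non-empty")
    wins = sum(1 for consistent, altered in pairs if score(consistent) > score(altered))
    return wins / len(pairs)


# Toy usage with made-up log-likelihoods standing in for a real model (assumption).
toy_scores = {
    "same_speaker.wav": -41.2,
    "speaker_swap.wav": -45.9,
    "same_noise.wav": -50.3,
    "noise_swap.wav": -48.7,
}
toy_pairs = [
    ("same_speaker.wav", "speaker_swap.wav"),
    ("same_noise.wav", "noise_swap.wav"),
]
accuracy = pairwise_accuracy(toy_pairs, toy_scores.__getitem__)
```

With two samples per item, a model that guesses at random would be expected to land near 50% under this metric, which is the natural reference point for the accuracy figures reported for the suite.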

SALMON employs multiple acoustic benchmarks to evaluate various aspects of speech consistency. These benchmarks use datasets specifically curated to test models on dimensions such as speaker consistency (using the VCTK dataset), sentiment consistency (using the Expresso dataset), and background noise consistency (using LJ Speech and FSD50K). The acoustic consistency task evaluates whether the model can maintain features like speaker identity throughout a recording or detect changes in room acoustics. For example, in the room impulse response (RIR) consistency task, a speech sample is recorded with different room acoustics in each half of the clip, and the model must correctly identify this change.
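To make the RIR consistency example concrete, here is a rough sketch of how a consistent/altered pair could be built by convolving each half of a clip with a room impulse response. It is an assumption-laden illustration rather than the authors' pipeline: `apply_rir` and `make_rir_pair` are hypothetical names, the signals are tiny hand-written lists, and processing the halves independently ignores reverberation tails that would spill across the midpoint. A real implementation would more likely use an array library than pure-Python loops.

```python
from typing import List, Sequence, Tuple


def apply_rir(signal: Sequence[float], rir: Sequence[float]) -> List[float]:
    """Convolve a signal with a room impulse response, truncated to the input length."""
    out = [0.0] * len(signal)
    for n in range(len(signal)):
        for k, h in enumerate(rir):
            if k > n:
                break  # no earlier input samples left to combine
            out[n] += h * signal[n - k]
    return out


def make_rir_pair(
    speech: Sequence[float],
    rir_a: Sequence[float],
    rir_b: Sequence[float],
) -> Tuple[List[float], List[float]]:
    """Return (consistent, altered): the consistent clip uses rir_a for both
    halves, the altered clip switches to rir_b for the second half."""
    mid = len(speech) // 2
    first, second = speech[:mid], speech[mid:]
    consistent = apply_rir(first, rir_a) + apply_rir(second, rir_a)
    altered = apply_rir(first, rir_a) + apply_rir(second, rir_b)
    return consistent, altered


# Toy example: two impulses, one in each half of a four-sample "clip".
consistent, altered = make_rir_pair([1.0, 0.0, 1.0, 0.0], [1.0, 0.5], [1.0, 0.25])
```

The two clips share an identical first half and differ only after the midpoint, so a model that tracks room acoustics should find the altered clip less likely than the consistent one.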

In the acoustic-semantic alignment task, the suite challenges models to match the background environment or sentiment of the speech with the appropriate acoustic cues. For example, if the speech refers to a “calm beach,” the model should assign a higher likelihood to a recording with ocean sounds than one with construction noise. This alignment is tested using data synthesized from Azure Text-to-Speech systems and curated through manual filtering to ensure clear and unambiguous examples. The benchmarks are computationally efficient, as they do not require human intervention or additional models during runtime, making SALMON a scalable solution for evaluating SLMs across a range of acoustic environments.

Evaluating multiple SLMs with SALMON revealed that while current models can handle basic acoustic tasks, they significantly underperform humans on more complex acoustic-semantic tasks. Human evaluators consistently scored above 90% on tasks such as sentiment alignment and background noise detection, while models like TWIST 7B and pGSLM achieved far lower accuracy, often performing only marginally better than random chance. On simpler tasks, such as gender consistency, models fared better, with pGSLM reaching 88.5% accuracy. However, on more challenging tasks that require nuanced acoustic understanding, such as detecting changes in room impulse response or maintaining acoustic consistency across diverse environments, even the best models lagged far behind human performance. These results indicate a clear need to improve how SLMs jointly model semantic and acoustic features.

In conclusion, SALMON provides a comprehensive suite for evaluating acoustic modeling in Speech Language Models, addressing the gap left by traditional evaluation methods that focus primarily on textual consistency. By introducing benchmarks that assess acoustic consistency and semantic-acoustic alignment, SALMON allows researchers to identify the strengths and weaknesses of models in various acoustic dimensions. The results demonstrate that while current models can handle some tasks, they fall significantly short of human performance in more complex scenarios. As a result, SALMON is expected to guide future research and model development towards more acoustic-aware and contextually enriched models, pushing the boundaries of what SLMs can achieve in real-world applications.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

The post How Well Can AI Models Capture the Sound of Emotion? This AI Paper Unveils SALMON: A Suite for Acoustic Language Model Evaluation appeared first on MarkTechPost.
