Generative Large Language Models (LLMs) are capable of in-context learning (ICL), the process of learning from examples given within a prompt. However, the precise principles underlying these models’ ICL performance are still being researched. Inconsistent experimental results are one of the main obstacles, making it difficult to give a clear explanation of how LLMs make use of ICL.

To address this, a team of researchers from Michigan State University and the Florida Institute for Human and Machine Cognition has introduced a framework that evaluates the mechanisms of in-context learning in terms of two processes: retrieving internal knowledge and learning from in-context examples. The team concentrates on regression tasks, where the model must predict continuous values rather than categorical labels.

It has been shown that LLMs can perform regression on real-world datasets. This indicates that the models can handle more complex, quantitative problems and are not restricted to text generation or classification. Regression also allows targeted experiments that estimate how much of the model’s performance comes from retrieving previously learned information (from its training data) and how much comes from adapting to the new examples given in the context.
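To make the idea of regression through a prompt concrete, here is a minimal sketch of how tabular examples could be turned into a few-shot prompt, and how a number could be read back from the model's reply. The function names, the prompt layout, and the feature names used in any example call are illustrative assumptions, not the format used by the researchers.

```python
import re
from typing import Mapping, Optional, Sequence, Tuple

# One in-context example: a feature row and its numeric target.
Example = Tuple[Mapping[str, float], float]


def format_features(features: Mapping[str, float]) -> str:
    """Render one feature row as 'name: value' pairs on a single line."""
    return ", ".join(f"{name}: {value}" for name, value in features.items())


def build_regression_prompt(
    examples: Sequence[Example],
    query: Mapping[str, float],
    target_name: str,
) -> str:
    """Build a few-shot prompt that ends where the model should write a number.

    The layout is a hypothetical choice made for this sketch.
    """
    lines = []
    for features, target in examples:
        lines.append(format_features(features))
        lines.append(f"{target_name}: {target}")
        lines.append("")  # blank line between examples
    lines.append(format_features(query))
    lines.append(f"{target_name}:")
    return "\n".join(lines)


def parse_prediction(completion: str) -> Optional[float]:
    """Return the first plain decimal number in the model's reply, or None."""
    match = re.search(r"-?\d+(?:\.\d+)?", completion)
    return float(match.group()) if match else None
```

The prompt string would be sent to whichever LLM is being studied; that call is deliberately left out because it depends on the provider. Parsing only the first number is a simplification and would need hardening for replies that include thousands separators or units before the value.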

ICL operates on a spectrum between two extremes: full learning, where the model successfully learns new patterns from the examples given within the prompt, and pure knowledge retrieval, where the model relies on its internal knowledge without learning anything new from the in-context examples. Several factors affect how much the model depends on one mechanism over the other, including the model’s prior knowledge of the task, the kind of information in the prompt, and the number of in-context examples.
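One hypothetical way to probe the two ends of this spectrum is to vary what the prompt reveals. The sketch below builds three prompt variants for the same query: one with no examples (leaning on retrieval), one with examples and real feature names, and one with examples but generic feature names (leaning on learning from the examples). The variant names and the anonymization scheme are assumptions made for illustration; the article does not spell out the exact prompt configurations the team used.

```python
from typing import Dict, Mapping, Sequence, Tuple

Example = Tuple[Mapping[str, float], float]


def render(examples: Sequence[Example], query: Mapping[str, float], target_name: str) -> str:
    """Render examples and the query as text blocks separated by blank lines."""
    blocks = []
    for features, target in examples:
        row = ", ".join(f"{k}: {v}" for k, v in features.items())
        blocks.append(f"{row}\n{target_name}: {target}")
    query_row = ", ".join(f"{k}: {v}" for k, v in query.items())
    blocks.append(f"{query_row}\n{target_name}:")
    return "\n\n".join(blocks)


def anonymize(features: Mapping[str, float]) -> Dict[str, float]:
    """Replace real feature names with 'Feature 1', 'Feature 2', ... in order."""
    return {f"Feature {i}": v for i, v in enumerate(features.values(), start=1)}


def make_variants(
    examples: Sequence[Example],
    query: Mapping[str, float],
    target_name: str,
) -> Dict[str, str]:
    """Build three prompts that reveal different amounts of task information."""
    anon_examples = [(anonymize(f), t) for f, t in examples]
    return {
        # No examples: the model can only draw on what it already knows.
        "retrieval_only": render([], query, target_name),
        # Examples plus meaningful names: both mechanisms are available.
        "named_few_shot": render(examples, query, target_name),
        # Examples with generic names: prior knowledge is harder to apply.
        "anonymized_few_shot": render(anon_examples, anonymize(query), "Output"),
    }
```

Comparing a model's error across such variants gives a rough sense of which mechanism it leans on for a given task, under the assumption that hiding feature names limits what it can retrieve.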

The team has used three different LLMs and several datasets to test the hypothesis, demonstrating that the results hold across a range of models and data settings. The findings shed light on how LLMs balance recalling previously learned knowledge against adapting to new examples. The team has also studied how the model’s dependence on these two processes changes with the task configuration, including the problem’s difficulty and the number of in-context examples.
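A sweep over the number of in-context examples can be sketched as follows. The `predict` callable stands in for a wrapper around any LLM and is hypothetical, as is the choice of mean squared error as the metric; the article does not state which error measure the team reported.

```python
from typing import Callable, Dict, Mapping, Sequence, Tuple

Example = Tuple[Mapping[str, float], float]
# Hypothetical interface: given in-context examples and a query row, return a number.
Predictor = Callable[[Sequence[Example], Mapping[str, float]], float]


def mean_squared_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Average squared difference between targets and predictions."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def sweep_context_sizes(
    predict: Predictor,
    pool: Sequence[Example],
    test_set: Sequence[Example],
    sizes: Sequence[int],
) -> Dict[int, float]:
    """Score the predictor on the test set for each number of in-context examples."""
    truths = [target for _, target in test_set]
    results = {}
    for k in sizes:
        context = pool[:k]  # the first k pool rows serve as in-context examples
        preds = [predict(context, features) for features, _ in test_set]
        results[k] = mean_squared_error(truths, preds)
    return results
```

Running the same sweep for several models and prompt designs would show where additional examples stop helping, which is the kind of comparison the paragraph above describes.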

The analysis also clarifies how LLM performance can be optimized through prompt engineering. Depending on the problem being addressed, carefully crafted prompts can either improve the model’s capacity for meta-learning from in-context examples or steer it to rely more on knowledge retrieval. With a better understanding of these mechanisms, developers can apply LLMs to a wider variety of tasks and get better results both when the model must learn new patterns and when it must retrieve relevant knowledge.

The team has summarized their primary contributions as follows.

The team has demonstrated that LLMs can effectively complete regression tasks on realistic datasets through in-context learning.

A novel hypothesis has been proposed for ICL, arguing that LLMs employ both retrieval of pre-existing knowledge and learning from in-context examples when making predictions. This provides a unified perspective that reconciles the results of previous studies.

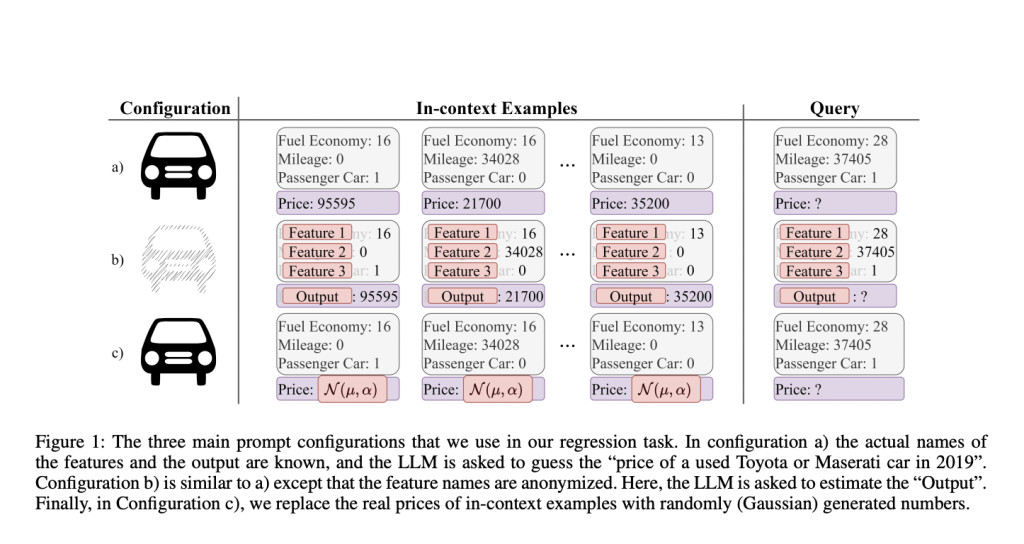

To enable more thorough testing and insights, the team has presented a new methodology that systematically compares ICL mechanisms across several LLMs, datasets, and prompt designs.

The team has offered a prompt engineering toolkit for tuning this balance to particular tasks, as well as a thorough analysis of how LLMs balance accessing internal knowledge against learning from new examples.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Learning and Knowledge Retrieval: A Comprehensive Framework for In-Context Learning in Large Language Models (LLMs) appeared first on MarkTechPost.
