OpenAI has once again pushed the boundaries of AI with the release of OpenAI Strawberry o1, a large language model (LLM) designed specifically for complex reasoning tasks. OpenAI o1 represents a significant leap in AI’s ability to reason, think critically, and improve through reinforcement learning. It sets the stage for stronger performance in programming, mathematics, and scientific reasoning. Let’s delve into the features, performance metrics, and implications of OpenAI o1.

Introduction of OpenAI o1

OpenAI introduced OpenAI Strawberry o1 with a clear focus on reasoning capabilities that go beyond what previous models like GPT-4o have achieved. The model is designed to think before responding, producing a long internal chain of thought that simulates human problem-solving techniques. This new model leverages reinforcement learning, a method where the model learns from feedback, refining its internal logic and improving its problem-solving approach over time. OpenAI o1 ranks among the top performers in various competitive exams and benchmarks, including programming contests like Codeforces and math competitions like the USA Math Olympiad.

This new model also exceeds human PhD-level accuracy on physics, biology, and chemistry problems, as measured by the GPQA (Graduate-Level Google-Proof Q&A) benchmark. OpenAI’s decision to release an early version of OpenAI o1, called OpenAI o1-preview, highlights their commitment to continuously improving the model while making it available for real-world testing through ChatGPT and trusted API users.

Technical Advancements in Reinforcement Learning

One of the most impressive aspects of OpenAI o1 is its use of reinforcement learning to build a “chain of thought.” Unlike traditional LLMs that generate immediate answers, OpenAI o1 has been trained to reason through a problem step by step. This ability is crucial for tackling complex tasks, especially those that require long-term reasoning, such as advanced mathematics or coding problems. Through its training, OpenAI o1 has become proficient at breaking down large problems into smaller, more manageable pieces and refining its thought process in real time.

OpenAI o1 has consistently improved performance with more time spent on training (train-time compute) and problem-solving (test-time compute). This capability contrasts with the traditional approach of LLM pretraining, where scaling improvements were primarily dependent on dataset size. In the case of OpenAI o1, the model’s reasoning capabilities become more refined the longer it works on a problem, demonstrating the effectiveness of reinforcement learning in complex scenarios.

OpenAI o1’s Benchmark Performance

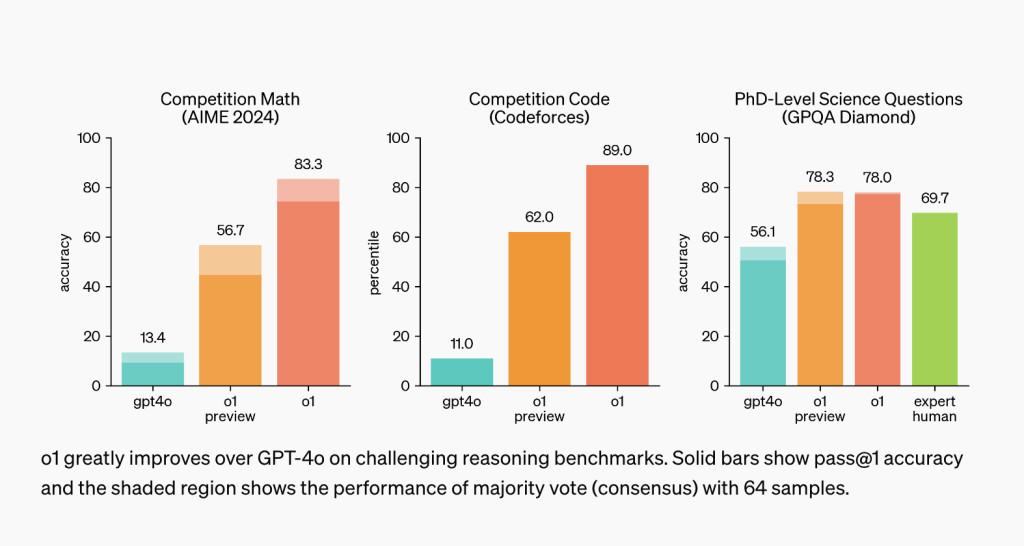

To demonstrate the advancements of OpenAI o1, OpenAI tested the model on various benchmarks, including competitive programming exams, math tests, and science challenges. The results were remarkable. For instance, on the USA Math Olympiad qualifier (AIME), OpenAI o1 performed at a level comparable to the top 500 math students in the U.S. GPT-4o, by comparison, solved only 12% of the problems. OpenAI o1 averaged 74% with a single sample per problem, 83% with consensus among 64 samples, and 93% when re-ranking 1,000 samples with a learned scoring function.
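The idea of consensus among multiple samples can be pictured as a simple majority vote over final answers. The snippet below is only a minimal sketch of that idea, not OpenAI's evaluation code: the function name `consensus_answer` and the sample answers are hypothetical, and the actual procedure used for the AIME results may differ.

```python
from collections import Counter


def consensus_answer(samples):
    """Return the most frequent answer and the share of samples that agree with it.

    `samples` is a list of final answers (strings) produced by independently
    sampling the model on the same problem. Hypothetical helper for illustration.
    """
    if not samples:
        raise ValueError("need at least one sample")
    counts = Counter(samples)
    # most_common(1) returns [(answer, vote_count)] for the top answer.
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(samples)


# Made-up answers for a single problem (illustrative data, not real model output).
samples = ["204", "204", "117", "204", "96", "204", "117", "204"]
answer, share = consensus_answer(samples)
print(answer, share)
```

Voting helps when individual samples are noisy but the correct answer is the most likely one: occasional wrong answers are outvoted, so accuracy can rise above the single-sample rate.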

Similarly, OpenAI o1 outperformed human experts with PhDs on the GPQA diamond benchmark, which tests the model’s understanding of advanced physics, biology, and chemistry problems. While this does not imply that OpenAI o1 is superior to PhD experts in every way, it does showcase the model’s ability to solve highly specialized problems at a human-expert level.

In terms of programming, OpenAI o1 achieved an Elo rating of 1807 in simulated Codeforces programming contests, outperforming 93% of human competitors. This result marks a significant improvement over GPT-4o, which achieved an Elo rating of 808. These performance metrics indicate that OpenAI o1 is capable not only of solving routine tasks but also of tackling highly complex problems across multiple domains.
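To get a rough feel for what the gap between 1807 and 808 means, the classic Elo expected-score formula can be applied to the two ratings. This is a sketch under an assumption: the article does not say how the simulated ratings were computed, and Codeforces uses its own rating system, so the standard logistic Elo formula below is only an approximation. The function name `elo_expected_score` is illustrative.

```python
def elo_expected_score(rating_a, rating_b):
    """Expected score of player A against player B under the standard Elo model.

    Uses the conventional logistic curve with a 400-point scale. Assumption:
    this is the textbook formula, not necessarily what Codeforces or OpenAI used.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


# Ratings reported in the article for OpenAI o1 and GPT-4o.
p = elo_expected_score(1807, 808)
print(f"Expected score of the 1807-rated entrant vs the 808-rated one: {p:.3f}")
```

Under this model, a gap of roughly 1,000 points corresponds to the higher-rated entrant being expected to come out ahead in nearly every head-to-head comparison.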

Chain of Thought: A New Paradigm for AI Reasoning

A defining feature of OpenAI o1 is its chain of thought, a process by which the model engages in internal reasoning before delivering an answer. This approach mirrors how humans solve problems, especially in mathematics and coding. By thinking step by step, OpenAI o1 can analyze and correct its mistakes, try different strategies, and ultimately refine its solutions. This capability dramatically improves the model’s accuracy on reasoning-heavy tasks.

OpenAI illustrated this process with examples where OpenAI o1 solved challenging problems in coding, cipher decoding, and crosswords. In these scenarios, the model used its chain of thought to methodically work through each step of the problem, leading to more accurate solutions. This feature sets OpenAI o1 apart from earlier models that lacked the capacity for such deep, iterative reasoning.

Human Preference and Safety Considerations

Beyond its technical capabilities, OpenAI o1 was also evaluated based on human preferences. OpenAI compared responses from OpenAI o1-preview and GPT-4o on various prompts across domains. Human evaluators overwhelmingly preferred OpenAI o1-preview’s responses in areas that required reasoning, such as data analysis, coding, and math. However, OpenAI o1 was not always the preferred choice for natural language tasks, indicating that the model may not be suited for every use case.

Safety has always been a priority for OpenAI, and OpenAI o1 incorporates new safety measures to ensure responsible usage. OpenAI o1’s chain of thought reasoning has opened new avenues for alignment and safety. By integrating safety policies directly into the model’s reasoning process, OpenAI found that the model became more robust in adhering to safety guidelines, even in edge cases or scenarios where users attempted to “jailbreak” the system. These improvements were evident in OpenAI o1’s performance on safety benchmarks, where it outperformed GPT-4o in categories such as preventing harmful content and handling adversarial prompts.

Future Implications and Applications

The release of OpenAI o1 marks a major step in developing AI capable of complex reasoning. Its ability to outperform humans in specialized tasks, combined with its reinforcement learning framework, makes it well-suited for applications in science, engineering, and other fields that demand critical thinking.

OpenAI expects that future iterations of OpenAI o1 will further enhance its reasoning abilities, opening the door to even more sophisticated applications. Whether in academic research, software development, or scientific discovery, OpenAI o1 represents the future of AI-assisted problem-solving. The model’s potential to align AI reasoning with human values and principles also offers hope for safer and more responsible AI systems in the years to come.

In conclusion, OpenAI o1 sets a new standard for large language models, demonstrating unparalleled reasoning capabilities across various domains. Its use of reinforcement learning to build a chain of thought represents a significant innovation in AI research that promises to unlock new possibilities for AI applications in everyday tasks and specialized fields.

The post OpenAI Introduces OpenAI Strawberry o1: A Breakthrough in AI Reasoning with 93% Accuracy in Math Challenges and Ranks in the Top 1% of Programming Contests appeared first on MarkTechPost.