Phind has officially announced its new flagship model, Phind-405B, along with Phind Instant, a faster model aimed at AI-powered search and programming tasks. Together, the two releases are meant to give developers and technical users more capable and more responsive tools for complex problem-solving.

Introduction of Phind-405B

Phind-405B is the cornerstone of this release. Built on Meta Llama 3.1 405B, it is engineered to excel at programming and technical tasks. The model supports up to 128K tokens of context, with a 32K context window available at launch, and is designed for high-context technical challenges. Phind-405B is available now to all Phind Pro users.
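Why does a long context window matter for serving cost? A rough answer comes from the key/value (KV) cache that attention keeps for every token in context. The sketch below assumes the publicly documented Llama 3.1 405B shape (126 layers, 8 key/value heads, head dimension 128) and a 16-bit cache. The announcement does not describe how Phind actually serves the model, so the figures are illustrative only.

```python
def kv_cache_bytes(tokens: int, layers: int = 126, kv_heads: int = 8,
                   head_dim: int = 128, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for `tokens` positions.

    The leading factor of 2 accounts for storing both K and V.
    Defaults assume the Llama 3.1 405B shape with a 16-bit (BF16) cache.
    """
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens


GIB = 1024 ** 3

for window in (32 * 1024, 128 * 1024):
    size = kv_cache_bytes(window)
    # Prints 15.75 GiB for 32K tokens and 63.00 GiB for 128K tokens.
    print(f"{window // 1024}K tokens -> {size / GIB:.2f} GiB of KV cache per sequence")
```

Because the cache grows linearly with context length, a full 128K-token window needs four times the cache of the 32K launch window, on top of the memory for the weights themselves.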

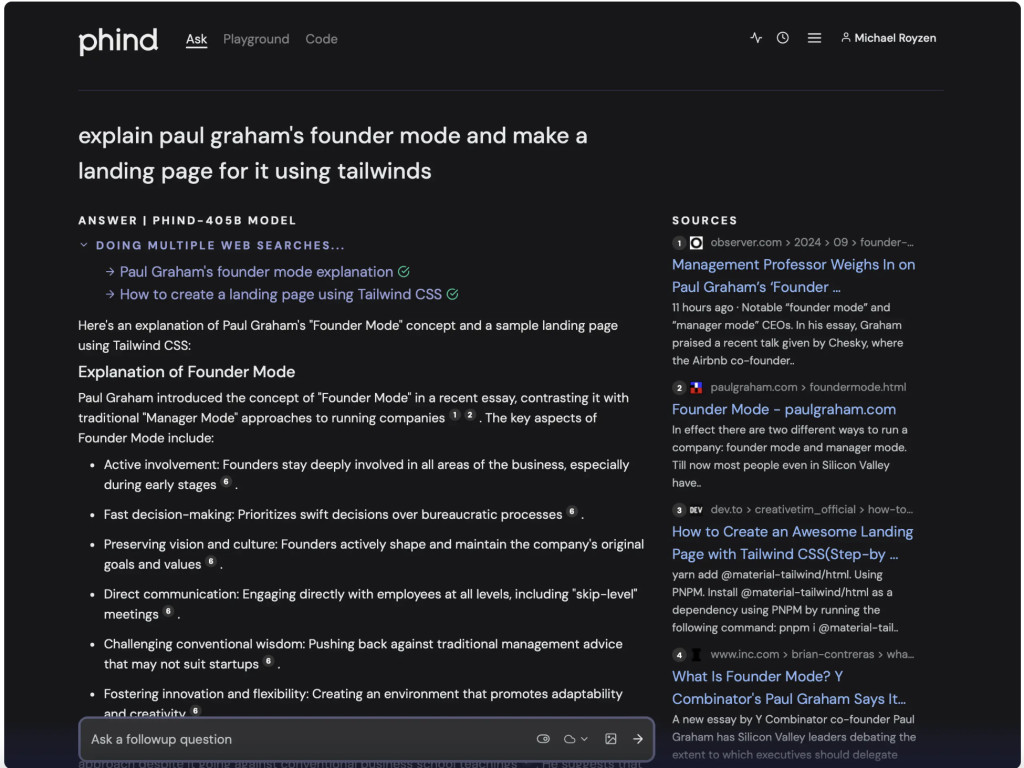

One of the model’s most impressive features is its performance on real-world tasks, particularly web app development. In one example, when asked to create a landing page for “Paul Graham’s Founder Mode,” Phind-405B ran multiple searches and produced a range of design options. Its capabilities go beyond basic programming, combining creativity with efficiency.

Phind-405B also matches Claude 3.5 Sonnet on HumanEval (0-shot), scoring 92%, which places it among the top models for tasks requiring precision and technical expertise. Phind trained the model on 256 H100 GPUs using FP8 mixed precision, which reduces memory usage by 40% without sacrificing quality. This efficiency helps the model run faster and more smoothly while maintaining the standards expected in technical environments.
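HumanEval scores like the 92% above are typically reported as pass@1: the share of the benchmark's 164 programming problems for which generated code passes the unit tests. The sketch below implements the unbiased pass@k estimator from the paper that introduced HumanEval (Chen et al., 2021). The per-problem counts in the example are hypothetical, chosen only to show how a score near 92% arises; they are not Phind's results.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: samples generated, c: samples that pass the unit tests, k: sample budget.
    Computes 1 - C(n - c, k) / C(n, k).
    """
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: list[tuple[int, int]], k: int = 1) -> float:
    """Average pass@k over all problems; each entry is (n, c)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical run: one sample per problem on 164 problems, 151 of which pass.
results = [(1, 1)] * 151 + [(1, 0)] * 13
print(f"pass@1 = {benchmark_score(results):.1%}")  # prints "pass@1 = 92.1%"
```

With one sample per problem, pass@1 reduces to the plain fraction of problems solved; the combinatorial form matters only when several samples are drawn per problem.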

Phind Instant: A Leap in Search Speed

Alongside Phind-405B, Phind has introduced Phind Instant, a model aimed at a common problem with AI-powered search: latency. AI-driven search often delivers higher-quality answers than traditional search engines like Google, but it is usually slower. Phind Instant aims to close this gap by responding much faster while keeping its answers deep and accurate.

Phind Instant is based on Meta Llama 3.1 8B and runs on Phind-customized NVIDIA TensorRT-LLM inference servers at up to 350 tokens per second. These speeds come from FP8 mixed precision, flash decoding, and fused CUDA kernels for the MLP (multilayer perceptron) layers. Together, these optimizations make Phind Instant highly responsive, especially where quick retrieval of accurate information is crucial.
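Flash decoding splits the KV cache along the sequence dimension, computes attention over each split in parallel, and merges the partial results with a log-sum-exp rescaling, which keeps the GPU busy even when only one new token per sequence is being generated. The pure-Python sketch below reproduces only that split-and-merge arithmetic for a single query vector. It is not TensorRT-LLM's kernel or Phind's implementation, and all function names are hypothetical.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend_chunk(q, keys, values, scale):
    """Attention over one contiguous split of the KV cache.

    Returns the split's max score, the sum of exp(score - max), and the
    weighted sum of values (not yet divided by the softmax denominator).
    """
    scores = [dot(q, k) * scale for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    partial = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return m, sum(weights), partial


def flash_decode(q, keys, values, num_splits=4):
    """Single-query attention computed split by split, then merged."""
    scale = 1.0 / math.sqrt(len(q))
    size = math.ceil(len(keys) / num_splits)
    # Each split is independent; on a GPU they would run in parallel thread blocks.
    partials = [attend_chunk(q, keys[i:i + size], values[i:i + size], scale)
                for i in range(0, len(keys), size)]
    # Merge: rescale every split to a shared maximum (log-sum-exp trick).
    global_max = max(m for m, _, _ in partials)
    denom = sum(part_sum * math.exp(m - global_max) for m, part_sum, _ in partials)
    return [sum(p[i] * math.exp(m - global_max) for m, _, p in partials) / denom
            for i in range(len(values[0]))]


def reference_attention(q, keys, values):
    """Ordinary softmax attention over the whole cache, for comparison."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [dot(q, k) * scale for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total
            for i in range(len(values[0]))]


random.seed(0)
d, seq_len = 8, 37
q = [random.gauss(0, 1) for _ in range(d)]
keys = [[random.gauss(0, 1) for _ in range(d)] for _ in range(seq_len)]
values = [[random.gauss(0, 1) for _ in range(d)] for _ in range(seq_len)]
fast = flash_decode(q, keys, values, num_splits=4)
ref = reference_attention(q, keys, values)
assert all(math.isclose(a, b, rel_tol=1e-9, abs_tol=1e-12) for a, b in zip(fast, ref))
```

The final assertion checks that merging the splits gives the same result as ordinary softmax attention, which is the property that makes the split safe to parallelize.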

Phind Instant’s introduction highlights the company’s commitment to improving the user experience in real-time search scenarios. The model’s architecture and implementation demonstrate Phind’s attention to detail in optimizing speed and quality.

Improvements in Search Efficiency

In conjunction with the release of these models, Phind has also rolled out several improvements to its search infrastructure. Recognizing that every millisecond counts in search, Phind has reduced latency by up to 800 milliseconds per search. This improvement comes from a newly trained model that prefetches web results before the user completes typing. This proactive approach dramatically enhances the search experience, particularly when time-sensitive or complex queries are involved.
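Phind does not describe its prefetching mechanism beyond a trained model that fetches web results before the user finishes typing. The asyncio sketch below shows one way such speculation could be wired up, assuming a predictor that guesses the final query from a prefix and a table of in-flight fetches keyed by the guessed query. `PrefetchingSearch`, `toy_predict`, and `fake_fetch` are hypothetical; the toy predictor stands in for Phind's trained model.

```python
import asyncio
from typing import Awaitable, Callable, Optional


class PrefetchingSearch:
    """Speculatively fetch web results while the user is still typing.

    `predict` guesses the final query from the text typed so far (or returns
    None); `fetch` runs the real search. Both are hypothetical stand-ins.
    """

    def __init__(self, predict: Callable[[str], Optional[str]],
                 fetch: Callable[[str], Awaitable[list[str]]]):
        self.predict = predict
        self.fetch = fetch
        self.inflight: dict[str, asyncio.Task] = {}
        self.fetch_count = 0  # number of real fetches started

    async def _fetch(self, query: str) -> list[str]:
        self.fetch_count += 1
        return await self.fetch(query)

    def on_keystroke(self, prefix: str) -> None:
        guess = self.predict(prefix)
        if guess is not None and guess not in self.inflight:
            # Start fetching now; the task keeps running while the user types.
            self.inflight[guess] = asyncio.create_task(self._fetch(guess))

    async def on_submit(self, query: str) -> list[str]:
        task = self.inflight.get(query)
        if task is not None:
            return await task  # prediction was right: reuse the prefetched results
        return await self._fetch(query)  # prediction missed: pay the full latency


async def fake_fetch(query: str) -> list[str]:
    await asyncio.sleep(0.05)  # simulated network round trip
    return [f"result for {query!r}"]


def toy_predict(prefix: str) -> Optional[str]:
    # Toy predictor: after six characters, guess a single known query.
    known = "python asyncio gather"
    return known if len(prefix) >= 6 and known.startswith(prefix) else None


async def demo() -> tuple[list[str], int]:
    search = PrefetchingSearch(toy_predict, fake_fetch)
    typed = "python asyncio gather"
    for i in range(1, len(typed) + 1):
        search.on_keystroke(typed[:i])
        await asyncio.sleep(0.01)  # simulated pause between keystrokes
    results = await search.on_submit(typed)
    return results, search.fetch_count


results, fetches = asyncio.run(demo())
print(results, fetches)
```

When the prediction is right, the fetch started mid-typing is reused on submit, so the user waits only for whatever part of the round trip has not already elapsed; a wrong guess costs one wasted fetch.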

Further enhancing the search capabilities, Phind has introduced a new, larger embedding model, which is 15 times bigger than its predecessor. Despite the increased model size, latency has been reduced thanks to the implementation of 16-way parallelism during the computation of the embeddings. These technical enhancements ensure that the most relevant information is fed into the model, further improving the relevance and accuracy of search results.
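The announcement does not say what kind of 16-way parallelism is used; it could, for example, mean splitting the embedding model across 16 GPUs. The sketch below assumes the simpler data-parallel reading: a batch of texts is divided into 16 shards, each shard is embedded concurrently, and the results are reassembled in order before ranking by cosine similarity. `toy_embed` is a hypothetical hashed bag-of-words stand-in for a real embedding model, and in CPython the threads illustrate the split-and-reassemble logic rather than delivering a real speedup.

```python
import hashlib
import math
from concurrent.futures import ThreadPoolExecutor

DIM = 64
NUM_SHARDS = 16  # "16-way parallelism"; the data-parallel split is an assumption


def toy_embed(text: str) -> list[float]:
    """Hypothetical embedding: hashed bag of words, L2-normalized."""
    vec = [0.0] * DIM
    for word in text.lower().split():
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def embed_parallel(texts: list[str], shards: int = NUM_SHARDS) -> list[list[float]]:
    """Embed interleaved shards concurrently, then restore the input order."""
    slices = [texts[i::shards] for i in range(shards)]
    with ThreadPoolExecutor(max_workers=shards) as pool:
        embedded = list(pool.map(lambda batch: [toy_embed(t) for t in batch], slices))
    out: list = [None] * len(texts)
    for i, batch in enumerate(embedded):
        out[i::shards] = batch  # shard i holds positions i, i + shards, ...
    return out


def top_k(query: str, docs: list[str], k: int = 3) -> list[str]:
    """Rank documents by cosine similarity to the query (vectors are unit length)."""
    q = toy_embed(query)
    scores = [sum(a * b for a, b in zip(q, v)) for v in embed_parallel(docs)]
    order = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    return [docs[i] for i in order[:k]]


docs = [f"document {i} about search latency" for i in range(20)]
docs.append("fp8 mixed precision training reduces memory")
print(top_k("fp8 training memory", docs, k=2))
```

Interleaved shards keep the work balanced when texts arrive in a meaningful order, and writing each shard back through the same stride guarantees the embeddings line up with the original documents.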

A Broader Vision for the Future

Phind’s latest developments focus on empowering developers and technologists by streamlining their workflows and enabling faster experimentation. By introducing Phind-405B and Phind Instant, the company is positioning itself as a leader in providing tools for complex technical queries. What sets Phind apart is its dedication to solving real-world problems while also enabling users to explore curiosities beyond the technical realm. With robust tools, developers can move from ideation to implementation much faster, paving the way for innovation.

As Phind continues to expand its offerings, the company has expressed gratitude to its key partners, including Meta, NVIDIA, Voltage Park, SF Compute, and AWS. These partnerships underscore the collaborative effort behind Phind’s technical breakthroughs and the broader implications these models have for AI and machine learning communities.

In conclusion, by addressing both speed and quality in AI search and technical problem-solving, the release of Phind-405B and Phind Instant solidifies Phind’s role as a leader in the field. The future looks promising for developers and users who rely on high-performance models for their projects.

The post Phind Presents Phind-405B: Phind’s Flagship AI Model Enhancing Technical Task Efficiency and Lightning-Fast Phind Instant for Superior Search Performance appeared first on MarkTechPost.

