Explainable AI (XAI) has emerged as a critical field focused on making the decisions of machine learning models interpretable. Self-explaining models, built on techniques such as backpropagation-based attribution, model distillation, and prototype-based reasoning, aim to expose how a model reaches its decisions. However, most existing studies treat explanations as a one-way communication channel for inspecting a model and overlook their potential to actively contribute to the model's learning.

Recent research has begun to integrate explanations into the training loop, as in explanatory interactive learning (XIL) and related work on human-machine interactive learning. Some studies have proposed explain-then-predict models and touched on self-reflection in AI. Even so, the potential of explanations as the basis for reflective processes and model refinement remains underexplored. This paper addresses that gap by integrating explanations into the training process and examining how they can enhance model learning.

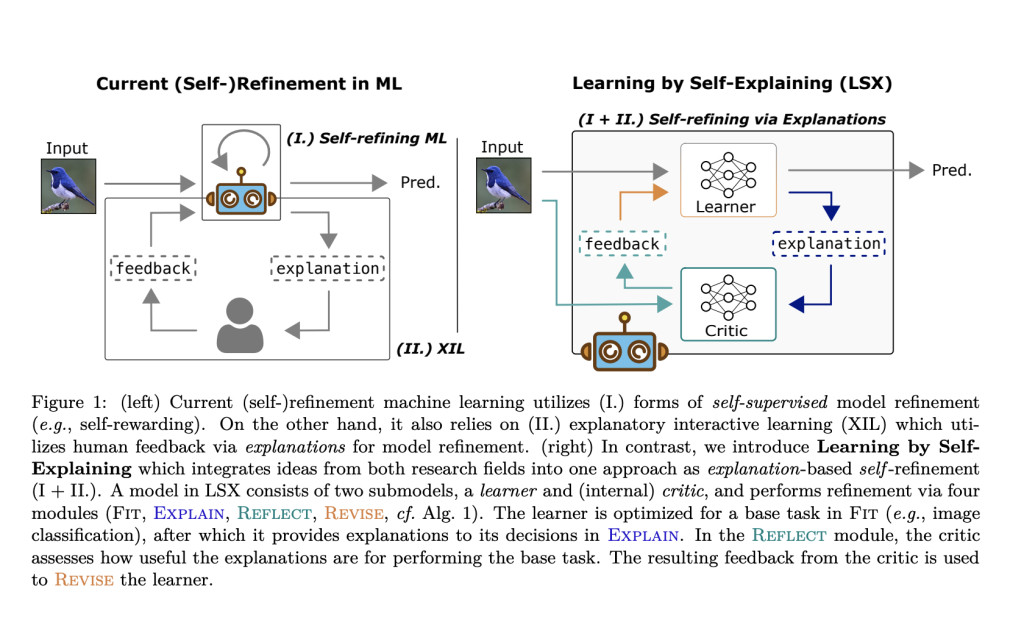

Learning by Self-Explaining (LSX) is a new workflow for improving how AI models learn, evaluated primarily on image classification. It combines ideas from self-refining AI and human-guided explanatory machine learning, but it uses explanations to improve the model without requiring immediate human feedback. In LSX, a learner model is optimized both for its original task and on feedback about its own explanations, which an internal critic model provides. The goal is to obtain more relevant explanations and better generalization. The paper reports extensive experiments across several datasets and metrics to evaluate how well LSX advances explainable AI and self-refining machine learning.
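The idea of optimizing for the task and for the critic's feedback at the same time can be written as a single weighted objective. The notation below is ours and is an assumption, not taken verbatim from the paper. $f_\theta$ is the learner with parameters $\theta$, and $e_\theta(x)$ is its explanation for input $x$. $\mathcal{L}_{\text{task}}$ is the loss on the original task, and $\mathcal{L}_{\text{critic}}$ is the critic's feedback on the explanation, written here as depending on the label $y$ as it would if the critic tried to recover the class from the explanation. $\lambda \ge 0$ is a weighting hyperparameter.

$$
\mathcal{L}(\theta) = \mathcal{L}_{\text{task}}\big(f_\theta(x),\, y\big) + \lambda\, \mathcal{L}_{\text{critic}}\big(e_\theta(x),\, y\big)
$$

Setting $\lambda = 0$ recovers ordinary training on the task alone. Larger values let the critic's assessment of the explanations shape the learner's parameters more strongly.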

LSX has two key components. The learner model performs the primary task and generates explanations, and the internal critic evaluates the quality of those explanations. Training proceeds through an Explain, Reflect, Revise cycle: the learner provides explanations, the critic assesses how useful they are, and the learner is revised based on the critic's feedback. In this methodology, explanations serve not only to help people understand model decisions but also to improve the learning itself.
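The following minimal, dependency-free Python sketch illustrates the cycle. It is not the authors' implementation, and it rests on several assumptions:

- The learner is a toy logistic regression.
- Its explanation is the gradient × input of its logit.
- The critic is a nearest-centroid classifier that tries to recover each label from the explanation alone. It stands in for whatever critic model a real implementation would use.

All function names (`explain`, `critic_loss`, `revise`, `train_lsx`) and the weight `lam` are hypothetical. Gradients come from finite differences only so that the example needs no external libraries.

```python
import math
import random

# Hypothetical toy sketch of an Explain -> Reflect -> Revise loop (not the LSX code).
# Learner: logistic regression with params = [w_1, ..., w_d, b].


def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def explain(w, x):
    # Explain: gradient x input of the linear logit w.x + b (d logit / d x_j = w_j)
    return [wj * xj for wj, xj in zip(w, x)]


def task_loss(w, b, data):
    # Base-task loss: mean binary cross-entropy
    total = 0.0
    for x, y in data:
        p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
        p = min(max(p, 1e-12), 1.0 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(data)


def critic_loss(explanations, labels):
    # Reflect: a nearest-centroid critic (assumed stand-in) tries to recover each label
    # from the explanation alone; returns its cross-entropy (lower = more informative)
    centroids = {}
    for c in set(labels):
        members = [e for e, y in zip(explanations, labels) if y == c]
        centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    total = 0.0
    for e, y in zip(explanations, labels):
        logits = {c: -sum((a - m) ** 2 for a, m in zip(e, cen)) for c, cen in centroids.items()}
        top = max(logits.values())
        log_norm = top + math.log(sum(math.exp(v - top) for v in logits.values()))
        total -= logits[y] - log_norm
    return total / len(labels)


def lsx_objective(params, data, lam):
    # Task loss plus lam-weighted critic feedback on the learner's own explanations
    w, b = params[:-1], params[-1]
    explanations = [explain(w, x) for x, _ in data]
    return task_loss(w, b, data) + lam * critic_loss(explanations, [y for _, y in data])


def revise(params, data, lam, lr=0.5, eps=1e-5):
    # Revise: one gradient-descent step, using central finite differences
    grads = []
    for i in range(len(params)):
        up, down = params[:], params[:]
        up[i] += eps
        down[i] -= eps
        grads.append((lsx_objective(up, data, lam) - lsx_objective(down, data, lam)) / (2 * eps))
    return [p - lr * g for p, g in zip(params, grads)]


def train_lsx(data, lam=0.5, iterations=200, seed=0):
    # Each objective evaluation explains and reflects; each step revises the learner
    rng = random.Random(seed)
    params = [rng.uniform(-0.1, 0.1) for _ in range(len(data[0][0]) + 1)]
    for _ in range(iterations):
        params = revise(params, data, lam)
    return params


if __name__ == "__main__":
    toy = [((2.0, 0.5), 1), ((1.5, -0.5), 1), ((2.5, 0.0), 1),
           ((-2.0, 0.3), 0), ((-1.5, -0.2), 0), ((-2.5, 0.1), 0)]
    print("learned [w1, w2, b]:", train_lsx(toy))
```

The critic's loss depends on the learner's weights through the explanations. As a result, the Revise step pushes the learner toward explanations that separate the classes, in addition to fitting the labels. A realistic setup would use automatic differentiation and a trained critic network rather than finite differences and class centroids.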

The LSX framework aims to improve several aspects of model performance: generalization, knowledge consolidation, and explanation faithfulness. By incorporating self-explanations into learning, LSX also helps mitigate the influence of confounding factors and makes explanations more relevant. This reflective approach lets models learn from both the data and their own explanations. Through the interaction between the learner and the internal critic, LSX points toward AI systems that are more interpretable and more reflective.

The experimental evaluations show that LSX improves model generalization. Held-out test accuracy on MNIST, ChestMNIST, and CUB-10 shows LSX performing competitively with, or better than, conventional training. The study also assesses explanation faithfulness with comprehensiveness and sufficiency metrics and finds that LSX produces relevant and faithful explanations. A strong correlation between model accuracy and explanation distinctiveness further supports the approach.
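The sketch below shows a common formulation of the two faithfulness metrics. This formulation is an assumption and is not taken from the paper:

- **Comprehensiveness** is the drop in the model's confidence for the target class when the top-k features flagged by an explanation are removed.
- **Sufficiency** is the drop in confidence when only those features are kept.

The names `comprehensiveness`, `sufficiency`, `top_k_indices`, and `keep_only` are illustrative, and so is the choice to model "removing" a feature by setting it to 0.0. The paper's exact protocol for images may differ.

```python
import math
from typing import Callable, List, Sequence, Set

# Hypothetical helpers (not the LSX paper's evaluation code). Removing a feature is
# modeled as replacing it with a baseline value of 0.0, which is an assumption.

ProbFn = Callable[[Sequence[float]], Sequence[float]]


def top_k_indices(importance: Sequence[float], k: int) -> Set[int]:
    # Indices of the k features the explanation ranks as most important
    order = sorted(range(len(importance)), key=lambda i: importance[i], reverse=True)
    return set(order[:k])


def keep_only(x: Sequence[float], keep: Set[int], baseline: float = 0.0) -> List[float]:
    # Replace every feature not in `keep` with the baseline value
    return [xi if i in keep else baseline for i, xi in enumerate(x)]


def comprehensiveness(prob_fn: ProbFn, x: Sequence[float], importance: Sequence[float],
                      label: int, k: int) -> float:
    # Confidence drop when the top-k features are removed (higher = more faithful)
    top = top_k_indices(importance, k)
    rest = set(range(len(x))) - top
    return prob_fn(x)[label] - prob_fn(keep_only(x, rest))[label]


def sufficiency(prob_fn: ProbFn, x: Sequence[float], importance: Sequence[float],
                label: int, k: int) -> float:
    # Confidence drop when only the top-k features are kept (lower = more faithful)
    top = top_k_indices(importance, k)
    return prob_fn(x)[label] - prob_fn(keep_only(x, top))[label]


if __name__ == "__main__":
    # Toy 2-class model that only looks at feature 0
    def toy_model(x):
        p1 = 1.0 / (1.0 + math.exp(-3.0 * x[0]))
        return [1.0 - p1, p1]

    x = [1.0, 1.0, 1.0]
    importance = [0.9, 0.05, 0.05]  # explanation highlights the feature the model uses
    print("comprehensiveness:", comprehensiveness(toy_model, x, importance, label=1, k=1))
    print("sufficiency:", sufficiency(toy_model, x, importance, label=1, k=1))
```

With the toy model, an explanation that highlights feature 0 scores a comprehensiveness of about 0.45 and a sufficiency of 0.0. An explanation that highlights the irrelevant feature 1 instead flips these values to 0.0 and about 0.45, respectively.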

In LSX's self-refinement process, the model evaluates its learned knowledge through its own explanations and can then revise its predictions based on the critic's internal feedback. This iterative refinement is central to the methodology. Overall, the results indicate that LSX offers several benefits: improved generalization, more faithful explanations, and mitigation of shortcut learning. The study concludes that self-explanations play an important role in enhancing AI models' reflective capabilities and overall performance, which positions LSX as a promising approach in explainable AI.

In conclusion, LSX introduces a new approach to AI learning that emphasizes the role of explanations in model self-refinement. Experiments demonstrate its advantages for generalization, knowledge consolidation, and explanation faithfulness, as well as for mitigating shortcut learning. Future research directions include:

- applying LSX to other modalities and tasks,
- integrating memory buffers for refining explanations,
- incorporating background knowledge,
- exploring connections with causal explanations, and
- developing inherently interpretable models.

Together, these findings suggest that LSX could meaningfully advance how AI models learn, and they open promising avenues for further work in explainable and interpretable AI.

All credit for this research goes to the researchers of this project.


The post Learning by Self-Explaining (LSX): A Novel Approach to Enhancing AI Generalization and Faithful Model Explanations through Self-Refinement appeared first on MarkTechPost.
