Large language models (LLMs) have demonstrated remarkable reasoning capabilities across various domains. But do they also possess metacognitive knowledge, that is, an understanding of their own thinking processes? This question is explored in a new paper that investigates the metacognitive capabilities of LLMs, specifically in the context of mathematical problem-solving. A team of researchers from Mila, University of Montreal, Princeton University, The University of Cambridge, and Google DeepMind developed an approach to extract and leverage LLMs’ implicit knowledge about mathematical skills and concepts, with promising results for enhancing mathematical reasoning.

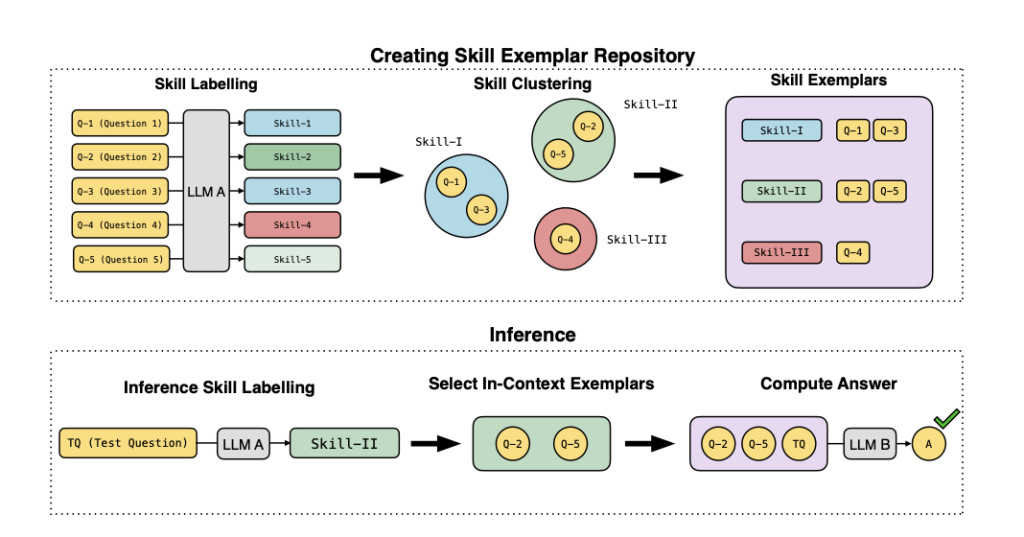

Current methods for improving LLM performance on mathematical tasks often rely on generic prompting techniques like chain-of-thought reasoning. While effective, these approaches don’t take advantage of any metacognitive knowledge the models may hold. The researchers propose a method to tap into LLMs’ latent understanding of mathematical skills. Their approach uses a powerful LLM such as GPT-4 to assign fine-grained skill labels to mathematical questions, followed by semantic clustering to group those labels into broader skill categories. The result is a “Skill Exemplar Repository”: a curated set of questions tagged with interpretable skill labels.
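To make the repository idea concrete, here is a minimal sketch of how such a structure could be assembled. It assumes the fine-grained labels and the fine-to-coarse cluster mapping have already been produced by an LLM. The names `Exemplar`, `build_repository`, and `FINE_TO_COARSE`, along with the sample questions and labels, are invented for illustration and are not taken from the paper.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Exemplar:
    question: str
    answer: str
    fine_skill: str  # fine-grained label, assumed to come from an LLM


def build_repository(exemplars, fine_to_coarse):
    """Group exemplars under the coarse skill their fine-grained label maps to."""
    repository = defaultdict(list)
    for ex in exemplars:
        # Fall back to the fine-grained label when no cluster is known.
        coarse = fine_to_coarse.get(ex.fine_skill, ex.fine_skill)
        repository[coarse].append(ex)
    return dict(repository)


# Illustrative data only: questions, labels, and clusters are hypothetical.
EXEMPLARS = [
    Exemplar("What is 15% of 80?", "12", "percentage_of_quantity"),
    Exemplar(
        "A price rises from 50 to 60. What is the percent increase?",
        "20%",
        "percent_change",
    ),
    Exemplar("Solve 2x + 3 = 11.", "x = 4", "solving_linear_equations"),
]

# Hypothetical output of the clustering step: fine label -> broader skill.
FINE_TO_COARSE = {
    "percentage_of_quantity": "percentages",
    "percent_change": "percentages",
    "solving_linear_equations": "linear_equations",
}

REPOSITORY = build_repository(EXEMPLARS, FINE_TO_COARSE)
```

In this sketch the two percentage questions end up under a single `percentages` skill, which mirrors the move from fine-grained labels to broader, interpretable categories.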

The key innovation is using this repository during inference on new math problems. When presented with a question, the LLM is first asked to identify the most relevant skill from the repository. It is then given exemplar questions and answers associated with that skill as in-context examples before attempting the solution. This skill-based prompting approach was evaluated on challenging datasets like GSM8K and MATH, covering various mathematical difficulties. On the MATH dataset, it achieved an impressive 11.6% improvement over standard chain-of-thought prompting. The method also boosted performance when integrated with program-aided language models (PALs) that generate code-based solutions.
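The two-step inference flow described above might look roughly like the following sketch. It assumes the model is available as a plain `llm(prompt) -> str` callable. The prompt wording, the function names, the `k` parameter, and the sample repository are all assumptions made for illustration, not the prompts or code used by the researchers.

```python
from typing import Callable, Dict, List, Tuple

# Skill name -> list of (question, worked answer). Contents are illustrative.
Repository = Dict[str, List[Tuple[str, str]]]

REPO: Repository = {
    "percentages": [("What is 15% of 80?", "15% of 80 is 0.15 * 80 = 12.")],
    "linear_equations": [("Solve 2x + 3 = 11.", "2x = 8, so x = 4.")],
}


def skill_prompt(question: str, skills: List[str]) -> str:
    """Step 1: ask the model to name the single most relevant skill."""
    options = "\n".join(f"- {s}" for s in skills)
    return (
        "Pick the one skill from the list that is most relevant to the question.\n"
        f"Skills:\n{options}\n"
        f"Question: {question}\n"
        "Skill:"
    )


def solve_prompt(question: str, exemplars: List[Tuple[str, str]]) -> str:
    """Step 2: prepend skill-matched exemplars as in-context examples."""
    parts = [f"Question: {q}\nAnswer: {a}" for q, a in exemplars]
    parts.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(parts)


def solve(question: str, repo: Repository, llm: Callable[[str], str], k: int = 2) -> str:
    """Identify a skill, then solve with up to k exemplars for that skill."""
    reply = llm(skill_prompt(question, sorted(repo))).strip()
    # If the reply is not a known skill, fall back to a prompt with no exemplars.
    exemplars = repo.get(reply, [])[:k]
    return llm(solve_prompt(question, exemplars))
```

Passing the model in as a callable keeps the sketch independent of any particular API, and the same `solve` function can be exercised with a stub that returns canned replies.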

Importantly, the researchers demonstrated that the skill knowledge extracted by a powerful model like GPT-4 transfers effectively to enhance the performance of weaker LLMs. The approach also showed strong generalization, improving results when applied to several other math word problem datasets beyond those used for creating the skill repository. This study offers compelling evidence that LLMs possess meaningful metacognitive knowledge about mathematical problem-solving. By developing techniques to extract and operationalize this knowledge, the researchers have opened up exciting new avenues for enhancing LLMs’ mathematical reasoning capabilities.

The skill-based approach provides several key advantages: it allows for more targeted and relevant in-context examples, can be seamlessly integrated with existing prompting methods, and demonstrates strong transferability across models and datasets. While there is room for improvement, particularly in handling problems requiring multiple skills, this work represents a significant step towards more sophisticated mathematical reasoning in AI systems. Beyond mathematics, the methodology presented could be adapted to uncover and leverage metacognitive knowledge in other domains. As such, this research advances our understanding of LLMs’ cognitive processes and points towards promising new directions for improving their overall capabilities through metacognitive bootstrapping.

The post From Computation to Comprehension: Metacognitive Insights in LLM-based Mathematical Problem Solving appeared first on MarkTechPost.
