Large language models (LLMs) have progressed significantly in domains such as natural language understanding and code generation. These models can generate coherent text and solve complex tasks. However, LLMs still face challenges in specialized areas such as competitive programming. Research in this area focuses on improving the models’ ability to generate diverse, accurate solutions to coding problems while using computational power more effectively during inference. This is crucial for applications where LLMs must provide multiple high-quality solutions to a single problem, such as in competitive coding environments.

One of the core issues in LLM-driven code generation is a lack of diversity in the generated solutions. When asked to produce multiple candidate answers to a coding problem, these models often produce highly similar outputs, even when those outputs are incorrect. This redundancy limits the models’ capacity to explore alternative approaches and hurts their overall performance. The issue is attributed primarily to post-training objectives, which often push models toward producing one “correct” answer and reduce their ability to generate varied, creative solutions. As a result, search at inference time becomes less efficient, and the models struggle to reach higher accuracy on complex benchmarks.

Existing methods in code generation, such as repeated sampling, attempt to address this problem by generating many solutions and hoping that at least one is correct. In this approach, the LLM samples outputs repeatedly, so the chance that some sample passes rises as the number of samples grows. However, this method has shown limitations. For example, on the competitive coding benchmark LiveCodeBench, repeated sampling achieves a pass@200 score of only 60.6%. This means that for 60.6% of the problems, at least one of 200 sampled solutions is correct. Such results indicate that current methods do not fully use the model’s potential and computational resources.
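
The pass@k metric can be made concrete with a short sketch. The code below assumes the commonly used unbiased estimator, 1 - C(n-c, k)/C(n, k), where n samples are drawn for a problem and c of them pass. The article does not say how the reported scores were computed, and the function names here are illustrative.

```python
from math import comb
from typing import Iterable, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate the chance that at least one of k samples is correct,
    given that c of n generated samples passed the tests."""
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # Probability that a random size-k subset contains no correct sample.
    all_wrong = comb(n - c, k) / comb(n, k)
    return 1.0 - all_wrong


def benchmark_pass_at_k(results: Iterable[Tuple[int, int]], k: int) -> float:
    """Average the per-problem estimate over (n, c) pairs, one per problem."""
    scores = [pass_at_k(n, c, k) for n, c in results]
    return sum(scores) / len(scores)
```

For instance, a benchmark with two problems, where one has 1 passing sample out of 2 and the other has none, gives `benchmark_pass_at_k([(2, 1), (2, 0)], k=1)` equal to 0.25. A benchmark-level pass@200 is therefore an average over problems, not a success rate over 200 attempts at one problem.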

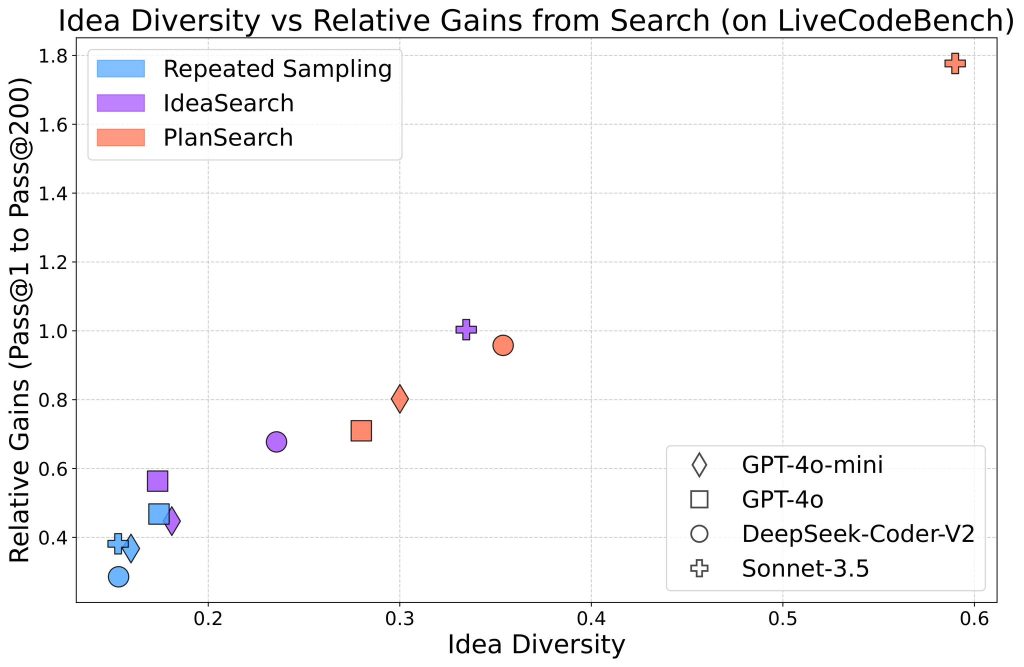

Researchers from Scale AI, California Institute of Technology, Northeastern University, and Cursor AI introduced a new method called PlanSearch. This approach focuses on increasing the diversity of solutions by searching in the natural language “idea space” before the model generates code. Instead of directly generating code solutions, PlanSearch first creates a variety of high-level observations and sketches about the problem, which are combined into different plans for solving the task. This method allows the LLM to explore a broader range of possibilities and generate more diverse solutions. By framing the problem in natural language, PlanSearch enables the model to think through various strategies before committing to a final solution, increasing the chance of success.

PlanSearch operates in stages, beginning with the generation of first-order observations about the problem, such as identifying potential algorithms or data structures that might be useful. For example, in a coding problem, the model might observe that a hash map or greedy search could be applied. These observations are then combined into second-order observations, creating more refined strategies for solving the problem. After generating both levels of observations, PlanSearch translates these ideas into pseudocode and eventually into executable code. To further increase diversity, the method prompts the model to regenerate its strategy and critique previous solutions, thus producing a wider range of possibilities. This multi-stage process allows the model to explore more diverse potential solutions than traditional methods.
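
The stages described above can be sketched roughly as follows. This is not the authors' implementation: the `LLM` callable, the prompt wording, the choice to combine observations in pairs, and the function names are all assumptions made for illustration.

```python
from itertools import combinations
from typing import Callable, List

# Hypothetical interface: any function that maps a prompt to a completion.
LLM = Callable[[str], str]


def idea_to_code(llm: LLM, problem: str, idea: str) -> str:
    """Turn one natural-language idea into pseudocode, then into code."""
    pseudocode = llm(f"Problem:\n{problem}\nIdea:\n{idea}\nWrite pseudocode.")
    return llm(f"Problem:\n{problem}\nPseudocode:\n{pseudocode}\nWrite code.")


def plan_search(llm: LLM, problem: str, n_observations: int = 3) -> List[str]:
    """Return candidate programs produced by searching over ideas first."""
    # Stage 1: first-order observations (useful algorithms, data structures).
    first = [
        llm(f"Problem:\n{problem}\nState observation #{i + 1} about it.")
        for i in range(n_observations)
    ]
    # Stage 2: second-order observations (assumed here: one per pair).
    second = [
        llm(f"Problem:\n{problem}\nObservations:\n{a}\n{b}\nDerive a new one.")
        for a, b in combinations(first, 2)
    ]
    candidates: List[str] = []
    for idea in first + second:
        # Stage 3: idea -> pseudocode -> code.
        candidates.append(idea_to_code(llm, problem, idea))
        # Stage 4: critique the idea and regenerate a different strategy.
        revised = llm(
            f"Problem:\n{problem}\nIdea:\n{idea}\n"
            "Critique this idea and propose a different strategy."
        )
        candidates.append(idea_to_code(llm, problem, revised))
    return candidates
```

In practice, `llm` would wrap a real model client sampled with some randomness, and each returned candidate would be run against the problem's tests. The point of the structure is that diversity is introduced at the idea level, before any code is written.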

When tested on LiveCodeBench, a benchmark designed for competitive programming tasks, PlanSearch achieved a pass@200 score of 77%, a substantial improvement over the 60.6% achieved by repeated sampling and the baseline pass@1 score of 41.4% without any search method. On other benchmarks such as HumanEval+ and MBPP+, PlanSearch also outperformed traditional methods, achieving pass@200 scores of 98.5% and 93.7%, respectively. These results demonstrate the approach’s effectiveness across multiple coding benchmarks, making it a powerful tool for improving LLM performance in code generation.

Along with improved pass rates, PlanSearch offers gains in efficiency. For example, when combined with Claude 3.5 Sonnet, a state-of-the-art LLM, PlanSearch outperformed models that were not using search methods after just a few attempts. This indicates that PlanSearch not only enhances accuracy but also reduces the computational resources required to achieve high-quality results. This makes PlanSearch a notable step forward, particularly for coding tasks where the ability to generate diverse solutions quickly is critical.

In conclusion, PlanSearch addresses a key limitation in LLM-driven code generation: the lack of diversity in the solutions generated by traditional methods. By shifting the search process to the natural language idea space, the technique encourages the exploration of a broader range of strategies, leading to more accurate and diverse results. This approach has significantly improved results across multiple benchmarks, with pass@200 scores reaching 77% on LiveCodeBench and over 90% on other coding tasks. By enhancing diversity and efficiency, PlanSearch represents a major advancement in LLM code generation, offering a promising way to generate accurate, diverse outputs in complex coding environments.

All credit for this research goes to the researchers of this project.

The post Scale AI Proposes PlanSearch: A New SOTA Test-Time Compute Method to Enhance Diversity and Efficiency in Large Language Model Code Generation appeared first on MarkTechPost.
