Graph Neural Networks (GNNs) have emerged as the leading approach to graph learning across domains such as recommender systems, social networks, and bioinformatics. However, GNNs are vulnerable to adversarial attacks, particularly structural attacks that modify graph edges. These attacks pose significant challenges in scenarios where attackers have only limited access to entity relationships. Although numerous robust GNN models have been developed to defend against such attacks, existing approaches scale poorly. Two factors are responsible: high computational complexity from elaborate defense mechanisms, and hyper-parameter complexity, which demands extensive background knowledge to tune and complicates deployment in real-world settings. Consequently, there is a pressing need for a GNN model that is robust to structural attacks while remaining simple and efficient.

Research on structural attacks in graph learning has followed two main lines: developing effective attack methods and building robust GNN models for defense. Attack strategies such as Mettack and BinarizedAttack use gradient-based optimization to degrade model performance. Defenses include purifying modified structures and designing adaptive aggregation strategies, as in GNNGuard. However, these robust GNNs often incur high computational overhead and hyper-parameter complexity. More recent efforts such as NoisyGCN and EvenNet simplify the defense mechanism for efficiency, but they still introduce additional hyper-parameters that require careful tuning. These approaches have substantially reduced time complexity, yet building a GNN that is both simple and robust remains an open challenge.

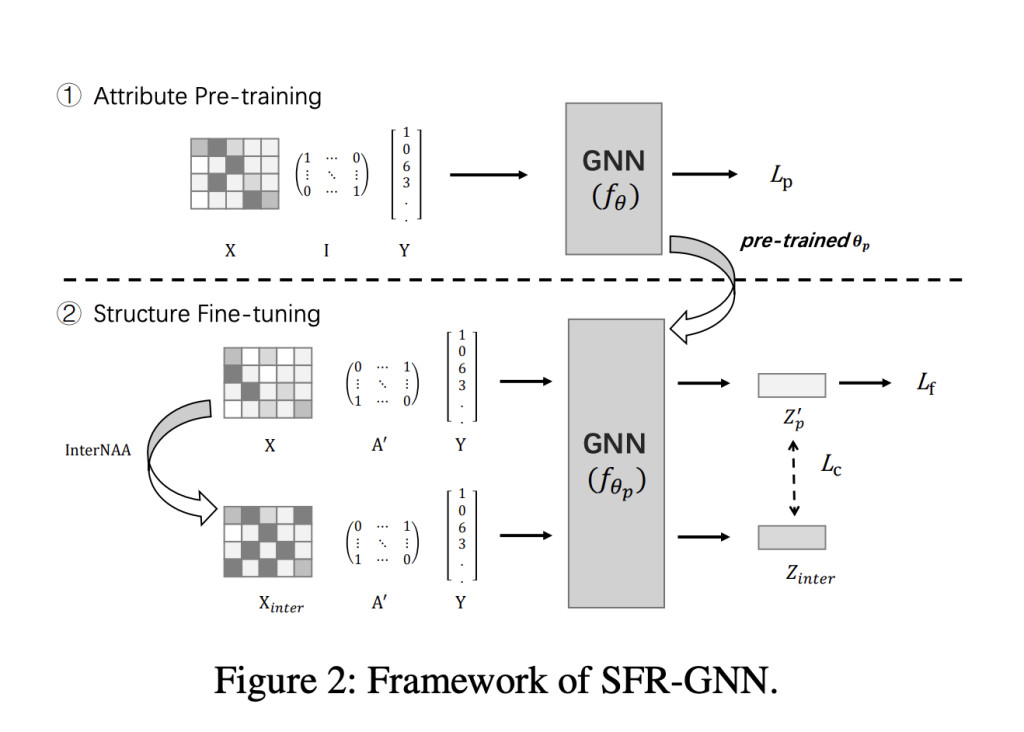

Researchers from The Hong Kong Polytechnic University, The Chinese University of Hong Kong, and Shanghai Jiao Tong University introduce SFR-GNN (Simple and Fast Robust Graph Neural Network), a two-step approach to countering structural attacks in graph learning. The method first pre-trains on node attributes and then fine-tunes on structural information, which disrupts the “paired effect” of attacks. This simple strategy achieves robustness without additional hyper-parameters or complex mechanisms, which significantly reduces computational complexity. As a result, SFR-GNN is nearly as efficient as vanilla GCN while being simpler and easier to implement than existing robust models. Because manipulated structures are paired with pre-trained embeddings rather than with the original attributes they were crafted against, SFR-GNN effectively mitigates the impact of structural attacks on model performance.
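
To make the “pairing” idea concrete, here is a minimal pure-Python sketch of one GCN propagation step using the standard symmetric normalization D^-1/2 (A + I) D^-1/2. It contrasts a vanilla GCN, which pairs a possibly perturbed adjacency matrix with the raw attributes X, against an SFR-GNN-style step that pairs the same adjacency with embeddings from an attribute-only encoder. The names `gcn_normalize`, `propagate`, and `attribute_encoder` and the toy weights are hypothetical; the encoder is a single ReLU linear layer standing in for whatever pre-trained attribute model the paper actually uses, and all training is omitted.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain dense matrix product a @ b."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def gcn_normalize(adj: Matrix) -> Matrix:
    """Symmetric GCN normalization D^-1/2 (A + I) D^-1/2 (self-loops avoid zero degrees)."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def propagate(adj: Matrix, features: Matrix) -> Matrix:
    """One neighborhood-aggregation step: normalized adjacency times node features."""
    return matmul(gcn_normalize(adj), features)


def attribute_encoder(x: Matrix, weights: Matrix) -> Matrix:
    """Hypothetical stand-in for the stage-1 model: ReLU(X W), no structure used."""
    return [[max(0.0, v) for v in row] for row in matmul(x, weights)]


if __name__ == "__main__":
    adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]  # toy, possibly perturbed, graph
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # raw node attributes
    w = [[0.5, -0.2], [0.1, 0.3]]  # toy weights standing in for pre-trained ones
    h = attribute_encoder(x, w)
    print(propagate(adj, x))  # vanilla GCN: structure paired with raw attributes
    print(propagate(adj, h))  # SFR-GNN-style: structure paired with pre-trained embeddings
```

The only difference between the two calls is the feature matrix handed to the aggregation step, which is the design point the article highlights: the structure is still used, but it no longer meets the exact inputs an attacker optimized against.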

SFR-GNN's two stages are attribute pre-training and structure fine-tuning. The pre-training stage learns node embeddings from attributes alone, excluding structural information, so the resulting embeddings are not contaminated by structural perturbations. The fine-tuning stage then incorporates structural information while mitigating attack effects through a dedicated contrastive learning scheme. The model also employs Inter-class Node Attribute Augmentation (InterNAA) to generate alternative node features, further reducing the impact of contaminated structural information. By learning from less harmful mutual information, SFR-GNN achieves robustness without complex purification mechanisms. Its computational complexity is comparable to that of vanilla GCN and significantly lower than that of existing robust GNNs.
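
The article says the fine-tuning stage relies on contrastive learning but does not spell out the objective. A common choice in graph contrastive learning is the InfoNCE loss, which treats the same node's embeddings in two views as a positive pair and every other node as a negative. The sketch below assumes that form; which two views SFR-GNN actually contrasts (for example, pre-trained attribute embeddings versus structure-aware embeddings, or InterNAA-augmented features) is an assumption here, and `cosine`, `info_nce`, and the temperature `tau=0.5` are illustrative choices, not the paper's loss.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(z1: List[Vector], z2: List[Vector], tau: float = 0.5) -> float:
    """Generic InfoNCE: node i in view 1 should match node i in view 2.

    All other nodes j != i in view 2 act as negatives for node i.
    """
    n = len(z1)
    total = 0.0
    for i in range(n):
        logits = [cosine(z1[i], z2[j]) / tau for j in range(n)]
        # Log-sum-exp with max subtraction for numerical stability.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(s - m) for s in logits))
        total += log_denom - logits[i]  # -log softmax probability of the positive pair
    return total / n


if __name__ == "__main__":
    view_a = [[1.0, 0.0], [0.0, 1.0]]
    aligned = [[1.0, 0.0], [0.0, 1.0]]
    swapped = [[0.0, 1.0], [1.0, 0.0]]
    print(info_nce(view_a, aligned))  # lower loss: positive pairs already agree
    print(info_nce(view_a, swapped))  # higher loss: positive pairs disagree
```

Minimizing such a loss pulls each node's two representations together while pushing apart different nodes, which is one standard way to maximize mutual information between views.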

SFR-GNN performs strongly against structural attacks on graph neural networks. In experiments on the widely used Cora, CiteSeer, and PubMed benchmarks and on the large-scale ogbn-arxiv and ogbn-products datasets, it consistently ranks first or second across perturbation ratios. For example, on Cora under Mettack with a 10% perturbation ratio, SFR-GNN reaches 82.1% accuracy, compared with 69% to 81% for the baselines. It also trains considerably faster, with more than a 100% speedup on Cora and CiteSeer over the fastest existing methods. On large-scale graphs, SFR-GNN scales well and even runs faster than GCN while maintaining competitive accuracy.
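
For readers unfamiliar with the setup, a perturbation ratio is commonly interpreted in Mettack experiments as the attacker's budget of edge flips, expressed as a fraction of the clean graph's undirected edges. The sketch below assumes that interpretation (with truncation to an integer) and also works through the Cora margins quoted above. The function names and the 5,000-edge graph are hypothetical and chosen only for illustration; the accuracy figures are the ones reported in the article.

```python
from typing import Tuple


def perturbation_budget(num_edges: int, ratio: float) -> int:
    """Edge flips allowed at a given perturbation ratio.

    Assumes the ratio is a fraction of the clean graph's undirected edges,
    truncated to an integer.
    """
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be in [0, 1]")
    return int(ratio * num_edges)


def margin_over_baselines(ours: float, worst: float, best: float) -> Tuple[float, float]:
    """Accuracy gap in percentage points over the best and the worst baseline."""
    return round(ours - best, 1), round(ours - worst, 1)


if __name__ == "__main__":
    # Hypothetical edge count, for illustration only.
    print(perturbation_budget(num_edges=5000, ratio=0.10))
    # Cora, Mettack, 10% ratio: SFR-GNN 82.1% vs. baselines from 69% to 81%.
    print(margin_over_baselines(ours=82.1, worst=69.0, best=81.0))
```

Under these numbers, SFR-GNN leads the strongest baseline by about 1.1 percentage points and the weakest by about 13.1.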

SFR-GNN is an effective solution for defending graph neural networks against structural attacks. Its “attributes pre-training and structure fine-tuning” strategy removes the need to purify modified structures, which significantly reduces computational overhead and avoids additional hyper-parameters. Theoretical analysis and extensive experiments support the method: its robustness is comparable to state-of-the-art baselines, while its runtime speed improves by 50% to 136%. SFR-GNN also scales well to large datasets, making it well suited to real-world applications that demand both reliability and efficiency in adversarial environments. Overall, SFR-GNN is a promising advance in robust graph neural networks, balancing performance and practicality for graph-based tasks exposed to structural attacks.
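
The article does not state how the runtime improvement is computed. Assuming the usual definition, speedup = t_baseline / t_new - 1 (so a 100% speedup means twice as fast), the reported 50% to 136% range means SFR-GNN needs roughly 67% down to about 42% of a baseline's runtime. The helper names below are hypothetical; the sketch only makes that conversion explicit.

```python
def speedup_percent(t_baseline: float, t_new: float) -> float:
    """Speedup in percent, assuming speedup = t_baseline / t_new - 1."""
    return (t_baseline / t_new - 1.0) * 100.0


def runtime_fraction(speedup_pct: float) -> float:
    """Fraction of the baseline's runtime that the faster method needs."""
    return 1.0 / (1.0 + speedup_pct / 100.0)


if __name__ == "__main__":
    # Convert the reported speedups into fractions of baseline runtime.
    for pct in (50.0, 100.0, 136.0):
        print(f"{pct:.0f}% speedup -> {runtime_fraction(pct):.1%} of baseline runtime")
```

Reading speedups as runtime fractions can make claims like these easier to compare, because a 136% speedup cuts wall-clock time by less than 60%, not by 136%.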


The post SFR-GNN: A Novel Graph Neural Networks (GNN) Model that Employs an ‘Attribute Pre-Training and Structure Fine-Tuning’ Strategy to Achieve Robustness Against Structural Attacks appeared first on MarkTechPost.
