The paper “MemLong: Memory-Augmented Retrieval for Long Text Modeling” addresses a critical limitation of Large Language Models (LLMs): their ability to process long contexts. While LLMs have shown remarkable success in various applications, they struggle with long-sequence tasks because of the quadratic time and space complexity of traditional attention mechanisms. The growing memory demands of text generation exacerbate this challenge. The authors propose a novel solution, MemLong, which integrates an external retrieval mechanism to enhance long-context language modeling. By retrieving historical information, MemLong aims to significantly extend the context length that LLMs can handle, thus broadening their applicability in tasks such as long-document summarization and multi-turn dialogue.
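To make the scaling concrete, here is a rough back-of-the-envelope sketch in Python. It counts the entries of one head's full attention score matrix, which grows quadratically with sequence length, and estimates the size of a key-value cache, which grows linearly. The layer count, head count, head dimension, and 2-byte values are illustrative assumptions, not figures from the paper, and optimized attention kernels may never materialize the full score matrix.

```python
def attention_score_entries(seq_len: int) -> int:
    """Entries in one head's full (seq_len x seq_len) attention score matrix."""
    return seq_len * seq_len


def kv_cache_bytes(seq_len, n_layers=32, n_heads=32, head_dim=128, bytes_per_value=2):
    """Bytes needed to cache keys and values for every layer and head.

    All default sizes are hypothetical, chosen only for illustration.
    """
    # Factor 2 accounts for storing both a key and a value per position.
    return 2 * n_layers * n_heads * head_dim * bytes_per_value * seq_len


for n in (4_000, 80_000):
    gib = kv_cache_bytes(n) / 2**30
    print(f"{n} tokens: {attention_score_entries(n)} score entries, {gib:.2f} GiB KV cache")
```

Under these assumptions, a 20x longer sequence means a 20x larger cache but a 400x larger score matrix.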

Current methods for managing long contexts in LLMs often involve reducing the computational complexity of attention or employing memory selection strategies. Techniques such as sparse attention operations have been developed to alleviate the computational burden but frequently compromise model performance. Other approaches, like token-level memory selection, can lead to the loss of semantic information. Retrieval-Augmented Language Modeling (RALM) has emerged as a promising direction, incorporating retrieval mechanisms to improve long-text processing capabilities. However, existing methods of this kind have drawbacks of their own, including distribution shifts in stored information and the impracticality of retraining large models. In response to these limitations, the authors introduce MemLong, which employs a non-differentiable retrieval-memory module combined with a partially trainable decoder-only language model. This approach uses a fine-grained, controllable retrieval attention mechanism that focuses on semantically relevant chunks of information.

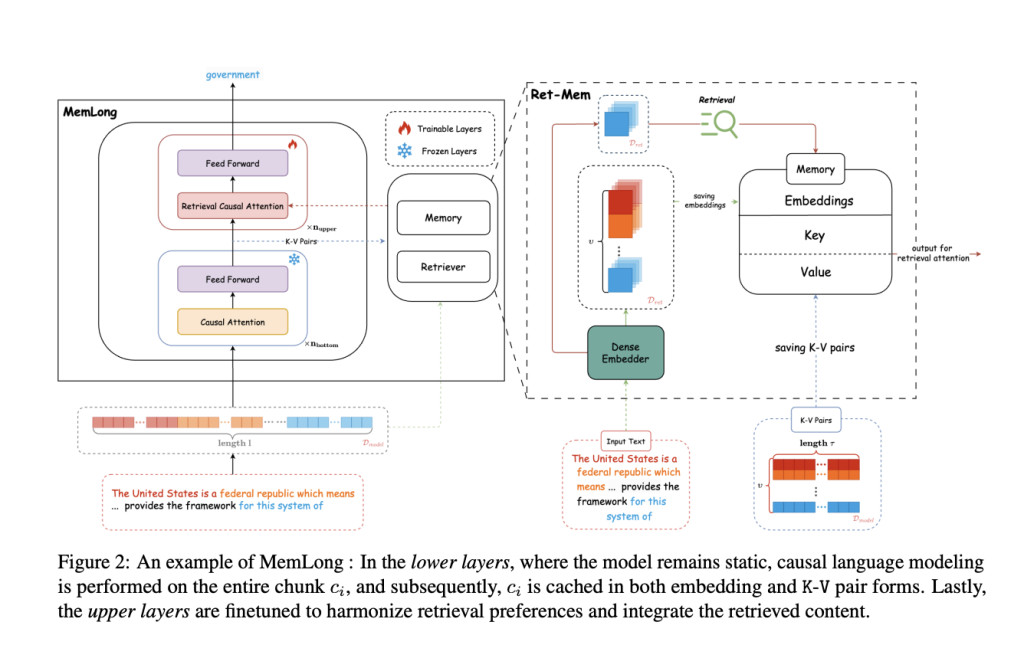

MemLong operates by storing past contexts in a non-trainable memory bank, allowing for efficient retrieval of key-value (K-V) pairs during text generation. The model consists of two main components: a retrieval mechanism and a memory component. During the generation process, MemLong can retrieve relevant historical information based on the current input, thereby augmenting the context available to the model. This retrieval mechanism is designed to maintain distributional consistency, ensuring that the information stored in memory does not drift as the model parameters are updated. Additionally, MemLong is efficient to train, requiring only minor adjustments to the upper layers of the model, which significantly reduces training costs. Notably, MemLong can extend the context length from 4,000 to 80,000 tokens on a single GPU, showcasing its potential for handling extensive text inputs.
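The store-and-retrieve idea can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the `MemoryBank` class, its method names, the use of cosine similarity, and the toy three-dimensional embeddings are all assumptions made for the example. Strings stand in for the cached K-V tensors of each chunk.

```python
import math
from dataclasses import dataclass, field


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class MemoryBank:
    """Hypothetical non-trainable store: one embedding and one K-V payload per chunk."""
    embeddings: list = field(default_factory=list)
    kv_pairs: list = field(default_factory=list)

    def store(self, embedding, kv_pair):
        self.embeddings.append(list(embedding))
        self.kv_pairs.append(kv_pair)

    def retrieve(self, query_embedding, top_k=2):
        # Rank stored chunks by similarity to the query, most similar first.
        ranked = sorted(
            range(len(self.embeddings)),
            key=lambda i: cosine(query_embedding, self.embeddings[i]),
            reverse=True,
        )
        return [self.kv_pairs[i] for i in ranked[:top_k]]


bank = MemoryBank()
bank.store([1.0, 0.0, 0.0], "kv_chunk_0")
bank.store([0.0, 1.0, 0.0], "kv_chunk_1")
bank.store([0.9, 0.1, 0.0], "kv_chunk_2")

top = bank.retrieve([1.0, 0.0, 0.0], top_k=2)
print(top)  # ['kv_chunk_0', 'kv_chunk_2']
```

Because nothing in the bank is trained, the stored payloads stay fixed once written, which is the property the distributional-consistency argument relies on.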

MemLong’s performance has been evaluated across multiple long-context language modeling benchmarks. The reported results show that MemLong consistently outperforms other state-of-the-art LLMs, including OpenLLaMA, particularly in retrieval-augmented in-context learning tasks. MemLong achieves improvements of up to 10.2 percentage points over existing models, a testament to its effectiveness in managing long contexts without sacrificing the model’s original capabilities. The architecture of MemLong allows for a dynamic memory management system that updates the stored information based on retrieval frequency, ensuring that the most relevant data is prioritized while outdated information is discarded. This dynamic approach, combined with a retrieval causal attention mechanism, enables MemLong to integrate both local and historical context, enhancing its overall performance in long-text processing.
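A frequency-based update rule of this kind might look like the following Python sketch. The `FrequencyMemory` class, the fixed capacity, and the exact eviction rule (drop the least-retrieved chunk, breaking ties in favor of evicting the oldest) are assumptions for illustration; the article does not spell out the paper's precise policy.

```python
class FrequencyMemory:
    """Hypothetical bounded memory that evicts the least-retrieved chunk."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = {}  # chunk_id -> payload, kept in insertion order
        self.hits = {}     # chunk_id -> number of times retrieved

    def add(self, chunk_id, payload):
        if chunk_id not in self.entries and len(self.entries) >= self.capacity:
            self._evict()
        self.entries[chunk_id] = payload
        self.hits.setdefault(chunk_id, 0)

    def get(self, chunk_id):
        # Every retrieval raises the chunk's priority for staying in memory.
        self.hits[chunk_id] += 1
        return self.entries[chunk_id]

    def _evict(self):
        # min() returns the first minimal key in insertion order,
        # so among equally rare chunks the oldest one is dropped.
        victim = min(self.entries, key=lambda cid: self.hits[cid])
        del self.entries[victim]
        del self.hits[victim]


memory = FrequencyMemory(capacity=2)
memory.add("chunk_a", "kv_a")
memory.add("chunk_b", "kv_b")
memory.get("chunk_a")          # chunk_a has now been retrieved once
memory.add("chunk_c", "kv_c")  # bank is full, so never-retrieved chunk_b is dropped
print(sorted(memory.entries))  # ['chunk_a', 'chunk_c']
```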

In conclusion, the research presented in “MemLong: Memory-Augmented Retrieval for Long Text Modeling” offers a compelling solution to the challenges faced by LLMs in handling long contexts. By integrating a retrieval mechanism with a memory component, MemLong effectively extends the context length while maintaining computational efficiency and model performance. This innovative approach addresses the limitations of previous methods, providing a robust framework for future developments in long-text modeling and retrieval-augmented applications.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post MemLong: Revolutionizing Long-Context Language Modeling with Memory-Augmented Retrieval appeared first on MarkTechPost.
